Whenever I visit scientists to discuss their research, there comes a moment when they say, with barely concealed pride: ‘Do you want a tour of the lab?’ It is invariably slightly touching, like Willy Wonka dying to show off his chocolate factory. I’m glad to accept, knowing what lies in store: shelves lined with bottles of reagents; gleaming, quartz-windowed cryogenic chambers; slabs of perforated steel holding lasers and lenses.

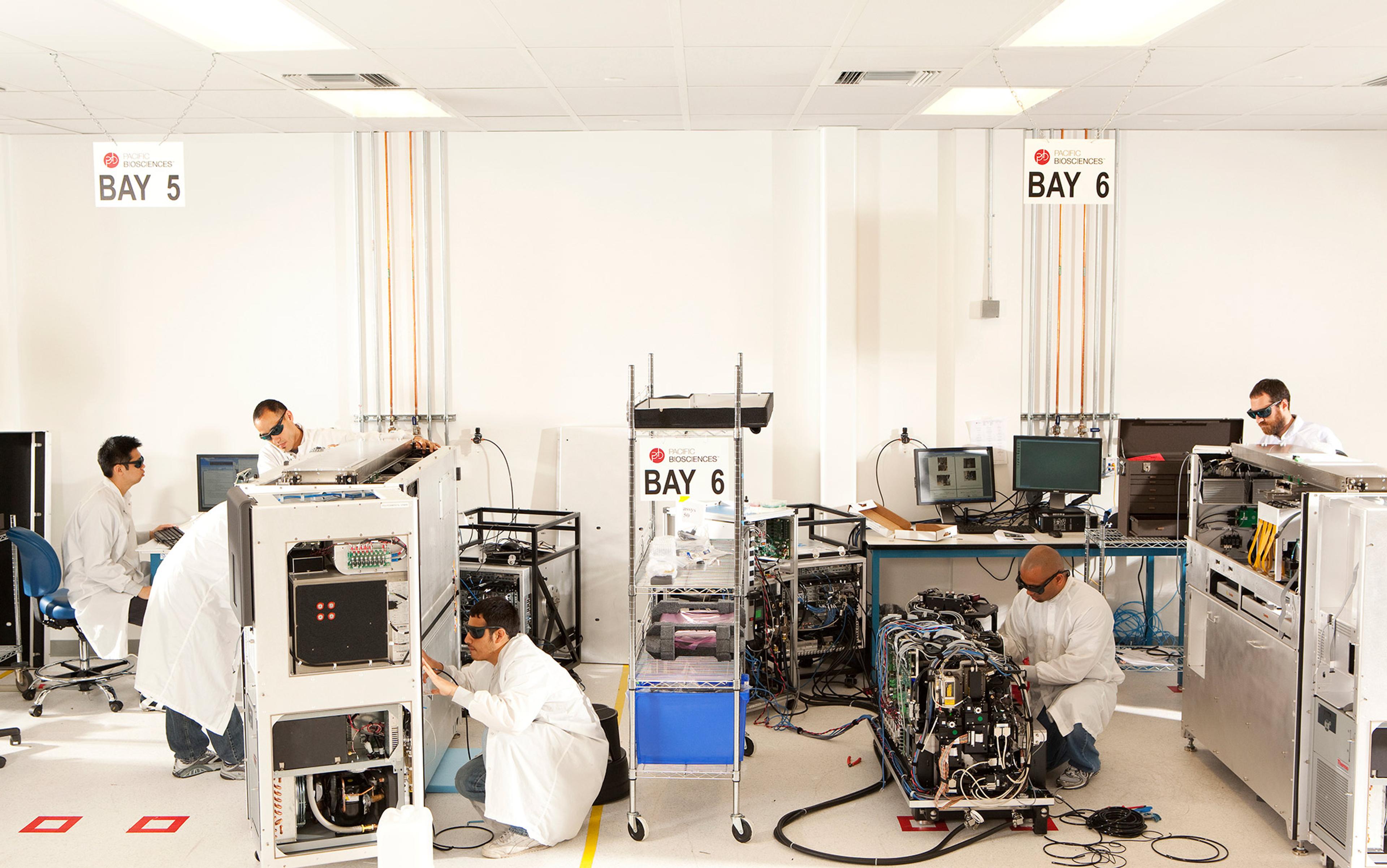

It’s rarely less than impressive. Even if the kit is off-the-shelf, it is wired into a makeshift salmagundi of wires, tubes, cladding, computer-controlled valves and rotors and components with more mysterious functions. Much of the gear, however, is likely to be homemade: custom-built for the research at hand. Whatever else it might accomplish, the typical modern lab set-up is a masterpiece of impromptu engineering — you’d need degrees in electronics and mechanics just to put it all together, never mind making sense of the graphs and numbers it produces. And like the best engineering, these set-ups tend to be kept out of sight. Headlines announcing ‘Scientists have found…’ rarely bother to tell you how the discoveries were made.

Would you care? The tools of science are so specialised that we accept them as a kind of occult machinery for producing knowledge. We figure that the scientists must know how it all works. Likewise, histories of science focus on ideas rather than methods; for the most part, readers just want to know what the discoveries were. Even so, most historians these days recognise that the relationship between scientists and their instruments is an essential part of the story. It isn’t simply that the science is dependent on the devices; the devices actually determine what is known. You explore the things that you have the means to explore, planning your questions accordingly.

When a new instrument comes along, new vistas open up. The telescope and microscope, for example, stimulated discovery by superpowering human perception. Such developments prompt scientists to look at their own machines with fresh eyes. It’s not fanciful to see some of the same anxieties that are found in human relations. Can you be trusted? What are you trying to tell me? You’ve changed my life! Look, isn’t she beautiful? I’m bored with you, you don’t tell me anything new any more. Sorry, I’m swapping you for a newer model… We might even speak of interactions between scientists and their instruments that are healthy or dysfunctional. But how do we tell one from the other?

It seems to me that the most effective (not to mention elegant) scientific instruments serve not only as superpowers for the senses but as prostheses for the mind. They are the physical embodiments of particular thoughts. Take the work of the New Zealand physicist Ernest Rutherford, perhaps the finest experimental scientist of the 20th century. It was at a humble benchtop with cheap, improvised equipment that he discovered the structure of the atom, then proceeded to split it. Rather than being limited by someone else’s view of what one needed to know, Rutherford devised an apparatus to tell him precisely what he wanted to find out. His experiments emerged organically from his ideas: they almost seem like theories constructed out of glass and metal foil.

In one of his finest moments, at Manchester University in 1908, Rutherford and his colleagues figured out that the alpha particles spewed out during radioactive decay were the nuclei of helium atoms. The natural way to test the hypothesis is to collect the particles and see if they behave like helium. Rutherford ordered his glassblower, Otto Baumbach, to make a glass capillary tube with extraordinarily thin walls such that the alpha particles emitted from radium could pass right through. Once the particles had accumulated in an outer chamber, Rutherford connected up the apparatus to become a gas-discharge tube. As electrodes converted the atoms in the gas into charged ions, they would emit light at a wavelength that depended on their chemical identity. Thus he revealed the trapped alpha particles to be helium, disclosed by the signature wavelength of their glow. It was an exceedingly rare example of a piece of apparatus that answers a well-defined question — are alpha particles helium? — with a simple yes/no answer, almost literally by whether a light switches on or not.

A more recent example is the scanning tunnelling microscope, invented by Gerd Binnig and the late Heinrich Rohrer at IBM’s Zurich research lab in 1981. Thanks to a quantum-mechanical effect called tunnelling, the researchers knew that electrons within the surface of an electrically conducting sample should be able to cross a tiny gap to reach another electrode held just above the surface. Because tunnelling is acutely sensitive to the width of the gap, a metal needle moving across the sample at a constant height, just out of contact, could trace out the sample’s topography because of surges in the tunnelling current as the tip passed over bumps. If the movement was fine enough, the map might even show the bulges produced by individual atoms and molecules. And so it did. Between the basic idea and a working device, however, lay an incredible amount of practical expertise (sheer craft) allied to rigorous thought. They were often told the instrument ‘should not work’ in principle. Nevertheless, Rohrer and Binnig got it going, inventing perhaps the central tool of nanotechnology and winning a Nobel Prize in 1986 for their efforts.
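To get a feel for just how sensitive tunnelling is to the gap, here is a rough sketch using the textbook one-dimensional barrier model, in which the current falls off as exp(-2κd) with κ = √(2mφ)/ħ. The barrier height of 4 eV is my assumption (a typical metal work function), and the function names are invented for illustration; this is not the IBM team’s own calculation.

```python
import math

HBAR = 1.054571817e-34             # reduced Planck constant, J s
ELECTRON_MASS = 9.1093837015e-31   # electron mass, kg
EV = 1.602176634e-19               # joules per electronvolt


def decay_constant(barrier_ev):
    """Decay constant kappa (per metre) for a rectangular barrier of this height."""
    return math.sqrt(2.0 * ELECTRON_MASS * barrier_ev * EV) / HBAR


def current_ratio(gap_change_m, barrier_ev=4.0):
    """Factor by which the tunnelling current changes when the gap widens by gap_change_m.

    barrier_ev = 4.0 is an assumed, illustrative barrier height.
    A negative gap_change_m means the tip has moved closer to the surface.
    """
    return math.exp(-2.0 * decay_constant(barrier_ev) * gap_change_m)


if __name__ == "__main__":
    one_angstrom = 1e-10  # roughly the scale of an atomic bump
    factor = current_ratio(-one_angstrom)
    print(f"Closing the gap by 1 angstrom multiplies the current by about {factor:.1f}")
```

Under these assumptions, a bump no taller than a fraction of an atom changes the current severalfold, which is why a needle held steady enough can pick out individual atoms.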

So that’s when it goes right. What about when it doesn’t? Scientific instruments have always been devices of power: those who have the best ones can find out the most. Galileo knew this — he kept up a cordial correspondence with his fellow astronomer Johannes Kepler in Prague, but when Kepler requested the loan of a telescope, the Italian found excuses. Galileo saw that, with one of these devices, Kepler would become a more serious rival. Instruments, he understood, confer authority.

Today, however, they have become symbols of prestige as never before. I have several times been invited to admire the most state-of-the-art device in a laboratory purely for its own sake, as though I were being shown a Lamborghini. Stuart Blume, a historian of medical technology at the University of Amsterdam, has argued that the latest equipment serves as a token of institutional might, a piece of window-dressing to enhance one’s competitive position in the quasi-marketplace of scientific ideas. I recently interviewed several chemists about their use of second-hand equipment, often acquired from the scientific equivalents of eBay. Strangely, they all asked to remain anonymous, as though their thrift would mark them out as second-rate scientists.

One of the dysfunctional consequences of this sort of attitude is that the machine becomes its own justification, its own measure of worth. Results seem ‘important’ not because of what they tell us but because of how they were obtained. Despite its initial myopia, the Hubble Space Telescope is one of the most glorious instruments ever made, a genuinely new window on the universe. Even so, when it first began to send back images of the cosmos in the mid-1990s, Nature was plagued with content-free submissions reporting the first ‘Hubble image’ of this or that astrophysical object. Authors were often affronted to hear that the journal wanted, not the latest pretty picture, but some insight into the process it was depicting.

At least this kind of instrument-worship is relatively harmless in the long run. More problematic is the notion of an instrument as a ‘knowledge machine’, a contraption that will churn out new understanding as long as you keep cranking the handle. Robert Aymar, former director-general of CERN, was flirting with this idea when he called the Large Hadron Collider a ‘discovery machine’. He was harking back (possibly without knowing it) to a tradition begun by Francis Bacon in his Novum Organum (1620). Bacon’s ‘organon’ was a proposed method for analysing facts, a systematic procedure (today we would call it an algorithm) for distilling observations of the world into underlying causes and mechanisms. It was, in effect, a gigantic logic engine, accepting facts at one end and ejecting theorems at the other.

Except, as it turned out, the system was so complex and intricate that Bacon never even finished describing it, let alone put it into practice. Even if he had, it would have been to no avail: it is now generally agreed among philosophers and historians of science that this is not how knowledge comes about. The approach preferred by the early experimental scientists, such as those who formed the Royal Society, of piling up facts in a Baconian manner while indefinitely postponing the framing of hypotheses to explain them, will get you nowhere. (It’s precisely because they couldn’t in fact restrain their impulse to interpret that men such as Isaac Newton and Robert Boyle made any progress.) Unless you begin with some hypothesis, you don’t know which facts you are looking for, and you’re liable to end up with a welter of data, mostly irrelevant and certainly incomprehensible.

This seems obvious, and most scientists would agree. But the Baconian impulse is alive and well elsewhere, driven by the allure of ‘knowledge machines’. The ability to sequence genomes quickly and cheaply will undoubtedly prove valuable for medicine and fundamental genetics, yet these experimental techniques have already far outstripped not only our understanding of how genomes operate but our ability to formulate questions about them. Many gene-sequencing projects seem to depend on the hope that, if you have enough data, understanding will somehow fall out of the bottom of the pile. As the biologist Robert Weinberg of the Massachusetts Institute of Technology has said: ‘the dominant position of hypothesis-driven research is under threat’.

And not just in genomics. The United States and European Union have recently announced two immense projects, costing hundreds of millions of dollars each, to map out the human brain, using the latest imaging techniques to trace every last one of the billions of neural connections. Some neuroscientists are drooling at the thought of all that data. ‘Think about it,’ one told Nature in July 2013. ‘The human brain produces in 30 seconds as much data as the Hubble Space Telescope has produced in its lifetime.’

If, however, we wanted to know how cities function, creating a map of every last brick and kerb would be an odd way to go about it. Quite how these brain projects will turn all their data into understanding remains a mystery. One researcher in the EU-funded project, simply called the Human Brain Project and based in Switzerland, inadvertently revealed the paucity of theory within this information glut: ‘It is a chicken and egg situation. Once we know how the brain works, we’ll know how to look at the data.’ Of course, the Human Brain Project isn’t quite that clueless, but this hardly mitigates the enormity of this flippant statement. Science has never worked by shooting first and asking questions later, and it never will.

The faddish notion that science will soon be a matter of mining Big Data for correlations, driven in part by the belief that data is worth collecting simply because you have the instruments to do so, has been rightly dismissed as ludicrous. It fails on technical grounds alone: data sets of any complexity will always contain spurious correlations between one variable and another. But it also fails to acknowledge that science is driven by ideas, not numbers or measurements — and ideas only arise by people thinking about causative mechanisms and using them to frame good questions. The instruments should then reflect the hypotheses, collecting precisely the data that will test them.
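The technical point about spurious correlations is easy to see for yourself. The sketch below is a toy illustration of my own, not an analysis of any real data set: it fills a table with pure noise, so that no variable has anything to do with any other, and then counts how many pairs nonetheless look strongly correlated. The sample sizes, the threshold of 0.5 and the function names are all arbitrary assumptions.

```python
import itertools
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def count_spurious_pairs(n_variables=60, n_samples=20, threshold=0.5, seed=0):
    """Count pairs of independent noise variables whose |r| exceeds the threshold.

    Returns (number of 'strongly correlated' pairs, total number of pairs).
    """
    rng = random.Random(seed)
    # Every variable is independent Gaussian noise: there is nothing to discover.
    data = [[rng.gauss(0.0, 1.0) for _ in range(n_samples)]
            for _ in range(n_variables)]
    pairs = list(itertools.combinations(range(n_variables), 2))
    strong = [(i, j) for i, j in pairs
              if abs(pearson(data[i], data[j])) > threshold]
    return len(strong), len(pairs)


if __name__ == "__main__":
    strong, total = count_spurious_pairs()
    print(f"{strong} of {total} pairs of unrelated variables have |r| > 0.5")
```

With only 20 measurements per variable, a correlation stronger than 0.5 turns up by chance in a few per cent of pairs, so a trawl through enough variables is all but guaranteed to find some. Without a hypothesis, nothing distinguishes these from the real thing.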

Biology, in which the profusion of evolutionary contingencies makes the formulation of broad hypotheses particularly hard, has long felt the danger of a Baconian retreat to pure data-gathering. The Austrian biochemist Erwin Chargaff, whose work helped elucidate how DNA stores genetic information, commented on this tendency as early as 1974:

‘Now when I go through a laboratory… there they all sit before the same high-speed centrifuges or scintillation counters, producing the same superposable graphs. There has been very little room left for the all important play of scientific imagination.’

Thanks to this, Chargaff said, ‘a pall of monotony has descended on what used to be the liveliest and most attractive of all scientific professions’. Like Chargaff, the pioneer of molecular biology Walter Gilbert saw an encroachment of corporate strategies in the repetition ad nauseam of standardised instrumental procedures. The business of science was becoming an industrial process, manufacturing data on the production line: data produced, like consumer goods, because we have the instrumental means to do so, not because anyone knows what to do with it all.

High-energy physics works on a similar industrial scale, with big machines at the centre, but at least it doesn’t suffer from a paucity of hypotheses. Indeed, it faces the opposite problem: a consensus around a single idea, into which legions of workers burrow single-mindedly. Donald Glaser, the inventor of the bubble chamber, saw this happening in the immediate postwar period, once the Manhattan Project had provided the template. He confessed: ‘I didn’t want to join an army of people working at big machines.’ For him, the machines were taking over. Only by getting out of that racket did he devise his Nobel-prize-winning technique for spotting new particles.

To investigate the next layer of reality’s onion, there’s no getting away from the need for big particle colliders to reach the incredible energies required. But physics will be in trouble if, instead of celebrating its smartest marriages of ideas and instruments, it becomes a cult of its biggest machine.

The challenge for the scientist, particularly in the era of Big Science, is to keep the instrument in its place. The best scientific kit comes from thinking about how to solve a problem. But once it becomes a part of the standard repertoire and acquires a lumbering momentum of its own, it might start to constrain thinking more than it assists it. As the historians of science Albert van Helden and Thomas Hankins said in 1994: ‘Because instruments determine what can be done, they also determine to some extent what can be thought.’