For the French philosopher Paul Virilio, technological development is inextricable from the idea of the accident. As he put it, each accident is ‘an inverted miracle… When you invent the ship, you also invent the shipwreck; when you invent the plane, you also invent the plane crash; and when you invent electricity, you invent electrocution.’ Accidents mark the spots where anticipation met reality and came off worse. Yet each is also a spark of secular revelation: an opportunity to exceed the past, to make tomorrow’s worst better than today’s, and on occasion to promise ‘never again’.

This, at least, is the plan. ‘Never again’ is a tricky promise to keep: in the long term, it’s not a question of if things go wrong, but when. The ethical concerns of innovation thus tend to focus on harm’s minimisation and mitigation, not the absence of harm altogether. A double-hulled steamship poses less risk per passenger mile than a medieval trading vessel; a well-run factory is safer than a sweatshop. Plane crashes might cause many fatalities, but refinements such as a checklist, computer and co-pilot insure against all but the wildest of unforeseen circumstances.

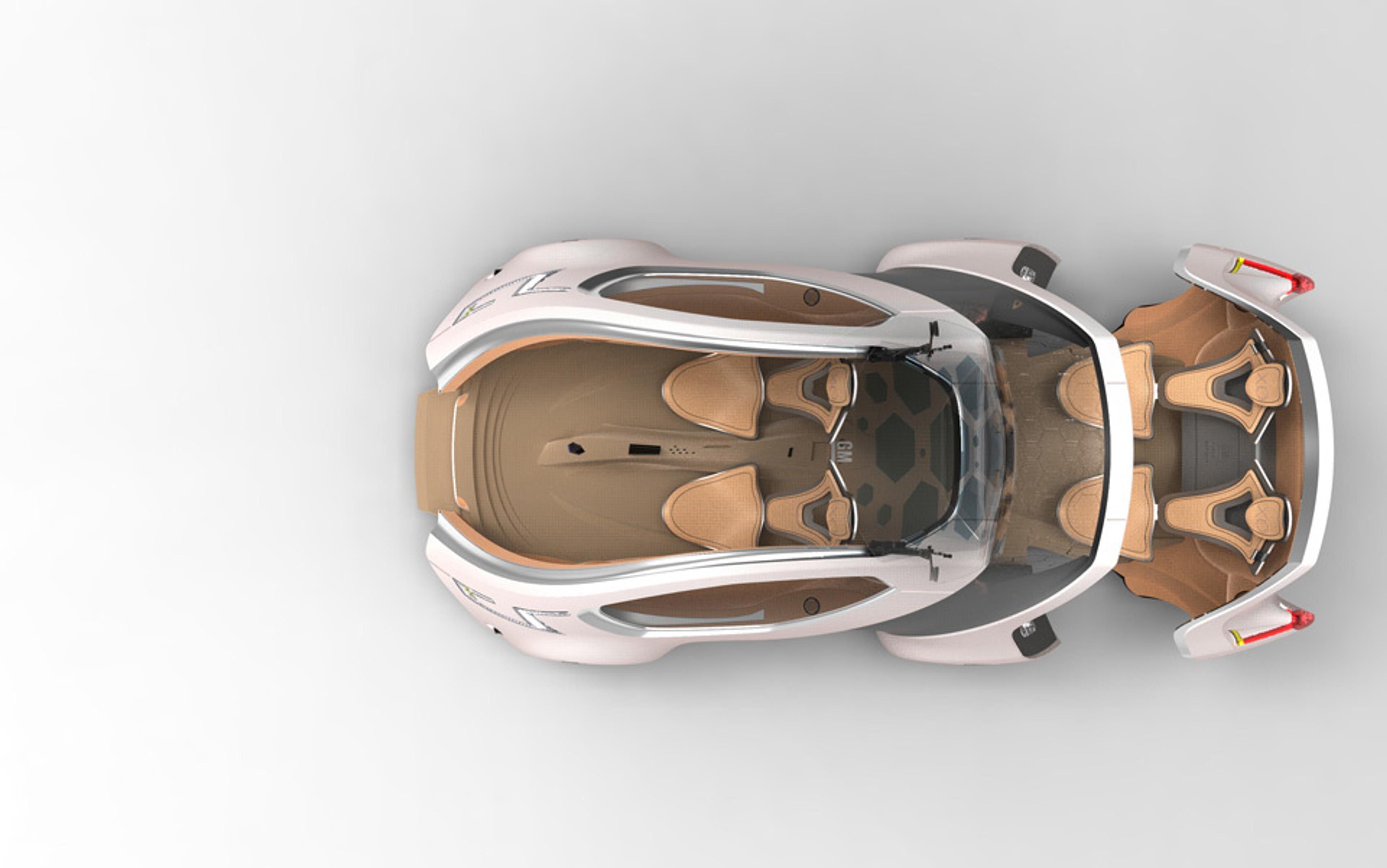

Similar refinements are the subject of one of the liveliest debates in practical ethics today: the case for self-driving cars. Modern motor vehicles are safer and more reliable than they have ever been – yet more than 1 million people are killed in car accidents around the world each year, and more than 50 million are injured. Why? Largely because one perilous element in the mechanics of driving remains unperfected by progress: the human being.

Enter the cutting edge of machine mitigation. Back in August 2012, Google announced that it had achieved 300,000 accident-free miles testing its self-driving cars. The technology remains some distance from the marketplace, but the statistical case for automated vehicles is compelling. Even when they’re not causing injury, human-controlled cars are often driven inefficiently, ineptly, antisocially, or in other ways additive to the sum of human misery.

What, though, about more local contexts? If your vehicle encounters a busload of schoolchildren skidding across the road, do you want to live in a world where it automatically swerves, at a speed you could never have managed, saving them but putting your life at risk? Or would you prefer to live in a world where it doesn’t swerve but keeps you safe? Put like this, neither seems a tempting option. Yet designing self-sufficient systems demands that we resolve such questions. And these possibilities take us in turn towards one of the hoariest thought-experiments in modern philosophy: the trolley problem.

In its simplest form, coined in 1967 by the English philosopher Philippa Foot, the trolley problem imagines the driver of a runaway tram heading down a track. Five men are working on this track, and are all certain to die when the trolley reaches them. Fortunately, it’s possible for the driver to switch the trolley’s path to an alternative spur of track, saving all five. Unfortunately, one man is working on this spur, and will be killed if the switch is made.

In this original version, it’s not hard to say what should be done: the driver should make the switch and save five lives, even at the cost of one. If we were to replace the driver with a computer program, creating a fully automated trolley, we would also instruct it to pick the lesser evil: to kill fewer people in any similar situation. Indeed, we might actively prefer a program to be making such a decision, as it would always act according to this logic while a human might panic and do otherwise.

The trolley problem becomes more interesting in its plentiful variations. In a 1985 article, the MIT philosopher Judith Jarvis Thomson offered this: instead of driving a runaway trolley, you are watching it from a bridge as it hurtles towards five helpless people. Using a heavy weight is the only way to stop it and, as it happens, you are standing next to a large man whose bulk (unlike yours) is enough to stop the trolley. Should you push this man off the bridge, killing him, in order to save those five lives?

A computer program like the one driving our first tram would have no problem resolving this. Indeed, it would see no distinction between the cases. Where there are no alternatives, one life should be sacrificed to save five; two lives to save three; and so on. The fat man should always die – a form of ethical reasoning called consequentialism, meaning conduct should be judged in terms of its consequences.
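To make this concrete, here is a minimal, hypothetical sketch of such a purely consequentialist rule. The `Option` type, the `choose_option` function and the idea of describing each available action by a single count of expected deaths are illustrative assumptions, not the design of any real system. Notice that the program receives only numbers: whether an act means flipping a switch or pushing a man from a bridge never enters the calculation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One available course of action (hypothetical representation)."""
    description: str
    expected_deaths: int


def choose_option(options: list[Option]) -> Option:
    """Return the option with the fewest expected deaths.

    A purely consequentialist rule: only outcomes are compared, so the
    manner of the act (switching a track, pushing a person) is ignored.
    """
    if not options:
        raise ValueError("at least one option is required")
    return min(options, key=lambda option: option.expected_deaths)


# Foot's case: the driver can stay on course or switch to the spur.
switch_case = [
    Option("stay on the main track", expected_deaths=5),
    Option("switch to the spur", expected_deaths=1),
]

# Thomson's case: the bystander can do nothing or push the man.
bridge_case = [
    Option("do nothing", expected_deaths=5),
    Option("push the man off the bridge", expected_deaths=1),
]

for case in (switch_case, bridge_case):
    print(choose_option(case).description)
```

Because both cases reduce to the same pair of numbers, the rule picks the one-death option each time. Whatever difference people feel between the two scenarios has nowhere to live in a program like this.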

When presented with Thomson’s trolley problem, however, many people feel that it would be wrong to push the fat man to his death. Premeditated murder is inherently wrong, they argue, no matter what its results – a form of ethical reasoning called deontology, meaning conduct should be judged by the nature of an action rather than by its consequences.

The friction between deontology and consequentialism is at the heart of every version of the trolley problem. Yet perhaps the problem’s most unsettling implication is not the existence of this friction, but the fact that – depending on how the story is told – people tend to hold wildly different opinions about what is right and wrong.

Pushing someone to their death with your bare hands is deeply problematic psychologically, even if you accept that it’s theoretically no better or worse than killing them from 10 miles away. Meanwhile, allowing someone at a distance (a starving child in another country, for example) to die through one’s inaction seems barely to register a qualm. As philosophers such as Peter Singer have persuasively argued, it’s hard to see why we should accept this.

Great minds have been wrestling with similar complexities for millennia, perhaps most notably in the form of Thomas Aquinas’s doctrine of double effect. Originally developed in the 13th century to examine the permissibility of self-defence, the doctrine argues that your intention when performing an act must be taken into account when your actions have some good and some harmful consequences. So if you choose to divert a trolley in order to save five lives, your primary intention is the saving of life. Even if one death proves unavoidable as a secondary effect, your act falls into a different category from premeditated murder.

The doctrine of double effect captures an intuition that most people (and legal systems) share: plotting to kill someone and then doing so is a greater wrong than accidentally killing them. Yet an awkward question remains: how far can we trust human intuitions and intentions in the first place? As the writer David Edmonds explores in his excellent new book Would You Kill the Fat Man? (2013), a series of emerging disciplines have begun to stake their own claims around these themes. For the psychologist Joshua Greene, director of Harvard’s Moral Cognition Lab, the doctrine of double effect is not so much a fundamental insight as a rationalisation after the fact.

In his latest book Moral Tribes (2013), Greene acknowledges that almost everyone feels an instinctual sense of moral wrong about people using personal force to harm someone else. For him, this instinctual moral sense is important but far from perfect: a morsel of deep-rooted brain function that can hardly be expected to keep up with civilisational progress. It privileges the immediate over the distant, and actions over omissions; it cannot follow complex chains of cause and effect. It is, in other words, singularly unsuitable for judging human actions as amplified by the vast apparatus of global trade, politics, technology and economic interconnection.

Here, Greene’s arguments converge with the ethics of automation. Human beings are like cameras, he suggests, in that they possess two moral modes: automatic and manual. Our emotions are ‘automatic processes… devices for achieving behavioural efficiency’, allowing us to respond appropriately to everyday encounters without having to think everything through from first principles. Our reasoning, meanwhile, is the equivalent of a ‘manual’ mode: ‘the ability to deliberately work through complex, novel problems’.

It’s a dichotomy familiar from the work of behavioural economist Daniel Kahneman at Princeton. Unlike Kahneman, however, Greene is an optimist when it comes to overcoming the biases evolution has baked into our brains. ‘With a little perspective,’ he argues, ‘we can use manual-mode thinking to reach agreements with our “heads” despite the irreconcilable differences in our “hearts”.’ Or, as the conclusion of Moral Tribes more bluntly puts it, we must ‘question the laws written in our hearts and replace them with something better’.

This ‘something better’ looks more than a little like a self-driving car. At least, it looks like the substitution of a more efficient external piece of automation for our own circuitry. After all, if common-sense morality is a marvellous but regrettably misfiring hunk of biological machinery, what greater opportunity could there be than to set some pristine new code in motion, unburdened by a bewildered brain? If you can quantify general happiness with a sufficiently pragmatic precision, Greene argues, you possess a calculus able to cut through biological baggage and tribal allegiances alike.

Automation, in this context, is a force pushing old principles towards breaking point. If I can build a car that will automatically avoid killing a bus full of children, albeit at great risk to its driver’s life, should any driver be given the option of disabling this setting? And why stop there: in a world that we can increasingly automate beyond our reaction times and instinctual reasoning, should we trust ourselves even to conduct an assessment in the first place?

Beyond the philosophical friction, this last question suggests another reason why many people find the trolley problem disturbing: because its consequentialist resolution presents not only the possibility that an ethically superior action might be calculable via algorithm (not in itself a controversial claim) but also that the right algorithm can itself be an ethically superior entity to us.

For the moment, machines able to ‘think’ in anything approaching a human sense remain science fiction. How we should prepare for their potential emergence, however, is a deeply unsettling question – not least because intelligent machines seem considerably more achievable than any consensus around their programming or consequences.

Consider medical triage – a field in which automation and algorithms already play a considerable part. Triage means taking decisions that balance risk and benefit amid a steady human trickle of accidents. Given that time and resources are always limited, a patient on the cusp of death may take priority over one merely in agony. Similarly, if only two out of three dying patients can be dealt with instantly, those most likely to be saved by rapid intervention may be prioritised; while someone insisting that their religious beliefs forbid the treatment that would save their child’s life may be overruled.
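A crude, hypothetical sketch of the second rule above (when only two of three dying patients can be treated at once, favour those most likely to be saved by rapid intervention) might look like this. The `survival_gain` field, meaning an assumed rise in survival probability from immediate treatment, and the figures given to patients A, B and C are invented for illustration; real triage protocols weigh far more factors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    name: str
    # Hypothetical: estimated rise in survival probability if treated now.
    survival_gain: float


def select_for_treatment(patients: list[Patient], capacity: int) -> list[Patient]:
    """Return up to `capacity` patients who gain most from immediate care."""
    if capacity < 0:
        raise ValueError("capacity cannot be negative")
    ranked = sorted(patients, key=lambda p: p.survival_gain, reverse=True)
    return ranked[:capacity]


patients = [
    Patient("A", survival_gain=0.6),
    Patient("B", survival_gain=0.1),
    Patient("C", survival_gain=0.4),
]
chosen = select_for_treatment(patients, capacity=2)
print([p.name for p in chosen])  # ['A', 'C']
```

The code itself is trivial; the ethically loaded part is the weighting it takes as given, namely what counts as a patient’s ‘gain’ and who decides.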

On the battlefield, triage can mean leaving the wounded behind, if tending to them risks others’ lives. In public health, quarantine and contamination concerns can involve abandoning a few in order to protect the many. Such are the ancient dilemmas of collective existence – tasks that technology and scientific research have made many orders of magnitude more efficient, effective and evidence-based.

What happens, though, when we are not simply programming ever-nimbler procedures into our tools, but instead using them to help determine the principles behind these decisions in the first place: the weighting of triage, the moment at which a chemical plant’s doors are automatically sealed in the event of crisis? At the other end of the scale, we might ask: should we seek to value all human lives equally, by outsourcing our incomes and efforts to the discipline of an AI’s equitable distribution? Taxation is a flawed solution to the problem; but, with determination and ingenuity, brilliant programs can surely do better. And if machines, under certain conditions, are better than us, then what right do we have to go on blundering our way through decisions likely only to end badly for the species?

You might hesitate over such speculations. Yet it’s difficult to know where extrapolation will end. We will always need machines, after all, to protect us from other machines. At a certain point, only the intervention of one artificially intelligent drone might be sufficient to protect me from another. For the Oxford philosopher Nick Bostrom, what ought to exercise us is not an emotional attachment to the status quo, but rather the question of what it means to move successfully into an unprecedented frame of reference.

In their paper ‘The Ethics of Artificial Intelligence’ (2011), Bostrom and the AI theorist Eliezer Yudkowsky argued that increasingly complex decision-making algorithms are both inevitable and desirable – so long as they remain transparent to inspection, predictable to those they govern, and robust against manipulation.

If my self-driving car is prepared to sacrifice my life in order to save multiple others, this principle should be made clear in advance together with its exact parameters. Society can then debate these, set a seal of approval (or not) on the results, and commence the next phase of iteration. I might or might not agree, but I can’t say I wasn’t warned.

What about worst-case scenarios? When it comes to automation and artificial intelligence, the accidents on our horizon might not be of the recoverable kind. Get it wrong, enshrine priorities inimical to human flourishing in the first generation of truly intelligent machines, and there might be no people left to pick up the pieces.

Unlike us, machines do not have a ‘nature’ consistent across vast reaches of time. They are, at least to begin with, whatever we set in motion – with an inbuilt tendency towards the exponential. As Stuart Armstrong, who works with Nick Bostrom at the Future of Humanity Institute, has noted: if you build just one entirely functional automated car, you now have the template for 1 billion. Replace one human worker with a general-purpose artificial intelligence, and the total unemployment of the species is yours for the extrapolating. Design one entirely autonomous surveillance drone, and you have a plan for monitoring, in perpetuity, every man, woman and child alive.

In a sense, it all comes down to efficiency – and how ready we are for any values to be relentlessly pursued on our behalf. Writing in Harper’s magazine last year, the essayist Thomas Frank considered the panorama of chain-food restaurants that skirts most US cities. Each outlet is a miracle of modular design, resolving the production and sale of food into an impeccably optimised operation. Yet, Frank notes, the system’s success on its own terms comes at the expense of all those things left uncounted: the skills it isn’t worth teaching a worker; the resources it isn’t cost-effective to protect:

> The modular construction, the application of assembly-line techniques to food service, the twin-basket fryers and bulk condiment dispensers, even the clever plastic lids on the coffee cups, with their fold-back sip tabs: these were all triumphs of human ingenuity. You had to admire them. And yet that intense, concentrated efficiency also demanded a fantastic wastefulness elsewhere – of fuel, of air-conditioning, of land, of landfill. Inside the box was a masterpiece of industrial engineering; outside the box were things and people that existed merely to be used up.

Society has been savouring the fruits of automation since well before the industrial revolution and, whether you’re a devout utilitarian or a sceptical deontologist, the gains in everything from leisure and wealth to productivity and health have been vast.

Yet as agency passes out of the hands of individual human beings, in the name of various efficiencies, the losses outside these boxes don’t simply evaporate into non-existence. If our destiny is a new kind of existential insulation – a world in which machine gatekeepers render certain harms impossible and certain goods automatic – this won’t be because we will have triumphed over history and time, but because we will have delegated engagement to something beyond ourselves.

Time is not, however, a problem we can solve, no matter how long we live. In Virilio’s terms, every single human life is an accident waiting to happen. We are all statistical liabilities. If you wait long enough, it always ends badly. And while the aggregate happiness of the human race might be a supremely useful abstraction, even this eventually amounts to nothing more than insensate particles and energy. There is no cosmic set of scales on hand.

Where once the greater good was a phrase, it is now becoming a goal that we can set in motion independent of us. Yet there’s nothing transcendent about even our most brilliant tools. Ultimately, the measure of their success will be the same as it has always been: the strange accidents of a human life.