Mural of John Coltrane by graffiti artist Omen. Photo by Deeboy/Flickr

In December 1964, over a single evening session in Englewood Cliffs, New Jersey, John Coltrane and his quartet recorded the entirety of A Love Supreme. This jazz album is considered Coltrane’s masterpiece – the culmination of his spiritual awakening – and sold a million copies. What it represents is all too human: a climb out of addiction, a devotional quest, a paean to God.

Five decades later and 50 miles downstate, over 12 hours this April and fuelled by Monster energy drinks in a spare bedroom in Princeton, New Jersey, Ji-Sung Kim wrote an algorithm to teach a computer to teach itself to play jazz. Kim, a 20-year-old Princeton sophomore, was in a rush – he had a quiz the next morning. The resulting neural network project, called deepjazz, trended on GitHub, generated a buzz of excitement and skepticism from the Hacker News commentariat, got 100,000 listens on SoundCloud, and was big in Japan.

This half-century gulf, bracketed by saxophone brass and Python code, has seen a rise in computer-generated music and visual art of all methods and genres. Computer art in the era of big data and deep learning, though, is a reckoning for algorithms, capital-A. We must now embrace – either to wrestle or to caress – computer art.

In industry, there is blunt-force algorithmic tension – ‘Efficiency, capitalism, commerce!’ versus ‘Robots are stealing our jobs!’ But for algorithmic art, the tension is subtler. Only 4 per cent of the work done in the United States economy requires ‘creativity at a median human level’, according to the consulting firm McKinsey and Company. So for computer art – which tries explicitly to zoom into this small piece of that vocational pie – it’s a question not of efficiency or equity, but of trust. Art requires emotional and phrenic investments, with the promised return of a shared slice of the human experience. When we view computer art, the pestering, creepy worry is: who’s on the other end of the line? Is it human? We might, then, worry that it’s not art at all.

Algorithms’ promise holds potent popular allure. A search for the word ‘algorithm’ in the webpages of the empirically minded site FiveThirtyEight (where I’m on staff) returns 516 results, as I write. I’m personally responsible for more than a few of those. In the age of big data, algorithms are meant to treat disease, predict the decisions of the Supreme Court, revolutionise sports and predict the beauty of sunsets. They will also, it’s said, prevent suicide, improve your arugula, predict police misconduct, and tell if a movie will bomb.

The more grandiose would-be applications of algorithms and artificial intelligence (AI) are often preceded by ostensibly more manageable proving grounds – games, say. Before IBM’s question-answering computer, Watson, treats cancer, for example, it goes on the TV quiz show Jeopardy! Google’s AlphaGo took on a top human Go champion in a ‘grand challenge’ for AI. But these contests aren’t trivial stepping stones – they can be seen as affronts to humankind. One commentator, realising that Google’s program would win a match, said he ‘felt physically unwell’.

It’s much the same for computer art projects. Kim and his friend Evan Chow, whose code is used in deepjazz, are members of the youngest generation of a long lineage of computer ‘artists’. (These two aren’t exactly starving artists, though. This summer, Kim’s working at Merck, and Chow’s at Uber.) As the three of us sat in a high-backed wooden booth in Cafe Vivian, on the Princeton campus, actual, honest-to-God human jazz played over the speakers – Rahsaan Roland Kirk’s frenetic ‘Pedal Up’ (1973) – and as Kim played me samples generated by deepjazz from his laptop, we were awash in an unholy jazz + jazz = jazz moment.

‘The idea is pretty profound,’ Kim said, as I strained to decipher what was human in the cacophony. ‘You can use an AI to create art. That’s normally a process that we think of as immutably human.’ Kim agreed that deepjazz, and computer art, is often a proving ground, but he saw ends as well as means. ‘I’m not going to use the word “disruptive”,’ he said, then continued: ‘It’s crazy how AI could shape the music industry,’ imagining an app built on tech like deepjazz. ‘You hum a melody and the phone plays back your own custom, AI-generated song.’

Like a profitless startup, the value of many computer-art projects thus far is their perceived promise. The public deepjazz demo is limited, and improvises off just one song, ‘And Then I Knew’ (1995) by the Pat Metheny Group (Kim wasn’t quite sure how to pronounce ‘Metheny’). But the code is public, and it’s been tweaked to noodle the Friends theme song, for example.

Of course it’s not just jazz music, and not just deepjazz, that has gotten the computer treatment – jigs and folk songs, a ‘Genetic Jammer’, polyphonic music, and quite a bit else have been put through the algorithmic wringer.

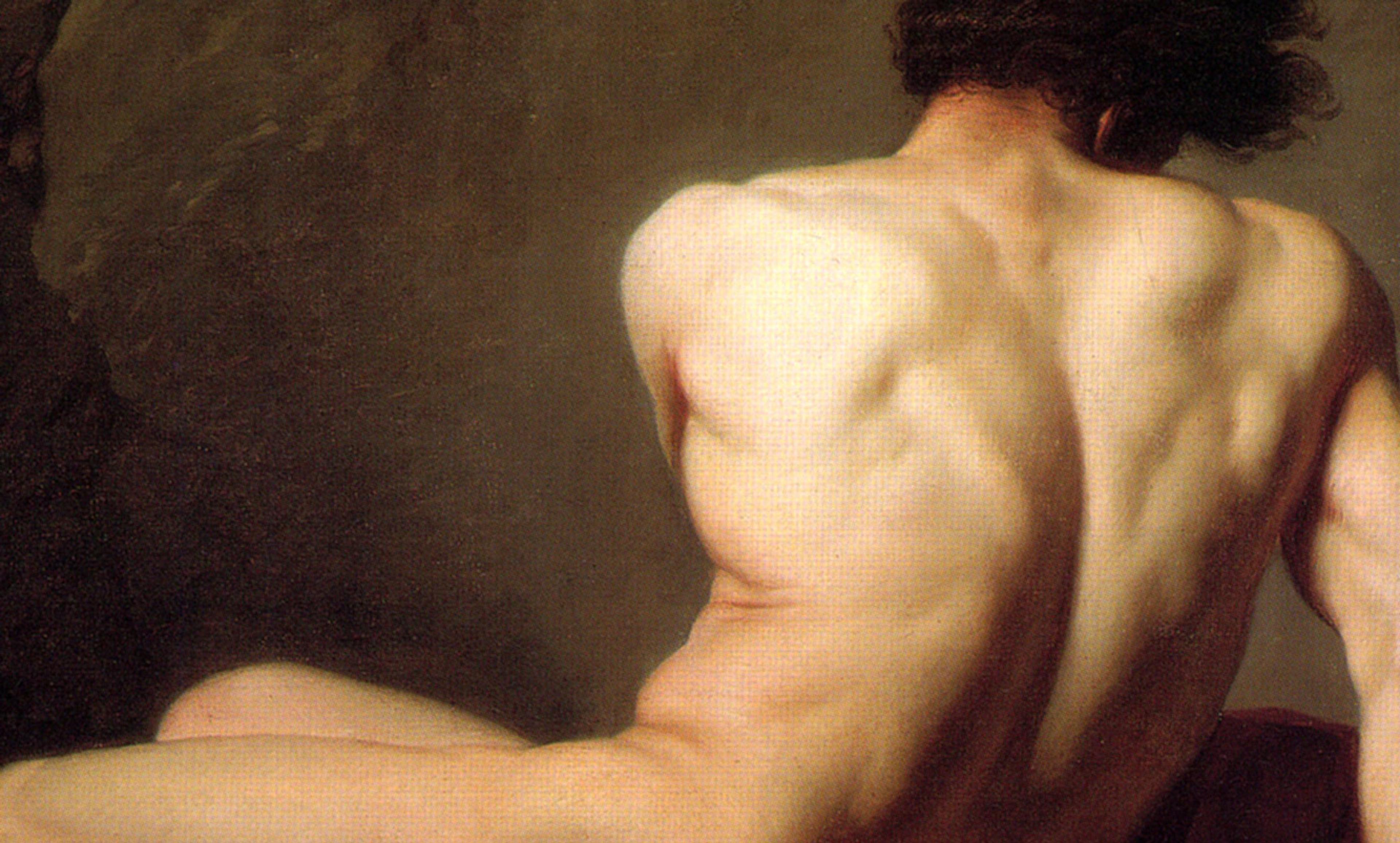

Visual art, too, has been subjected to algorithms for decades now. Two engineers created this image – probably the first computer nude – at Bell Labs in Murray Hill, New Jersey, somewhere geographically between Coltrane and Kim, in 1966. The piece was exhibited at the Museum of Modern Art in 1968.

The New York Times reviewed one of the first exhibitions of computer art, in 1965 (just a few months after Coltrane’s recording session) featuring work by two scientists and an IBM #7094 digital computer, at a New York gallery, now long shuttered. ‘So far the means are of greater interest than the end,’ the Times wrote. But the review, by the late Stuart Preston, goes on to strike a surprisingly enthusiastic tone:

No matter what the future holds – and scientists predict a time when almost any kind of painting can be computer-generated – the actual touch of the artist will no longer play any part in the making of a work of art. When that day comes, the artist’s role will consist of mathematically formulating, by arranging an array of points in groups, a desired pattern. From then on, all will be entrusted to the deus ex machina. Freed from the tedium of technique and the mechanics of picture-making, the artist will simply ‘create’.

The machine is just the brush – a human holds it. There are, indeed, examples of computers helping musicians to simply ‘create’.

Emily Howell is a computer program. A 1990s creation of David Cope, now a professor emeritus at the University of California at Santa Cruz, ‘she’ was born out of Cope’s frustrating struggle to finish an opera of his own. (Howell’s compositions are performed by human musicians.)

This music is passable. It might even be good and, for me, is safely on the right bank of the uncanny valley. But another thing that makes it more interesting is the simple fact that I know it was composed by a computer. I’m interested in that as a medium – an amplification of Cope’s artistic expression, rather than a sublimation. But the tension persists.

I’ve fallen down other rabbit holes, too: for one, the work of Manfred Mohr, an early algorithmic art pioneer who is himself a (human) jazz musician, as well as an artist – and in particular his painting P‑706/B (2000), based on a six-dimensional hypercube. I spent the next hour reading about Mohr, the man.

Courtesy Manfred Mohr

Sometimes in ‘computer music’ it’s also the other way around – humans name the tune, software dances to it. And in one of these cases, the market has spoken loudly. Vocaloids are singing synthesisers, developed by Yamaha, and anthropomorphised by the Japanese company Crypton. One popular Vocaloid, Hatsune Miku (the name translates to ‘the first sound from the future’), headlined a barn-burning North American tour this year, where Miku appeared as a hologram, drawing lines around the block for $75 tickets at New York’s Hammerstein Ballroom. Miku is a huge pop star, but not a human. ‘She’ also appeared on the Late Show with David Letterman.

So it’s increasingly not just dorm-room hackers and cloistered academics pecking at computer art to show off their chops or get papers published. Last month, the Google Brain team announced Magenta, a project to use machine learning for exactly the purposes described here, and asked the question: ‘Can we use machine learning to create compelling art and music?’ (The answer is pretty clearly already ‘Yes,’ but there you go.) The project follows in the footsteps of Google’s Deep Dream Generator, which reimagines images in arty, dreamy (or nightmarish) ways, using neural networks.

But the honest-to-God truth, at the end of all of this, is that this whole notion is in some way a put-on: a distinction without a difference. ‘Computer art’ doesn’t really exist in any more provocative a sense than ‘paint art’ or ‘piano art’ does. The algorithmic software was written by a human, after all, using theories thought up by a human, using a computer built by a human, using specs written by a human, using materials gathered by a human, at a company staffed by humans, using tools built by a human, and so on. Computer art is human art – a subset rather than a distinction. It’s safe to release the tension.

A different human commentator, after witnessing the program beat the human champ at Go, felt physically fine and struck a different note: ‘An amazing result for technology. And a compliment to the incredible capabilities of the human brain.’ So it is with computer art. It’s a compliment to the human brain – and a complement to oil paints and saxophone brass.