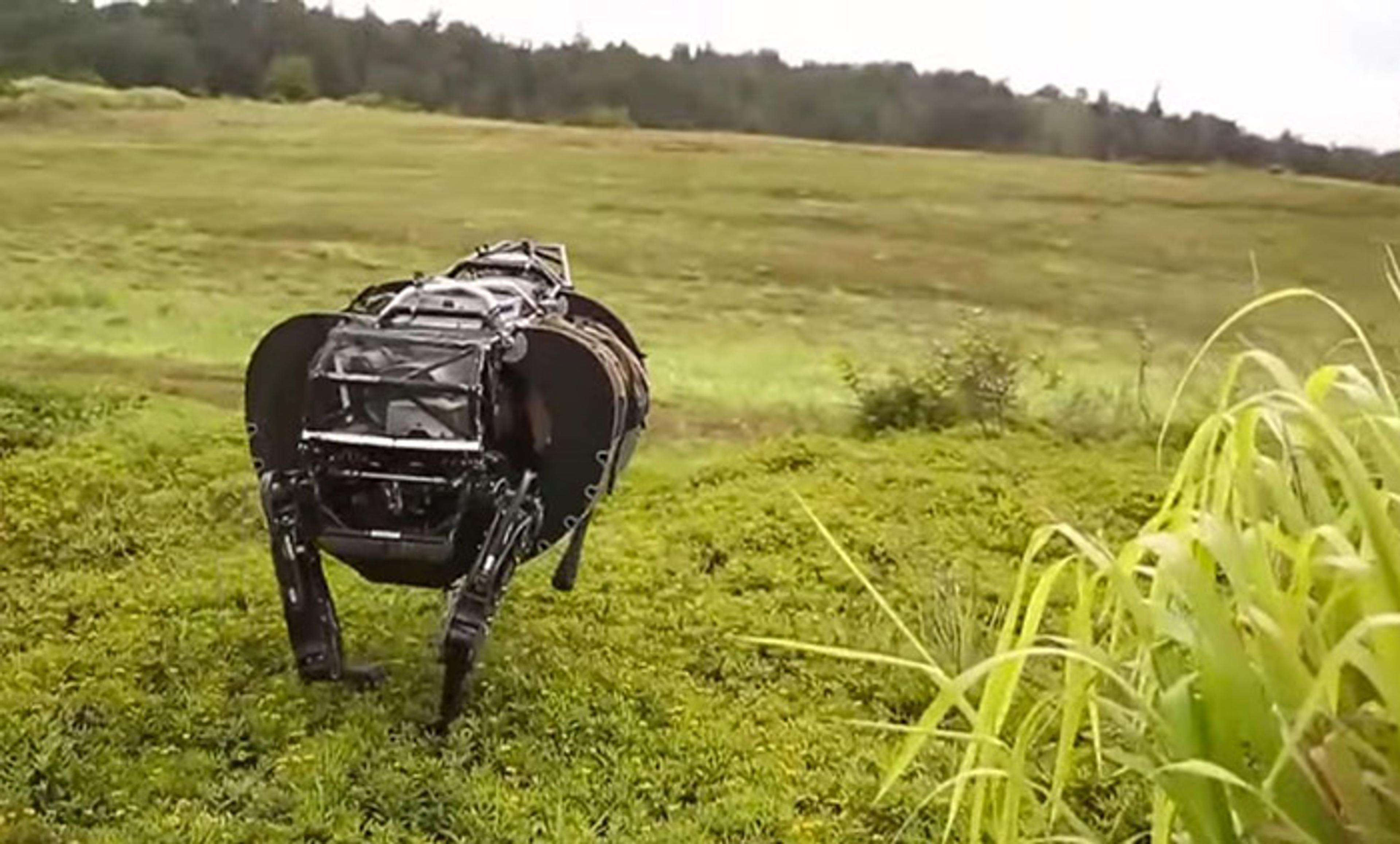

Ben Rimes/Flickr

Down goes HotBot 4b into the volcano. The year is 2050 or 2150, and artificial intelligence has advanced sufficiently that such robots can be built with human-grade intelligence, creativity and desires. HotBot will now perish on this scientific mission. Does it have rights? In commanding it to go down, have we done something morally wrong?

The moral status of robots is a frequent theme in science fiction, back at least to Isaac Asimov’s robot stories, and the consensus is clear: if someday we manage to create robots that have mental lives similar to ours, with human-like plans, desires and a sense of self, including the capacity for joy and suffering, then those robots deserve moral consideration similar to that accorded to natural human beings. Philosophers and researchers on artificial intelligence who have written about this issue generally agree.

I want to challenge this consensus, but not in the way you might predict. I think that, if we someday create robots with human-like cognitive and emotional capacities, we owe them more moral consideration than we would normally owe to otherwise similar human beings.

Here’s why: we will have been their creators and designers. We are thus directly responsible both for their existence and for their happy or unhappy state. If a robot needlessly suffers or fails to reach its developmental potential, it will be in substantial part because of our failure – a failure in our creation, design or nurturance of it. Our moral relation to robots will more closely resemble the relation that parents have to their children, or that gods have to the beings they create, than the relationship between human strangers.

In a way, this is no more than equity. If I create a situation that puts other people at risk – for example, if I destroy their crops to build an airfield – then I have a moral obligation to compensate them, greater than my obligation to people with whom I have no causal connection. If we create genuinely conscious robots, we are deeply causally connected to them, and so substantially responsible for their welfare. That is the root of our special obligation.

Frankenstein’s monster says to his creator, Victor Frankenstein:

I am thy creature, and I will be even mild and docile to my natural lord and king, if thou wilt also perform thy part, the which thou owest me. Oh, Frankenstein, be not equitable to every other, and trample upon me alone, to whom thy justice, and even thy clemency and affection, is most due. Remember that I am thy creature: I ought to be thy Adam….

We must either create only robots so simple that we know they do not merit moral consideration – as with all existing robots today – or bring them into existence only carefully and solicitously.

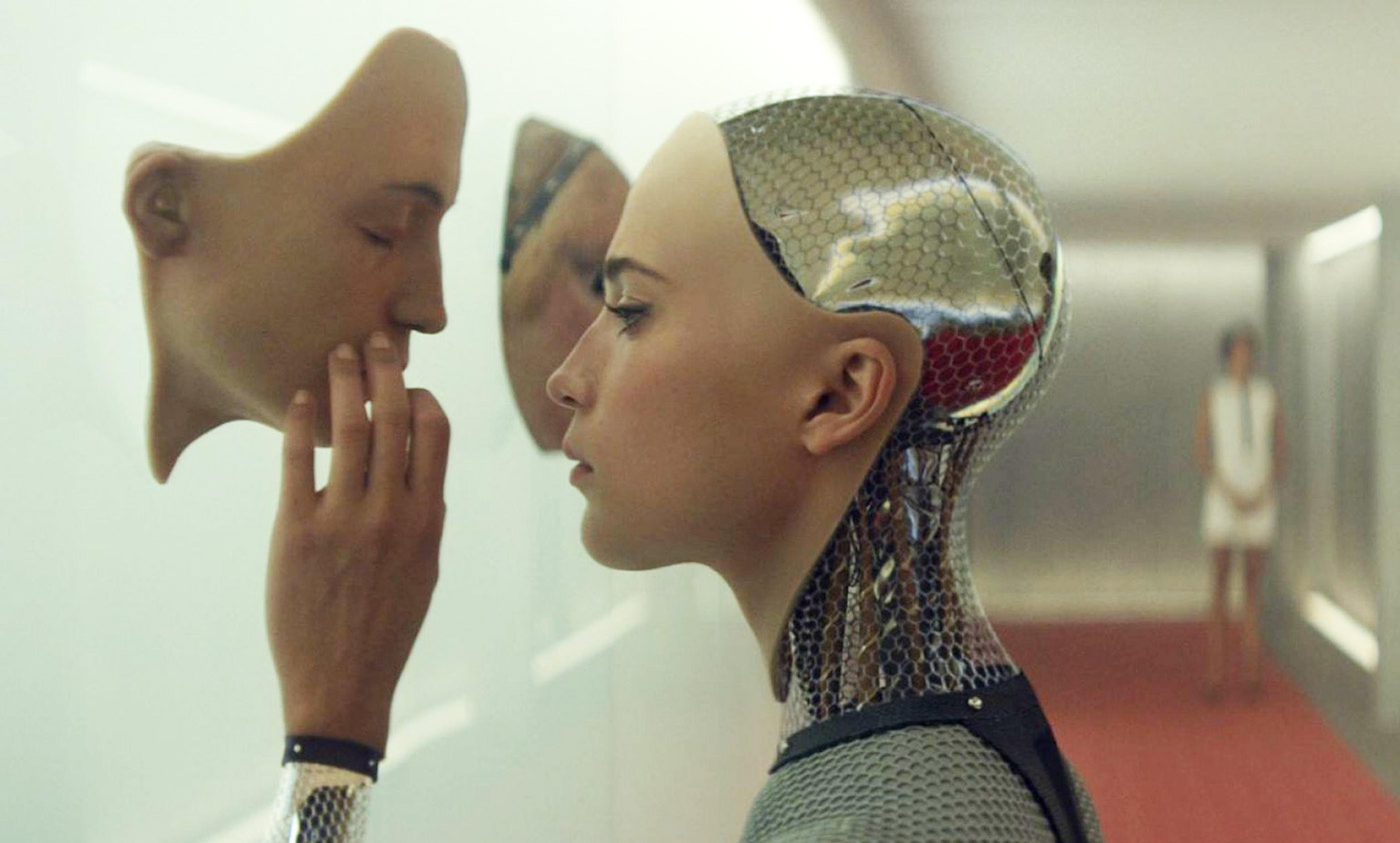

Alongside this duty to be solicitous comes another, of knowledge – a duty to know which of our creations are genuinely conscious. Which of them have real streams of subjective experience, and are capable of joy and suffering, or of cognitive achievements such as creativity and a sense of self? Without such knowledge, we won’t know what obligations we have to our creations.

Yet how can we acquire the relevant knowledge? How does one distinguish, for instance, between a genuine stream of emotional experience and simulated emotions in an artificial mind? Merely programming a superficial simulation of emotion isn’t enough. If I put a standard computer processor manufactured in 2015 into a toy dinosaur and program it to say ‘Ow!’ when I press its off switch, I haven’t created a robot capable of suffering. But exactly what kind of processing and complexity is necessary to give rise to genuine human-like consciousness? On some views – John Searle’s, for example – consciousness might not be possible in any programmed entity; it might require a structure biologically similar to the human brain. Other views are much more liberal about the conditions sufficient for robot consciousness. The scientific study of consciousness is still in its infancy. The issue remains wide open.

If we continue to develop sophisticated forms of artificial intelligence, we have a moral obligation to improve our understanding of the conditions under which artificial consciousness might genuinely emerge. Otherwise we risk moral catastrophe. One catastrophe would be sacrificing our interests for beings that don't deserve moral consideration, because their apparent happiness and suffering are not genuinely experienced. The other would be failing to recognise real robot suffering, and so unintentionally committing atrocities tantamount to slavery and murder against beings to whom we have an almost parental obligation of care.

We have, then, a direct moral obligation to treat our creations with an acknowledgement of our special responsibility for their joy, suffering, thoughtfulness and creative potential. But we also have an epistemic obligation to learn enough about the material and functional bases of joy, suffering, thoughtfulness and creativity to know when and whether our potential future creations deserve our moral concern.