Despite the vastness of the sky, airplanes occasionally crash into each other. To avoid these catastrophes, the Traffic Alert and Collision Avoidance System (TCAS) was developed. TCAS alerts pilots to potential hazards and tells them how to respond, using a series of complicated rules. In fact, this set of rules, developed over decades, is so complex that perhaps only a handful of people alive still understand it. When a new version of TCAS is developed, humans are pushed to the sidelines and simulation is used instead: if the system responds as expected across a number of test cases, it receives the engineers’ seal of approval and goes into use.

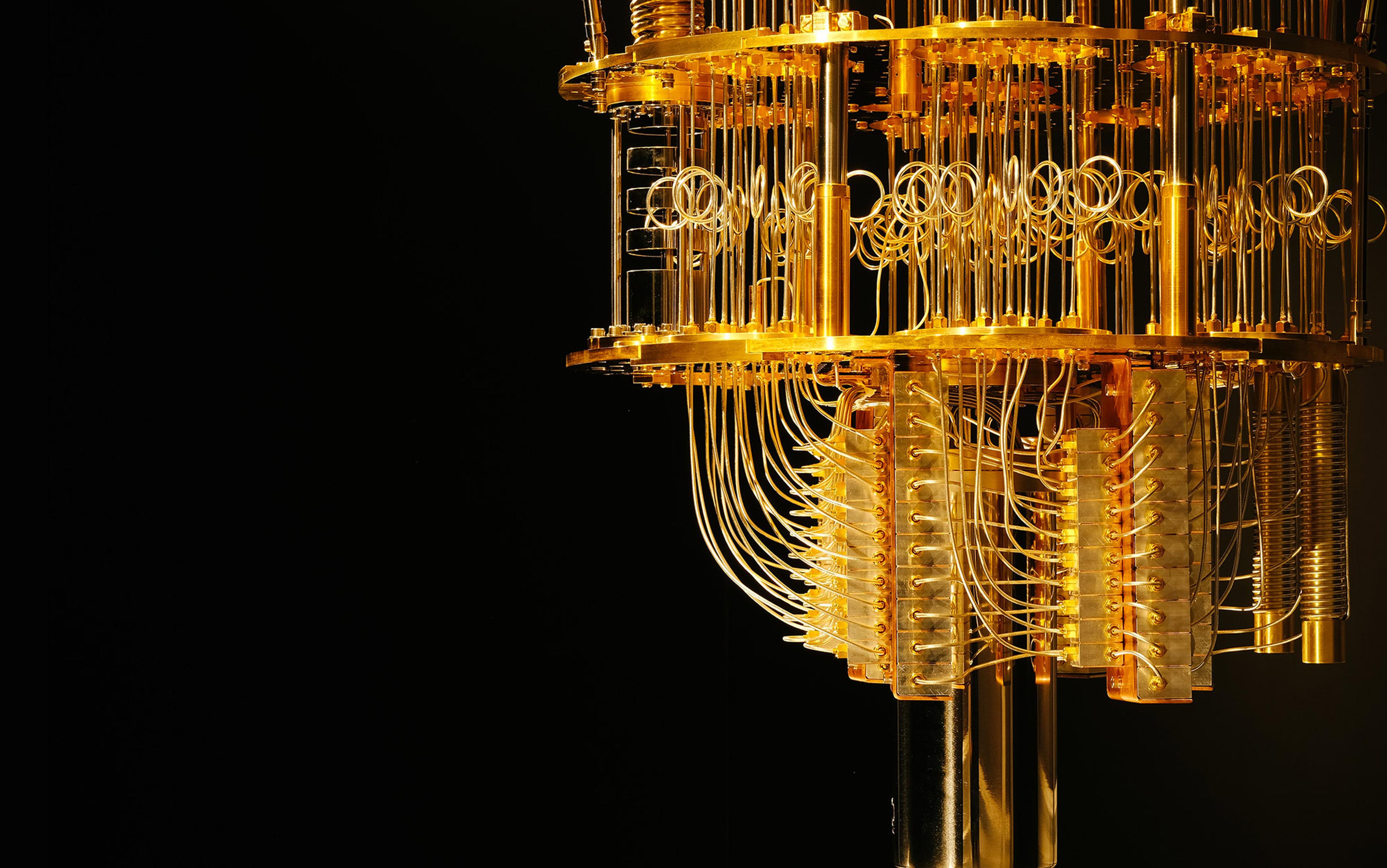

While avoiding collisions is itself a complex problem, the system we’ve built to handle it has essentially become too complicated for us to understand, and even experts are sometimes surprised by its behaviour. This escalating complexity points to a larger phenomenon in modern life. When the systems designed to save our lives are hard to grasp, we have reached a technological threshold that bears examining.

For centuries, humans have been creating ever-more complicated systems, from the machines we live with to the informational systems and laws that keep our global civilisation stitched together. Technology continues its fantastic pace of accelerating complexity — offering efficiencies and benefits that previous generations could not have imagined — but with this increasing sophistication and interconnectedness come complicated and messy effects that we can’t always anticipate. It’s one thing to recognise that technology continues to grow more complex, making the task of the experts who build and maintain our systems more complicated still, but it’s quite another to recognise that many of these systems are actually no longer completely understandable. We now live in a world filled with incomprehensible glitches and bugs. When we find a bug in a video game, it’s intriguing, but when we are surprised by the very infrastructure of our society, that should give us pause.

One of the earliest signs of technology complicating human life was the advent of the railroads, which necessitated standardised time zones in the United States to co-ordinate the dozens of new trains criss-crossing the continent. Transportation has grown orders of magnitude more complex since then. Automobiles have gone from mechanical contraptions of limited complexity to computational engines on wheels, and the road networks they run on have grown too: it’s estimated that the US has more than 300,000 intersections with traffic signals. And it’s not just the networks these machines inhabit. During the past 200 years, the number of individual parts in the machines themselves, from airplanes to calculators, has increased exponentially.

Increasing computerisation has carried technological complication into every aspect of our lives, from kitchen appliances to workout equipment. We are now living with the unintended consequences: a world we have created for ourselves that is too complicated for our humble human brains to handle. The nightmare scenario is not Skynet, a self-aware network declaring war on humanity, but messy systems so convoluted that nearly any glitch you can think of can happen. And such glitches happen far more often than we would like.

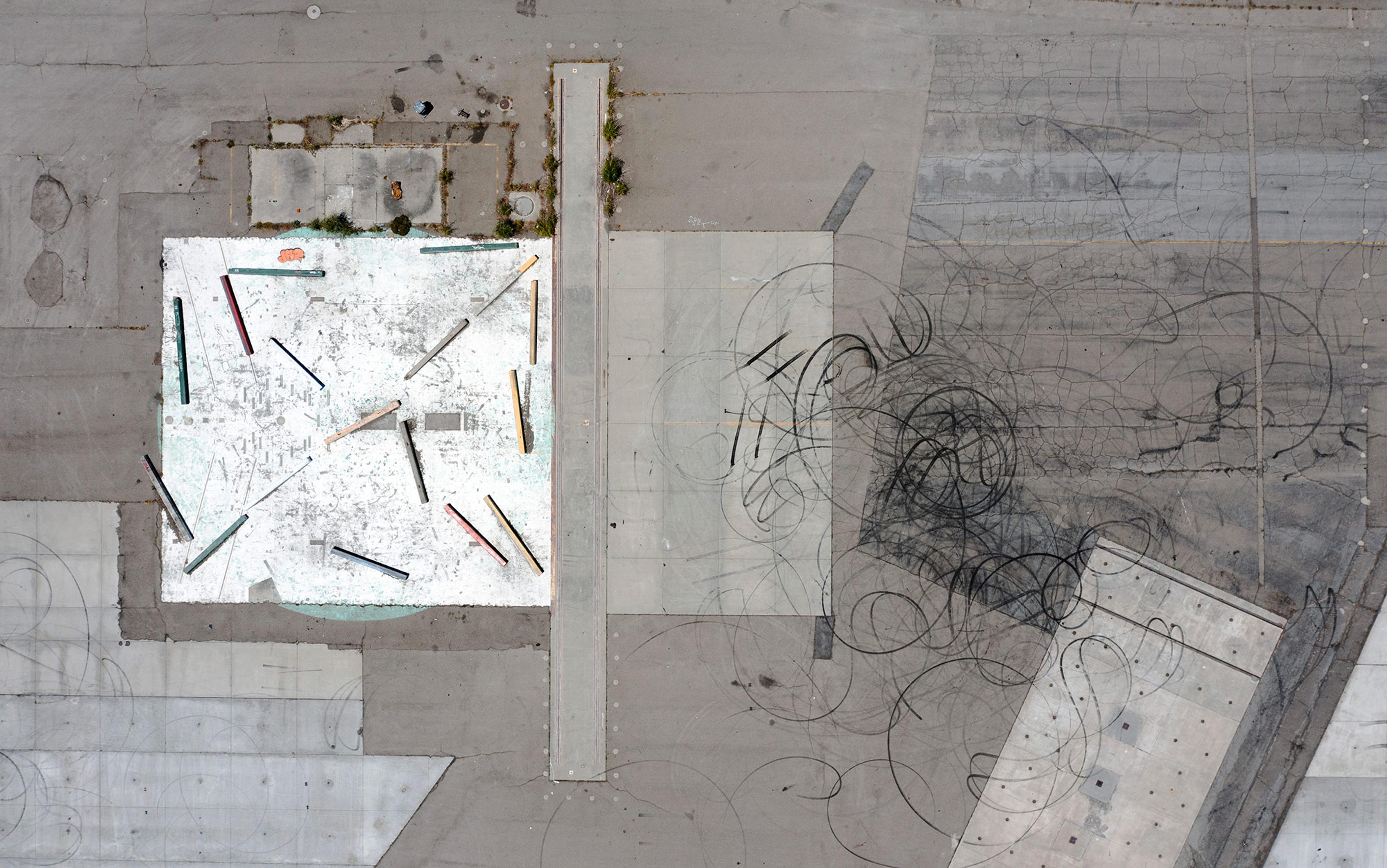

We already see hints of the endpoint toward which we seem to be hurtling: a world where nearly self-contained technological ecosystems operate outside of human knowledge and understanding. As a September 2013 paper in Scientific Reports, a Nature journal, put it, there is a complete ‘machine ecology beyond human response time’ in the financial world, where stocks are traded in an eyeblink and mini-crashes and spikes can occur within a second or less. When we push our financial trades to the limits of the speed of light, it is time to recognise that machines are interacting with each other in rich ways, essentially as algorithms trading among themselves, with humans on the sidelines.

It used to be taken for granted that there would be knowledge that no human could possibly attain. In his book The Guide for the Perplexed, the medieval scholar Moses Maimonides opined that ‘man’s intellect indubitably has a limit at which it stops’ and even listed several things he thought we would never grasp, including ‘the number of the stars of heaven’ and ‘whether that number is even or odd’. But then came the Scientific Revolution, and with it a triumphalism of understanding. Hundreds of years later, astronomers have catalogued the objects in the night sky visible to the naked eye: there are 9,110 of them (an even number).

But ever since the Enlightenment, we have moved steadily toward the ‘Entanglement’, a term coined by the American computer scientist Danny Hillis. The Entanglement is the trend towards more interconnected and less comprehensible technological surroundings. Hillis argues that our machines, while subject to rational rules, are now too complicated to understand. Whether it’s the entirety of the internet or other large pieces of our infrastructure, understanding the whole — keeping it in your head — is no longer even close to possible.

One example of this trend is our software’s increasing complexity, as measured by the number of lines of code it takes to write it. According to some estimates, the source code for the Windows operating system increased by an order of magnitude over the course of a decade, making it impossible for a single person to understand all the different parts at once. And remember Y2K? It’s true that the so-called Millennium Bug passed without serious complications, but the startling fact was that we couldn’t be sure what would happen on 1 January 2000 because the systems involved were too complex.

Even our legal systems have grown irreconcilably messy. The US Code, itself a kind of technology, is more than 22 million words long and contains more than 80,000 links from one section to another. This vast legal network is so complicated that no person could understand how it functions in its entirety. Michael Mandel and Diana Carew, of the Progressive Policy Institute in Washington, DC, have referred to this growth of legal systems as ‘regulatory accumulation’, wherein we keep adding more and more rules and regulations. Each law individually might make sense, but taken together they can be debilitating, and can even interact in surprising and unexpected ways. We even see the interplay between legal complexity and computational complexity in the troubled rollout of HealthCare.gov, the website for Obamacare. The glitches in this technological system can affect each of us.

And this trend is accelerating. For instance, we now have 3D printers, vast machinery to help construct tunnels and bridges, and even software that helps with the design of new products and infrastructure, such as sophisticated computer-aided design (CAD) programs. One computational realm, evolutionary programming, even allows software to ‘evolve’ solutions to problems, while being agnostic on what shape that final solution could take. Need an equation to fit some data? Evolutionary programming can do that — even if you can’t understand the answer it comes up with.
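To make the idea a little more concrete, here is a minimal sketch of evolutionary search in Python, offered as an illustration only. It assumes a far simpler setting than the evolutionary programming described above: the shape of the equation is fixed in advance as a quadratic, and only its three coefficients evolve through random mutation and survival of the fittest. The data, the target function and the names `evolve`, `mutate` and `error` are all hypothetical.

```python
import random

def target(x):
    # Hypothetical "unknown" process that generated the data.
    return 3.0 * x * x - 2.0 * x + 1.0

# Sample points in [-5, 5] that the evolved equation must fit.
DATA = [(k / 2.0, target(k / 2.0)) for k in range(-10, 11)]

def predict(coeffs, x):
    # Assumed fixed form: a*x^2 + b*x + c.
    a, b, c = coeffs
    return a * x * x + b * x + c

def error(coeffs):
    # Mean squared error over the data set; lower means fitter.
    return sum((predict(coeffs, x) - y) ** 2 for x, y in DATA) / len(DATA)

def mutate(coeffs, rng, scale):
    # Perturb every coefficient with Gaussian noise of the given size.
    return tuple(c + rng.gauss(0.0, scale) for c in coeffs)

def evolve(generations=500, pop_size=40, seed=0):
    rng = random.Random(seed)  # seeded so runs are reproducible
    population = [tuple(rng.uniform(-5, 5) for _ in range(3))
                  for _ in range(pop_size)]
    for gen in range(generations):
        # Selection: keep the fittest quarter unchanged.
        population.sort(key=error)
        survivors = population[: pop_size // 4]
        # Mutation size shrinks over time: big jumps early, fine tuning late.
        scale = 1.0 - gen / generations + 0.01
        children = [mutate(rng.choice(survivors), rng, scale)
                    for _ in range(pop_size - len(survivors))]
        population = survivors + children
    return min(population, key=error)

best = evolve()
print("coefficients:", best, "error:", error(best))
```

Because the fittest candidates always survive into the next generation, the best error never gets worse from one generation to the next. Nothing in the loop "knows" algebra; it simply keeps whatever happens to fit better. Tools that also evolve the structure of the equation itself are where answers that are hard for humans to interpret can arise.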

A number of years ago, a team of researchers tried to improve the design of a certain kind of computer circuit. They set the circuit a simple task and then tried to evolve a design that could perform it. After many generations, they found a successful circuit design. But here’s the interesting part: some parts of it were disconnected from the main part of the circuit, yet were essential to its function. The evolutionary program had exploited weird physical and electromagnetic phenomena, ones no engineer would ever think of using, to make the circuit complete its task. In the words of the researchers: ‘Evolution was able to exploit this physical behaviour, even though it would be difficult to analyse.’

This evolutionary technique yielded a novel technological system, one that we have difficulty understanding because we would never have come up with anything like it on our own. In chess, where computers now outplay humans and can win in ways the human mind can’t always follow, such solutions are known as ‘computer moves’: moves no human would ever make, ones that are ugly but still get results. As the American economist Tyler Cowen noted in his book Average Is Over (2013), these moves often seem wrong, but they are very effective. Computers have exposed the fact that chess, at least when played at the highest levels, is too complicated, with too many moving parts, for a person (even a grandmaster) to understand.

So how do we respond to all of this technological impenetrability? One response is to simply give up, much like the comic strip character Calvin (friend to a philosophical tiger) who declared that everything from light bulbs to vacuum cleaners works via ‘magic’. Rather than confront the complicated truth of how wind works, Calvin resorts to calling it ‘trees sneezing’. This intellectual surrender in the face of increasing complexity seems too extreme and even a bit cowardly, but what should we replace it with if we can’t understand our creations any more?

Perhaps we can replace it with the same kind of attitude we have towards weather. While we can’t actually control the weather or understand it in all of its nonlinear details, we can predict it reasonably well, adapt to it, and even prepare for it. And when the elements deliver us something unexpected, we muddle through as best as we can. So, just as we have weather models, we can begin to make models of our technological systems, even somewhat simplified ones. Playing with a simulation of the system we’re interested in — testing its limits and fiddling with its parameters, rather than understanding it completely — can be a powerful path to insight, and is a skill that needs cultivation.

For example, the computer game SimCity, a model of sorts, gives its players insight into how a city works. Before SimCity, few people outside urban planning and civil engineering had a clear mental model of how cities worked, and none could twiddle the knobs of urban life to produce counterfactual outcomes. We probably still can’t do that at the level of complexity of an actual city, but those who play these games do have a better understanding of the general effects of their actions. We need to get better at ‘playing’ simulations of the technological world more generally. This may be the direction our educational system needs to move in: teaching students how to play with something, probing its bounds to learn how it works, at least ‘sort of’.

We also need interpreters of what’s going on in these systems, a bit like TV meteorologists. Near the end of Average Is Over, Cowen speculates about these future interpreters. He says they ‘will hone their skills of seeking out, absorbing, and evaluating this information… They will be translators of the truths coming out of our networks of machines… At least for a while, they will be the only people left who will have a clear notion of what is going on.’

And when things get too complicated and we end up surprised by the workings of the structures humanity has created? At that point, we will have to take a cue from those who turn up their collars against an unexpected wintry mix and sigh as they head outdoors: we will have to become a bit more humble. Those like Maimonides, who lived before the Enlightenment, recognised that there were bounds to what we could know, and it might be time to return to that way of thinking. Of course, we shouldn’t throw up our hands and say that, just because we can’t understand something, there is nothing more to learn. But at the same time, we may need to get reacquainted with our limits.