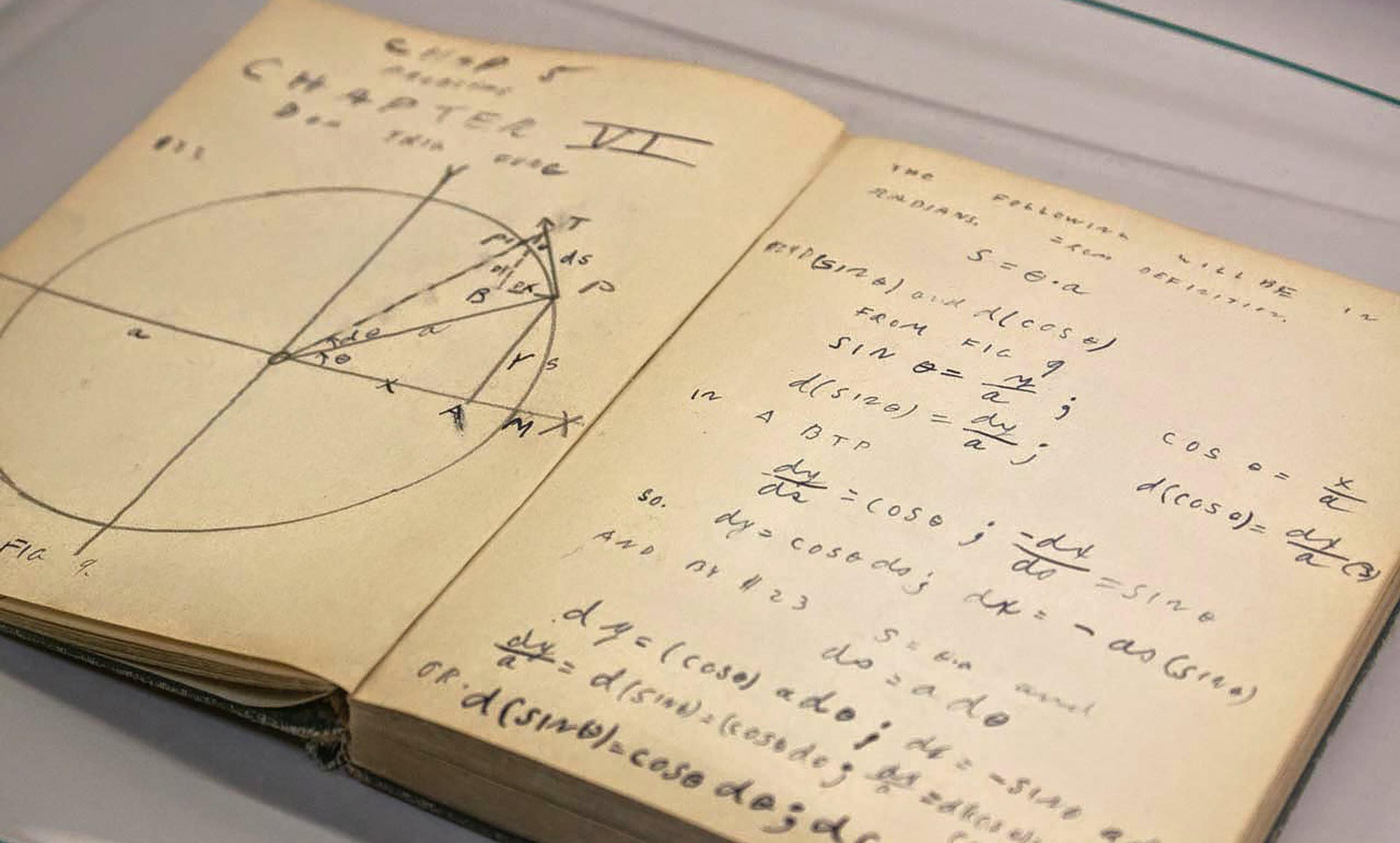

Photo by Barry Mazur/Flickr

Everyone had died – not that you’d know it, from how they were laughing about their poor choices and bad rolls of the dice. As a social anthropologist, I study how people understand artificial intelligence (AI) and our efforts towards attaining it; I’m also a life-long fan of Dungeons and Dragons (D&D), the inventive fantasy roleplaying game. During a recent quest, when I was playing an elf ranger, the trainee paladin (or holy knight) acted according to his noble character, and announced our presence at the mouth of a dragon’s lair. The results were disastrous. But while success in D&D means ‘beating the bad guy’, the game is also a creative sandbox, where failure can count as collective triumph so long as you tell a great tale.

What does this have to do with AI? In computer science, games are frequently used as a benchmark for an algorithm’s ‘intelligence’. The late Robert Wilensky, a professor at the University of California, Berkeley and a leading figure in AI, offered one reason why this might be. Computer scientists ‘looked around at who the smartest people were, and they were themselves, of course’, he told the authors of Compulsive Technology: Computers as Culture (1985). ‘They were all essentially mathematicians by training, and mathematicians do two things – they prove theorems and play chess. And they said, hey, if it proves a theorem or plays chess, it must be smart.’ No surprise that demonstrations of AI’s ‘smarts’ have focussed on the artificial player’s prowess.

Yet the games that get chosen – like Go, the main battlefield for Google DeepMind’s algorithms in recent years – tend to be tightly bounded, with set objectives and clear paths to victory or defeat. These experiences have none of the open-ended collaboration of D&D. Which got me thinking: do we need a new test for intelligence, where the goal is not simply about success, but storytelling? What would it mean for an AI to ‘pass’ as human in a game of D&D? Instead of the Turing test, perhaps we need an elf ranger test?

Of course, this is just a playful thought experiment, but it does highlight the flaws in certain models of intelligence. First, it reveals how intelligence has to work across a variety of environments. D&D participants can inhabit many characters in many games, and the individual player can ‘switch’ between roles (the fighter, the thief, the healer). Meanwhile, AI researchers know that it’s extremely difficult to get a well-trained algorithm to apply its insights in even slightly different domains – something that we humans manage surprisingly well.

Second, D&D reminds us that intelligence is embodied. In computer games, the bodily aspect of the experience might range from pressing buttons on a controller in order to move an icon or avatar (a ping-pong paddle; a spaceship; an anthropomorphic, eternally hungry, yellow sphere), to more recent and immersive experiences involving virtual-reality goggles and haptic gloves. Even without these add-ons, games can still produce biological responses associated with stress and fear (if you’ve ever played Alien: Isolation you’ll understand). In the original D&D, the players encounter the game while sitting around a table together, feeling the story and its impact. Recent research in cognitive science suggests that bodily interactions are crucial to how we grasp more abstract mental concepts. But we give minimal attention to the embodiment of artificial agents, and how that might affect the way they learn and process information.

Finally, intelligence is social. AI algorithms typically learn through multiple rounds of competition, in which successful strategies get reinforced with rewards. True, it appears that humans also evolved to learn through repetition, reward and reinforcement. But there’s an important collaborative dimension to human intelligence. In the 1930s, the psychologist Lev Vygotsky identified the interaction of an expert and a novice as an example of what came to be called ‘scaffolded’ learning, where the teacher demonstrates and then supports the learner in acquiring a new skill. In unbounded games, this cooperation is channelled through narrative. Games of It among small children can evolve from win/lose into attacks by terrible monsters, before shifting again to more complex narratives that explain why the monsters are attacking, who is the hero, and what they can do and why – narratives that aren’t always logical or even internally compatible. An AI that could engage in social storytelling is doubtless on a surer, more multifunctional footing than one that plays chess; and there’s no guarantee that chess is even a step on the road to attaining intelligence of this sort.

In some ways, this failure to look at roleplaying as a technical hurdle for intelligence is strange. D&D was a key cultural touchstone for technologists in the 1980s and the inspiration for many early text-based computer games, as Katie Hafner and Matthew Lyon point out in Where Wizards Stay Up Late: The Origins of the Internet (1996). Even today, AI researchers who play games in their free time often mention D&D specifically. So instead of beating adversaries in games, we might learn more about intelligence if we tried to teach artificial agents to play together as we do: as paladins and elf rangers.