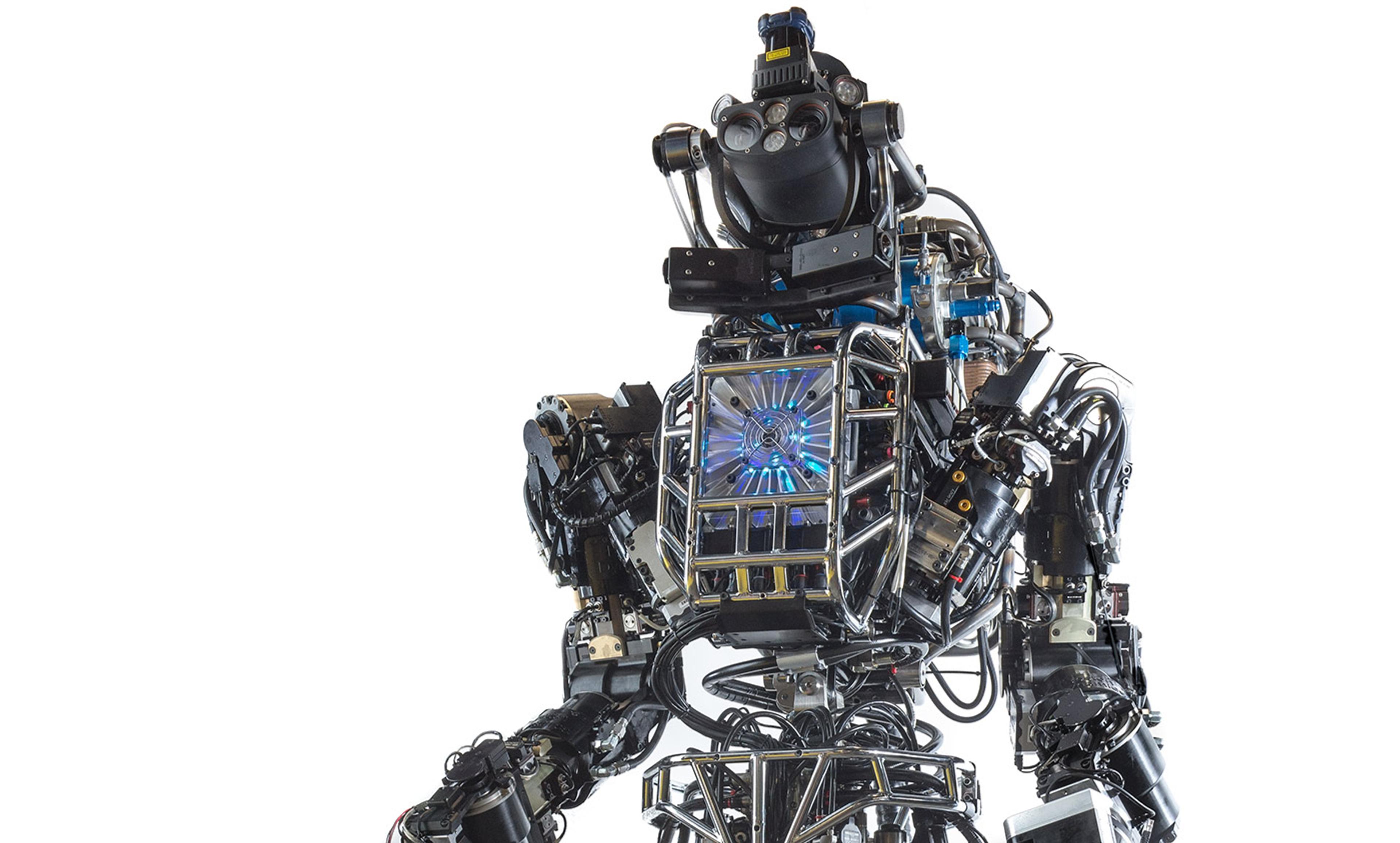

Courtesy Wikipedia

Autonomous weapons – killer robots that can attack without a human operator – are dangerous tools. There is no doubt about this fact. As tech entrepreneurs such as Elon Musk, Mustafa Suleyman and other signatories to a 2017 open letter to the United Nations have put it, autonomous weapons ‘can be weapons of terror, weapons that despots and terrorists use against innocent populations, and weapons [that can be] hacked to behave in undesirable ways’.

But this does not mean that the UN should implement a preventive ban on the further development of these weapons, as the signatories of the open letter seem to urge.

For one thing, it sometimes takes dangerous tools to achieve worthy ends. Think of the Rwandan genocide, where the world simply stood by and did nothing. Had autonomous weapons been available in 1994, maybe we would not have looked away. It seems plausible that if the costs of humanitarian interventions were purely monetary, with no soldiers' lives put at risk, then it would be easier to gain widespread support for such interventions.

For another thing, it is naive to assume that we can enjoy the benefits of the recent advances in artificial intelligence (AI) without being exposed to at least some downsides as well. Suppose the UN were to implement a preventive ban on the further development of all autonomous-weapons technology. Further suppose – already quite optimistically – that all armies around the world were to respect the ban, and abort their autonomous-weapons research programmes. Even with both of these assumptions in place, we would still have to worry about autonomous weapons. A self-driving car can be easily reprogrammed into an autonomous-weapons system: instead of instructing it to swerve when it sees a pedestrian, just teach it to run over the pedestrian.

To put the point more generally, AI technology is tremendously useful, and it already permeates our lives in ways we don’t always notice, and aren’t always able to comprehend fully. Given its pervasive presence, it is shortsighted to think that the technology’s abuse can be prevented if only the further development of autonomous weapons is halted. In fact, countering the much cruder autonomous weapons that are quite easily constructed by reprogramming seemingly benign AI technology, such as the self-driving car, might well require the sophisticated and discriminate autonomous-weapons systems that armies around the world are currently developing.

Furthermore, the notion of a simple ban at the international level, among state actors, tacitly betrays a view of autonomous weapons that is overly simplistic. It is a conception that fails to acknowledge the long causal backstory of institutional arrangements and individual actors who, through thousands of little acts of commission and omission, have brought about, and continue to bring about, the rise of such technologies. As long as the debate about autonomous weapons is framed primarily in terms of UN-level policies, the average citizen, soldier or programmer must be forgiven for assuming that he or she is absolved of all moral responsibility for the wrongful harm that autonomous weapons risk causing. But this assumption is false, and it might prove disastrous.

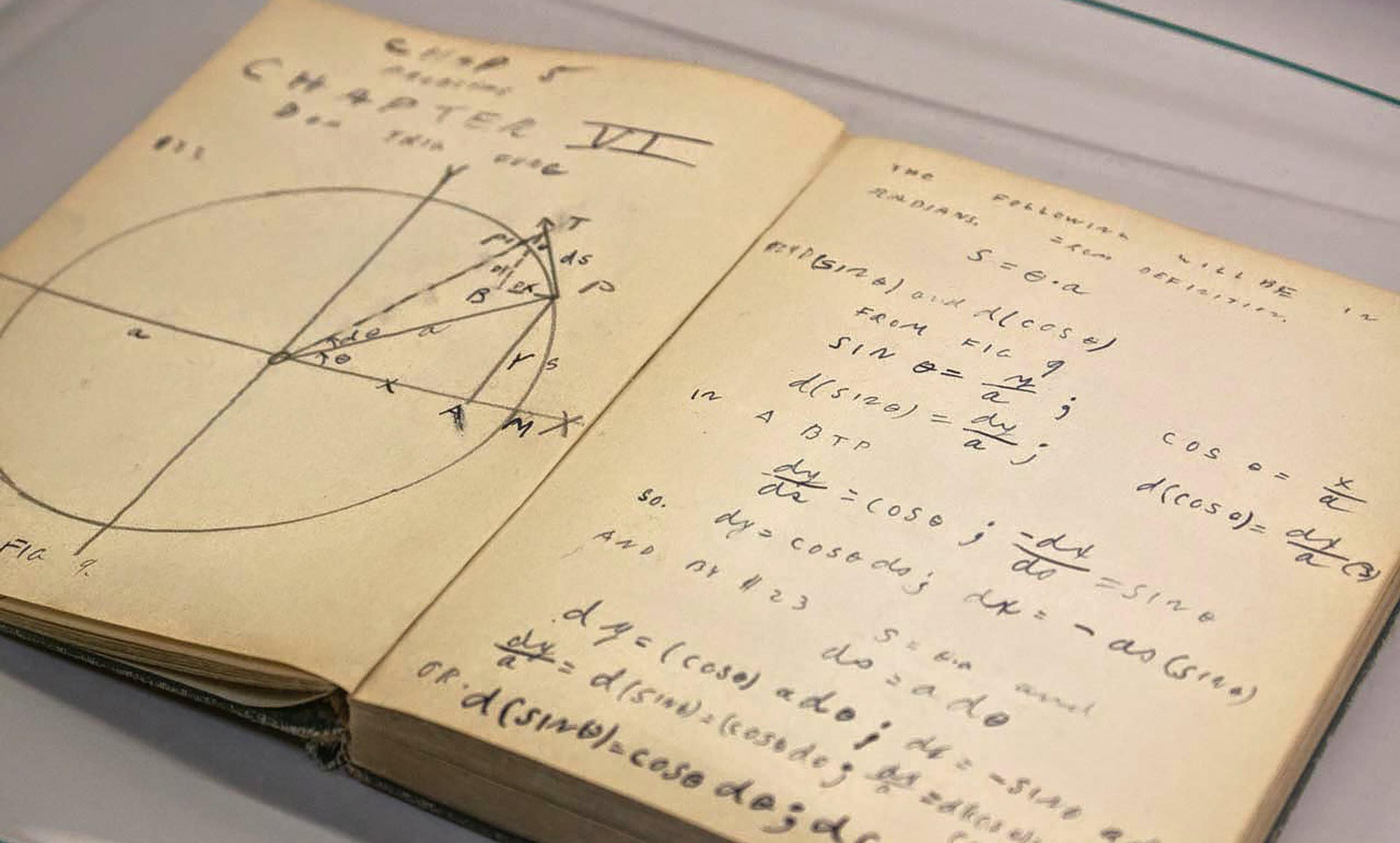

All individuals who in some way or other deal with autonomous-weapons technology have to exercise due diligence, and each and every one of us needs to examine carefully how his or her actions and inactions are contributing to the potential dangers of this technology. This is by no means to say that state and intergovernmental agencies do not have an important role to play as well. Rather, it is to emphasise that if the potential dangers of autonomous weapons are to be mitigated, then an ethic of personal responsibility must be promoted, and it must reach all the way down to the level of the individual decision-maker. For a start, it is of the utmost importance that we begin telling a richer and more complex story about the rise of autonomous weapons – a story that includes the causal contributions of decision-makers at all levels.

Finally, it is sometimes insinuated that autonomous weapons are dangerous not because they are dangerous tools but because they could become autonomous agents with ends and interests of their own. This worry is either misguided, or else it is a worry that a preventive ban on the further development of autonomous weapons could do nothing to alleviate. If superintelligence is a threat to humanity, we urgently need to find ways to deal effectively with this threat, and we need to do so quite independently of whether autonomous-weapons technology is developed further.