‘We have some big trouble,’ president John F Kennedy told his brother, the attorney general Bobby Kennedy, early in the morning of 16 October 1962.

A few hours later, the younger Kennedy was staring at pictures of Cuba taken by the U-2 surveillance aircraft. ‘Those sons of bitches, Russians,’ he said, sitting in the White House with a group of officials dedicated to overthrowing Fidel Castro.

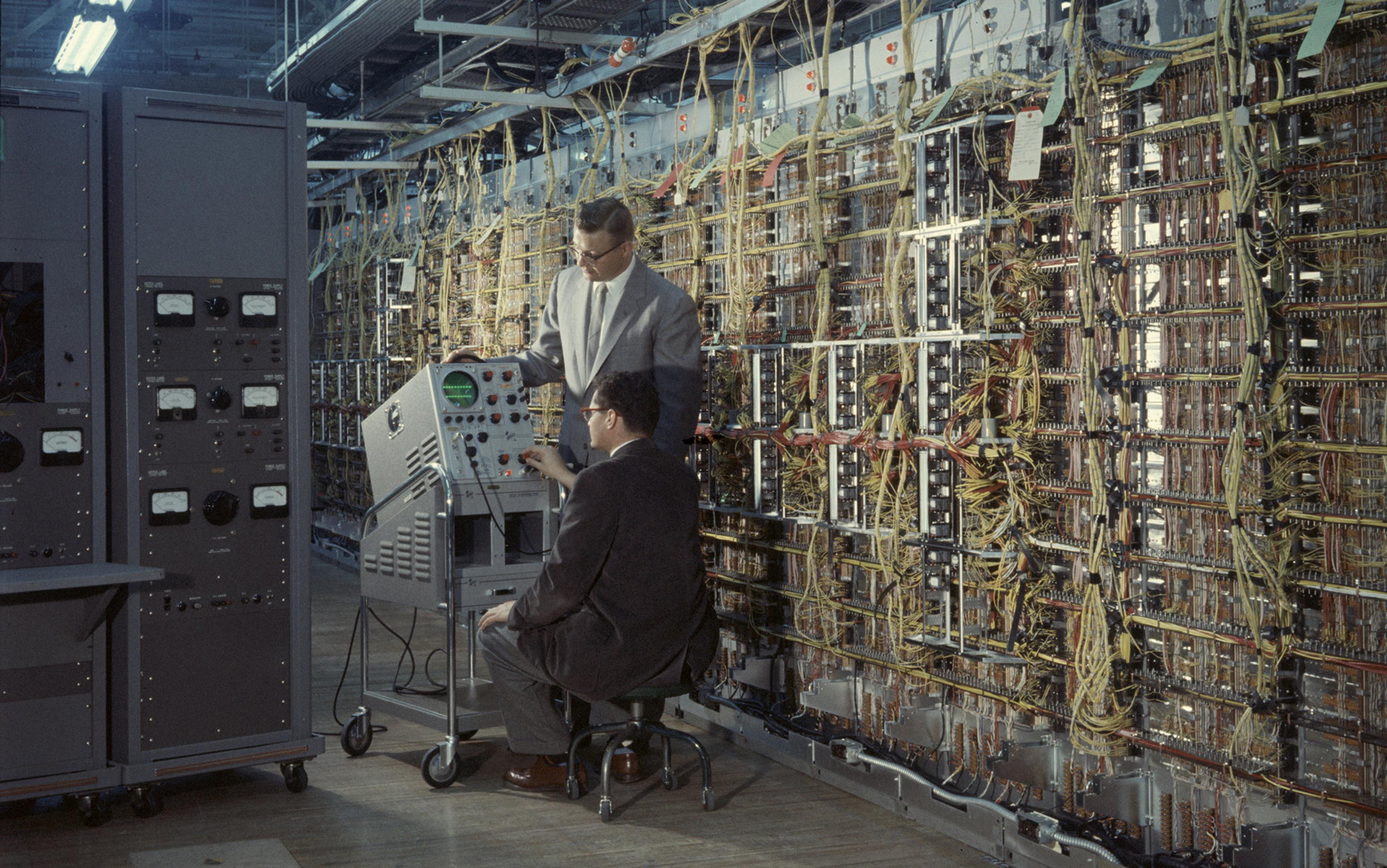

The pictures showed the telltale signs of Soviet missile launchers. The CIA had used a massive computer – which took the better part of a room to house – to calculate the precise measurements and capabilities of the missiles installed. Their dismal conclusion was that these missiles had a range of more than 1,000 miles, making them capable of reaching Washington, DC in just 13 minutes. This revelation sparked off a crisis that lasted almost two weeks. As the standoff over Cuba intensified, US military forces reached DEFCON 2, just one alert level before the start of nuclear war.

As military and civilian commanders clamoured for information on a minute-by-minute basis, computers such as the IBM 473L of the United States Air Force were being used for the first time in the midst of a conflict to process real-time information on how, for example, to allocate military forces. Yet even with the growing availability of computers, sharing that information among military commanders involved a time lag. The idea of having the information travel between connected computers did not yet exist.

After 13 days of scrambling forces to carry out a potential attack, the Soviet Union agreed to remove its missiles from Cuba. Nuclear war was averted, but the standoff also demonstrated the limits of command and control. With the complexities of modern warfare, how can you effectively control your nuclear forces if you cannot share information in real time? Unbeknown to most of the military’s senior leadership, a relatively low-level scientist had just arrived at the Pentagon to address that very problem. The solution he would come up with became the most famous project of the Pentagon’s Advanced Research Projects Agency, revolutionising not just military command and control but modern computing too.

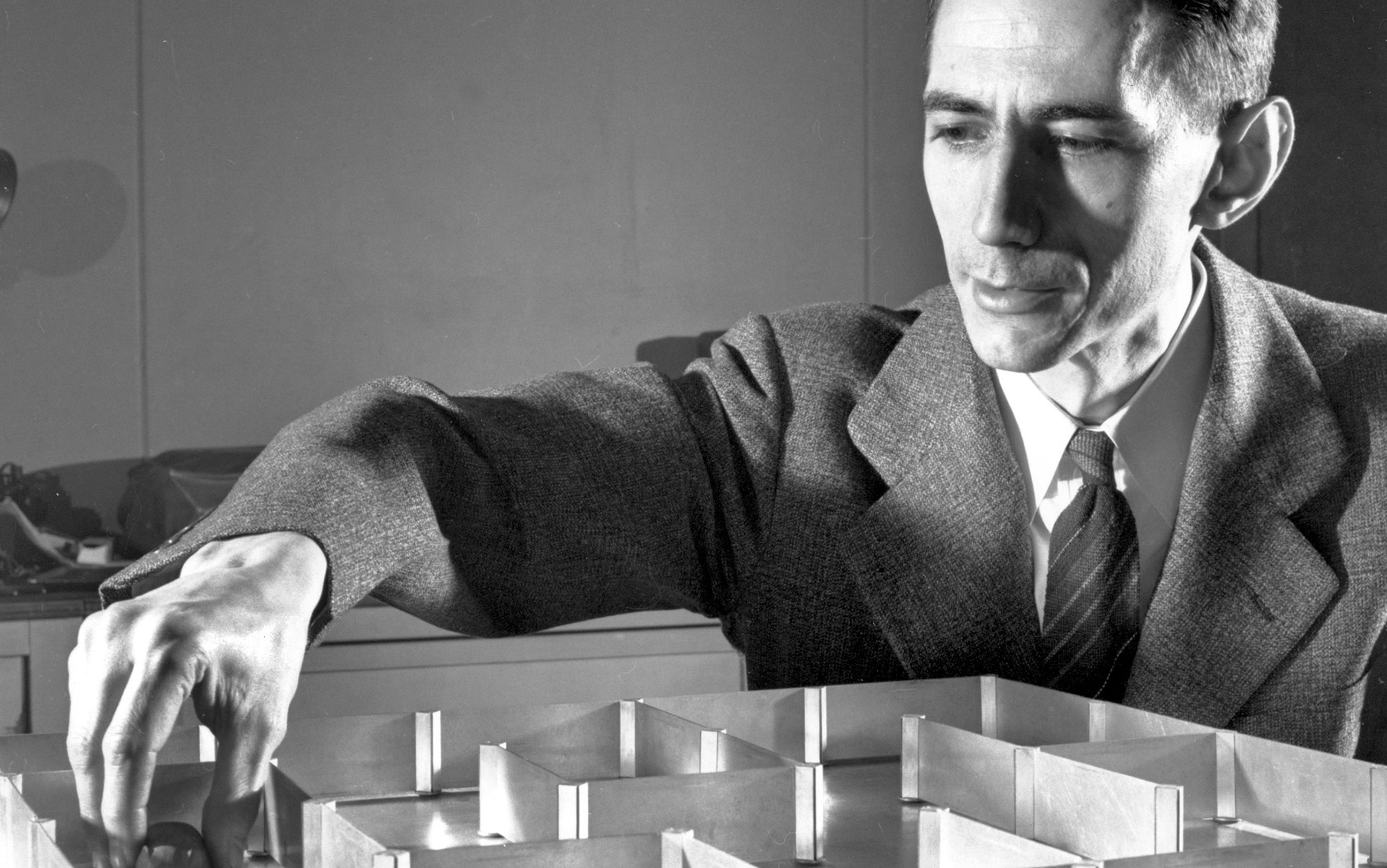

Joseph Carl Robnett Licklider – JCR, or simply Lick to his friends – spent much of his time at the Pentagon hiding. In a building where most bureaucrats measured their importance by proximity to the secretary of defence, Licklider was relieved when the Advanced Research Projects Agency, or ARPA, assigned him an office in the D‑Ring, one of the Pentagon’s windowless inner circles. There, he could work in peace.

One time, Licklider invited ARPA employees to a meeting at the Marriott hotel between the Pentagon and the Potomac River, to demonstrate how someone in the future would use a computer to access information. As the chief proselytiser for interactive computing, Licklider first wanted people to understand the concept. He was trying to demonstrate how, in the future, everyone would have a computer, people would interact directly with those computers, and the computers would all be connected together. He was demonstrating personal computing and the modern internet, years before they existed.

There is little debate that Licklider’s reserved but forceful presence in ARPA laid the foundations for computer networking – work that would eventually lead to the modern internet. The real question is: why? The truth is complicated, and it is impossible to divorce the internet’s origins from the Pentagon’s interest in the problems of war, both limited and nuclear. ARPA was established in 1958 to help the United States catch up with the Soviets in the space race, but by the early 1960s, it had branched off into new research areas, including command and control. The internet would likely not have been born without the military’s need to wage war, or at least it would not have been born at ARPA. Tracing the origins of computer networking at ARPA requires understanding what motivated the Pentagon to hire someone like Licklider in the first place.

It started with brainwashing.

‘My son! My son! Bless the lord,’ shouted Bessie Dickenson, standing on the ramp of Andrews Air Force Base in Maryland, as the child she had not seen in more than three years got off the plane. It was 1953, and their reunion was short-lived; Edward Dickenson, 23, would soon be court-martialled for cooperating with the enemy. He was one of almost two dozen POWs from the Korean War who initially opted to stay in North Korea, throwing in his lot with the communists. Dickenson then changed his mind and returned to the US, where he was initially welcomed, then called a traitor. At his court-martial, defence lawyers argued that the young man, who hailed from the almost fictional-sounding Cracker’s Neck, Virginia, was a simple country boy ‘brainwashed’ by the communists during his years in captivity. Unmoved, a panel of eight officers convicted him, and he was sentenced to 10 years in prison.

‘Brainwashing’ was a new term in the early 1950s, first introduced and popularised by Edward Hunter, a spy-turned-journalist who wrote about this dangerous new weapon that could sway men’s minds. The communists had been at work on it for years, but the Korean War was a turning point, Hunter argued. In 1958, he told the House Committee on Un-American Activities that, as a result of brainwashing tactics, ‘one in three American prisoners collaborated with the communists in some way, either as informers or propagandists’. The communists were racing ahead of the US in mental warfare, Hunter claimed.

Brainwashing soon made its way into the popular imagination with the publication of Richard Condon’s bestselling novel The Manchurian Candidate (1959), in which a POW, the son of a prominent family, returns to the US as a sleeper agent, trained for assassination (the lasting popularity of this idea is seen in the modern series Homeland, which depicts an American POW ‘turned’ by Al-Qaeda).

Whatever the truth of actual brainwashing incidents, the battle for people’s minds loomed large in the late 1950s, and was the subject of serious Pentagon discussions. The US and the Soviet Union were engaged in an ideological – and psychological – battle. Eager to exploit the science of human behaviour as it had physics and chemistry, the Pentagon commissioned a high-level panel at the Smithsonian Institution to recommend the best course of action.

The Smithsonian’s highly influential Research Group in Psychology and the Social Sciences was established in 1959 and tasked to advise the Pentagon on long-term research plans. While the panel’s full report was classified, Charles Bray, who headed the group, published some of its unclassified findings in the paper ‘Toward a Technology of Human Behaviour for Defence Use’ (1962), which outlined a broad role for the Pentagon in psychology:

In any future war of significant length, there will be ‘special warfare’, guerrilla operations, and infiltration. Subversion of our troops and populations will be attempted and prisoners of war will be subjected to ‘brainwashing’. The military establishment must be prepared to assist in promoting recuperation and cohesiveness within possibly disorganised civilian populations, while attempting to shift loyalties within enemy populations.

Psychology during the Cold War had fast become a darling of the military. ‘By the early 1960s, the DoD [US Department of Defense] was spending almost all of its social science research budget on psychology, around $15 million annually, more than the entire budget for military research and development before the Second World War,’ wrote Ellen Herman in The Romance of American Psychology (1995), her survey of the field. Of course, the Pentagon’s interests, and the Smithsonian panel’s recommendations, were about more than just brainwashing. Bray wrote of applications ranging from ‘persuasion and motivation’ to the role of computers ‘as a man-machine, scientist-computer system’. The Smithsonian panel eventually recommended to the Pentagon’s director of defence research and engineering that ARPA conduct a comprehensive programme that would include both behavioural and computer sciences.

That recommendation was translated by Pentagon officials into two separate assignments handed down to ARPA: one in the behavioural sciences, which would include everything from the psychology of brainwashing to quantitative modelling of society, and a second in command-and-control, to focus on computers.

Though the Pentagon treated ARPA’s command-and-control and behavioural sciences assignments as distinct, the archives of the Smithsonian panel make clear that its members viewed the areas as deeply related: both were about creating a science out of human behaviour, whether it was humans interacting with machines or with other people. Who better to lead those twin efforts than a psychologist interested in computers?

On 24 May 1962, Licklider, a research psychologist who was working for the tech company Bolt, Beranek and Newman Inc in Massachusetts, was offered a job at ARPA heading the ‘Behavioural Sciences Council’. The work would be onerous and exhausting, he was told, and like most government positions of the time would not pay well either, between $14,000 and $17,000.

Licklider’s initial field was psychoacoustics, the perception of sound, but he had become interested in computers while working at the Lincoln Laboratory at the Massachusetts Institute of Technology (MIT) on ways to protect the US from a Soviet bomber attack. There, Licklider had been involved in the Semi-Automatic Ground Environment (SAGE), the Cold War computer system designed to link 23 air-defence sites to coordinate tracking of Soviet bombers in case of an attack. The SAGE computer would work with the human operators to help them to calculate the best way to respond to an incoming Soviet bomber. It was, in essence, a decision-making tool for nuclear Armageddon, and it spawned decades of popular culture notions of doomsday computers, from films such as WarGames (1983) to the Terminator franchise (1984-).

The reality was that, by the time SAGE was deployed, it was rendered almost obsolete by the advent of intercontinental ballistic missiles. Still, for scientists such as Licklider, who worked on SAGE, the experience transformed how they saw computers. Prior to SAGE, they were big mainframes that used batch processing, meaning that programs were entered one at a time, often by punch card, and then the computer did the calculations and spat out answers. The idea that someone might sit daily in front of a computer – and interact with it – was unfathomable to most. But with SAGE, operators for the first time had individual consoles that displayed information visually and, even more important, they worked directly with those consoles using buttons and light pens. SAGE was the first demonstration of interactive computing, where users could give commands directly, and time-sharing, where multiple users could work with a single computer.

Based on his experience from SAGE, Licklider envisioned the modern conception of interactive computing: a future where people worked on personal consoles at their desks, rather than having to walk into a large room and feed punch cards into machines to crunch numbers. What seems so obvious today was revolutionary in the early 1960s, when computers were still large, exotic creatures housed in university laboratories or in government facilities, and used for specialised military purposes. That vision meant doing away with batch processing, where a single user worked on a computer for a single purpose. Instead, users at remote consoles would be able to tap into the resources of a single computer, performing different functions almost simultaneously.

Licklider’s article ‘The Truly SAGE System; or, Toward a Man-Machine System for Thinking’ (1957) was one of the first manifestos outlining this new approach, and marked him as a leader of a group of scientists who wanted to transform computing. In 1960, he took this thinking a step further with the publication of what would become a seminal paper on the path toward the internet. Titled simply ‘Man-Computer Symbiosis’, the article was not the work of an ordinary computer scientist, as demonstrated by his opening lines:

The fig tree is pollinated only by the insect Blastophaga grossorun. The larva of the insect lives in the ovary of the fig tree, and there it gets its food. The tree and the insect are thus heavily interdependent: the tree cannot reproduce without the insect; the insect cannot eat without the tree; together, they constitute not only a viable but a productive and thriving partnership. This cooperative ‘living together in intimate association, or even close union, of two dissimilar organisms’ is called symbiosis.

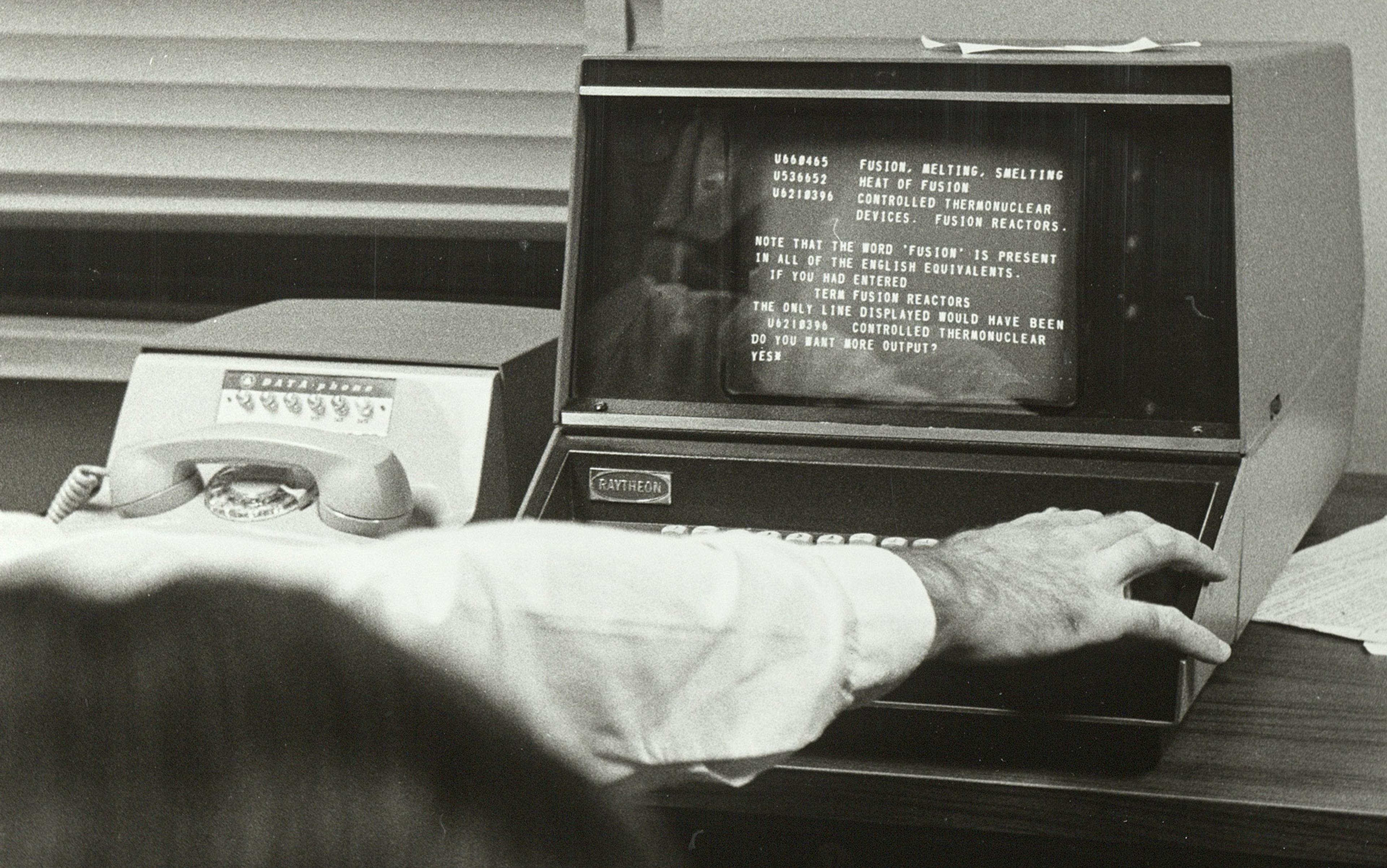

This symbiosis between man and machine was fundamentally different from the batch-processing computers of the day; it also differed from the vision of the hard-core artificial intelligence (AI) enthusiasts, who were pinning their hopes on thinking computers. Licklider suspected that true AI was much further away than some thought, and that there would be an interim period dominated by this symbiosis of man and machine. The picture he painted was of people using a network of computers, ‘connected to one another by wide-band communication lines and to individual users by leased-wire services’.

Military applications were certainly high on Licklider’s list: after all, his ideas were prompted by SAGE, and his essay addressed the needs of military commanders. Yet his vision was also much broader, and in his paper he included corporate leaders in need of quick decisions, and libraries whose collections would be linked together. Licklider wanted people to understand that, more than any specific application, what he was describing was an entire metamorphosis of man and machine interaction. Personal consoles, time-sharing, and networking – the article essentially spelled out all the underpinnings of the modern internet.

But all it was then was a vision; someone had to develop the underlying technologies to make it happen. When Licklider was offered the ARPA job in 1962, the position was low-pay, high-stress, and at an obscure agency barely four years old. Its employees were all temporary hires, with the expectation that they would leave after just a few years. He agreed to take the position for one year, because it offered him the opportunity to make his vision of computer networking a reality.

The same year that Licklider’s manifesto on computer networking was released, Paul Baran, an analyst at the RAND Corporation in California, published ‘Reliable Digital Communications Systems Using Unreliable Network Repeater Nodes’ (1960). The paper was Baran’s proposal for using a redundant communications network to ensure that the US could still launch its nuclear weapons after an initial attack. His description, like Licklider’s, bore a lot of similarities to the structure of the modern internet.

Years later, as people began to explore the internet’s origins, a debate emerged over who could rightfully be considered the originator of the idea. The problem with pinning it to any one person, or idea, is that a number of people were thinking about networking computers in the 1960s. The real question is who was in a position to actually translate this vision into a nuts-and-bolts reality. RAND was a possibility: although it was more of a think-tank than a research agency, it shared ARPA’s flexibility. The US Air Force might assign broad national security problems to RAND, which gave great intellectual freedom to its workers, including some of the top nuclear theorists of the 20th century. One was Herman Kahn, whose ruminations on ‘wargasm’, his term for all-out nuclear war, made him great fodder for caricature.

Baran, however, was thinking about practical solutions to nuclear war. And in 1960, he was working with colleagues at RAND on simulations to test the resiliency of the communications system in case of nuclear attack. ‘We built a network like a fishnet, with different degrees of redundancy,’ he recalled in an interview with Wired magazine in 2001. ‘A net with the minimum number of wires to connect all the nodes together, we called 1. If it was crisscrossed with twice as many wires, that was redundancy level 2. Then 3 and 4. Then we threw an attack against it, a random attack.’

Picture the communications network as a series of nodes: if there is just one connection between two nodes and it is destroyed in a nuclear attack, it is no longer possible to communicate. Now imagine nodes with multiple connections to other nodes, providing an alternative path of communication if some nodes are taken out. The question for Baran was, how much redundancy is enough? Through simulations of an attack, he and his colleagues found that, with three levels of redundancy, the probability that any two surviving nodes could still communicate after a nuclear attack was extremely high. ‘The enemy could destroy 50, 60, 70 per cent of the targets or more, and it would still work,’ he said. ‘It’s very robust.’
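The kind of experiment Baran describes can be sketched in a few lines of modern code. What follows is only an illustration, not a reconstruction of the RAND simulations: the square grid layout, the comb-shaped minimal net used for level 1, the function names `build_net` and `surviving_fraction`, and the choice to measure the share of surviving nodes that remain in the largest connected group are all assumptions made for the example.

```python
import random
from collections import deque

# Link directions on a square "fishnet". Using the first k of them gives
# roughly k links per node, standing in for redundancy levels 2, 3 and 4.
# (Assumed layout for illustration; not taken from Baran's paper.)
OFFSETS = [(0, 1), (1, 0), (1, 1), (1, -1)]


def build_net(size, redundancy):
    """Return {node: set of neighbours} for a size x size net."""
    links = {(r, c): set() for r in range(size) for c in range(size)}

    def connect(a, b):
        if b in links:  # ignore links that would fall off the edge of the net
            links[a].add(b)
            links[b].add(a)

    for r, c in list(links):
        if redundancy == 1:
            # Minimal net: each row is a chain, and one column joins the rows.
            connect((r, c), (r, c + 1))
            if c == 0:
                connect((r, c), (r + 1, c))
        else:
            for dr, dc in OFFSETS[:redundancy]:
                connect((r, c), (r + dr, c + dc))
    return links


def surviving_fraction(links, destroyed_fraction, rng):
    """Destroy a random share of nodes; return the share of survivors
    that can still reach one another in the largest connected group."""
    nodes = list(links)
    keep = round(len(nodes) * (1 - destroyed_fraction))
    survivors = set(rng.sample(nodes, keep))
    if not survivors:
        return 0.0

    best, seen = 0, set()
    for start in survivors:
        if start in seen:
            continue
        seen.add(start)
        queue, group = deque([start]), 0
        while queue:  # breadth-first search over surviving neighbours
            node = queue.popleft()
            group += 1
            for nb in links[node]:
                if nb in survivors and nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        best = max(best, group)
    return best / len(survivors)


if __name__ == "__main__":
    for level in (1, 2, 3, 4):
        row = [surviving_fraction(build_net(20, level), d, random.Random(1))
               for d in (0.3, 0.5, 0.7)]
        print(level, [f"{x:.2f}" for x in row])
```

Because each redundancy level here only adds links to the one below it, the same random attack can never leave a higher level worse connected than a lower one. How closely the numbers track Baran's own results is not something a toy grid like this can establish.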

Baran later explained his thinking as being aimed squarely at concerns over the hair-trigger alert that both the US and the Soviet Union maintained with nuclear weapons. Having the ability to survive a nuclear attack would, in theory, make deterrence more stable by taking away the temptation for the other side to launch a first strike. ‘The early missile-control systems were not physically robust,’ he said. ‘Thus, there was a dangerous temptation for either party to misunderstand the actions of the other and fire first. If the strategic weapons command-and-control systems could be more survivable, then the country’s retaliatory capability could better allow it to withstand an attack and still function; a more stable position.’

For the idea to work, the network would have to be digital rather than analogue, because an analogue signal would degrade as it travelled. It was an ambitious new idea, and the problem was that RAND, which Baran joked stood for ‘research and no development’, could not create such a system on its own.

RAND could not build the network, but the US Air Force could, and its leaders were interested in Baran’s idea. Before work started on it, however, a bureaucratic reorganisation pushed the project over to the Defense Communications Agency – a stodgy Pentagon bureaucracy that Baran suspected was stuck in the analogue world. Better to end the project, he figured, than to see it botched. ‘I pulled the plug on the whole baby. There was no point. I said: “Just wait until some competent agency comes around.”’ That competent agency would end up being ARPA.

Licklider arrived at ARPA the same month that the superpowers almost went to war over the missiles in Cuba. For senior Pentagon officials, it seemed obvious that ARPA’s work on command and control was about nuclear weapons. William Godel, then the deputy director of the agency, recalled that ARPA’s new assignment was supposed to look at alternatives to Looking Glass, the code name for the military’s ‘nuclear Armageddon’ aircraft that flew around-the-clock on alert. At the Pentagon, Harold Brown, the director of defence research and engineering, thought that he had assigned ARPA to work on problems dealing with the command and control of nuclear weapons.

Brown, who wrote the assignment, recalled being influenced by one of his deputies, Robert Prim, a mathematician from Bell Labs. Prim was heavily focused on technologies for the command and control of nuclear weapons, including research that eventually led to Permissive Action Links, security devices for nuclear weapons. Brown was unhappy with the pace of developments in the military services, so he assigned command-and-control research to ARPA in hopes that it might come up with something better.

The need to better control nuclear weapons loomed large in the fall of 1962. Just a few weeks after he started work, Licklider attended an Air Force-sponsored conference on command-and-control systems held in Hot Springs, Virginia, where the Cuban missile crisis had been top of the agenda.

Yet the meeting had been lacklustre, with no one really coming up with any creative ideas. On the train back to Washington, DC, Licklider and the MIT professor Robert Fano struck up a conversation, and soon a host of other computer scientists on the train got involved. Licklider used it as another opportunity to proselytise for his vision: creating a better command-and-control system required coming up with an entirely new framework for man-machine interaction.

Licklider was well aware of the Pentagon’s interest in command and control of nuclear weapons. One of his early descriptions for work on computer networking referenced the need to link computers that would be part of the nascent National Military Command System used to control nuclear weapons. His vision was for something much broader, however. When he met with Jack Ruina, the head of ARPA, and Eugene Fubini, one of Brown’s deputies, Licklider pitched interactive computing to them. Rather than focusing strictly on technologies to improve command and control, he wanted to transform the way people worked with computers. ‘Who can direct a battle when he’s got to write a program in the middle of that battle?’ Licklider asked.

The new ARPA research manager was determined to show that command and control could be something more important than just building a computer to control nuclear weapons. Whenever he met Pentagon officials who’d start to talk about command and control, Licklider shifted the conversation to interactive computing. ‘I did realise that the guys in the secretary’s office started off thinking that I was running the Command and Control Office, but every time I possibly could I got them to say interactive computing,’ Licklider said. ‘I think eventually that was what they thought I was doing.’

Pentagon officials did not quite understand what Licklider was talking about, but it sounded interesting, and Ruina agreed, or at least he agreed that Licklider was smart, and the specifics were not important. When the secretary of defence ‘asked to see me about something, he never asked to see me about computer science,’ Ruina said, ‘he asked to see me about ballistics defence or nuclear testing detection. So those were the big issues.’ Licklider’s work ‘was a small but interesting programme on the side’.

That was just fine. At the young agency, newcomers such as Licklider were creating a freewheeling culture, and managers were given broad latitude to establish research programmes that might be tied only tangentially to a larger Pentagon goal. The most ambitious project he launched took the name Project MAC, short for Machine-Aided Cognition or Multiple-Access Computer, through a wide-ranging $2 million grant to MIT. Project MAC covered the span of interactive computing, from AI and graphics to time-sharing and networking. ARPA provided MIT with autonomy, so long as it used the money for the goals prescribed by the agency.

Licklider, who focused on vision over reputation, also took a risk on more unknown scientists, such as Doug Engelbart, at the Stanford Research Institute. By the time Licklider was done handing out contracts, his centres of excellence stretched from the east coast to the west, and included MIT, Berkeley, Stanford, the Stanford Research Institute, Carnegie Tech, RAND, and System Development Corporation.

In April 1963, just six months after joining ARPA, Licklider dashed off a six-page memo to the people he was funding, in what would become one of the more famous missives of his time at the agency. He addressed it to the ‘Members and Affiliates of the Intergalactic Computer Network’, a tongue-in-cheek way of telling the ARPA-funded researchers they were part of a broader community working toward a common goal:

At this extreme, the problem is essentially the one discussed by science-fiction writers: ‘How do you get communications started among totally uncorrelated “sapient” beings?’ … It seems to me to be interesting and important, nevertheless, to develop a capability for integrated network operation. If such a network as I envisage nebulously could be brought into operation, we would have at least four large computers, perhaps six or eight small computers, and a great assortment of disk files and magnetic tape units – not to mention the remote consoles and teletype stations – all churning away.

It was the clearest articulation yet of his vision for interactive computing, and vision is what mattered in 1963, because much of what Licklider was building was a foundation of research, not an actual computer network. The lack of anything concrete to show from the initial research was also potentially a liability, because few in the Pentagon at the time really understood the full potential of computers. When Ruina left in 1963, his replacement, Robert Sproull, a scientist from Cornell University in Ithaca, New York, almost cancelled Licklider’s entire programme. After the heyday of ARPA’s first year, when it managed space programmes and had a half-a-billion-dollar budget, funding for the agency had been cut almost in half by the mid-1960s, to $274 million.

Sproull was under orders to trim $15 million from ARPA’s budget, and he immediately looked for programmes that had not appeared to produce much in the past two years. Licklider’s computer work ended up at the top of his list, and the new ARPA director was on the verge of shutting it down.

Licklider dealt with the threat of cancellation with typical calm. ‘Okay, look, before you cancel this programme, why don’t you come around and look at some of the labs that are doing my work,’ he suggested. Sproull went with Licklider to three or four of the major computer centres around the country and was duly impressed. Licklider kept his funding. Asked decades later about whether he was the man who ‘almost killed the internet’, Sproull laughed and said: ‘Yes.’

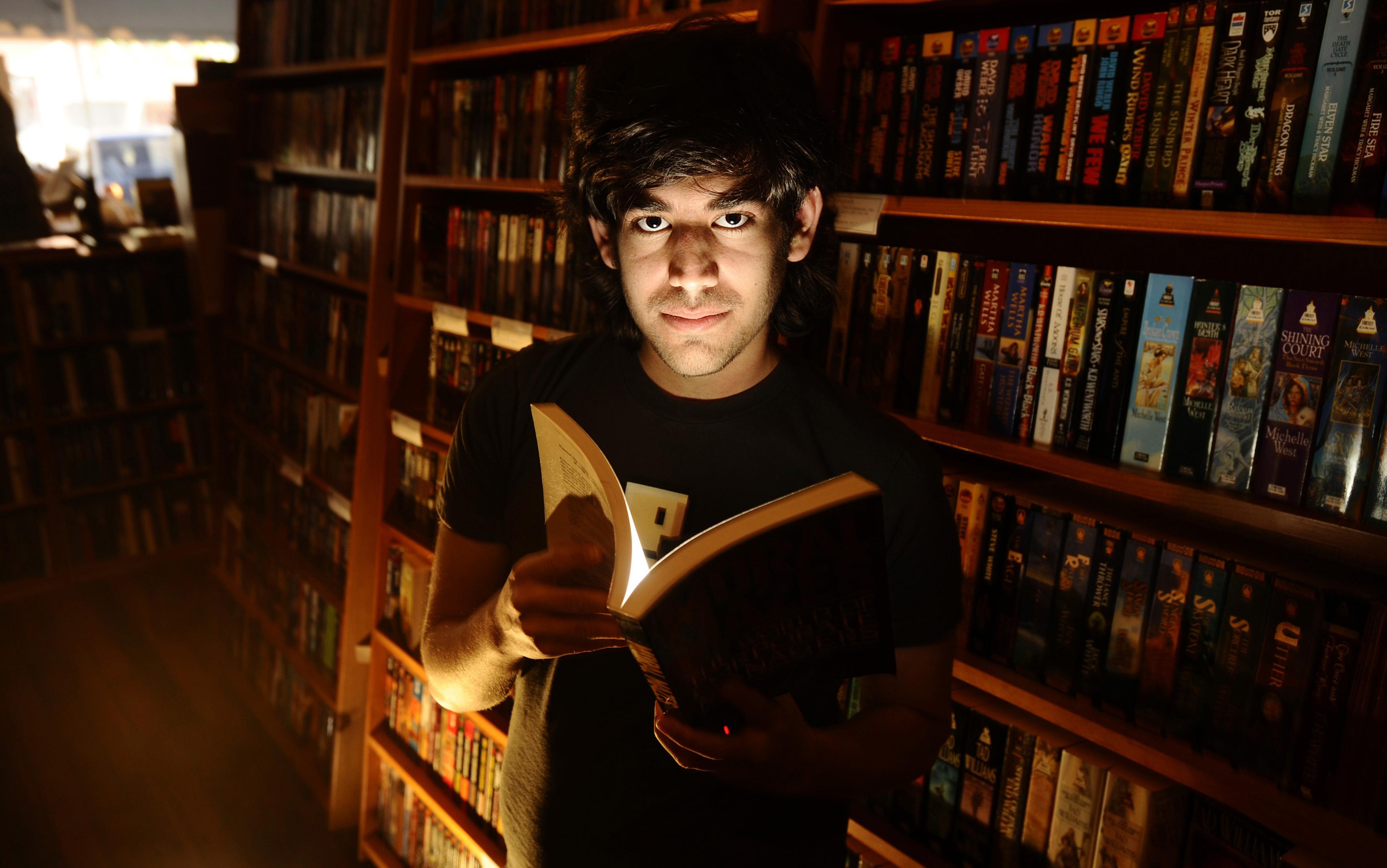

By the time Licklider left ARPA in 1964, his investments were bearing fruit, small and large. At MIT, the ARPA-sponsored time-sharing system spawned the first email program, called MAIL, written by a student named Tom Van Vleck. At Stanford Research Institute, the previously unknown Engelbart had experimented with different tools that would allow users to interact directly with computers; after trying out devices such as light pens, he eventually settled on a small wooden block, which he called a ‘mouse’.

Ivan Sutherland, a young computer scientist who had already forged an impressive reputation for his work in computer graphics, replaced Licklider, but found himself facing resistance from computer scientists. He tried to get the University of California, Los Angeles (UCLA) to create a network using three of its computers, but the researchers involved did not see how it would benefit them. Academics were scared that networking computers would allow others to tap into their coveted computer resources. Steve Crocker, then a graduate student at UCLA, recalled the battles for computer time: ‘There came a moment when the tension was so high that the police had to be called to tear the people apart who were about to fight with each other.’ When ARPA tried to carry out that first computer-networking project at UCLA, it ran into similar resistance. The head of the computer centre ‘decided that being under the gun from ARPA to produce on some timely basis was not consistent with the way a university ought to operate’ and ‘pulled the plug’ on the ARPA contract, Crocker recalled.

Sutherland called the thwarted computer-networking project ‘my major failure’. It was not actually a failure; it was just too soon. Shortly after he left, his deputy, Robert Taylor, took over. Taylor did not have Sutherland’s or Licklider’s reputation, but he did have vision and determination. In 1965, he approached Charles Herzfeld, the new ARPA director, in his E-Ring Pentagon office, and laid out his idea for a computer network that would link geographically dispersed sites. Herzfeld had long had a keen interest in computers. As a graduate student at the University of Chicago, he attended what he described as a life-changing lecture by John von Neumann, the famed mathematician and physicist, who talked about the Electronic Numerical Integrator and Computer (ENIAC), the Second World War computer that was built to speed up the calculation of artillery firing tables. Later, at ARPA, Herzfeld befriended Licklider, whose proselytising at ARPA about man-computer symbiosis had an equally profound effect on Herzfeld. ‘I became a disciple of Licklider early on,’ he recalled.

Taylor was not proposing Licklider’s small-scale laboratory experiments of a couple of years earlier. Taylor wanted to create an actual cross-country computer network – something that had never been done before and would require significant new technology, investment and arm-twisting of researchers.

‘How much money do you need to get off the ground?’ Herzfeld asked.

‘A million dollars or so, just to get it organised,’ Taylor replied.

‘You’ve got it,’ Herzfeld replied.

And that was it. The conversation to approve the money for the ARPANET, the computer network that would eventually become the internet, took just 15 minutes. The ARPANET was a product of that extraordinary confluence of factors at the agency in the early 1960s: the focus on important but loosely defined military problems, freedom to address those problems from the broadest possible perspective and, crucially, an extraordinary research manager whose solution, while relevant to the military problem, extended beyond the narrow interests of the DoD. An assignment grounded in Cold War paranoia about men’s minds had morphed into concerns about the security of nuclear weapons and had now been reimagined as interactive computing, which would bring forth the age of personal computing.

This is an edited extract from the book ‘The Imagineers of War’ by Sharon Weinberger. © copyright 2017 by Sharon Weinberger. Published by arrangement with Alfred A Knopf, an imprint of The Knopf Doubleday Publishing Group, a division of Penguin Random House LLC.