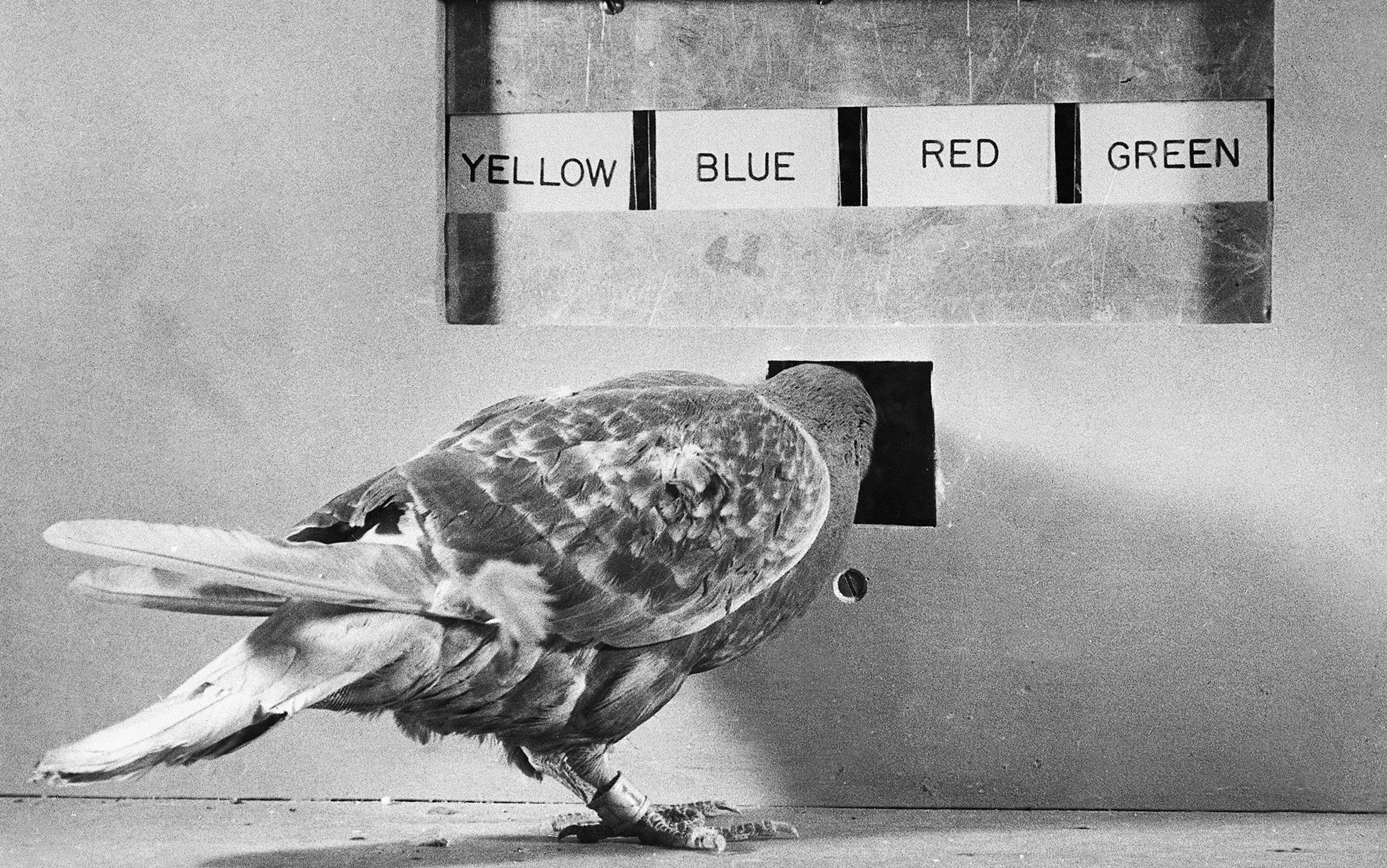

When I go online, I feel like one of B F Skinner’s white Carneaux pigeons. Those pigeons spent the pivotal hours of their lives in boxes, obsessively pecking small pieces of Plexiglas. In doing so, they helped Skinner, a psychology researcher at Harvard, map certain behavioural principles that apply, with eerie precision, to the design of 21st‑century digital experiences.

Skinner trained his birds to earn food by tapping the Plexiglas. In some scenarios, the pigeons got food every time they pecked. In other arrangements, Skinner set timed intervals between rewards. After the pigeon got food, the system stopped dispensing treats for, say, 60 seconds. Once that period had elapsed, if the bird pecked, it got another payday. The pigeons never quite mastered the timing, but they got close. Then Skinner began to vary the intervals between food availability at random. One time there’d be food available again in 60 seconds. The next, it might be after five seconds, or 50 seconds, or 200 seconds.

Under these unstable conditions, the pigeons went nuts. They’d peck and peck. One pigeon hit the Plexiglas 2.5 times per second for 16 hours. Another tapped 87,000 times over the course of 14 hours, getting a reward less than 1 per cent of the time.

So? Well, here’s a simple illustration of how Skinner’s pigeon research applies to contemporary digital life. I’ve picked a hypothetical example: let’s call him Michael S, a journalist. Sending and receiving emails are important parts of his job. On average, he gets an email every 45 minutes. Sometimes, the interval between emails is only two minutes. Other times, it’s three hours. Although many of these emails are unimportant or stress-inducing, some of them are fun. Before long, whenever Michael S has an internet connection, he starts refreshing his email inbox every 30 minutes, then every five minutes, and then, occasionally, every two minutes. Soon, it’s a compulsive tic – the pecking pigeon of web usage.

Should we blame Michael S for wasting hours of his life hitting a small button? We could. He does have poor self-control, and he chose a profession in which email is an important form of communication.

Then again, would we blame Skinner’s pigeons, stuck in a box, pecking away until they get their grains and hemp seeds, while a pioneering researcher plumbs the glitches in their brains? Who’s in charge, really, of this whole scenario? The pigeons? Or Skinner, who designed the box in the first place?

By 2015, it’s a platitude to describe the internet as distracting. We casually talk about digital life in terms of addiction and compulsion. In the 2000s, users nicknamed the first mainstream smartphone the crackberry. In conversation, we describe basic tools and apps – Facebook, email, Netflix, Twitter – using terms otherwise reserved for methamphetamine and slot machines.

Psychologists have been discussing the possibility of internet addiction since 1996, just three years after the release of the first mainstream web browser. But there’s no consensus about how to diagnose internet addiction, or whether it’s even a real thing. Estimates of its prevalence vary wildly. Unlike heroin, the internet doesn’t kill people, and has obvious utility. Plus, it can be difficult to disentangle the medium (the internet) from the addictive experience (pornography, for example, or online gambling).

In any case, these diagnostic categories tend toward extremes. They don’t seem to encompass the full range of experiences on display when people joke about crackberries, or talk about getting sucked into Tumblr and Facebook.

Yet, for millions of people, the internet is often understood in terms of compulsion. Critics blame the internet itself for this state of affairs, or they blame individual users. Neither makes much sense. The internet is not a predetermined experience. It’s a system of connections and protocols. There’s nothing about a global computer network that necessitates addiction-like behaviours.


So should individuals be blamed for having poor self-control? To a point, yes. Personal responsibility matters. But it’s important to realise that many websites and other digital tools have been engineered specifically to elicit compulsive behaviour.

A handful of corporations determine the basic shape of the web that most of us use every day. Many of those companies make money by capturing users’ attention, and turning it into pageviews and clicks. They’ve staked their futures on methods to cultivate habits in users, in order to win as much of that attention as possible. Successful companies build specialised teams and collect reams of personalised data, all intended to hook users on their products.

‘Much as a user might need to exercise willpower, responsibility and self-control, and that’s great, we also have to acknowledge the other side of the street,’ said Tristan Harris, an ethical design proponent who works at Google. (He spoke outside his role at the search giant.) Major tech companies, Harris told me, ‘have 100 of the smartest statisticians and computer scientists, who went to top schools, whose job it is to break your willpower.’

In short, it’s not exactly a fair fight.

It is a testament to the strange relationship between Silicon Valley and compulsion that when Nir Eyal’s book on how to build habit-forming products was published, it generated no controversy. Instead, Hooked (2014), which teaches web designers to ‘create a craving’ in their users, was a bestseller. Prominent tech writers praised it, as did the founder of WordPress. Eyal hosted a symposium at Stanford University.

As a consultant to Silicon Valley startups, Eyal helps his clients mimic what he calls the ‘narcotic-like properties’ of sites such as Facebook and Pinterest. His goal, Eyal told Business Insider, is to get users ‘continuing through the same basic cycle. Forever and ever.’ In Hooked, he sets out to answer a simple question: ‘How is it that these companies, producing little more than bits of code displayed on a screen, can seemingly control users’ minds?’

The answer, he argues, is a simple four-step design model. Think of Facebook’s news feed. The first two steps are straightforward – you encounter a trigger (whatever prompts you to scroll down on the feed) and an opportunity for action (you actually scroll down). Critically, the outcome of this action shouldn’t be predictable – instead, it should offer a variable reward, such that the user is never quite sure what she’s going to get. On Facebook, that might be a rewarding cat video, or an obnoxious post from an acquaintance.

Finally, according to Eyal, the process should give you a chance to make some kind of investment – clicking the Like button, for example, or leaving a comment. These investments should gradually ramp up, until the user feels more and more committed to the cycle of trigger, action and reward.

Then you’re hooked.

If this sounds a bit like Skinner’s experiments, that’s because it’s modelled on them. As with the pigeons, uncertain reward can lead to obsessive behaviour. The gambling industry has been using these techniques for years, too: as Skinner himself recognised, the classic high-rep, variable-reward device is the slot machine.


Natasha Schüll is an anthropologist at New York University who studies human-machine interactions. She fielded requests from interested Silicon Valley designers after the publication of Addiction by Design (2012), her ethnography of gambling addicts and machine designers in Las Vegas.

People expect gambling addicts to care about winning. But according to Schüll, compulsive gamblers pursue a kind of trance-like focus, which she calls ‘the machine zone’. In this zone, Schüll writes, ‘time, space and social identity are suspended in the mechanical rhythm of a repeating process’.

There are differences between a slot machine and a website, of course. With the former, the longer you’re engaged by variable rewards, the more money you lose. For a tech company in the attention economy, the longer you’re engaged by variable rewards, the more time you spend online, and the more money they make through ad revenue. While we tend to describe the internet in terms of distraction, what’s being developed, when you check email or Facebook neurotically, or get sucked into Candy Crush, is actually a particular kind of focus, one that prioritises digital motion and reward.

In the gambling world, people tend to blame the addicts. Overwhelmingly, the academic literature on gambling has focused on the minds and behaviours of addicts themselves. What Schüll argues is that there’s something in between the gambler and the game – a particular human-machine interaction, the terms of which have been deliberately engineered.

Yet we keep blaming people. As Schüll puts it: ‘It just seems very duplicitous to design with the goal of capturing attention, and then to put the whole burden onto the individual.’

One of the sharpest critics of Nir Eyal is Nir Eyal. Over the phone, he comes across as thoughtful, serious and sensitive to the ethical implications of his life’s work. He often consults with healthcare providers, applying Hooked’s tactics to, for example, digital tools that remind people to take their medicines on time. He says that he refuses to consult for porn and gambling sites. The book’s goal, Eyal said, was to inspire products ‘that could help people live happier, healthier, more connected, richer lives’.

‘The fact is, you can’t sell something to people if they don’t want that thing,’ Eyal said. ‘What I’m teaching is persuasion. It is not coercion. Coercion is when you get people to do things they don’t want to do. And frankly I don’t know how to do that.’

In Addiction by Design, Schüll writes that ‘industry designers actively marshal technology to delude gamblers – at times worrying about their delusionary tactics… and at other times defending these tactics by insisting that they give gamblers “what they want”.’

That sounded a lot like Eyal’s claim: you can’t sell anything that a customer doesn’t already want. I asked Schüll about the parallels. ‘I think [Eyal] has his heart in the right place,’ she said. But she argues that, when it comes to machine design, it’s not exactly about giving people what they do or do not want. What matters, Schüll says, is ‘the accentuating, accelerating and elaborating that happens between the wanting and the giving’.


In other words, there’s a difference between what we want and what we get. We go online looking for entertainment, connection, information. Often we do want to be distracted. Companies seem to be very good at amplifying that outcome – taking what we want and giving us something that’s a few notches more. It’s the difference between wanting to go on Facebook for 10 minutes and ending up there for 30.

Finding a term for this amplification can be difficult. At worst, it smacks of coercion. At best, it represents an imbalance of power between ordinary consumers and the engineers trying to circumvent their willpower. Either way, gambling is instructive, in that it offers a frightening lesson: well-designed machines can bring people into zones of profitable compulsion. And as people have applied persuasive and hook-forming design principles to the internet, a moral queasiness has been there from the start.

In one of the very first papers on captology – the process of using computers to change people’s behaviours – the Stanford psychologist B J Fogg, who has taught many of Silicon Valley’s tech leaders, included a coda on ethics. ‘High-tech persuaders would do well to base their design on defensible ethical standards,’ Fogg wrote, back in 1998. It was not exactly a rousing call to enforcement and moral rigour.

In Hooked, Eyal includes a handy moral rubric. It’s supposed to help designers determine whether or not they’re applying the hook model ethically. I asked Eyal what would prevent someone from reading his book and building a coercive or harmful tool anyway. ‘Nothing,’ he admitted. ‘Nothing stops them.’ But he argues that, for many companies, ‘there is an incentive to self-regulate’ because unhappily hooked users can burn out.

These appeals to moderation, though, are fundamentally out of sync with the basic profit model of Silicon Valley.

Imagine the internet as a nearly infinite, Borgesian library. Each article, widget, slide, game level and landing page forms a room in the library. Every time you use a link to go to a new page, you pass through a door.

At first, if you want to make money, you sell whatever is in the room. Maybe it’s excellent journalism. Maybe it’s a game or a recipe. Maybe it’s an item that will get shipped to someone’s house. In this model, the internet offers a straightforward transactional experience in digital space.

Over time, instead of making money from whatever is in each room, companies begin to monetise the doors. They equip them with sensors. Each time you go through one, someone gets paid. Immediately, some people will start adding a lot of new doors. Other people will build rooms that are largely empty, but that function as waystations, designed to get as many people as possible to enter and leave.

When you open an article on, say, Slate.com, you enter an article room. Slate makes money because it sells a certain number of metered doors in each of these digital spaces. We call these doors ‘advertisements’. This architecture creates a rather strange effect, because while the ostensible goal of Slate is to get people into its rooms to read fine journalism, it actually gets paid by attracting people and then quickly sending them out – either to an advertiser’s website, or to another article.

And, in fact, this is how Slate functions. For a piece that went viral in 2013, the tech writer Farhad Manjoo partnered with the analytics company Chartbeat to track how long Slate readers actually stayed on a given article. They found that 38 per cent opened the article and didn’t read it at all. Of those who began reading, fewer than 25 per cent made it to the end, and 5 per cent seemingly looked at the headline and then left. Manjoo locates this twitchiness in some vague cultural moment, suggesting that ‘we live in the age of skimming’. But this pattern shouldn’t surprise us. It’s simply a profit model working as designed.

At some point, you no longer make money by building excellent rooms. You make money by figuring out how to get people to pass through as many doors as possible – to have them scanning across the web, that scrolling hallway of doors, in a state of constant motion, click-click-clicking away.

Tristan Harris grew up practising sleight-of-hand magic, which, he says, taught him how easily the human mind can be manipulated. While at Stanford, he studied persuasive technologies with Fogg. A number of future Silicon Valley luminaries were in the class; Harris’ partner on a class project went on to co-found Instagram. After leaving Stanford, Harris designed interfaces for Apple, and co-founded Apture, a multimedia search tool, acquired by Google in 2011. Today, Harris is a design philosopher at Google. On the side, he’s a vocal proponent of ethical design, and a leader of a small community working on a concept called Time Well Spent.

In an ideal Time Well Spent digi-verse, websites would ask users what they really want. To achieve this, Harris imagines a much more flexible web. If you want to spend 15 minutes on Facebook, looking at pictures of old friends, Facebook will help you do that, and gently nudge you off when your time is up. If you want to work quietly on your computer for two hours, without receiving any emails, your email server will hold non-urgent messages for you, and deliver them at the end of that period. And if you want to play Angry Birds until your eyeballs fall out, you can do that, too.

Certain technologies already help people exercise this kind of control. One app, Freedom, allows users to shut down access to certain sites. Saent, a new productivity tool, helps users track and share their online behaviour to reduce distraction.


‘We have to change from just competing for raw attention to competing for whether or not there’s a net positive contribution to people’s lives,’ Harris told me. That’s a nice idea; the question is how to get there. Making ‘a net positive contribution to people’s lives’ doesn’t necessarily satisfy investors.

Harris compares Time Well Spent’s goals to the organic food movement. The internet, in his estimation, looks like the conventional food system – limited options, most of them toxic. He is looking for ways to develop tools that let people organicise their web. He even imagines government certifications, like the organic standards, that would certify websites as promoting more thoughtful, balanced usage.

This analogy seems smart, but also worrying. The organic food system is tiny, niche and inaccessible to most people. It allows an affluent handful to opt out of the larger political conversation about industrial food regulation. The same issues seem to apply for the organic web: even if these tools were available, they’d require a certain degree of effort and know-how to access. Already, tools such as Freedom seem to cater to a narrow, tech-savvy professional class. Two-thirds of Saent’s early adopters are software developers.

When you read enough articles about internet compulsion and distraction, you start to notice a strange pattern. Writers work themselves into a righteous fury about the prevalence and potency of addict-like behaviours. They compare tech companies to casino owners and other proprietors of regulated industries. And then, at the peak of their rage, they suggest it’s the users – not the designers – who should change.

It’s faintly absurd. You get, for example, the technology critic and venture capitalist Bill Davidow on The Atlantic’s website writing about tech companies that ‘hijack neuroscience’, comparing them both to tobacco companies and casinos. Having described a social epidemic, he laments that there’s ‘no simple solution to this problem’. Then, suddenly mild, he suggests that users could maybe place their smartphones out of reach, sometimes, in order to focus on the people around them.

Davidow is missing the obvious point. If the situation really is as exploitative as he claims, and you aren’t naïve enough to believe that industries would regulate themselves, then you basically have one option: you regulate them.

‘Regulation’ is a scary word, especially for those of us accustomed to the libertarian mores that have governed the past two decades of internet growth. It’s understandable that we’re hesitant to talk about regulating distraction-inducing technologies. For one thing, we typically think of compulsive behaviour as the fault of machines or individuals, rather than as a designed experience, angled toward strategic ends. For another, we tend to associate regulation with paternalism that limits the choices of users.

In this case, though, it’s possible to imagine regulation that actually expands users’ choices. It doesn’t need to be especially invasive or dramatic, and it would be designed to give users more control over their experiences online.

That task would not be easy. The designer drug market provides a helpful analogy here. Every time governments ban these substances – which include synthetic cannabinoids, often called K2 or Spice – designers simply come out with a new, slightly different version that slips through the strictures of the law. Similarly, with something as slippery as distraction, and as polymorphous as the web, it’s easy to imagine companies tweaking their designs to find new ways to hook users. Still, regulation can send a message. And it can target some of the most common tools that designers use to draw users into a digital machine zone.

Here are three things we could do. First, we could require major social media and gaming sites, email providers and smartphone makers to offer distraction dashboards, so users could control certain elements of their experiences.

Facebook already allows users to turn off some (but not all) notifications. These dashboards would give users a new level of control over when, how and how often they receive notifications. We could require companies to let users decide how many email deliveries they receive per day, or how often a social network can update their feeds. Dashboards could also allow users to shape certain features of page layout, such as the amount of new content they see on a single page.


It might be better to ban certain features of compulsive design outright. The most obvious target here is continuous or infinite scroll. Right now, sites such as Facebook and Twitter automatically and continuously refresh the page; it’s impossible to get to the bottom of the feed. Analogously, Tinder will let you swipe left or right pretty much indefinitely. YouTube, Netflix and similar sites automatically load the next video or show.

By the Hooked model, these tools let websites constantly reset the trigger. For the company, the advantage is clear: they keep you on the site longer. But infinite scroll has no clear benefit for users. It exists almost entirely to circumvent self-control. Giving users a chance to pause and make a choice at the end of each discrete page or session tips the balance of power back in the individual’s direction. It allows people more control over their own hook cycles.

As a second area for regulation, sites should be required to flag users who display especially compulsive behaviours. While full-blown internet addiction is difficult to define, something along those lines does seem to exist – and sites such as Facebook probably know who’s sick. This is a form of regulation that Eyal does advocate. ‘In the past, if you were an alcohol distiller, you could throw up your hands and say, look, I don’t know who’s an alcoholic,’ he said. ‘Today, Facebook knows how much you’re checking Facebook. Twitter knows how much you’re checking Twitter. Gaming companies know how much you’re using their free-to-play games. If these companies wanted to do something, they could.’ Of course, if citizens wanted to do something, we could, too, by compelling companies to provide cut-off points, and to alert users when their usage patterns resemble psychologically problematic behaviour.

A third option would be a gentler form of feedback. In this model, certain sites or browsers would be required to include tools that let users monitor themselves – how long they’ve been on a site, how many times they’ve visited in a day, and other metrics. Sites could even let users set their own cut-off points: If I’ve been on Twitter for more than an hour today, please lock me out.

Will this work? Probably not. At least in the United States, we’ve been notoriously hesitant to regulate tech companies. But sometimes it’s important to at least discuss regulation, in order to reframe the basic terms of the conversation. Digital tools offer a lot of wonderful services to users. They do so not because users and producers have identical incentives, but because users can form contracts: we trade our attention and privacy. In return, we receive services.

As in any contract, there’s a balance of power. If the architecture of today’s web is any indication, that balance is skewed toward the designers. Unless we want to keep pinging around like Skinner’s pigeons (or like poor Michael S), it’s worth paying a little more attention to those whom our attention pays.