Just about every parent will have a personal version of this scenario, especially during the COVID-19 pandemic: it is evening after a long workday. You are getting dinner started, but it’s like trying to cook in a blizzard. The children are crying, the spaghetti’s boiling over on the stove, your phone starts buzzing with a long-awaited call after a job interview, the doorbell just rang, and you’re the only adult in the house.

Now imagine someone came up to you in the middle of all of this and said: ‘Hey, I have some chocolate here. I can give you this chocolate right now with five bucks or, if you wait a half hour, I will give you the chocolate and 10 bucks.’ As you gently try to peel a toddler from your lower leg while reaching to turn off the stove burner, you say: ‘Put the chocolate and the $5 on the counter and go away.’ Making this quick decision means you have one less thing to think about – and one person less in the kitchen. You don’t have time to make what looks like the better choice of just as much chocolate but twice as much money, if only you could wait.

Now envision support systems to lift some of that load. A stove that turns down its burners when sensors pick up boiling water on its surface. A digital assistant that takes calls after three rings and says: ‘Sorry, I am unavailable for just a few minutes – please call back in five.’ A front porch sensor with a stern recorded voice (and possibly the background noise of a loud, booming dog bark) that says: ‘No solicitors’. And children who… well, there’s no technology for that – yet. But with everything else offloaded to digital supporters, you could make the cool-headed decision to delay the chocolate and get double the money to boot.

Overburdened parents aren’t the only people who could use some gadgets to offload mental cargo. One study asked pilots to press a button every time they heard an alarm signifying crisis during a flight simulation; in more than a third of cases, the volunteer pilots did not register the sound, which is rather alarming in itself. The researchers running the investigation also conducted electroencephalograms (EEGs) on the pilots during the scenarios. In the brain, the EEG findings suggested, the demands of the intense flight scenario created a cognitive bottleneck that even an urgent auditory alarm could not breach.

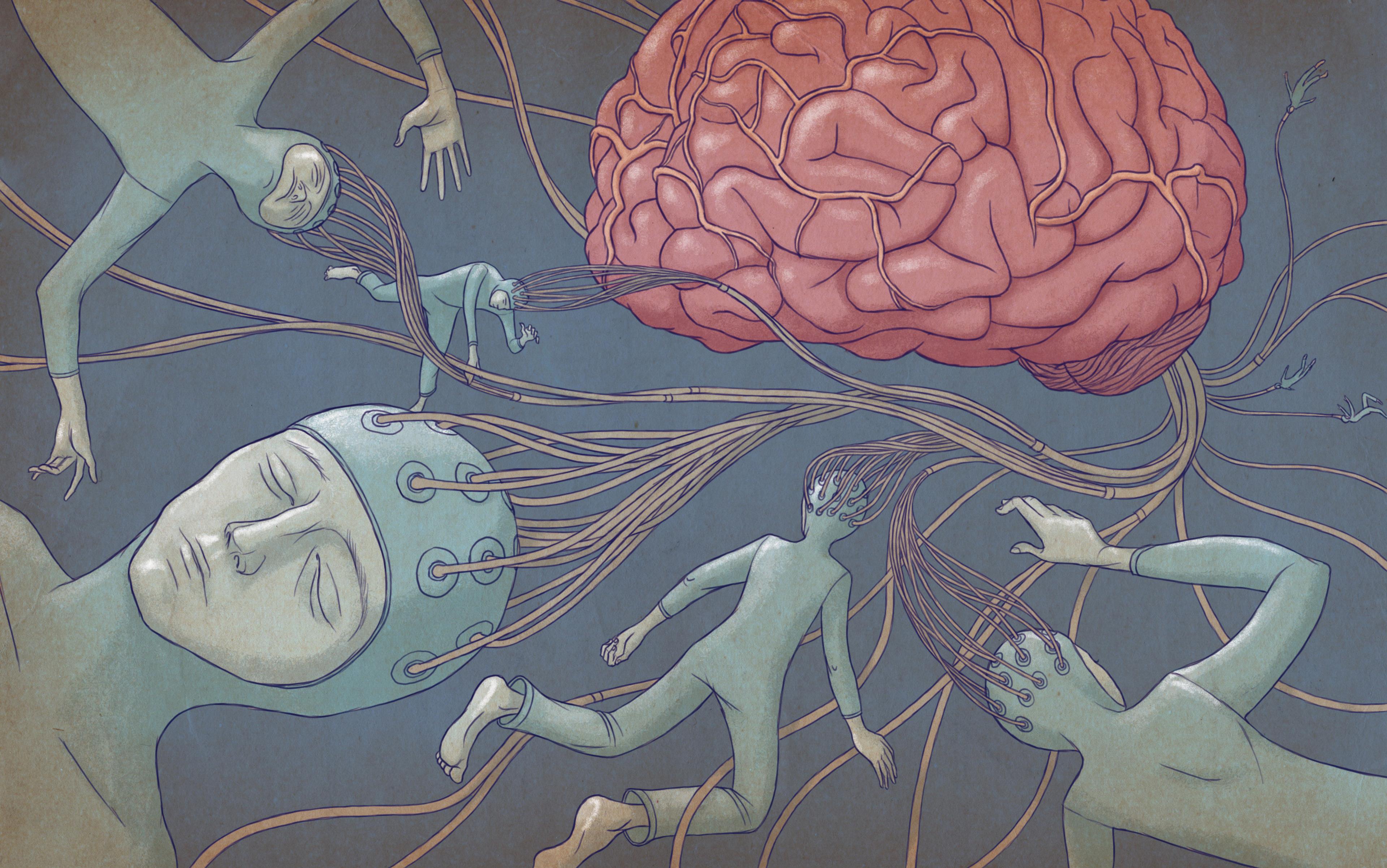

Neuroergonomics researchers are looking at what can be done to break through the chaos. They are following what happens in our bodies as our attention, executive function, emotions and moods wax and wane. They even assess how our physiological responses change in synchrony with each other.

Academics in this field seek to improve safety in areas such as flight, where human error can cause real-world tragedies. They use neuroscience approaches to understand the brain at work and why that brain sometimes makes catastrophic errors or omissions, such as not registering a loud and insistent alarm. Once these patterns are known, machines can be used to detect them and work with their human ‘partners’ to offload burdens and prevent bad outcomes.

We need the help because our resources are limited. The human brain is not an infinitely whirring information processor. It’s an organic structure just like an oak tree or a penguin; it has a finite capacity, with access to a finite amount of energy. Our cognitive workload is how much of those resources we’re using to make a decision or get a task done.

The deliberative system sits at the front of your brain, in the prefrontal cortex. The overload on it under duress is easily demonstrated by the N-back task, which places a strain on working memory. Working memory is the ‘place’ where we sketch information for instant recall, such as the PIN for the Zoom meeting we’re joining. In the N-back task, the test-taker has to try to remember if an item in a sequence is one they’ve already seen. It starts easily enough with only one item in the sequence but, as the items increase in number, many a prefrontal cortex reaches its limit and becomes inefficient. One study showed that when the ‘n’ or number in the sequence reaches seven, the prefrontal cortex throws up its figurative hands and gives up. The result is a decision-making collapse.
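For readers who like to see the mechanics, here is a minimal Python sketch of how an N-back run might be scored. It assumes the common form of the task, in which the test-taker says whether the current item matches the one shown n steps earlier. The function name `n_back_targets` and the letter sequence are made up for illustration and are not taken from any study mentioned here.

```python
def n_back_targets(sequence, n):
    """Return the positions where the item matches the one shown n steps earlier."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # Only positions from n onwards have an item n steps back to compare with.
    return [i for i in range(n, len(sequence)) if sequence[i] == sequence[i - n]]


# Hypothetical sequence of letters shown one at a time.
letters = list("ABABCACCA")

print(n_back_targets(letters, 1))  # [7]
print(n_back_targets(letters, 2))  # [2, 3, 6]
```

The code holds the whole sequence at once; a person has to keep the last n items alive in working memory while new ones keep arriving, which is where the strain comes from as n grows.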

That’s why we make more impulsive decisions when we’re overloaded, unable to apply the deliberation we’d like.

We’ve all experienced that feeling of cognitive overload. With too many demands on our attention, memory and executive function, we run out of space and start to miss things, flub plans, and make crucial missteps.

Today, we have more overload than our species has ever collectively experienced before. You have to be a certain age to even remember a time when, for many people, work ended at 5pm on a Friday and usually couldn’t encroach again until the next Monday morning. That’s long gone. Today, our colleagues are in our personal space – and our pockets – 24-7-365 thanks to email, texting, social media. And not only people from work. People from everywhere are always in our space, and with them comes the information overload flooding our brains from the multiple tributaries on our smartphones.

Taking a neuroergonomic perspective, we can start to look at ways to lighten this load, whether using analogue, digital or organic gadgets. Even though gadgets are the conduit for a lot of this overload, they can also be a necessary means of reducing the demands of the modern world.

Such gadgets are probably as old as tool use. The oldest known calendar shows how humans tracked the lunar cycles 10,000 years ago, not trusting only their memories but instead turning to a series of carefully spaced pits dug in the shape of the Moon phases. This earthworks calendar, uncovered in Scotland in 2013, even marks the date of the winter solstice.

The ancient Greeks took things up a notch a couple of millennia ago with a complex machine, the Antikythera mechanism, sometimes referred to as the world’s oldest known calculator. Its uses may have included tracking lunar cycles, the positions of the planets, and even cycles of competitions such as the Olympic games. From the use of the abacus to the advent of digital calculators, these gadgets have freed up generations of minds from manually tracking the passage of time and performing even fairly simple mathematical processes, for better or for worse. And today we need them more than ever.

Although modern studies use relatively sophisticated gadgets to pinpoint where brainwaves shift patterns or heartrate ticks up, we don’t need technology to tell us when, generally speaking, our brains could use a neuroergonomically supportive buddy.

That buddy doesn’t need to be a machine. Research indicates that people who spend time together romantically, professionally or educationally can start to show synchronicity in their brainwaves, resonating in time with each other. One study presented at the Neuroergonomics Conference in Munich in 2021 suggested that this synchrony goes beyond brainwaves. These authors reported a synching of heartrates and skin responses in students experiencing a lesson in the same classroom, relative to those experiencing the lesson in different classrooms. Their takeaway was that these measures could be used to indicate when students have lost attention to the lesson. But the findings also show that this synchrony is possible when minds are collected together in a community of knowledge, where sharing a burden creates more collective space for decision-making and problem-solving.

The concept of a collective mind is that, in a social species such as ours, the default isn’t a single brilliant mind doing all the work but instead a collective of them, shaped by evolution to operate together. It’s not a new idea. Steven Sloman is co-author of The Knowledge Illusion: Why We Never Think Alone (2017). He and two other co-authors recently proposed that, in using our individual brains, we rely on components from other brains in what they call ‘a community of knowledge’.

One idea they develop is our dependence on each other for problem-solving, decision-making, and even memory. This dependence means that we often outsource our information, relying on our connections with others to obtain information we need, rather than holding it ourselves. As they note, we constantly connect with other brains, not only those in our immediate vicinity but across time and space (as you and I are connecting now), accessing information from other minds and weaving it into our own repertoires. We can even take ideas from people who died thousands of years ago, as long as they left an ancestral record.

When we interweave one another’s gathered knowledge strings into a mutually recognisable social pattern, we have culture. Culture can be both a boon and a burden. Cecilia Heyes, senior research fellow in theoretical life sciences at All Souls College, University of Oxford, is the author of Cognitive Gadgets: The Cultural Evolution of Thinking (2018). In an essay for Aeon, she argued that humans aren’t born with an innate instinct for certain behaviours we view as social, but we do come equipped with the tools, such as memory, attention and recognising patterns, to learn these practices. Evolution provides the basic toolkit, but culture leverages those tools socially to shape the skills, with each culture doing so in a specific, unique way.

Heyes writes about this process in Darwinian terms, with some variants of these practices surviving in specific environments and others fading away. The ones that persist can be passed along through ‘social learning’.

She calls the devices we use socially but not innately ‘cognitive gadgets’. With our innate tools, she argues, we can build socially useful ‘gadgets’ such as imitating another person, which is thought to be an underpinning of social development. Such gadgets could be a form of neuroergonomic shortcut, so that we don’t have to re-learn the meaning of a smile at every such encounter – the automatic nature of the phenomenon saves brainspace for other processes.

When we engage in activities together – whether walking, cooking or lunching – we also engage each other’s minds and, almost inevitably, we will share what’s in them. With this reciprocity, we share problems, dreams and happinesses, and in a healthy version of such sharing, get support or insight or heartfelt happiness in return. In this human-human system, we shed some of our cognitive burden, inviting other brains to engage with the problem-solving, bring useful experience to understanding it, or just share the emotion, diminishing the burden by half.

Heyes argues that if what she calls ‘the gadgets theory’ is right, the next step is that the actual gadgets of our cultural practices could ‘stimulate rapid cultural evolution of our mental faculties’.

As if bearing out this prediction, we have seen some major changes in cognitive test scores with the advent of modern technologies. Many people hold IQ to be real and even potentially relatively stable throughout life, both of which I call into question in my book, The Tailored Brain (2021). IQ has proved to be a remarkably unstable metric over the years, subject to the influences of test-taker motivation, socioeconomic status, income gaps and education, among other factors. Perhaps one of the key examples of such change is the ‘Flynn effect’, which appears to bear out the prediction that cognitive gadgets might stimulate rapid changes in our ‘mental faculties’.

The Flynn effect is a shift that the late New Zealand intelligence researcher James Flynn documented in modern societies. He found that, in just a few decades of the mid-20th century, IQ scores had climbed so substantially that entire countries would score as ‘gifted’ today. Flynn thought that perhaps the increased problem-solving demands of modern society encouraged by updated educational approaches would explain higher scores on IQ tests within a generation or two. Whatever innate tools we sharpened by growing up along with these real-world cognitive tools would have interacted with each other, emerging as a global defining feature.

Another thing that technology has done, though, is to free up our cognitive space (unless you check social media 5,000 times a day). We no longer devote our innate working memory, for example, to encoding phone numbers, directions or even our daily schedules. With less burden, we might simply have more time for the slow, deliberative thinking that engages our best problem-solving tools and keeps us from making mistakes.

Some research suggests that people with higher IQ scores can take on bigger cognitive loads compared with their lower-scoring counterparts. One possible explanation is a greater processing efficiency associated with high IQ, and greater efficiency means using fewer resources. If our technological gadgets similarly enhance our efficiency and secure a greater cognitive reserve, then most of us are already part of a human-machine system. The future has arrived.

In fact, these technological gadgets are everywhere. One key issue in situations where humans commit drastic and costly errors is maintaining attention and avoiding mind wandering. Research indicates that, when we lose needed focus, we also see a drop in use of our prefrontal cortex, the seat of all that is mature and deliberative in the human brain. Experts suggest three possible ways to sustain activity in this area and head off a collapse in performance. One is to change how the person has to engage with the tasks or adapt ‘the user interface’, one is to adapt the task itself and lighten it up cognitively, and one is to warn the user when they are nearing decision-making collapse so that they can take counter-measures.

Whether high-tech or not, digital gadgets for offloading such burdens are here in force. Calendar alerts are one way to keep an overscheduled day from overloading memory banks. I’ve got mine set to send three for each event – two days, one day, and a half hour before – which has saved me on many occasions from forgetting important meetings.

Like the pilots who don’t hear an alarm sound, though, these email alerts have lost their power to stick in my mind, so I’ve added another step: I move that last email alert to the top of my inbox and mark it as unread so that it’s boldfaced. Despite the experience of three decades with email, ‘Unread’ emails still grab my full attention. Until I actually have the meeting, I am constantly seeing that boldfaced ‘unread’ email and alerting on it. For me, at least, this neuroergonomic tactic serves to pull me out of my mental state (mind wandering or focusing on work to the point of forgetting the time) and stimulates my neurological activity so that I perform better – and don’t miss the meeting. In research lingo, I have ‘adapted the user interface’.

We can also adapt daily tasks to diminish the cognitive load they impose. Pinpointing ways to reduce high-demand activities to something more rote is one tactic, such as having the same thing for lunch every day. Another strategy is limiting the number of high-demand activities that must be done at once, such as choosing next-day clothes the night before, instead of when we’re also trying to get children out the door to school. One of the most straightforward neuroergonomic accommodations we can access is allocating tasks into uncrowded time windows so that they don’t pile up all at once. If making the week’s dinners on an uncluttered Sunday afternoon saves you from five evenings of the ‘overwhelmed in the kitchen’ scenario, that could be an adaptation worth making.

In addition to setting up these personal, face- and brain-saving supports, we can also try expanding the space in our minds. Physical activity is one method accessible to most of us. A small study recently presented at the Neuroergonomics Conference 2021 showed that, in young men, increased oxygenation to the prefrontal cortex from a one-leg pedalling exercise was linked to immediately better performance on executive function tests. These findings accord with those of other studies, including further work in young men and in older people, which show the effect to different extents. Most of them implicate blood flow and oxygen delivery, with some evidence of a boost in a molecule that encourages the growth of new connections among neurons. These effects can lead to more efficient cognitive processing, allowing for the feeling of a smaller cognitive load.

We can even perform our own neuroergonomic research for ourselves, gaining an internal window on what happens to us under overload conditions. It might not yet meet the bar of telling us when we’re near decision-making collapse, but that day could be near.

‘Wearables’ – smartwatches, fitness trackers etc – can already monitor your heartrate changes and variability, both measures that adjust with cognitive burden. As you might expect, your heartrate goes up when you’re overloaded. On a recent trip that involved driving in treacherous ice and snow for several hours, my wearable informed me that my heartrate had gone up by several beats per minute, an increase sustained during that anxious journey. And research suggests that heartrate variability declines under overload, reflecting less flexible adjustment to input fluctuations. This kind of feedback is in the early days for consumers, but we are edging nearer to being able to consult with the gadget on our wrists for this insight into our internal states.
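To make ‘heartrate variability’ a little more concrete, here is a rough Python sketch of one widely used measure, RMSSD: the root mean square of the differences between successive beat-to-beat intervals. This is an assumption chosen for illustration; it is not necessarily what any particular wearable or the research mentioned above computes, and the interval values below are invented.

```python
import math


def mean_heartrate_bpm(rr_intervals_ms):
    """Average heartrate in beats per minute from beat-to-beat intervals in milliseconds."""
    return 60_000 / (sum(rr_intervals_ms) / len(rr_intervals_ms))


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between intervals, in milliseconds."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Invented readings: a relaxed stretch and a tense one (say, driving on ice).
calm = [850, 900, 820, 880, 840]
tense = [700, 705, 698, 702, 700]

for label, rr in (("calm", calm), ("tense", tense)):
    print(label, round(mean_heartrate_bpm(rr)), "bpm, RMSSD", round(rmssd(rr), 1), "ms")
```

In this made-up example, the tense readings give a faster heartrate and a much smaller RMSSD, which is the pattern the paragraph above describes.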

These and other measures are applied in literally mission-critical situations on bigger stages. Researchers have assessed using them on people staffing the NASA control room during Mars missions. These mission-control staff must interact with rovers on a 26-minute signal delay. During the first 90 sols (a sol, or Martian day, lasts 24 hours and 40 minutes), the people sending commands to the rover and downloading its information worked irregular shifts every day of that period. The mismatch between the Martian sol and the Earth day meant different start times for these shifts: 8am on a given day one week but almost five hours later on the same day the following week. These big changes carried the threat of discombobulating the Earthly human brains having to adjust to them. In the intervals, the team had to plan which tasks to send to the rover at the next communication, a list that could run to hundreds of commands. Even one errant command could, according to one estimate, cost up to $400 million.
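The ‘almost five hours’ follows from simple arithmetic: an extra 40 minutes per sol, seven times over, comes to 4 hours and 40 minutes. A small Python sketch of that calculation, assuming the rounded sol length given above and a hypothetical first shift at 8am on an arbitrary Monday (the date and the function names are illustrative only):

```python
from datetime import datetime, timedelta

# Assumption: the rounded sol length of 24 hours, 40 minutes used in the text.
SOL = timedelta(hours=24, minutes=40)


def shift_start(first_start, sols_elapsed):
    """Earth clock time of a shift that begins at the same Mars time each sol."""
    return first_start + sols_elapsed * SOL


def drift_after(sols_elapsed):
    """How far the start time has slid around the Earth clock after that many sols."""
    return sols_elapsed * (SOL - timedelta(days=1))


first = datetime(2021, 2, 22, 8, 0)  # hypothetical Monday, 8am

print(shift_start(first, 7))  # 2021-03-01 12:40:00
print(drift_after(7))         # 4:40:00
```

A shift that starts at 8am one Monday starts at 12.40pm the next, and the start time keeps sliding around the clock for as long as the team lives on Mars time.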

Some of these tasks could be automated and handed off to machines, but not all of them. So there’s been interest in monitoring the physiology of control-room staff for predictive signs of cognitive fatigue portending increased potential for error.

In anticipation of someday developing such monitoring, researchers have created a list of 28 ‘workload metrics’ that together can be used to identify patterns linked to a condition of error-proneness. These measures include heartrate variability, blinking, speech patterns, pupil dilation and EEG recordings. The idea is that algorithms could be used to take this information and adjust the workload demand on the human in ‘human-robot’ teams, although the models still seem to be a work in progress.

Individual people are not NASA, but we may be able to access some of the same metrics researchers do. One group is working on wearables for students and educators that detect changes in body temperature, heartrate and ‘electrodermal activity’. Their intention is to ‘improv[e] one’s awareness’ of study habits and track trends that might be useful for making adjustments to mitigate overload… and for educators to predict student engagement and focus on an activity.

With careful preparation (I know; gestures at pandemic ‘planning’), we can emphasise the benefits of some of our interfaces with machines – and with each other. Imagine a future in which machines bridge our cognitive gadgets and memories with each other. We might fill in our own gadget gaps in this way or experience and truly feel what it’s like to be the other person. Where memories begin to fade or need an accuracy check, external storage and transmission of memory to another brain could very well become a method of preservation. It would be a whole new way to maintain ancestral recollections and connect with brains of the past.

There is definitely an element of creepiness to some of these plans. We have plenty to worry about as we contemplate a world of brain-computer interfaces and human-machine co-workers. The most intense risks are linked to being ‘brainjacked’, in which these real-world gadgets become gateways for bad actors to read our minds, detect our vulnerable moments, and exploit the information. And there are also the inequities that will lead to gaps in who’s using these for good or for ill, who’s vulnerable to the downsides, and who can access them for benefit.

We may, as Heyes posits, use innate tools to evolve, tweak and reiterate cognitive gadgets, but the gadgets that really require our attention and an alert state are the ones that we view as useful but that have these dark sides, too. One thing that humans have capably done over and over is to let technology get ahead of setting boundaries against abuse and exploitation, even though we have sufficient collective mindpower to do so. It’s hard to be optimistic about what we’ll do with such great powers. But we have to remember that human optimism is itself a tool that we can use, although one that must, like the others we wield, be tempered.
