Bookshops are wonderful places – and not all the good stuff is in books. A few months ago, I spotted a man standing in the philosophy section of a local bookshop with his daughter, aged three or four. Dad was nose-deep in a tome, and his daughter was taking care of herself. But rather than wreaking havoc with the genres or scribbling on a flyleaf, she was doing exactly as her father was: with the same furrowed brow, bowed posture and chin-stroking fingers, this small child was gazing intently at a book of mathematical logic.

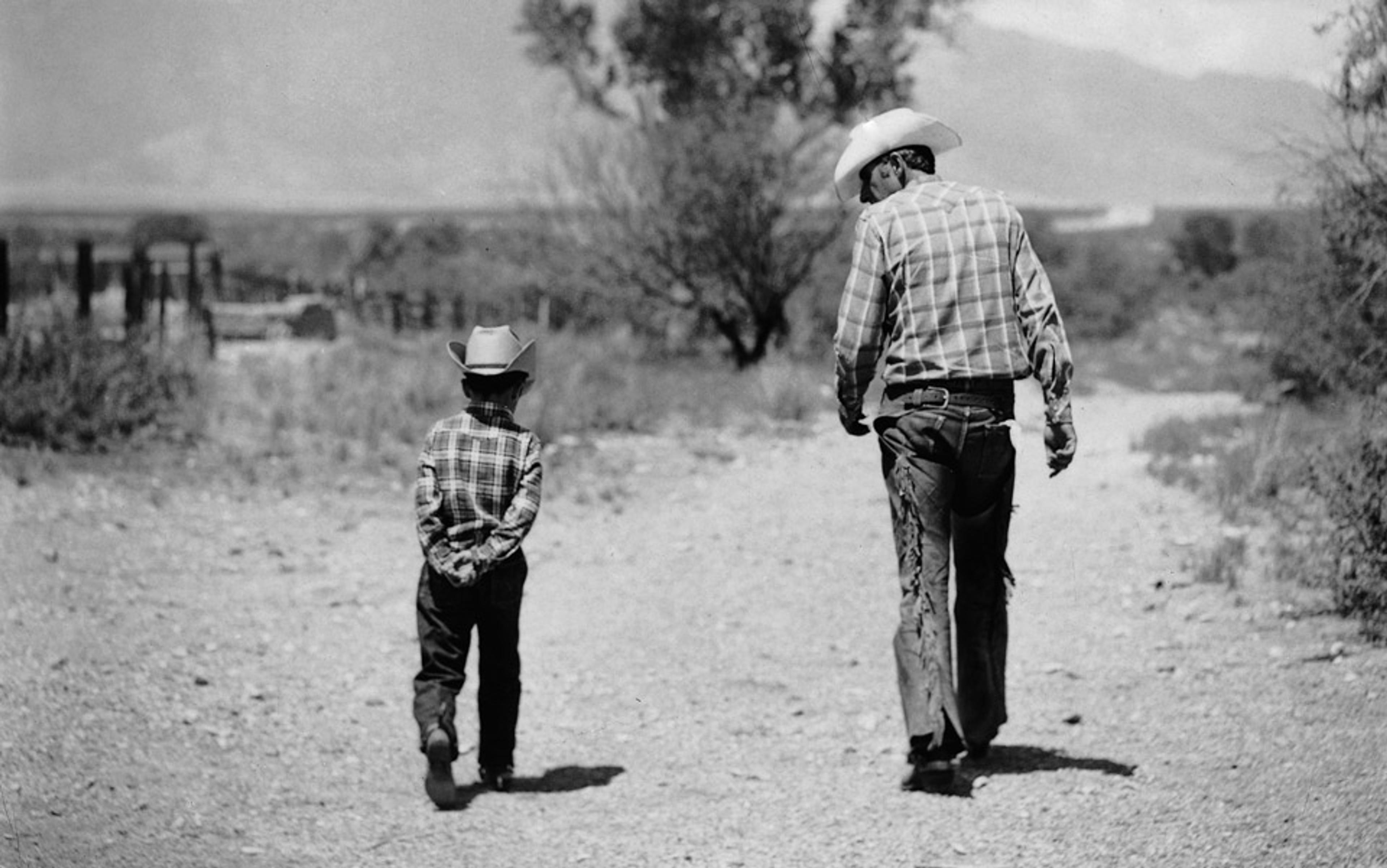

Children are masters of imitation. Copying parents and other adults is how they learn about their social world – about the facial expressions and body movements that allow them to communicate, gain approval and avoid rejection. Imitation has such a powerful influence on development, for good and ill, that child-protection agencies across the world run campaigns reminding parents to be role models. If you don’t want your kids to scream at other children, don’t scream at them.

The conventional view, inside and outside academia, is that children are ‘wired’ to imitate. We are ‘Homo imitans’, animals born with a burning desire to copy the actions of others. Imitation is ‘in our genes’. Birds build nests, cats miaow, pigs are greedy, while humans possess an instinct to imitate.

The idea that humans have cognitive instincts is a cornerstone of evolutionary psychology, pioneered by Leda Cosmides, John Tooby and Steven Pinker in the 1990s. ‘[O]ur modern skulls house a Stone Age mind,’ wrote Cosmides and Tooby in 1997. On this view, the cognitive processes or ‘organs of thought’ with which we tackle contemporary life have been shaped by genetic evolution to meet the needs of small, nomadic bands of people – people who devoted most of their energy to digging up plants and hunting animals. It’s unsurprising, then, that today our Stone Age instincts often deliver clumsy or distasteful solutions, but there’s not a whole lot we can do about it. We’re simply in thrall to our thinking genes.

This all seems plausible and intuitive, doesn’t it? The trouble is, the evidence behind it is dubious. In fact, if we look closely, it’s apparent that evolutionary psychology is due for an overhaul. Rather than hard-wired cognitive instincts, our heads are much more likely to be populated by cognitive gadgets, tinkered and toyed with over successive generations. Culture is responsible not just for the grist of the mind – what we do and make – but for fabricating its mills, the very way the mind works.

In the late 1970s, the psychologists Andrew Meltzoff and Keith Moore at the University of Washington reported that newborn babies – some of them just a few hours old – could copy a range of facial expressions, including tongue protrusion, mouth opening and lip pursing. This launched the idea that humans have an instinct to learn by imitation. Surely such a precocious achievement could only be due to clever genes; after all, it’s impossible to imagine how the average baby could learn what it would feel like to produce the expressions they see on the faces around them, just a few hours or days after birth. According to Meltzoff and Moore, their findings indicated that human babies must be born with a complex cognitive mechanism – a thinking machine that connects ‘felt but unseen movements of the self with the seen but unfelt movements of the other’.

However, cracks began to appear in Meltzoff and Moore’s picture as soon as it was published in Science magazine – and they have been spreading ever since in fine but disfiguring lines. From the outset, some other experts on child development were unable to replicate the crucial results. They found that newborns copied sticking out their tongues, but not other facial gestures – so perhaps instead of an elaborate imitation mechanism, it was a simple reflex in response to excitement. Evidence that older infants don’t copy any facial gestures, not even tongue protrusion, supported that interpretation.

Further chinks appeared when it was discovered that fully functional adults – people lucky enough to have avoided developmental problems and neurological damage – can’t imitate facial expressions unless they’ve had something called mirror experience. This is what you get when you do an action at the same time as seeing it being done. For example, I get mirror experience for eyebrow raising if I do it while looking in a mirror, or while looking at you as you register surprise in the same way, or while looking at you as you imitate me.

You can try one of these experiments at home. Film yourself on a smartphone while you’re chatting with a friend or telling a joke, then freeze the footage at some randomly chosen point. Now, try to reproduce – imitate – your facial expression in that frame. Difficult? Now try it 10 times with the same randomly chosen frame. Take a picture of your best effort each time, and then line up the 10 attempts to see whether you got better as you went along.

If your results are like ours, you’ll find no improvement. Unless you cheated, that is. If you gave yourself mirror experience – if, as you went along, you looked at the face on your phone’s screen as you were trying to copy the original – you probably showed some improvement. Without this kind of advantage, the chances are that your last effort was just as awful as the first.

If we were born with a piece of cognitive kit that connects ‘felt but unseen movements of the self with the seen but unfelt movements of the other’ – or even if we developed such a mechanism later in childhood – we should be able to improve our imitation without mirror experience. It shouldn’t be necessary to feel the facial movement while seeing the result, because we could instinctively work out what each felt movement looks like when viewed from the outside.

For several decades, child psychologists have watched the cracks spreading without taking Meltzoff and Moore’s picture off the wall. Some of the reasons for this are probably sociological. Meltzoff writes about Homo imitans in beautiful, compelling prose. Every loving parent can seemingly confirm that when you stick out your tongue at a baby, the baby likes to return the compliment. In the scientific world, the idea of an imitation instinct swiftly became a foundation stone. By the 1990s, it was supporting other claims about how the mind is shaped by the genes, many of them enshrined in evolutionary psychology. Researchers might have realised that, if the imitation instinct was toppled, other treasured ideas would fall.

But the main reason was sound and scientific: it’s very difficult to do experiments with newborn babies. When they’re not asleep, they’re often inattentive. When they’re alert, they’re often absorbed not by the things a researcher wants them to study, but by the name tag on their wrist, the flickering overhead light or the fragrance of ripe breast. So in each study of newborn imitation, only a small number of babies stayed attentive for long enough to make the cut. Along with small variations in experimental procedure, this sampling could have produced the inconsistent results.

Given these challenges, until recently, the idea of a newborn imitation instinct was still scientifically plausible – even if only some particularly lucky or careful studies managed to show it. But a study from the University of Queensland in 2016 was so large and thorough that it finally undermined this argument. Virginia Slaughter and her colleagues studied an unprecedented number of babies (106) – five to 10 times more than in most previous studies – at four points in time after birth (at one, three, six and nine weeks). They tested for imitation of nine gestures, using gold-standard procedures. They found evidence for just one: yes, it was tongue protrusion. In every other case, the babies were no more likely to produce the gesture after seeing it done than they were if they saw something else. They were no more likely to open their mouths after watching a mouth open, for example, than they were if they’d seen a sad face.

Sure, you can’t prove a negative – that no newborn baby has ever been able to imitate. But in the aftermath of the Queensland study, we have no good reason to believe that newborns can copy gestures or, therefore, that humans have an instinct for imitation.

What we’re left with instead is a wealth of evidence that humans learn to imitate in much the same way as we pick up other social skills. Some of this evidence comes from adults, such as the smartphone test mentioned earlier, and some from infants. In a study in 2018, Carina de Klerk, then at Birkbeck College in London, and her colleagues discovered that babies’ capacity to imitate depended on how much their mothers had imitated their children’s facial expressions. (It’s unclear whether the same applies to fathers; I don’t know of any comparable research.) The more a mother copies her baby’s facial gestures, the more likely the baby is to imitate others.

But the effect doesn’t generalise. Mothers who copy their babies’ facial expressions make the infants better at imitating faces, but it doesn’t make them better at copying hand movements. This specificity is important because it shows that a simple, general learning mechanism is involved. Babies associate the feel of their brows lifting with the sight of mum’s brows lifting, the feel of their mouth opening with the sight of a mouth opening. Being imitated doesn’t make the baby think that imitation is a good idea, or give her the capacity to imitate any action she likes. Rather, maternal imitation gives the baby mirror experience, an opportunity to connect a visual image of an action with a feeling. And these connections are formed, not by a special, complicated process, but by associative learning – the same kind of learning that made Pavlov’s dogs dribble when they heard a bell.
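
To see why associative learning predicts this specificity, consider a deliberately crude sketch in Python. It is an illustration of the idea, not a model taken from the studies described here: the function names, the learning rate and the threshold are all assumptions made for the example. Each episode of mirror experience pairs a felt action with a seen action, and nudges the strength of that one link upwards.

```python
def learn_from_mirror_experience(episodes, learning_rate=0.2):
    """Return link strengths (0 to 1) between felt and seen actions.

    Hypothetical toy model: every episode pairs one felt action with one
    seen action, and only that pairing is strengthened.
    """
    strength = {}
    for felt, seen in episodes:
        old = strength.get((felt, seen), 0.0)
        # Delta rule: move the link a fixed fraction of the way towards 1.
        strength[(felt, seen)] = old + learning_rate * (1.0 - old)
    return strength


def can_imitate(strength, action, threshold=0.5):
    """True if seeing `action` is linked strongly enough to the feel of doing it."""
    return strength.get((action, action), 0.0) >= threshold


# A mother copies her baby's brow raising and mouth opening five times each.
episodes = [("brow_raise", "brow_raise"), ("mouth_open", "mouth_open")] * 5
links = learn_from_mirror_experience(episodes)

print(can_imitate(links, "brow_raise"))  # True: the link was trained
print(can_imitate(links, "hand_wave"))   # False: no mirror experience for hands
```

Nothing in the sketch rewards imitation as such, which is why practice with faces leaves hand movements untouched.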

In the space of a few decades, it’s become clear that imitation is not a cognitive instinct. We are not wired to imitate, and imitation is not in our genes. Rather, children learn to imitate through interaction with other people. Parents, other adults and peers teach them not only by copying what the child does, but through games that synchronise actions (eg, Pat-a-Cake), by reacting in the same way and at the same time (‘Ooh, what’s that?’), and, in most cultures, by allowing the child to spend time in front of optical mirrors – jiggling, giggling, posing, peering, grimacing, all the while building up the range of movements that they can copy in future. Whether the mirror is real or metaphorical, mirror experience is what makes children master imitators.

Imitation isn’t alone in losing the look of a cognitive instinct. ‘Cheater detection’, also known as ‘social contract reasoning’, refers to the human capacity to pick up on people who are flouting a social rule and taking a benefit they’re not entitled to. Once upon a time, this was a paradigm case in traditional evolutionary psychology, but it no longer appears to be baked in. Experiments suggest that we are pretty good at checking adherence to a rule when it relates to a familiar social context, such as underage drinking. But we’re pretty bad at it when we have to make structurally identical judgments about more abstract things, such as what proposition ‘not P’ implies about proposition ‘Q’. However, it turns out that this advantage is not confined to rules concerning cheating on a social contract: it’s evident when the rule to be checked relates to safety rather than socially conferred benefits, such as ‘Loaded guns should be kept under lock and key.’ So instead of being experts at cheater detection specifically, it seems we’re just good at reasoning about other people, a social advantage that is likely to be due to experience rather than a cognitive instinct. We all get lots of practice at thinking about people, and we care about the results; whether we’re right or wrong makes a difference to our lives. But only a handful of philosophers spend their lives pondering Ps and Qs.

Another example of a lost cognitive instinct is mind-reading: our capacity to think of ourselves and others as having beliefs, desires, thoughts and feelings. Mind-reading looks increasingly like literacy, a skill we know for sure is not in our genes. Scripts have been around for only 5,000-6,000 years, not enough time for us to have evolved a reading instinct. Like print-reading, mind-reading varies across cultures, depends heavily on certain parts of the brain, and is subject to developmental disorders. People with dyslexia have difficulty with print-reading, and people with autism spectrum disorder have difficulty with mind-reading.

Fascinating work by the psychologists Mele Taumoepeau and Ted Ruffman at the University of Otago in New Zealand points to a further parallel between these kinds of literacy. In the Western world, mothers unwittingly use similar strategies to teach their children to read minds and to read print. When talking to their 15-month-olds – in the bath, at bedtime, while strapping them into the buggy – mothers more often mention desires and emotions than beliefs and knowledge. Mothers say a lot about wanting and feeling, and relatively little about thinking and knowing. And just how often a mother mentions desires and emotions predicts her infant’s mind-reading ability almost a year later. The more that infants hear about desires and emotions at 15 months, the better they are at using mental-state language and recognising emotions at 24 months. In conversation with their two-year-olds, by contrast, mothers more often mention beliefs and knowledge than desires and emotions. At this point, it’s the frequency of references to thinking and knowing that predicts the child’s mind-reading ability later, at 33 months.

This pattern suggests that children learn to read minds through conversation with their parents, and the parents unconsciously adopt a strategy like that used to teach children to read print. Just as parents show their children easy-to-read words (‘cat’) before hard-to-read words (‘yacht’), they talk to their children about desires and emotions before they discuss thornier matters of belief and knowledge. Desires and emotions are relatively easy-to-read mental states, because there is often just one kind of behaviour or expression that shows what a person wants or how she feels. Reaching for something, and crying, are fairly unambiguous. Beliefs and knowledge are much trickier to read because they manifest themselves in a variety of ways. Your belief that I’m an idiot could be expressed in a turned back, a puzzled frown or a patronising smile.

Even language, once the king of cognitive instincts, appears increasingly rickety. No one doubts that the specifics of a particular language – for example, the words and idiosyncratic conventions of Urdu – are learned. However, following Noam Chomsky’s idea of ‘universal grammar’, many evolutionary psychologists believe that learning the specifics is guided by innate knowledge of fundamental grammatical rules. This view of language has been bolstered by developmental, neural and genetic findings that are now being overturned. For example, ‘specific language impairment’, a developmental disorder once thought to affect language acquisition alone, turns out not to be specific to language. Children diagnosed with the condition struggle to learn sequences of lights and objects, not just the order of words. And there doesn’t appear to be a ‘language centre’ in the brain. Broca’s area in the left hemisphere has long been regarded as the seat of language, but recent research by the neuroscientists Michael Anderson and Russell Poldrack suggests that language is scattered throughout the cortex. Broca’s area is in fact more likely to be busy when people are performing tasks that don’t involve language than when they’re reading, listening to and producing words. Similarly, FOXP2, once vaunted as a ‘language gene’, has been implicated in sequence learning more generally. Transgenic mice implanted with the human version of FOXP2 are better than their siblings at finding their way around a maze.

The loss of props such as Broca’s area and FOXP2 has not made the whole tent of the language instinct fall down. But now that we know computers can learn grammatical rules without any inbuilt grammatical knowledge, the rain is coming in through the flaps, and the whole structure looks more and more lopsided and insecure.

The evidence for cognitive instincts is now so weak that we need a whole new way of capturing what’s distinctive about the human mind. The founders of evolutionary psychology were right when they said that the secret of our success is computational mechanisms – thinking machines – specialised for particular tasks. But these devices, including imitation, mind-reading, language and many others, are not hard-wired. Nor were they designed by genetic evolution. Rather, humans’ thinking machines are built in childhood through social interaction, and were fashioned by cultural, not genetic, evolution. What makes our minds unique are not cognitive instincts but cognitive gadgets.

When I say that cognitive gadgets are built in childhood, I don’t mean that they’re conjured out of thin air. The mind of a newborn human baby is not a blank slate. Like other animals, we are born with – we genetically inherit – a huge range of abilities and assumptions about the world. We’re endowed with capacities to memorise sequences, to control our impulses, to learn associations between events, and to hold several things in mind while we work on them. Judging from what captures the attention of newborns, we are also born thinking that things that are alive are more important than inanimate objects, and that faces and voices are especially important. These skills and beliefs are part of the ‘genetic starter kit’ for mature human cognition. They are crucial because they direct our attention to other people, and act as cranes in the construction of new thinking machines. But they are not blueprints for Big Special cognitive mechanisms such as imitation, mind-reading and language.

When I say that cognitive gadgets were designed by cultural evolution, I mean something very specific. I don’t just mean that Big Special cognitive mechanisms change over generations and vary across cultures. No doubt both of these things are true – but I am saying, in addition, that our Big Special thinking machines have been shaped to do their jobs by a particular kind of Darwinian process.

A Darwinian process creates a fit between a system and its environment by sifting or selection. The system throws up ‘variants’ – different versions of a thing – and the variants interact with the world in which the system is embedded. As a result of these interactions, some variants live while others die off. Sometimes, the variants that survive have a further virtue. They’re not just good at surviving: they also do a job that, if the system was rational and sentient, it would want to be done well. In that case, the consequence of selection is adaptation: a better fit between the system and its environment.
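
The loop of variation and selection can be sketched in a few lines of Python. This is a toy under loud assumptions, not a model of any real system: variants are plain numbers, the ‘environment’ is a single target value, and the function name, pool size and amount of variation are all invented for illustration.

```python
import random


def darwinian_process(environment, generations=30, pool_size=20, seed=0):
    """Toy variation-plus-selection loop.

    Returns the average mismatch between variants and the environment,
    recorded at the start of each generation.
    """
    rng = random.Random(seed)
    # The system throws up variants: here, random numbers between 0 and 10.
    variants = [rng.uniform(0.0, 10.0) for _ in range(pool_size)]
    history = []
    for _ in range(generations):
        history.append(sum(abs(v - environment) for v in variants) / pool_size)
        # Selection: the half that fits the environment best lives on.
        survivors = sorted(variants, key=lambda v: abs(v - environment))[: pool_size // 2]
        # Variation: each survivor leaves one slightly altered copy.
        offspring = [v + rng.gauss(0.0, 0.3) for v in survivors]
        variants = survivors + offspring
    return history


history = darwinian_process(environment=7.0)
print(round(history[0], 2), round(history[-1], 2))
```

No variant in the sketch knows what the environment ‘wants’; the shrinking mismatch comes entirely from sifting.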

Take mind-reading as an example. Evolutionary psychology assumes that mind-reading resulted from Darwinian selection operating on different genes. According to this picture, at one time, in the Pleistocene epoch, our ancestors had no idea about ideas; they regarded themselves and each other as robots might, without thoughts and feelings. Then the first of many genetic mutations occurred. These mutations predisposed the bearers to think of themselves and others as having internal states – what we now call mental states – that drive behaviour. The mutations might have started with ‘desire genes’, later progressed to ‘belief genes’, and then started making people ponder complex interactions between beliefs and desires more generally. Whatever the sequence of mutations, children (supposedly) inherited mind-reading from their biological parents primarily via DNA, and the tendency spread until it was fixed in all humans. It spread because people endowed with genes that gave them a mind-reading instinct were more effective than others at cooperation and/or competition, and therefore had more babies, to whom they passed on those genes.

The alternative, cultural evolutionary story also starts with ancestors who had no idea about ideas. However, it suggests that those ancestors are somewhat closer to us in time, and highlights the fact that they had other ways of predicting and explaining behaviour. Like other animals, they could predict behaviour in a straightforward way – by learning that a fist withdraws just before it punches. And like some societies today, they relied on social roles and structures. As an adult male with offspring but a poor record in combat, The Flintstones’ Barney Rubble might be expected to posture but then back down when challenged for his meat ration.

On this account, the very idea of mental states was invented by smart people using general-purpose cognitive mechanisms. Whatever the inspiration, these people created a cultural variant: a way of thinking about behaviour that was inherited through social learning, not DNA. Adults passed it on to the pupils that were around them, not just their babies, through conversation. Further innovations, not mutations, in thinking about mental states were inherited in the same social way. In some environments, the mind-reading gadget has conferred major cooperative and competitive benefits, enabling some groups to dominate by force of numbers, conversion and immigration. In other environments, the mind-reading gadget is much less important. Societies in Oceania and Native Central America, for example, treat the mind as an ‘opaque container’, rarely invoking actors’ beliefs and intentions when interpreting their behaviour.

In the case of genetic evolution, the variants in the Darwinian process are produced by genetic mutation. They survive – they’re passed on to future generations – by genetic replication. But in the case of cultural evolution, the variants are made by innovation or ‘developmental mutation’, taught and passed down the generations by social interaction. Changes in the local environment, such as new games, technologies and child-rearing practices, produce differences in the sorts of thinking machines that children acquire. Successful new gadgets spread because people with the new device end up with more pupils – they pass on their skill to a larger number of people – than people with the original model.
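
How much difference a few extra pupils can make is easy to underestimate, so here is a minimal Python sketch of the arithmetic. The assumptions are mine and purely illustrative: every learner has exactly one teacher, a person with the new gadget teaches `pupils_new` learners, a person with the original model teaches `pupils_old`, and the function name and numbers are hypothetical.

```python
def gadget_frequency(p0, pupils_new, pupils_old, generations):
    """Share of learners carrying the new gadget, generation by generation."""
    shares = [p0]
    p = p0
    for _ in range(generations):
        taught_new = p * pupils_new          # learners taught the new gadget
        taught_old = (1.0 - p) * pupils_old  # learners taught the original model
        p = taught_new / (taught_new + taught_old)
        shares.append(p)
    return shares


# One person in 100 starts with the new gadget and teaches 3 pupils
# for every 2 taught by someone with the original model.
shares = gadget_frequency(p0=0.01, pupils_new=3, pupils_old=2, generations=20)
print(round(shares[0], 3), round(shares[-1], 3))
```

Under these assumptions the gadget goes from a rarity to the norm within 20 rounds of teaching, with no change in anyone’s DNA. If the two kinds of teacher have the same number of pupils, the share stays where it started.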

The idea that our minds are filled with cognitive gadgets builds on research by anthropologists, biologists and economists. This work shows that many human beliefs and preferences, such as a preference for large or small families, are shaped by cultural evolution. But my call is for something more radical, an embrace of cultural evolutionary psychology. This approach suggests that culture shapes not only the products or artefacts of thought but the very mechanisms that allow us to think in the first place.

‘Gadget’ seems like a good word for a thinking machine designed by cultural evolution. Unlike instincts, gadgets are made by people rather than genes. And although gadgets are small devices, they can make a big difference. The blades of a Magimix depend on the power of the central motor, and Alexa depends on the power of linked computers. Similarly, distinctively human cognitive mechanisms such as imitation could depend on the power of ancient thinking machines: on processes of attention, learning, memory and inhibitory control that we genetically inherited from our distant ancestors. Cognitive gadgets are built by and from this ancient kit. But a neat cognitive gadget such as imitation can still make our lives a lot easier – it enables us to throw a ball, drop a curtsey or make a face, just by seeing it done.

To be fair, evolutionary psychology did something crucially important. It showed that viewing the mind as a kind of software running on the brain’s hardware can advance our understanding of the origins of human cognition. Now it’s time to take a further step: to recognise that our distinctively human apps have been created by cultural, not genetic, evolution.

The cultural evolutionary psychology I’m advocating opens up exciting new paths for future research. Because cultural evolution is faster than genetic evolution, we can discover how new cognitive gadgets get built by looking at contemporary and historical populations. We don’t have to rely on stones and bones to guess how cognitive mechanisms were put together by genetic evolution in the Pleistocene past. Through laboratory experiments and field studies, we can watch them being constructed in people alive today. Research on the effects of maternal imitation and conversation about the mind isn’t just looking at how children’s minds are fine-tuned by experience, or how inborn mental mills are fed with grist. It has the potential to show us how new mental machines are assembled from old parts through social exchange.

The cognitive gadget perspective might even help us grapple with some of the social and moral debates that swirl around technology. How will the mind cope with a life lived ever more in virtual reality, on social media or among humanoid robots? Standard evolutionary psychology claims that we won’t cope very well. We have ‘Stone Age minds’, remember, built for hunting, foraging and physical contact with a small number of people. Our mental equipment, already outdated by some 300,000 years, is liable to crash, leaving us mystified and at the mercy of artificial intelligence.

But cultural evolutionary psychology challenges this dystopian vision. Cognitive gadgets are agile, because they are coded in our culture-rich environments, and downloaded in the course of childhood. So if the gadgets theory is right, new technologies could stimulate rapid cultural evolution of our mental faculties. Virtual reality might give us prodigious capacities for imitation, exceeding even those of the professional mimics and sportspeople who are celebrated for their outstanding skills. Social media could endow us with new ways of thinking about tribes and community relations. Living and working among robots could transform our everyday conceptions of how minds work, eroding the distinction between the mental and the mechanical, so that the ‘uncanny valley’ becomes a familiar pastoral scene. In the right social and economic environments, our already ‘Space Age minds’ can sprout new gadgets, refine old ones, and move nimbly into whatever the future holds.