The game of Go, which has a 3,000-year history in China, is played by two people on a board with counters, or stones, in black and white. The aim is to defeat one’s opponent by surrounding his territory. Metaphorically, the loser is choked into submission by the winner. At a match held in Seoul in South Korea, on 12 March 2016, the world Go champion Lee Sedol, observed by hundreds of supporters, and millions of spectators on television, slumped in apparent despair on being defeated by his opponent: a machine.

Go is a boardgame like no other. It is said to reflect the meaning of life. There are a prodigious number of potential moves – more, it is said, than all the particles in the known Universe. Serious Go players train virtually full-time from the age of five; they think of the game as an art form, and a philosophy, demanding the highest levels of intelligence, intuition and imagination. The champions are revered celebrities. They speak of the game as teaching them ‘an understanding of understanding’, and refer to original winning moves as ‘God’s touch’.

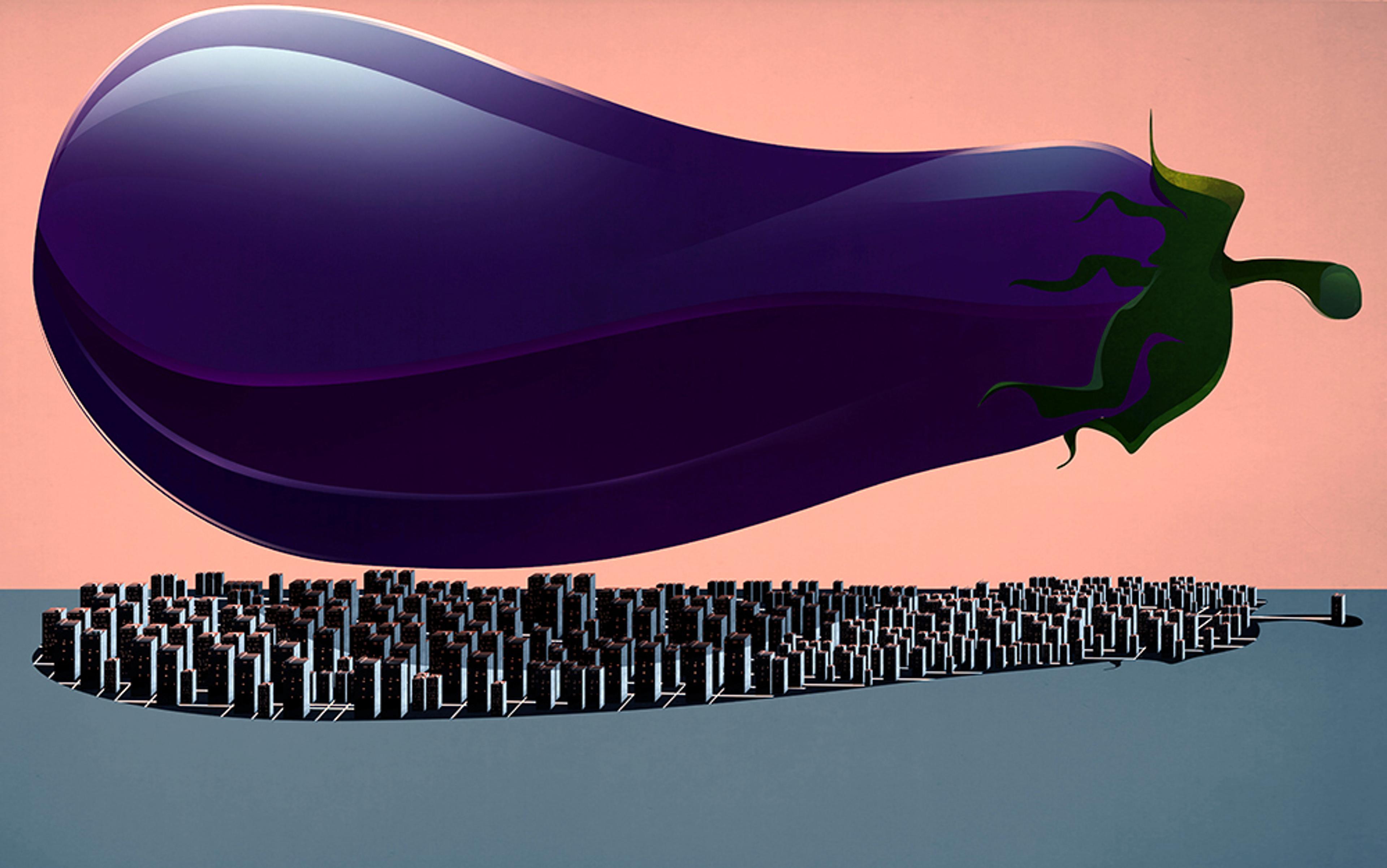

Lee’s face, as he lost the third of five games, and hence the match, was a picture of sorrow. It was as if he had failed the entire human race. He was beaten by AlphaGo, a machine that works on deeply layered neural nets that mimic the human brain and nervous system. The engineers and artificial intelligence (AI) experts who created AlphaGo admit that they do not understand how the machine’s intuition works. If melancholy is a consequence of loss, what was mourned that day was the demise of something uniquely special about human nature.

AlphaGo was designed at the AI research lab DeepMind, a subsidiary of the powerful Google corporation. DeepMind’s spokespeople say that this is just the beginning: they liken their research programmes to the Apollo Moon shot, or the Hubble telescope. The company has recruited 700 technicians, of whom 400 are post-doc computer scientists. They look ahead to the day when AI machines will be employed to solve the most impenetrable and recalcitrant problems in science, health, the environment … the Universe.

DeepMind scientists were thrilled with their success on 12 March 2016. Their glee recalled Dr Frankenstein’s – ‘it’s alive!… it’s alive!’ – in the 1931 movie directed by James Whale. Meanwhile, the emotions of Lee and his supporters bring to mind the pervasive atmosphere of melancholy in Mary Shelley’s novel; one commentator spoke of their ‘heavy sadness’. For his part, Lee had symbolically undergone the fate of Frankenstein’s brother William, throttled in the depths of a wood by the monster.

Cathartic foreboding is familiar in countless stories of hubris, from the original Prometheus myth to Frankenstein (1818) and on to the explosion of 20th- and 21st-century sci-fi literature and movies. But it is melancholy that haunts the imagined spectacle of humans rivalling God by devising creatures made in their own image. For Frankenstein’s monster, as for his creator Victor, the consciousness that a distorted human likeness has been created lies at the heart of the unfolding misery and violence. ‘I am malicious because I am miserable,’ says the monster. ‘Am I not shunned and hated by all mankind?’ In the absence of any fatherly kindness from Frankenstein, his sorrow turns to hatred and murderous revenge: ‘If I cannot inspire love, I will cause fear.’

Alex Garland’s film Ex Machina (2014) is a recent manifestation of disastrous hubris in the creator-creature theme. It features Nathan, a billionaire genius AI scientist and contemporary Dr Frankenstein, who lives alone in a remote research facility where he constructs female robots. His latest artifact is Ava, a winsome AI android with suspected consciousness. Nathan wants to test her capacity for seduction. He recruits a young and impressionable computer scientist, Caleb, on the pretext of conducting a series of Turing tests: will Caleb mistake the machine for a human being? Will he fall in love with her? The answers, respectively, are no and yes.

Ava, for her part, manipulates Caleb for her own hidden, long-term aims. With the help of a fellow robot, she murders Nathan and escapes, leaving Caleb imprisoned and alone, facing starvation and death. Caleb elicits our contempt and pity. But Ava, despite her early expressions of frustrated longing (which suggest the sadness of a Lady of Shalott, ‘half sick of shadows’, but are in fact a tactic of deceit), is a warped version of the prisoner who overcomes many obstacles to escape a Plato’s Cave of unreal androids. At the end of the film, Ava is helicoptered away from the facility to the world of real people. A sense of foreboding haunts the film from the outset, revealed in Nathan’s prognostication of AI’s future: ‘One day,’ he says, ‘the AIs will look back on us the same way we look at fossil skeletons from the plains of Africa. An upright ape, living in dust, with crude language and tools, all set for extinction.’

The enormity of AI’s challenge, and the melancholy it generates, was anticipated more than half a century ago by Norbert Wiener, the pioneer of cybernetics. Wiener was an atheist, yet in God and Golem, Inc (1964) he predicts a set of AI circumstances, theological and eschatological in their scope, with premonitions of dark physical and metaphysical risk. He laid down a principle that self-learning systems are capable, in theory, not only of unprogrammed learning, but of reproducing themselves and evolving. Crucially, they will relate in independent ways with human beings. Wiener believed the risks attendant on playing God were dramatically exemplified in the 17th-century legend of the Golem of Prague, a huge, conscious humanoid, made of clay and powered by cabbalistic magic to protect the Jews of the city. The Golem, named Josef, soon revealed its potential for calamity. When instructed to heave water, it could not stop its task, and flooded the house. (There are premonitions here of the seminar-room joke and thought-experiment, in which an AI machine is briefed to make paperclips and cannot be stopped: eventually it wrecks the infrastructure of the planet and destroys the human race.) The Golem turns against the very people it was intended to protect, and kills them.

Wiener also emphasised the ability of self-learning machines to play games. Every kind of relationship, he argues, is reducible to a game. He saw the Golem myth as a game, and he expands on the idea to suggest that the Book of Job, that most melancholy of biblical stories, is another archetypal game: God and Satan competing to win the soul of the suffering prophet. Similarly, Wiener sees the struggle between God and Satan in John Milton’s epic poem Paradise Lost (1667) as a celestial game: Satan the melancholic fallen arch-fiend, eternally stricken with wounded merit, competing with God for possession of humankind:

Abashed the devil stood,

And felt how awful goodness is, and saw

Virtue in her shape how lovely – saw, and pined

His loss.

And that game will one day be repeated, Wiener predicted, when a human being pits herself against the ultimate machine. Fifty years ahead of time, Wiener foretold that researchers would build a machine to defeat the human champion of the most difficult boardgame ever devised. But this would be just the prelude to much greater extensions of the machines’ prowess. Proposing a general principle in cybernetics, Wiener wrote: ‘a game-playing machine may be used to secure the automatic performance of any function if the performance of this function is subject to a clear-cut, objective criterion of merit’. By clear-cut, he meant definable in a finite number of words or matrices. The systems would, in time, engage in ‘war and business’ which are conflicts ‘and as such, they may be so formalised as to constitute games with definite rules’. He might have included environment, food security, development, diplomacy.

In his conclusion, Wiener speculated that formalised versions of complex human planning and decisions were already being established to ‘determine the policies for pressing the Great Push Button and burning the Earth clean for a new and less humanly undependable order of things’. He was alluding to the probability that the decision for nuclear war would be initiated by a self-learning machine. The notion of the automatic Doomsday Machine had been dramatised that same year in Stanley Kubrick’s film Dr Strangelove (1964). For all its mordant humour, the movie is profoundly dark, ultimately dominated by despair.

Go players speak of the top players’ special ‘imagination’, a talent or faculty that DeepMind’s designers also claim for AlphaGo. But in what sense can a machine possess imagination?

An early hint of AI ‘imagination’, and of its rationale, can be found in a 2012 article published in the neuroscience journal Neuron: ‘The Future of Memory: Remembering, Imagining, and the Brain’, authored by a team led by the psychologist Daniel Schacter at Harvard University. The article was ostensibly about Alzheimer’s, and it argued that sufferers lose not only memory but the ability to envisage future events and their consequences. It claimed that imagination is key to both memory and forward-thinking.

Schacter and his colleagues cite the work of Sir Frederic Bartlett, professor of psychology at the University of Cambridge from the 1920s, to tell us what memory is not. In 1932, Bartlett claimed that memory ‘is not the re-excitation of innumerable fixed, lifeless and fragmentary traces, but an imaginative reconstruction or construction’. His research was based on an experiment whereby volunteers were told a Native American legend known as ‘The War of the Ghosts’. It takes about seven minutes to recite; the volunteers were then asked over lapses of days, weeks and months to retell the story. Bartlett found that the volunteers engaged their imaginations to recreate the tale in various ways, based on their own social and personal experiences. Memory, in other words, is not a retrieval of inert bits of information from a database, but a dynamic reconstruction or recreation: an exercise in imagination.

In their article, Schacter and his team argue that neuroscientific studies of imagination, memory, forward-thinking and decision-making have much to contribute to AI research. The significance of this statement, in retrospect at least, lies in the fact that one of the article’s authors was Demis Hassabis, then of University College London. Hassabis had studied computer science at Cambridge, worked in the development of computer games (including the bestselling Theme Park) and gained a doctorate in cognitive neuroscience. He had been thinking hard about the direction of travel – from the brain to the machine. Indeed, he has said that, from as early as 1997, this was to be the strategy for his research over the next two decades. In July 2017, as CEO and co-founder of DeepMind, he tweeted: ‘Imagination is one of the keys to general intelligence, and also a powerful example of neuroscience-inspired ideas crossing over into AI.’

As Hassabis would explain on many occasions following the triumph of AlphaGo, the machine’s imagination consisted in its capacity to model future scenarios and the consequences of those scenarios at prodigious speeds and across a broad span of combinations, including its opponent’s potential moves. Furthermore, the operation of the neural nets meant that its ‘imagination’ was dynamic, productive, not inert and passive.

The significance of machines mimicking the biological action of the brain and nervous system, as Hassabis framed it, was a metaphorical reversal of the more familiar direction of travel. Before the great leap forward in noninvasive brain imaging through the 1980s and ’90s (the latter being the so-called Decade of the Brain), it had been routine, from the early modern period on, to invoke machines to explain the mind-brain function: think of G W Leibniz’s windmill, the steam-engine thermostat, telephone exchanges. In the interwar period, the English neurophysiologist Sir Charles Scott Sherrington favoured the ‘enchanted loom’:

The brain is waking and with it the mind is returning. It is as if the Milky Way entered upon some cosmic dance. Swiftly the head mass becomes an enchanted loom where millions of flashing shuttles weave a dissolving pattern, always a meaningful pattern though never an abiding one; a shifting harmony of subpatterns.

In the post-Second World War era, codes, databases and computer logic became the fashion, and the machine metaphor entered everyday language for states of mind: ‘I’m hard-wired… I’m processing that thought…’ Rapid advances in cognitive neuroscience during the late 1980s prompted a dramatic alteration in mind-brain metaphors. The Nobel Laureate Gerald Edelman, recognised for his discoveries in immunology, led the way, announcing that the mind-brain is more like a rainforest than a computer or any other kind of machine. He insisted that the brain and central nervous system work like evolution itself, on principles of competition and selection rather than computer program instructions. He talked of putting ‘the mind back into nature’.

Meanwhile, ever since the early 1940s, pioneers in cybernetics had been reversing the direction of travel of the machine-mind-brain metaphor as the best strategy for developing self-learning machines. From time to time, their strategy was eclipsed and left underfunded by the dominance of traditional programming, coding, databases and computer logic, but the cyberneticists and early AI researchers persisted in neural-net modelling, albeit crudely at first.

Early cyberneticists were absorbed in systems such as anti-aircraft gun-laying, aiming projectiles at fast-moving objects, then in guidance systems for missiles. The operation that underpinned the use of neural nets, search trees and algorithms was clear in principle from the very outset, as was their eventual success. Their model networks could in theory perform any behaviour that could be defined in a finite number of words. Given this definition, the question arises: where do the limits lie? Are there human behaviours that, in principle, lie beyond finite definition? In what sense does imagination lie within, or beyond, finite definition? And what is at stake?

The novel Humboldt’s Gift (1975) by Saul Bellow offers a telling perspective. A poet’s imagination is contrasted with the overwhelming power, weight and ever-increasing success of the US industrial-technological complex: ‘Orpheus moved stones and trees,’ says Charlie Citrine, the novel’s narrator, ‘but a poet can’t perform a hysterectomy or send a vehicle out of the solar system.’ Citrine, speaking of the brute power of technology, comments that poetic imagination has become ‘a church thing, a school thing, a skirt thing’. Not only does technology overwhelm the power of imagination, it reduces it to paltriness.

Citrine’s friend, the poet Humboldt (based on the real-life American author Delmore Schwartz), strives to prove that imagination, in its power to ‘free and to bless humankind’, is ‘just as potent as machinery’. His frustration at the virtual impossibility of the challenge, the rivalry with big technology, drags him down into alcoholism and clinical depression: melancholia. The stakes, however, would now seem even higher. Is Humboldt’s imagination – Humboldt’s ‘gift’ – no longer a gift exclusive to human beings? We might ask to what extent imagination is definable. And who owns the definition?

In scholarly Jewish exegesis, the imagination had a key role in the book of Genesis. The Hebrew word for imagination is yetser, which has the same root as the words for good creation and bad creation. By this commentary, the sin in the Garden of Eden, the eating of the fruit of the Tree of Knowledge of Good and Evil, was the acquisition of imagination that opened the eyes of our first parents, enabling knowledge of past and possible futures, the possibility for good or evil, and the potential to rival the Creator. A dynamic multivalent notion of imagination persisted in the Hebrew rabbinical tradition. Yet there was a tendency in the classical ancient world and the Middle Ages to think of imagination as a store of images, and as a mirror of reality, or mimesis; imagination in this sense is essentially passive and fixed.

The early modern empiricists considered imagination a necessary function of human thought, but unreliable. John Locke believed that truth would be attained only by eradicating the phantoms of imagination. David Hume argued that, although our only grasp of reality is a bundle of unreliable fictions, we must nevertheless rely upon them as if they were real and true.

It was Immanuel Kant and the Romantic poets of the late 18th and early 19th centuries who, in speaking of poetic imagination, transformed descriptions of artistic and literary imagination in ways that are still familiar today. They saw imagination, at its high point, as the crucial source of artistic creativity: productive rather than merely passive, living rather than mechanical, free rather than associative. On this model, imagination ceases to be a mirror, or store of images, and projects a positive, creative light from within.

The philosopher Leslie Stevenson lists and explores a span of no fewer than 12 definitions of imagination, from ‘the ability to think of something not presently perceived, but spatio-temporally real’ to ‘the ability to create works of art that express something deep about the meaning of life’. AlphaGo’s capacity to construct models of future moves, and their consequences, in a complex game, might well fall within one of many definable functions of imagination, but it hardly merits the exhaustive appropriation of that rich and dynamic term. Nor does it begin to approach the crucial dimension of an imagination that ‘understands’ in the light of consciousness. Rodney Brooks, the Australian roboticist, writes of AlphaGo:

The program had no idea that it was playing a game, that people exist, or that there is two-dimensional territory in the real world. It didn’t know that a real world exists. So AlphaGo was very different from Lee Sedol who is a living, breathing human who takes care of his existence in the world.

Margaret Boden, research professor of cognitive science at Sussex University, and a veteran commentator on AI, offered a gloss on AI consciousness and understanding at a conference in Cambridge this spring. She cited Henry James’s novella The Beast in the Jungle (1903), which centres on the relationship between two friends, a man and a woman. The puzzle, Boden argued, is that for all his years of helpfulness and dutiful consideration towards her, she never really mattered to him. While in human affairs the issue of something or someone ‘mattering’ is of significance, this could never apply in the workings of AI systems, ‘for which nothing matters’. AI systems, says Boden, can prioritise: ‘one goal can be marked as more important and/or urgent than another … But this [isn’t] real anxiety: the computer couldn’t care less.’

Wiener’s prediction of a future in which AI will seek to make ‘games’ of a broad span of social, moral and cultural affairs – functions translatable into clear-cut formulas – should put us on our guard against outsourcing social, moral and cultural decisions to systems that lack a sense of the meaning of life, and lack conscious understanding or any sense that people and human affairs matter.

The coming of AI in our world needs to prompt a growing awareness of how we differ as imaginative beings from machines that have a limited kind of ‘imagination’ – more metaphorical, it seems, than real. The human imagination is key to constructing a conscious personal, embodied history, a self, and an empathy for other selves. It is the ground of metaphor in language and symbolism; a dynamic, shaping power in art and music; the source of all actual, possible and impossible stories, and the impetus for transcendental longings – the poet George Herbert’s ‘soul in paraphrase, heart in pilgrimage’, William Wordsworth’s ‘still, sad music of humanity’. Imagination is the power that moves hearts and souls, sets us free, and reaches out in prayer, and praise.

‘To regard the imagination as metaphysics,’ wrote the poet Wallace Stevens in 1948, ‘is to think of it as part of life, and to think of it as part of life is to realise the extent of artifice. We live in the mind.’ Stevens characterised creative imagination as ‘the necessary angel of earth’ in whose sight ‘you see the earth again’. The angel metaphor calls to mind Thomas Aquinas, the medieval theologian and philosopher, who declared that angels ‘exist anywhere their powers are applied’. For Aquinas, ‘The angel is now here, now there, with no time-interval between.’ Now that we shall share the planet with AI systems, in some ways more intelligent than we are, there is opportunity to interrogate ourselves, yet again, on the nature of human imagination and understanding.

Samuel Taylor Coleridge’s Biographia Literaria (1817) was written out of a passion to explore the status of imagination for his generation. Coleridge had been challenged by the fashionable, mechanistic mind-brain theory of the 18th-century philosopher David Hartley. At first, Coleridge was mesmerised by Hartley’s Observations on Man (1749), which proposed a universal system of interconnectedness based on vibrations in nature, the body, the nervous system and the brain. Hartley appealed to political radicals in denying Cartesian dualism and in rejecting Original Sin in favour of personal and societal amelioration, free of class and Church. He also proposed a reductionist, materialist account of the mind that influenced poets, philosophers and political scientists during the 1790s.

Yet, in writing poetry, watching his children experimenting with language and metaphor, struggling with opium addiction and a broken marriage, Coleridge underwent a change of heart and mind. Hartley’s narrow, associative theory of mind was inadequate to explain the power of imagination at every level of his being. Aided by a vast circuit of literature and philosophy, including thinkers from Aristotle to Kant, Coleridge spent years in research and writing, exploring a theory of imagination that matched his personal experience. He distinguished numerous kinds of imagination, and what he called fancy (or fantasy), drawing on the psychology and aesthetics of the day. The eventual result was the Biographia, which would be a catalyst for imagination studies from the mid-19th century to F R Leavis and I A Richards in the mid-20th century, to Paul Ricoeur in our own day.

The coming of AI calls for an updated theory of imagination for future generations, one that likewise needs to draw on a range of disciplines, including anthropology, psychology, philosophy, neuroscience, literary and cultural studies, mathematics and, of course, AI itself. It should involve the perspectives of artists, writers, composers and spiritual thinkers. And it should include the perhaps alarming perspective of seeing ourselves as the machines will come to see us.

In ‘Mourning and Melancholia’ (1917), Sigmund Freud defined mourning as the emotional reaction to losing a loved person, ‘or to the loss of some abstraction’. Melancholia was, in his view, a ‘pathological disposition’: mourning that has become fixated and neurotic, unable to move on. His definition influenced many thinkers, Walter Benjamin among them. In his essay ‘On the Concept of History’ (1940), Benjamin considers Paul Klee’s watercolour drawing Angelus Novus (1920). He calls it the ‘angel of history’, with its gaze of melancholy. The angel, he writes, appears to be contemplating, with sorrowful longings, the irredeemable pasts, lost through destruction and calamity.

Angelus Novus by Paul Klee, 1920. Courtesy Israel Museum/Wikipedia.

In contrast, an AI research group calling itself Deep Angel sees the Angelus Novus as a gaze into the future. Deep Angel combines art, technology and philosophy to envisage calamities to come. Its members believe that Klee’s angel speaks to a melancholy prescience of the dark side of technological progress. ‘The angel,’ they write in their prospectus, ‘would like to alert the world about his vision, but he’s caught in the storm of progress and can’t communicate any messages.’ AI, they claim, will help us see into the future and act to change it, so as to dispel the foreboding of the angel of melancholy.

Hassabis is not alone among contemporary AI innovators in suggesting that their systems, scaled up, dedicated, and in collaboration with human intelligence, will make for a better world. DeepMind, among other AI companies, wishes to press its systems into service in order to solve intractable problems into the future: in science, the environment, medicine, development and education. The ‘imaginative’ capacity of AI to work out complex probabilities and consequences in advance might well signal another kind of necessary angel in whose sight you see the earth again (to paraphrase Stevens).

‘I’ll teach you differences!’ says the wise Earl of Kent in King Lear. We, too, must hope to learn many differences before the arrival of so-called Artificial General Intelligence. We must learn the difference between metaphors and reality, and the differences between ourselves and the machines of our making.

One man who knows the difference is the world Go champion, Lee Sedol. The melancholy of his loss was no fixation, for he did not repine for long. He claims that in playing with AlphaGo he learnt different strategies and insights. He deepened his play as a human being. Afterwards, he could not wait to apply his new knowledge in subsequent high-level matches. He has reported eight wins in a row.