In 2002, a distinguished historian wrote that the widely told tales of ‘No Irish Need Apply’ signs in the late 19th-century United States were myths. Richard Jensen at the University of Illinois said that such signs were inventions, ‘myths of victimisation’, passed down from Irish immigrants to their children until they reached the unassailable status of urban legends. For more than a decade, most historians accepted Jensen’s scholarship, while opponents were dismissed – sometimes by Jensen himself – as Irish-American loyalists.

In a 2015 story that seemed to encapsulate the death of expertise, an eighth-grader named Rebecca Fried claimed that Jensen was wrong, not least because of research she did on Google. She was respectful, but determined. ‘He has been doing scholarly work for decades before I was born, and the last thing I want to do was show disrespect for him and his work,’ she said later. It all seemed to be just another case of a precocious child telling an experienced teacher – an emeritus professor of history, no less – that he had not done his homework.

But, as it turns out, she was right and he was wrong. Such signs existed, and they weren’t that hard to find.

For years, other scholars had wrestled with Jensen’s claims, but they fought with his work inside the thicket of professional historiography. Meanwhile, outside the academy, Jensen’s assertion was quickly accepted and trumpeted as a case of an imagined grievance among Irish-Americans.

Young Rebecca, however, did what a sensible person would: she started looking through databases of old newspapers. She found the signs, as the Daily Beast later reported, ‘collecting a handful of examples, then dozens, then more. She went to as many newspaper databases as she could. Then she thought, somebody had to have done this before, right?’ As it turned out, neither Jensen nor anyone else had apparently bothered to do this basic fact-checking. Miss Fried has now entered high school with a published piece in the Journal of Social History, and she is not alone in overturning the status quo.

In the 1970s, the top nutritional scientists in the US told the government that eggs, among many other foods, might be lethal. There could be no simpler application of Occam’s Razor, with a trail leading from the barnyard to the morgue. Eggs contain a lot of cholesterol, cholesterol clogs arteries, clogged arteries cause heart attacks, and heart attacks kill people. The conclusion was obvious: Americans need to get all that cholesterol out of their diet. And so they did. Then something unexpected happened: Americans gained a lot of weight and started dying of other things.

The egg scare was based on a cascade of flawed studies, some going back almost a half century. People who want to avoid eggs can still do so, of course. In fact, there are now studies that suggest that skipping breakfast entirely – which scientists have also long been warning against – isn’t as bad as anyone thought either.

Experts get things wrong all the time. The effects of such errors range from mild embarrassment to wasted time and money; in rarer cases, they can result in death, and even lead to international catastrophe. And yet experts regularly ask citizens to trust expert judgment and to have confidence not only that mistakes will be rare, but that the experts will identify those mistakes and learn from them.

Day to day, laypeople have no choice but to trust experts. We live our lives embedded in a web of social and governmental institutions meant to ensure that professionals are in fact who they say they are, and can in fact do what they say they do. Universities, accreditation organisations, licensing boards, certification authorities, state inspectors and other institutions exist to maintain those standards.

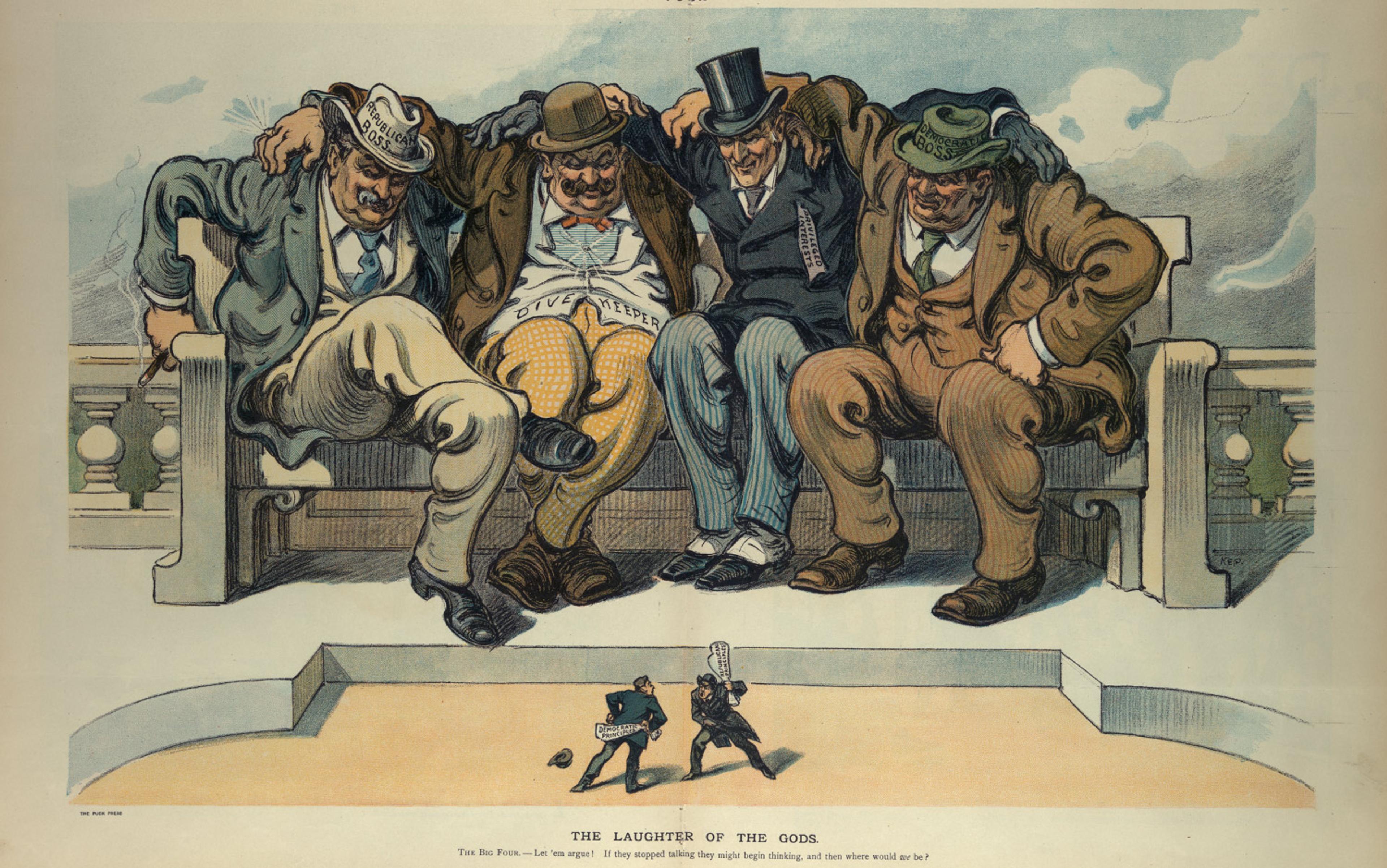

This daily trust in professionals is a prosaic matter of necessity. We trust them in much the same way that we trust everyone else in our daily lives, including the bus driver we assume isn’t drunk or the restaurant worker we assume has washed her hands. This is not the same thing as trusting professionals when it comes to matters of public policy: to say that we trust our doctors to write us the correct prescription is not the same thing as saying that we trust all medical professionals about whether the US should have a system of national healthcare. To say that we trust a college professor to teach our sons and daughters the history of the Second World War is not the same as saying that we therefore trust all academic historians to advise the president of the US on matters of war and peace.

For these larger decisions, there are no licences or certificates. There are no fines or suspensions if things go wrong. Indeed, there is very little direct accountability at all, which is why laypeople understandably fear the influence of experts.

How do experts go wrong? There are several kinds of expert failure. The most innocent and most common are what we might think of as the ordinary failures of science. Individuals, or even entire professions, get important questions wrong because of error or because of the limitations of a field itself. They observe a phenomenon or examine a problem, come up with theories and solutions, and then test them. Sometimes they’re right, and sometimes they’re wrong.

Science is learning by doing. Laypeople are uncomfortable with ambiguity, and they prefer answers rather than caveats. But science is a process, not a conclusion. Science subjects itself to constant testing by a set of careful rules under which theories can be displaced only by other theories. Laypeople cannot expect experts to never be wrong; if they were capable of such accuracy, they wouldn’t need to do research and run experiments in the first place. If policy experts were clairvoyant or omniscient, governments would never run deficits, and wars would break out only at the instigation of madmen.

The experts who predicted an all-out international arms race in nuclear weapons at the end of the 1950s were wrong. But they were wrong at least in part because they underestimated the efficacy of their own efforts to limit the spread of nuclear weapons. President John F Kennedy feared a world of as many as 25 nuclear armed powers by the 1970s. (As of 2017, only 10 nations have crossed this threshold, including one – South Africa – that has renounced its arsenal.) Kennedy’s prediction, based on the best expert advice, was not impossible or even unreasonable; rather, the number of future nuclear powers was lowered with the assistance of policies advocated by those same experts.

Other forms of expert failure are more worrisome. Experts can go wrong, for example, when they try to stretch their expertise from one area to another. A biologist is not a medical doctor but, in general terms, a biologist is likely to be relatively better able to understand medical issues than a layperson. Still, this does not mean that anyone in the life sciences is always better informed than anyone else on any issue in that area. A diligent person who has taken the time to read up on, say, diabetes could very well be more conversant in that subject than a botanist.

Finally, there is outright deception and malfeasance. This is the rarest but most dangerous category. Here, experts for their own reasons (usually careerist defences of their own shoddy work) intentionally falsify their results. Such misconduct can be hard to detect precisely because it requires other experts to ferret it out; laypeople are not equipped to take apart scientific studies, any more than they are likely to look closely at a credential hanging on a wall to see if it is real.

Sometimes, experts aren’t experts. People lie brazenly about their credentials. This is the kind of bravura fakery that the real-life ‘Great Pretender’, Frank Abagnale, pulled off in the 1960s, including his impersonations of an airline pilot and a medical doctor, later popularised in the movie Catch Me If You Can (2002).

When actual experts lie, they endanger not only their own profession but also the wellbeing of their client: society. Their threat to expertise comes in both the immediate outcome of their chicanery and the erosion of social trust that such misconduct creates. Most such misconduct is invisible to laypeople because it is so dull. Unlike the dramatic stories of massive fraud that people see in well-known movies such as Erin Brockovich (2000) or The Insider (1999), most of the retractions in scientific journals are over small-bore mistakes or misrepresentations in studies on narrow topics.

Indeed, natural scientists could point out that retractions in themselves are signs of professional responsibility and oversight. The scientific and medical journals with the highest impact on their fields – the New England Journal of Medicine, for example – tend to have higher rates of retractions. No one, however, is quite sure why. It could be due to more people checking the results, which would be a heartening trend. It could also happen because more people cut corners to get into top journals, which would be a depressing reality.

The gold standard of any scientific study is whether it can be replicated or at least reconstructed. This is why scientists and scholars use footnotes: not as insurance against plagiarism – although there’s that, too – but so that their peers can follow in their footsteps to see if they would reach the same conclusions.

This kind of verification assumes, however, that anyone is bothering to replicate the work in the first place. Ordinary peer review does not include rerunning experiments; rather, the referees read the paper with an assumption that basic standards of research and procedure were met.

Recently, a team of researchers set out to do the work of replication in the field of psychology. The results were surprising, to say the least. As The New York Times reported in 2015, a ‘painstaking’ effort to reproduce 100 studies published in three leading psychology journals found that more than half of the findings did not hold up when retested.

This outcome is cause for concern, but is it fraud? Lousy research isn’t the same thing as misconduct. In many of these cases, the problem is not that the replication of the study produced a different result but that the studies themselves were inherently ‘irreproducible’, meaning that their conclusions might be useful but that other researchers cannot rerun those human investigations in the same way over and over.

Cancer researchers ran into the same problems as the psychologists when they tried to replicate studies in their field. Daniel Engber, a writer for Slate, reported in 2016 on a group of biomedical studies that suggested a ‘replication crisis’ much like the one in psychology, and he noted that by some estimates ‘fully half of all results rest on shaky ground, and might not be replicable in other labs’.

Rarely does a single study make or break a subject. The average person is not going to have to rely on the outcome of any particular project in, say, cell research. When a group of studies is aggregated into a drug or a treatment of which that one study might be a part, this itself triggers successive studies looking at safety and efficacy. It is possible to fake one study. To fake hundreds and thus produce a completely fraudulent or dangerous result is another matter entirely.

What fraud does in any field, however, is waste time and delay progress. In much the same way that an error buried early in a complex set of equations can bog down later calculations, fraud or misconduct can delay an entire project until someone figures out who screwed up.

One of the most common errors that experts make is to assume that, because they are smarter than most people about certain things, they are smarter than everyone about everything.

Overconfidence leads experts not only to get out of their own lane and make pronouncements on matters far afield of their expertise, but also to ‘over-claim’ wider expertise even within their own general area of competence. Experts and professionals, just like people in other endeavours, assume that their previous successes and achievements are evidence of their superior knowledge, and they push their boundaries rather than say the three words that every expert hates to say: ‘I don’t know.’ No one wants to appear to be uninformed or to be caught out on some gap in their personal knowledge. Laypeople and experts alike will issue confident statements on things about which they know nothing, but experts are supposed to know better.

The Nobel Prize-winning chemist Linus Pauling, for example, became convinced in the 1970s that vitamin C was a wonder drug. He advocated taking megadoses of the supplement to ward off the common cold and any number of other ailments. There was no actual evidence for Pauling’s claims, but Pauling had a Nobel in chemistry, and so his conclusions about the effect of vitamins seemed to many people to be a reasonable extension of his expertise.

In fact, Pauling failed to apply the scientific standards of his own profession at the very start of his advocacy for vitamins. He began taking vitamin C in the late 1960s on the advice of a self-proclaimed doctor named Irwin Stone, who told Pauling that if he took 3,000 milligrams of vitamin C a day – many times the recommended daily amount – he would live 25 years longer. ‘Doctor’ Stone’s only degrees, however, were two honorary awards from a non-accredited correspondence school and a college of chiropractic medicine.

Pauling wanted to believe in the concept, and he started gobbling the vitamin. Immediately, he felt its miraculous effects. A more impartial observer might have suspected a ‘placebo effect’, in which telling someone that a pill will make them feel better makes them think they feel better. But because of Pauling’s illustrious contributions to science, his colleagues took him seriously. Pauling himself died of cancer at age 93. Whether he got the extra 25 years ‘Doctor’ Stone promised him, we’ll never know.

Sometimes, experts use the lustre of a particular credential or achievement to go even further afield of their area, in order to influence important public policy debates. In 1983, a New York City radio station broadcast a programme about the nuclear arms race. The 1980s were tense years in the Cold War, and 1983 was one of the worst: the Soviet Union shot down a civilian Korean airliner, talks between the US and the USSR about nuclear arms broke down in Geneva, and ABC’s film on a possible nuclear war, The Day After, became the most-watched television programme of that period.

I was a young postgraduate in New York at the time, studying the Soviet Union and looking ahead to a career in public policy. ‘If Ronald Reagan is re-elected,’ the voice on my radio said in a sharp Australian accent, ‘nuclear war becomes a mathematical certainty.’

The speaker was a woman named Dr Helen Caldicott. She was not a doctor of physics or government or international affairs, but a paediatrician from Australia. Her concern about nuclear weapons, by her own recollection, stemmed from reading Nevil Shute’s post-apocalyptic novel On the Beach (1957), set in her native country. As she later put it, she saw no point in treating children for their illnesses when the world around them could be reduced to ashes at any moment.

Caldicott was prone to making definitive statements about highly technical matters. She would discourse confidently on things such as the resilience of US missile silos, civil defence measures, and the internal workings of the Soviet foreign policy apparatus. She resided in the US for almost a decade, and she became a regular presence in the media representing the antinuclear activist community.

The expert community is full of such examples. The most famous, at least if measured by impact on the global public, is the MIT professor Noam Chomsky, a figure revered by millions of readers around the world. Chomsky, by some counts, is the most widely cited living American intellectual, having written a stack of books on politics and foreign policy. His post at MIT, however, was actually as a professor of linguistics. Chomsky is regarded as a pioneer, even a giant, in his own field, but he is no more an expert in foreign policy than, say, the late George Kennan was in the origins of human language. Nonetheless, he is more famous among the general public for his writings on politics than in his area of expertise; indeed, I have often encountered college students over the years who are familiar with Chomsky but who had no idea he was actually a linguistics professor.

Like Pauling and Caldicott, however, Chomsky answered a need in the public square. Laypeople often feel at a disadvantage challenging traditional science or socially dominant ideas, and they will rally to outspoken figures whose views carry a patina of expert assurance. It might well be that doctors should look closely at the role of vitamins in the human diet. It is certain that the public should be involved in an ongoing reconsideration of the role of nuclear weapons. But a degree in chemistry or a residency in paediatrics does not make advocates of those positions more credible than any other autodidact in those esoteric subjects.

The public is remarkably tolerant of such trespasses, and this itself is a paradox: while some laypeople do not respect an expert’s actual area of knowledge, others assume that expertise and achievement are so generic that experts and intellectuals can weigh in with some authority on almost anything. The same people who might doubt their family physician about the safety of vaccines will buy a book on nuclear weapons because the author’s title includes the magic letters ‘MD’.

Unfortunately, when experts are asked for views outside their competence, few are humble enough to remember their responsibility to demur. I have made this mistake myself, and I have ended up regretting it. In a strange twist, I have also actually argued with people who have insisted that I am fully capable of commenting on a subject when I have made plain that I have no particular knowledge in the matter at hand. It is an odd feeling indeed to assure a reporter or especially a student that, despite their faith in me, it would be irresponsible to answer their question with any pretence of authority. It is an uncomfortable admission, but one we can only wish linguistics professors, paediatricians and so many others would make as well.

In the early 1960s, an entertainer known as ‘The Amazing Criswell’ was a regular guest on television and radio shows. Criswell’s act was to make outrageous predictions, delivered with a dramatic flourish of ‘I predict!’ Among his many pronouncements, Criswell warned that New York would sink into the sea by 1980, Vermont would suffer a nuclear attack in 1981, and Denver would be destroyed in a natural disaster in 1989. Criswell’s act was pure camp, but the public enjoyed it. What Criswell did not predict, however, was that his own career would fizzle out in the late 1960s and end with a few small roles in low-budget sexploitation films made by his friend, the legendarily awful director Ed Wood.

Prediction is a problem for experts. It’s what the public wants, but experts usually aren’t very good at it. This is because they’re not supposed to be good at it; the purpose of science is to explain, not to predict. And yet predictions, like cross-expertise transgressions, are catnip to experts.

These predictions are often startlingly bad. In his widely read study on ‘black swan’ events – the unforeseeable moments that can change history – Nassim Nicholas Taleb decried the ‘epistemic arrogance’ of the whole enterprise of prediction:

We produce 30-year projections of social security deficits and oil prices without realising that we cannot even predict these for next summer – our cumulative prediction errors for political and economic events are so monstrous that every time I look at the empirical record I have to pinch myself to verify that I am not dreaming.

Taleb’s warning about the permanence of uncertainty is an important observation, but his insistence on accepting the futility of prediction is impractical. Human beings will not throw their hands up and abandon any possibility of applying expertise as an anticipatory hedge.

The question is not whether experts should engage in prediction. They will. The society they live in and the leaders who govern it will ask them to do so. Rather, the issue is when and how experts should make predictions, and what to do about it when they’re wrong.

In his book Expert Political Judgment: How Good Is It? How Can We Know? (2005), the scholar Philip Tetlock gathered data on expert predictions in social science, and found what many people suspected: ‘When we pit experts against minimalist performance benchmarks – dilettantes, dart-throwing chimps, and assorted extrapolation algorithms – we find few signs that expertise translates into greater ability to make either “well-calibrated” or “discriminating” forecasts.’ Experts, it seemed, were no better at predicting the future than spinning a roulette wheel. Tetlock’s initial findings confirmed for many laypeople a suspicion that experts don’t really know what they’re doing.

But this reaction to Tetlock’s work was a classic case of laypeople misunderstanding expertise. As Tetlock himself noted: ‘Radical skeptics welcomed these results, but they start squirming when we start finding patterns of consistency in who got what right … the data revealed more consistency in forecasters’ track records than could be ascribed to chance.’

Tetlock, in fact, did not measure experts against everyone in the world, but against basic benchmarks, especially the predictions of other experts. The question wasn’t whether experts were no better than anyone else at prediction, but why some experts seemed better at prediction than others, which is a very different question.

What Tetlock actually found was that certain kinds of experts seemed better at applying knowledge to hypotheticals than their colleagues. Tetlock used the British thinker Isaiah Berlin’s distinction between ‘hedgehogs’ and ‘foxes’ to distinguish experts whose knowledge was wide and inclusive (‘the fox knows many things’) from those whose expertise was narrow and deep (‘the hedgehog knows one big thing’). While experts ran into trouble when trying to move from explanation to prediction, the ‘foxes’ generally outperformed the ‘hedgehogs’, for many reasons.

Hedgehogs, for example, tended to be overly focused on generalising their specific knowledge to situations that were outside of their competence, while foxes were better able to integrate more information and to change their minds when presented with new or better data. ‘The foxes’ self-critical, point-counterpoint style of thinking,’ Tetlock found, ‘prevented them from building up the sorts of excessive enthusiasm for their predictions that hedgehogs, especially well-informed ones, displayed for theirs.’

Technical experts, the very embodiment of the hedgehogs, had considerable trouble not only with prediction but with broadening their ability to process information outside their area in general. People with a very well-defined area of knowledge do not have many tools beyond their specialisation, so their instinct is to take what they know and generalise it outward, no matter how poor the fit is between their own area and the subject at hand. This results in predictions that are made with more confidence but that tend to be wrong more often, mostly because the scientists, as classic hedgehogs, have difficulty accepting and processing information from outside their very small but highly complicated lane of expertise.

There are some lessons in all this, not just for experts, but for laypeople who judge – and even challenge – expert predictions. The most important point is that failed predictions do not mean very much in terms of judging expertise. Experts usually cover their predictions (and an important part of their anatomy) with caveats, because the world is full of unforeseeable accidents that can have major ripple effects down the line. History can be changed by contingent events as simple as a heart attack or a hurricane. Laypeople tend to ignore these caveats, despite their importance.

This isn’t to let experts, especially expert communities, off the hook for massive failures of insight. The polling expert Nate Silver, who made his reputation with remarkably accurate forecasts in the 2008 and 2012 presidential elections, has since admitted that his predictions about the Republican presidential nominee Donald Trump in 2016 were based on flawed assumptions. But Silver’s insights into the other races remain solid, even if the Trump phenomenon surprised him and others.

The goal of expert advice and prediction is not to win a coin toss; it is to help guide decisions about possible futures. Professionals must own their mistakes, air them publicly, and show the steps they are taking to correct them. Laypeople must exercise more caution in asking experts to prognosticate, and they must educate themselves about the difference between failure and fraud.

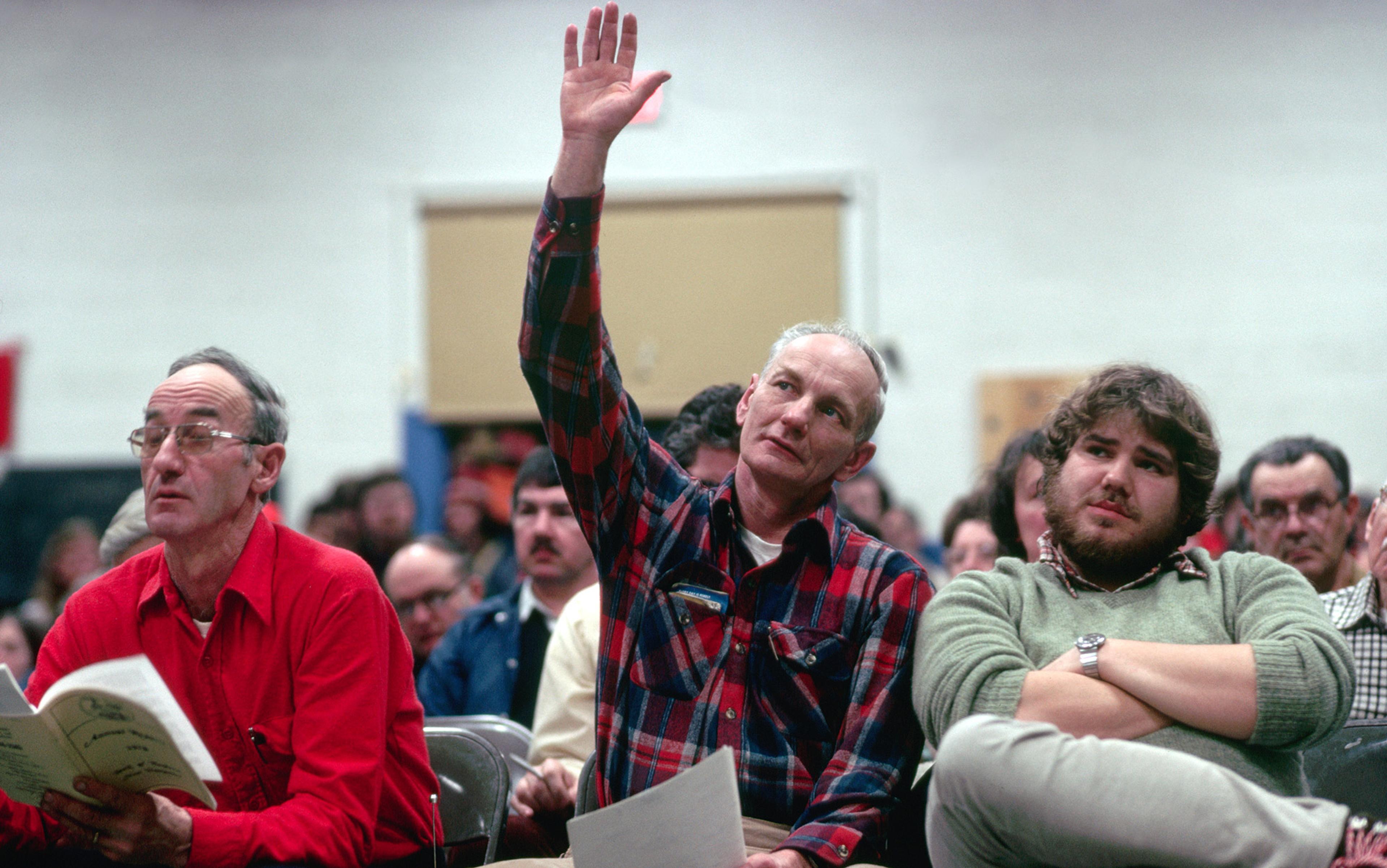

If laypeople refuse to take their duties as citizens seriously, and do not educate themselves about issues important to them, democracy will mutate into technocracy. The rule of experts, so feared by laypeople, will grow by default.

Democracy cannot function when every citizen is an expert. Yes, it is unbridled ego for experts to believe they can run a democracy while ignoring its voters; it is also, however, ignorant narcissism for laypeople to believe that they can maintain a large and advanced nation without listening to the voices of those more educated and experienced than themselves.

Adapted from an extract from The Death of Expertise (2017) by Tom Nichols, published by Oxford University Press ©Tom Nichols.