Would you say that astrology is very scientific, sort of scientific, or not at all scientific? The question was asked in a survey in the United States, and according to Science and Engineering Indicators 2014 ‘slightly more than half of Americans said that astrology was “not at all scientific”’. The rest, almost 50 per cent, were willing to grant it credibility, a higher proportion than in previous surveys. Perhaps that indicates a decline in rational scepticism, but an alternative interpretation suggests itself: many respondents had simply confused astrology and astronomy. It’s a common enough mistake: when the Daily Mail profiled the ailing Patrick Moore not long before his death in 2012, they dubbed him an ‘astrological legend’. One can only hope the error did nothing to hasten the great man’s demise.

As an amateur astronomer myself, I’m used to the mix-up, though to be honest, the confusion doesn’t particularly trouble me. Don’t get me wrong, I am not going to suggest there is any plausibility in the idea that the gravitational field of Jupiter can stimulate life-changing tidal forces in my head. But while the boundary between science and pseudo-science seems clear enough in theory, it’s not always so straightforward in practice. The reason, in many cases, is that both draw on the same recurring set of ideas.

I would like to propose what I shall call the principle of eternal folly. It states that in nearly every era there arises, in some form, nearly every idea of which humans are capable. Certainly, there is the emergence of new ideas: technological ones are the most obvious, but there are others, too. I do think it fair to say that Jane Austen, Beethoven, and even the occasional entrepreneur have invented radically new things. However, the vast majority of ideas are recycled – and it is when we fail to recognise this, as we eternally do, that we commit folly.

Conventional wisdom sees a transition somewhere around the 17th century between ancient ‘science’ and the genuine article we know today. Astrology gave way to astronomy, alchemy to chemistry, and the old doctrines of ‘armchair philosophers’ were finally abandoned in favour of hypotheses that could be empirically tested. Galileo’s experiments on motion are a school-room paradigm of the modern scientific method, while Aristotle’s idea that stones fall because they want to get to the centre of the Earth, and fire rises because it belongs in the sky, is typical of the unscientific approach.

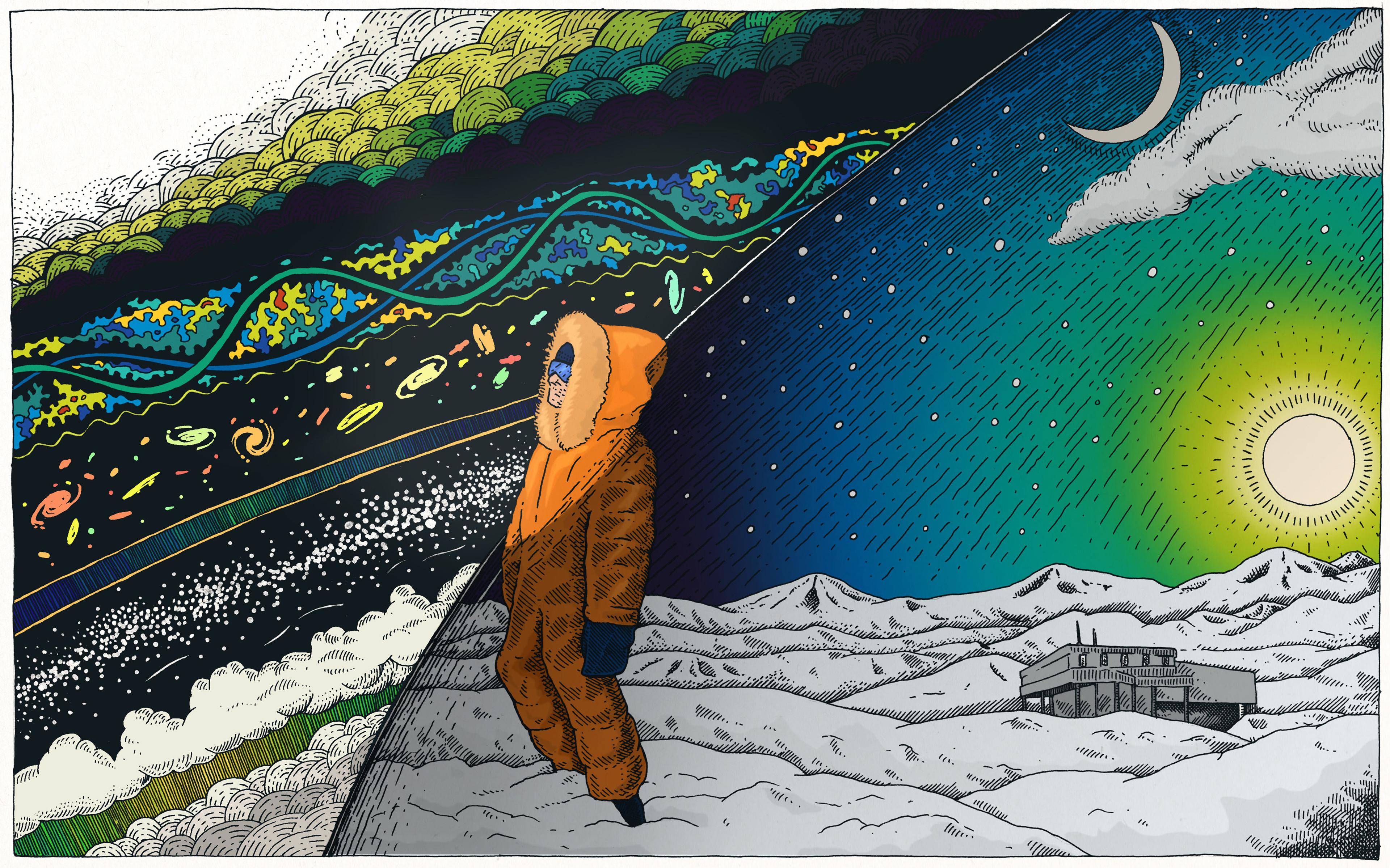

The principle of eternal folly offers a somewhat different picture. In place of history seen as a progression of steps on a ladder, we could instead imagine something more stratified, rather like the escarpments of the Weald of Kent that Charles Darwin wrote about so eloquently. We envisage a cliff-face exposed by erosion; our own age is the topmost layer, but presented to us are the remains of every preceding age, and we are at liberty to pluck out buried fossils if we choose.

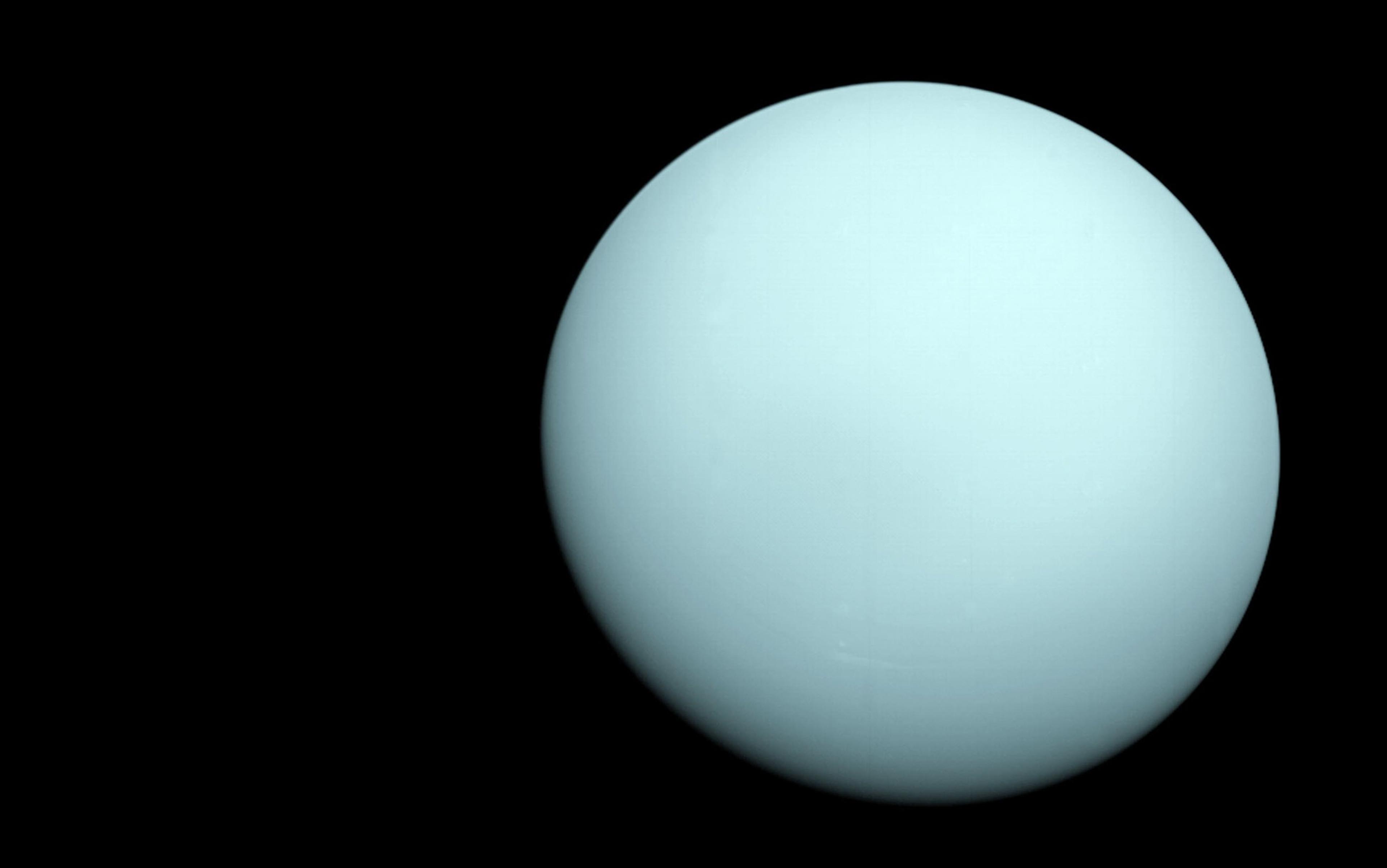

It is not hard to find such fossilised ideas all around us. We still say that the sun rises and sets, or that we cast a glance over a page, though we know that the Earth rotates and rays come into our eyes, not out of them. On every clear night when I set up my telescope to look at the stars, I’m confronted with this stratification of human history. I can view those twinkling lights as balls of hydrogen and helium powered by nuclear fusion, all lying at greatly different distances, or I can see them as fixed patterns on a sphere: constellations such as Libra, my birth sign. It might not be scientific, but is it any more silly than looking at a picture of mountains in Scotland and thinking of it as my homeland?

The 16th-century Danish nobleman Tycho Brahe is remembered for making celestial measurements of unprecedented accuracy that undermined the old Ptolemaic theory, but he also supplied his royal patrons with predictions based on planetary positions, and conducted experiments that might have led to the high levels of mercury found in his remains when they were exhumed in 2010. Do we call him astronomer or astrologer, chemist or alchemist? We could dismiss his astrological charts as meaningless, but his model of the solar system was flawed too: he thought the planets went round the Sun, and all of those together went round the Earth. If being right is the definition of science, then Brahe doesn’t qualify; and if agreement with empirical data is the hallmark, then there are an awful lot of present-day theoretical physicists whose untestable ideas about superstrings or multiverses possibly put them in the same category as the jocular British pop-astrologer Russell Grant.

Whether engaged in astronomy or astrology, Brahe’s mathematical calculations were much the same. We could say, though, that his astrology was based on the idea of supernatural planetary influence, without offering any explanation of what it was or how it might operate, or any evidence of its existence. The only support came from tradition: the belief that ancient people held special knowledge of the universe. In the stratified cliff-face of human thought, this is a fossilised idea that still turns up quite a lot.

It’s an idea that Isaac Newton took very seriously. A few years after publishing his greatest scientific work, the Principia (1687), he began a treatise, ‘On the origin of religion and its corruption’. From his extensive studies of Biblical and other sources, Newton believed that the first religion – preserved by Noah ‘and from him spread into all nations at the first peopling of the Earth’ – involved altars holding sacred fire, representing the Sun as the centre of the universe. In other words, Newton believed that ancient people (going right back to the Garden of Eden) were granted revelatory knowledge about the true nature of the cosmos – knowledge that later became lost, leaving people thinking instead that the Earth was the centre. The first science, according to Newton’s treatise, was the wisdom of priests:

So then twas one designe of the first institution of the true religion to propose to mankind by the frame of the ancient Temples, the study of the frame of the world as the true Temple of the great God they worshipped. And thence it was that the Priests anciently were above other men well skilled in the knowledge of the true frame of Nature & accounted it a great part of their Theology… And when the Greeks travelled into Egypt to learn astronomy & philosophy they went to the Priests.

How did this original knowledge become lost? According to Newton, the ancient temples (the Greek Prytanaea) contained representations of the heavenly bodies, and people began worshipping those, eventually seeing them as gods in their own right, thus lapsing into idolatry. So instead of history being a steady march of intellectual progress, Newton saw it as a process of loss and decay; in the Principia, he had finally been able to rediscover what the first priests must have known anyway.

Nowadays, we see Newton’s writings as falling into three main areas: scientific, religious and alchemical. Newton himself presumably didn’t see such a split: his manuscripts frequently contain mathematical notes in the middle of theological treatises. But the contemporary division is clear: the papers judged scientific are a University of Cambridge prize possession, while the rest went up for auction in 1936 and were mostly acquired by two men. The Jewish polymath Abraham Yahuda got the bulk of the religious papers, which now reside in the National Library of Israel, while the alchemical ones were bought by John Maynard Keynes.

In a lecture titled ‘Newton, the Man’ (1942), Keynes tried to fathom the ‘queer collections’ left by a man whom he considered ‘Copernicus and Faustus in one’. For him, ‘Newton was not the first of the age of reason. He was the last of the magicians.’ He had ‘one foot in the Middle Ages and one foot treading a path for modern science’.

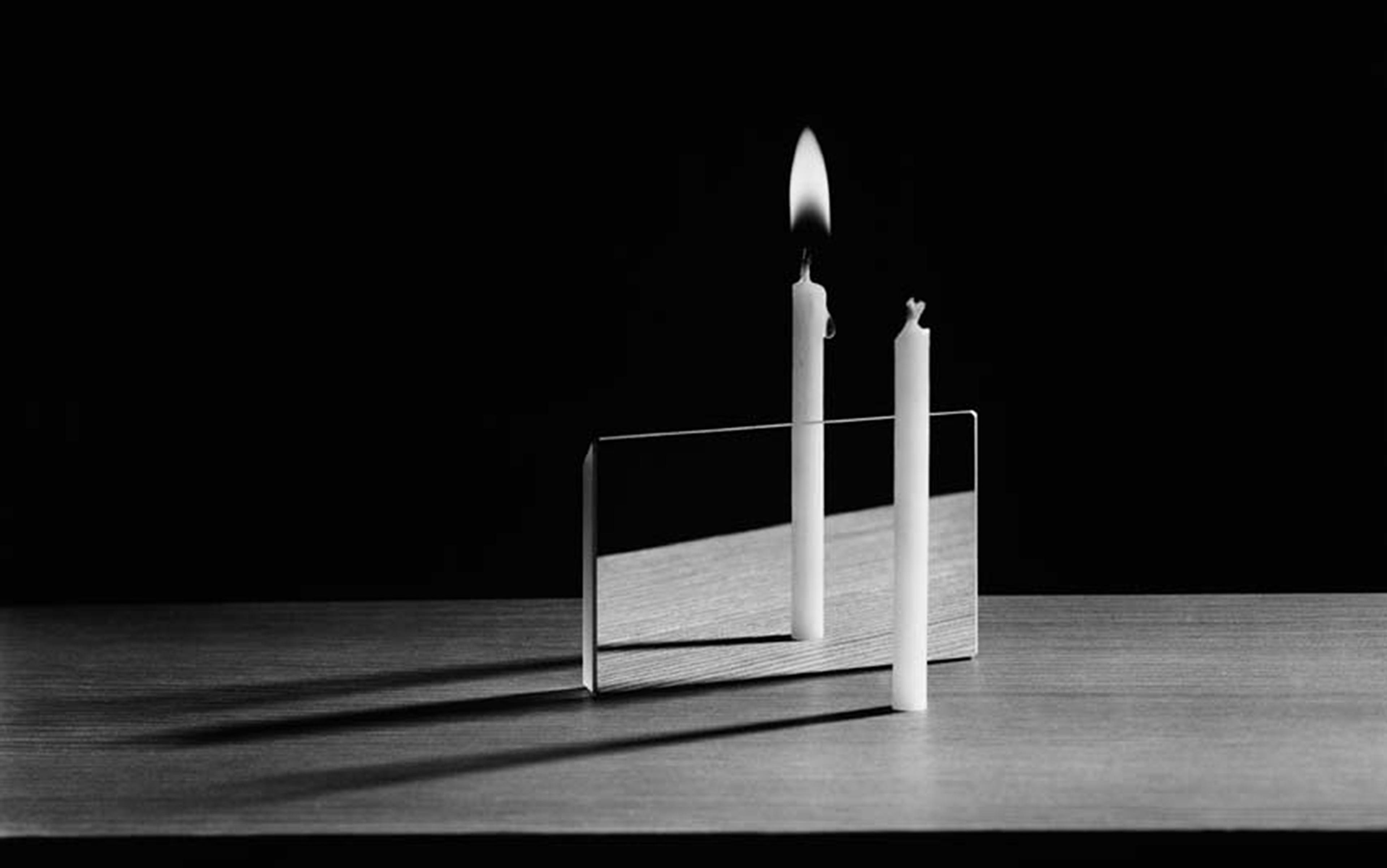

Keynes took issue with the 19th-century image of Newton as supreme rationalist, and replaced it with another, based on that familiar assumption of a clear division between ancient magic and modern science. Yet this brought a new contradiction. According to Keynes, Newton’s greatest gift was intuition; the ability, for instance, to realise that a spherical mass could be treated as a point, even before he could derive the mathematical proof. Intuition, however, could be considered revelation by a more secular name, and in that respect Newton was by no means the last magician: his gift is one we willingly attribute to discoverers and innovators of all kinds – as long as their guesses are right.

Newton’s wrong guesses are, however, as interesting as his correct ones. A well-known example is his conviction that light is made of particles, not waves. What he had in mind were solid ‘corpuscles’, not the photons of modern physics, but it’s still tempting to see him as having been half right. And in trying to explain the splitting of white light into a spectrum, he came up with the beautiful notion that the width of the coloured bands matches the mathematical proportions of a musical scale. People had traditionally counted no more than five colours in a rainbow, but for his theory to work Newton needed more, so he introduced two ‘semitones’, orange and indigo, and we’ve been counting seven colours in a rainbow ever since. Generations of schoolchildren have learned mnemonics that colour-code light, such as ‘Richard Of York Gave Battle In Vain’, which have as much to do with physical reality as the signs of the zodiac.

Newton’s harmonious vision was a fossil from a very deep layer of intellectual history: the doctrines of the semi-legendary Pythagoras who, apart from discovering that theorem about the square on the hypotenuse, supposedly also had a leg made of gold and could appear in two different places at once. The Pythagorean view that ‘all is number’, and that the motion of the planets was linked to musical principles (the ‘harmony of the spheres’), was also endorsed by the German mathematician Johannes Kepler, whose Harmonices Mundi (‘The Harmony of the World’, 1619) tried to explain the solar system in terms of music and geometry, and correctly proposed what we now call Kepler’s third law of planetary motion.

Galileo expressed the Pythagorean spirit in The Assayer (1623), where he called the universe a great book ‘written in the language of mathematics’, and modern physicists sustain the tradition. The great British theorist Paul Dirac, asked to explain what an electron was, reputedly wrote an equation on the blackboard, pointed to a letter in it and said, ‘That’s an electron.’ The standard model of elementary particles, brilliantly confirmed by accelerator experiments, grew out of studies of ‘symmetry groups’, an area of mathematics related to Kepler’s geometrical exercises. In each case, the idea was the same: start with a concept of mathematical symmetry and try to make it match reality.

The harmony of the spheres also has its counterpart in modern-day superstring theory, which supposes particles to correspond to those same vibrations that captivated the Pythagoreans, though, after more than 30 years of intensive study, the theory has yet to make a single prediction borne out by experiment.

Viewed in this light, Newton’s misguided intuition regarding the rainbow puts him in exactly the same camp as so many other people from all ages who have struggled to make messy reality fit beautiful theory, and have on occasion hit the jackpot. But for Keynes, what placed one of Newton’s feet firmly on the pre-scientific side of the line was his cast of mind: ‘His deepest instincts were occult, esoteric, semantic.’

‘Occult’ literally means hidden, but has various meanings in relation to Newton. On the one hand, there are his writings on subjects such as the Philosopher’s Stone, or the dating of the Apocalypse. Then there is his secretive and, according to Keynes, ‘neurotic’ personality: ‘a paralyzing fear of exposing his thoughts’. But there is also his famous comment in the Principia rejecting ‘hypotheses, whether metaphysical or physical, whether of occult qualities or mechanical’. An ‘occult force’, in Newton’s terms, was any hidden principle not directly observable in phenomena, and his opponents claimed that his version of gravitation was itself an occult force since it assumed some mysterious ‘action at a distance’. An analogous situation was thought by some to arise in quantum theory, leading to what Albert Einstein allegedly disparaged as ‘spooky action at a distance’.

Occultism of a more literally spooky variety has likewise recurred over scientific history. A notable instance is the ‘psychic force’ proposed in the 19th century by the British chemist William Crookes. Crookes first made his name by discovering the element thallium, and is chiefly remembered for experiments where he applied high voltages to tubes containing gases at very low pressure. These were among the earliest cathode-ray tubes: his work was the precursor of fluorescent lighting and television. He was also a pioneer in spectroscopy and radioactivity before announcing his discovery of a dubious new force in the Quarterly Journal of Science in 1874. Having invited spiritualist mediums to perform under what he considered controlled conditions, Crookes had become convinced that the phenomena he witnessed were genuine and in need of further scientific investigation. Apparently, he thought the ghostly glow produced in his vacuum tubes was a possible explanation for ectoplasm.

Crookes was fooled by a succession of mediums including Florence Cook, whose act – conducted in darkened Victorian homes – consisted of apparently materialising a ghost called Katie King, who would be seen to move around while Cook herself was generally absent. At one such session, a sceptical participant grabbed hold of the ghost and found her to be strikingly similar in appearance to Cook; he was thrown out and told that, of course, the ghost would tend to look like the medium. Crookes was invited to validate Cook’s claims, and duly did. Like those unfortunate people who give away all their money to internet fraudsters, his desire to believe outweighed his common sense.

Crookes was by no means the only scientist to endorse spiritualism. The British physicist Oliver Lodge – one of the first people, in 1893, to predict what we now understand as relativistic length contraction – was a fellow member with Crookes of the Society for Psychical Research in London (both served as president), and they also belonged to the Ghost Club, a spiritualist group whose earlier members had included (less surprisingly) Charles Dickens. Crookes’ interest may have been prompted by the premature death of his brother, while Lodge later became widely known for claiming that his son, killed in the First World War, had communicated with him through mediums. One suspects their beliefs had more to do with personal need – and traditional belief in the afterlife – than rational judgement.

What Crookes advocated was an occult force in every sense; he tried to account for fictitious phenomena by positing new laws of physics. He was by no means the last to do so. In the 1970s, Uri Geller became famous for his spoon-bending and other illusions, which he claimed to be the result of psychic powers. He attracted the attention of the British physicist John Taylor, who performed laboratory tests on Geller and endorsed his feats as genuine in a book called Superminds (1975), where he proposed an electromagnetic explanation. Taylor acknowledged his error after the Canadian magician James Randi replicated Geller’s illusions under the same conditions but, like Crookes, Taylor had spent several years seeking to explain phenomena that do not exist.

Quantum theory, with its apparent possibility for spooky action at a distance, has sparked all sorts of speculation about telepathy, remote viewing or ‘acausal’ effects: old ideas given a new twist. In 1932, the Austrian quantum physicist Wolfgang Pauli began consulting Carl Jung for therapy following a series of distressing events including his mother’s suicide and his own divorce; he subsequently collaborated with Jung, and in their book The Interpretation of Nature and the Psyche (1950) they proposed a ‘quaternity’ consisting of the pairings energy-spacetime and causality-synchronicity.

The recurrence of ideas over the course of history is something that Jung or Pauli would have attributed to archetypes in the collective unconscious. An alternative would be the finiteness of human imagination, and susceptibility to cultural influence. While scientific theories can become increasingly technical and abstract, the brains that struggle to interpret their meaning haven’t evolved much in the past 50,000 years. If our own brain is a kind of living fossil, it’s hardly surprising that so much of what we do with it is metaphorically fossilised too.

As well as trying to identify the first religion, Newton sought the original unit of length. In ‘A Dissertation upon the Sacred Cubit of the Jews’ (1737), Newton found it to be just over 25 inches (making Noah’s Ark more than 625 feet in length). Modern-day pyramidology maintains the tradition of finding hidden meaning in physical dimensions. In a different way, so do today’s physicists, though rather than cubits they speak of the ‘Planck length’, a unit determined by some juggling with fundamental values such as the speed of light and the gravitational constant. The idea is to combine naturally occurring quantities in order to get a ‘dimensionless’ number that might or might not have physical significance. For example, suppose your height is two metres, you can run at eight metres per second, and your age is 1 billion seconds (nearly 32 years). If you work out age times speed divided by height you get the number 4 billion, and you would get this if you used any other consistent set of units; for example years, miles, and miles per year. Hence it’s ‘dimensionless’. Does it have any physical significance? Almost certainly not, but that’s how the game works.
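For anyone who wants to check the arithmetic, here is a minimal sketch of the height, speed and age example in Python. The function name `dimensionless_ratio` is made up for illustration, and the conversion factors are assumptions on my part: a Julian year of 365.25 days and the international mile of 1609.344 metres. Any other consistent choice should give the same answer, up to floating-point rounding.

```python
# Conversion factors (assumed for this sketch, not taken from the essay).
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year
METRES_PER_MILE = 1609.344             # international mile


def dimensionless_ratio(age, speed, height):
    """Return age * speed / height; the units cancel if they are consistent."""
    return age * speed / height


# The quantities from the text, in seconds, metres per second and metres.
age_s, speed_m_per_s, height_m = 1e9, 8.0, 2.0
si_value = dimensionless_ratio(age_s, speed_m_per_s, height_m)

# The same quantities re-expressed in years, miles per year and miles.
age_yr = age_s / SECONDS_PER_YEAR
speed_mi_per_yr = speed_m_per_s * SECONDS_PER_YEAR / METRES_PER_MILE
height_mi = height_m / METRES_PER_MILE
other_value = dimensionless_ratio(age_yr, speed_mi_per_yr, height_mi)

print(si_value)     # the 4 billion quoted in the text
print(other_value)  # the same number, apart from rounding error
```

The conversion factors cancel term by term, which is all that ‘dimensionless’ means here; the calculation says nothing about whether the resulting number has any physical significance.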

Paul Dirac and his fellow British physicist Arthur Eddington played it using quantities such as the mass and size of the electron; Dirac believed he could obtain the age of the universe, but his idea was judged to be contradicted by available evidence. Eddington, however, pursued what he called his ‘fundamental theory’ until he died in 1944. His book on the subject was published posthumously, and contains his derivation of the ‘cosmical number’ 204 × 2²⁵⁶, which he claimed to be the number of protons and electrons in the universe. History will decide whether this is on a par with Newton’s erroneous theory of the rainbow or his far better one of gravity, but it is safe to say that numerical and other coincidences will continue to fascinate physicists and lay-people alike.

Personally, I shall maintain faith in the principle of eternal folly, comforted by the thought that nearly every idea that ever crosses my mind is most likely unoriginal or wrong, or both – though just occasionally our grey matter is actually capable of producing something new. A suitably humbling sentiment as I set up my telescope for another night of astro-whatever.