There is no agreed criterion to distinguish science from pseudoscience, or just plain ordinary bullshit, opening the door to all manner of metaphysics masquerading as science. This is ‘post-empirical’ science, where truth no longer matters, and it is potentially very dangerous.

It’s not difficult to find recent examples. On 8 June 2019, the front cover of New Scientist magazine boldly declared that we’re ‘Inside the Mirrorverse’. Its editors bid us ‘Welcome to the parallel reality that’s hiding in plain sight’.

How you react to such headlines likely depends on your familiarity not only with aspects of modern physics, but also with the sensationalist tendencies of much of the popular-science media. Needless to say, the feature in question is rather less sensational than its headline suggests. It's about a puzzling discrepancy in the average time it takes free neutrons, a type of subatomic particle, to undergo radioactive decay: the answer depends on the experimental technique used to measure it. It is a story unlikely to pique the interest of more than a handful of New Scientist's readers.

But, as so often happens these days, a few physicists have suggested that this is a problem with ‘a very natural explanation’. They claim that the neutrons are actually flitting between parallel universes. They admit that the chances of proving this are ‘low’, or even ‘zero’, but it doesn’t really matter. When it comes to grabbing attention, inviting that all-important click, or purchase, speculative metaphysics wins hands down.

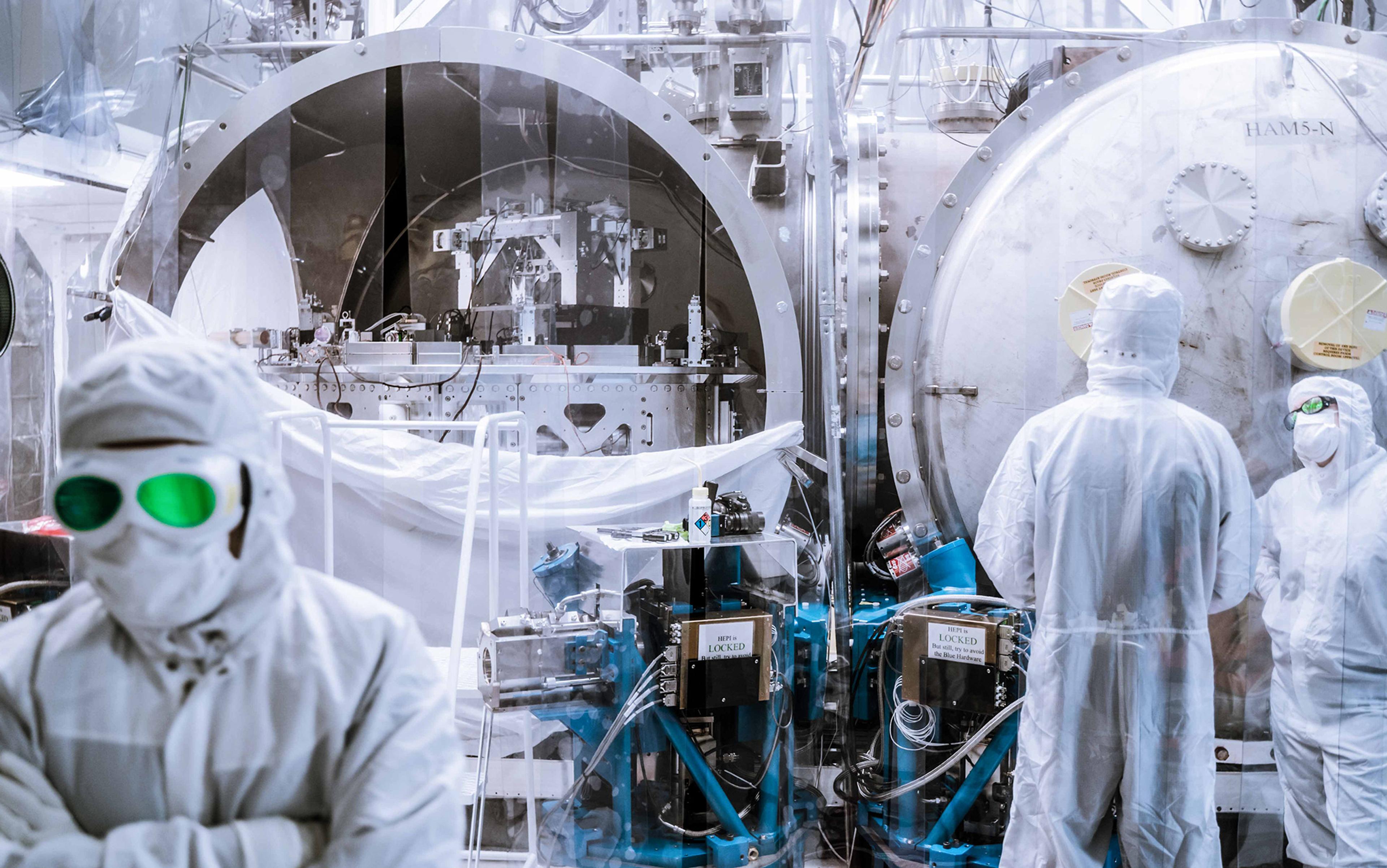

It would be easy to lay the blame for this at the feet of science journalists or popular-science writers. But it seems that the scientists themselves (and their PR departments) are equally culpable. The New Scientist feature is concerned with the work of Leah Broussard at the US Department of Energy’s Oak Ridge National Laboratory. As far as I can tell, Broussard is engaged in some perfectly respectable experimental research on the properties of neutrons. But she betrays the nature of the game that’s being played when she says: ‘Theorists are very good at evading the traps that experimentalists leave for them. You’ll always find someone who’s happy to keep the idea alive.’

The ‘mirrorverse’ is just one more in a long line of so-called multiverse theories. These theories are based on the notion that our Universe is not unique, that there exists a large number of other universes that somehow sit alongside or parallel to our own. For example, in the so-called Many-Worlds interpretation of quantum mechanics, there are universes containing our parallel selves, identical to us but for their different experiences of quantum physics. These theories are attractive to a few theoretical physicists and philosophers, but there is absolutely no empirical evidence for them. And, as it seems we can’t ever experience these other universes, there will never be any evidence for them. As Broussard explained, these theories are sufficiently slippery to duck any kind of challenge that experimentalists might try to throw at them, and there’s always someone happy to keep the idea alive.

Is this really science? The answer depends on what you think society needs from science. In our post-truth age of casual lies, fake news and alternative facts, society is under extraordinary pressure from those pushing potentially dangerous antiscientific propaganda – ranging from climate-change denial to the anti-vaxxer movement to homeopathic medicines. I, for one, prefer a science that is rational and based on evidence, a science that is concerned with theories and empirical facts, a science that promotes the search for truth, no matter how transient or contingent. I prefer a science that does not readily admit theories so vague and slippery that empirical tests are either impossible or meaningless.

But isn’t science in any case about what is right and true? Surely nobody wants to be wrong and false? Except that it isn’t, and we seriously limit our ability to lift the veils of ignorance and change antiscientific beliefs if we persist in peddling this absurdly simplistic view of what science is. To understand why post-empirical science is even possible, we need first to dispel some of science’s greatest myths.

Despite appearances, science offers no certainty. Decades of progress in the philosophy of science have led us to accept that our prevailing scientific understanding is a limited-time offer, valid only until a new observation or experiment proves that it’s not. It turns out to be impossible even to formulate a scientific theory without metaphysics, without first assuming some things we can’t actually prove, such as the existence of an objective reality and the invisible entities we believe to exist in it. This is a bit awkward because it’s difficult, if not impossible, to gather empirical facts without first having some theoretical understanding of what we think we’re doing. Just try to make any sense of the raw data produced by CERN’s Large Hadron Collider without recourse to theories of particle physics, and see how far you get. Theories are underdetermined: choosing between competing theories that are equivalently accommodating of the facts can become a matter for personal judgment, or our choice of metaphysical preconceptions or prejudices, or even just the order in which things happened historically. This is one of the reasons why arguments still rage about the interpretation of a quantum theory that was formulated nearly 100 years ago.

Yet history tells us quite unequivocally that science works. It progresses. We know (and we think we understand) more about the nature of the physical world than we did yesterday; we know more than we did a decade, or a century, or a millennium ago. The progress of science is the reason we have smartphones, when the philosophers of Ancient Greece did not.

Successful theories are essential to this progress. When you use Google Maps on your smartphone, you draw on a network of satellites orbiting about 20,000 kilometres above Earth. Signals from at least four of them are needed for the system to work, and between six and 10 are typically ‘visible’ from your location at any time. Each of these satellites carries a miniaturised atomic clock and transmits precise timing and position data to your device, allowing you to pinpoint your location and identify the fastest route to the pub. But without corrections based on Albert Einstein’s special and general theories of relativity, the Global Positioning System would accumulate clock errors, leading to position errors of up to 11 kilometres per day. Without these rather abstract and esoteric – but nevertheless highly successful – theories of physics, after a couple of days you’d have a hard time working out where on Earth you are.
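The 11-kilometre figure can be recovered from a back-of-the-envelope calculation. What follows is a rough sketch under stated assumptions, not the method used by GPS engineers: it uses standard textbook values for Earth's gravitational parameter and mean radius, assumes a circular orbit of radius 26,560 km, and ignores Earth's rotation and orbital eccentricity. General relativity makes the satellite clock run fast, because it sits higher in Earth's gravitational field; special relativity makes it run slow, because it is moving. The function name `gps_clock_drift_per_day` is hypothetical.

```python
# Assumed textbook constants in SI units; these are not taken from the essay.
GM_EARTH = 3.986004418e14   # Earth's gravitational parameter, m^3/s^2
C = 299_792_458.0           # speed of light, m/s
R_EARTH = 6.371e6           # mean Earth radius, m (where the ground clock sits)
R_ORBIT = 2.656e7           # assumed circular GPS orbital radius, m
SECONDS_PER_DAY = 86_400


def gps_clock_drift_per_day():
    """Return (gr_us, sr_us, net_us): the daily drift of a GPS satellite clock
    relative to a ground clock, in microseconds, using weak-field approximations.
    Earth's rotation and orbital eccentricity are ignored."""
    # General relativity: a clock higher in Earth's potential runs fast.
    gr_rate = GM_EARTH / C**2 * (1 / R_EARTH - 1 / R_ORBIT)
    # Special relativity: a moving clock runs slow; for a circular orbit v^2 = GM/r.
    v_squared = GM_EARTH / R_ORBIT
    sr_rate = v_squared / (2 * C**2)
    to_us = SECONDS_PER_DAY * 1e6  # fractional rate -> microseconds per day
    return gr_rate * to_us, sr_rate * to_us, (gr_rate - sr_rate) * to_us


gr_us, sr_us, net_us = gps_clock_drift_per_day()
# A timing error becomes a range error at the speed of light.
range_error_km = net_us * 1e-6 * C / 1000
print(f"GR: +{gr_us:.1f} us/day, SR: -{sr_us:.1f} us/day, net: +{net_us:.1f} us/day")
print(f"Uncorrected range error: about {range_error_km:.1f} km per day")
```

With these assumed values the two effects come to roughly +45.7 and -7.2 microseconds per day, a net drift of about 38.5 microseconds. Multiplying by the speed of light turns that timing error into a range error of roughly 11.5 kilometres per day, in line with the figure quoted above.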

In February 2019, the pioneers of GPS were awarded the Queen Elizabeth Prize for Engineering. The judges remarked that ‘the public may not know what [GPS] stands for, but they know what it is’. This suggests a rather handy metaphor for science. We might scratch our heads about how it works, but we know that, when it’s done properly, it does.

And this brings us to one of the most challenging problems emerging from the philosophy of science: how to define science itself, strictly. When is something ‘scientific’, and when is it not? In the light of the metaphor above, how do we know when science is being ‘done properly’? This is the demarcation problem, and it has an illustrious history. (For a more recent discussion, see Massimo Pigliucci’s essay ‘Must Science Be Testable?’ on Aeon).

The philosopher Karl Popper argued that what distinguishes a scientific theory from pseudoscience and pure metaphysics is the possibility that it might be falsified on exposure to empirical data. In other words, a theory is scientific if it has the potential to be proved wrong.

Astrology makes predictions, but these are intentionally general and wide open to interpretation. In 1963, Popper wrote: ‘It is a typical soothsayer’s trick to predict things so vaguely that the predictions can hardly fail: that they become irrefutable.’ We can find many ways to criticise the premises of homeopathy and dismiss it as pseudoscience, as it has little or no foundation in our current understanding of Western, evidence-based medicine. But, even if we take it at face value, we should admit that it fails all the tests: there is no evidence from clinical trials for the effectiveness of homeopathic remedies beyond a placebo effect. Those who, like Prince Charles, continue to argue for its efficacy are not doing science. They are engaged in wishful thinking or, like a snake-oil salesman, in deliberate deception.

And, no matter how much we might want to believe that God designed all life on Earth, we must accept that intelligent design makes no testable predictions of its own. It is simply a conceptual alternative to evolution as the cause of life’s incredible complexity. Intelligent design cannot be falsified, just as nobody can prove the existence or non-existence of a philosopher’s metaphysical God, or a God of religion that ‘moves in mysterious ways’. Intelligent design is not science: as a theory, it is simply overwhelmed by its metaphysical content.

But it was never going to be as simple as this. Applying a theory typically requires that – on paper, at least – we simplify the problem by imagining that the system we’re interested in can be isolated, such that we can ignore interference from the rest of the Universe. In his book Time Reborn (2013), the theoretical physicist Lee Smolin calls this ‘doing physics in a box’, and it involves making one or more so-called auxiliary assumptions. Consequently, when predictions are falsified by the empirical evidence, it’s never clear why. It might be that the theory is false, but it could simply be that one of the auxiliary assumptions is invalid. The evidence can’t tell us which.

There’s a nice lesson on all this from planetary astronomy. After the planet Uranus was discovered in 1781, Isaac Newton’s laws of motion and gravitation were used to predict its orbit. The prediction was wrong. But instead of accepting that Newton’s laws were thus falsified, astronomers solved the problem simply by tinkering with the auxiliary assumptions, in this case by making the box a little bigger. John Adams and Urbain Le Verrier independently proposed that there was an as-yet-unobserved eighth planet in the solar system that was perturbing the orbit of Uranus. Neptune was duly discovered, in 1846, very close to the position predicted by Le Verrier. Far from falsifying Newton’s laws, the incorrect prediction and the subsequent discovery of Neptune were greeted as a triumphant confirmation of them.

Some years later, Le Verrier tried the same logic on another astronomical problem. The planetary orbits are not exact ellipses. With each orbit, each planet’s point of closest approach to the Sun (called the perihelion) shifts slightly, or precesses, and this was thought to be caused by the cumulative gravitational pull of all the other planets in the solar system. For the planet Mercury, lying closest to the Sun, Newton’s laws predict a precession of 532 arc-seconds per century. But the observed precession is rather more, about 575 arc-seconds per century, a difference of 43 arc-seconds per century. Though small, this difference accumulates and is equivalent to one ‘extra’ orbit every 3 million years or so.

Le Verrier ascribed this difference to the effects of yet another unobserved planet, lying closer to the Sun than Mercury, which became known as Vulcan. Astronomers searched for it in vain. In this case, Newton’s laws were indeed playing false. Einstein was delighted to discover that his general theory of relativity predicts a further ‘relativistic’ contribution of 43 arc-seconds per century, due to the curvature of spacetime around the Sun in the vicinity of Mercury.
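The arithmetic behind the ‘one extra orbit every 3 million years’ figure for Mercury can be checked in a few lines. This sketch uses only the two precession values quoted above; the variable names are illustrative, and it reads ‘extra orbit’ as one full 360-degree turn of the perihelion.

```python
# Figures quoted in the text, in arc-seconds per century.
NEWTONIAN_ARCSEC_PER_CENTURY = 532
OBSERVED_ARCSEC_PER_CENTURY = 575
ARCSEC_PER_FULL_TURN = 360 * 60 * 60  # 1,296,000 arc-seconds in a full circle

# The unexplained excess that Le Verrier attributed to 'Vulcan'.
anomaly = OBSERVED_ARCSEC_PER_CENTURY - NEWTONIAN_ARCSEC_PER_CENTURY

# How long the excess takes to add up to one complete turn of the perihelion.
centuries_per_extra_turn = ARCSEC_PER_FULL_TURN / anomaly
years_per_extra_turn = centuries_per_extra_turn * 100

print(f"Anomaly: {anomaly} arc-seconds per century")
print(f"One extra turn every {years_per_extra_turn / 1e6:.1f} million years")
```

Dividing the 1,296,000 arc-seconds in a full circle by the 43-arc-second anomaly gives roughly 30,000 centuries, or about 3 million years.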

This brief tale suggests that scientists will stop tinkering and agree to relegate a theory only when a demonstrably better one is available to replace it. We could conclude from this that theories are never falsified, as such. We know that Newton’s laws of motion are inferior to quantum mechanics in the microscopic realm of molecules, atoms and sub-atomic particles, and they break down when stuff of any size moves at or close to the speed of light. We know that Newton’s law of gravitation is inferior to Einstein’s general theory of relativity. And yet Newton’s laws remain perfectly satisfactory when applied to ‘everyday’ objects and situations, and physicists and engineers will happily make use of them. Curiously, although we know they’re ‘not true’, under certain practical circumstances they’re not false either. They’re ‘good enough’.

Such problems were judged by philosophers of science to be insurmountable, and Popper’s falsifiability criterion was abandoned (though, curiously, it still lives on in the minds of many practising scientists). But rather than seek an alternative, in 1983 the philosopher Larry Laudan declared that the demarcation problem is actually intractable, and must therefore be a pseudo-problem. He argued that the real distinction is between knowledge that is reliable and knowledge that is unreliable, irrespective of its provenance, and claimed that terms such as ‘pseudoscience’ and ‘unscientific’ have no real meaning.

But, for me at least, there has to be a difference between science and pseudoscience; between science and pure metaphysics, or just plain ordinary bullshit.

So, if we can’t make use of falsifiability, what do we use instead? I don’t think we have any real alternative but to adopt what I might call the empirical criterion. Demarcation is not some kind of binary yes-or-no, right-or-wrong, black-or-white judgment. We have to admit shades of grey. Popper himself was ready to accept this (the italics are mine):

> the criterion of demarcation cannot be an absolutely sharp one but will itself have degrees. There will be well-testable theories, hardly testable theories, and non-testable theories. Those which are non-testable are of no interest to empirical scientists. They may be described as metaphysical.

Here, ‘testability’ implies only that a theory either makes contact, or holds some promise of making contact, with empirical evidence. It makes no presumptions about what we might do in light of the evidence. If the evidence verifies the theory, that’s great – we celebrate and start looking for another test. If the evidence fails to support the theory, then we might ponder for a while or tinker with the auxiliary assumptions. Either way, there’s a tension between the metaphysical content of the theory and the empirical data – a tension between the ideas and the facts – which prevents the metaphysics from getting completely out of hand. In this way, the metaphysics is tamed or ‘naturalised’, and we have something to work with. This is science.

Now, this might seem straightforward, but we’ve reached a rather interesting period in the history of foundational physics. Today, we’re blessed with two extraordinary theories. The first is quantum mechanics. This is the basis for the so-called standard model of particle physics that describes the workings of all known elementary particles. It is our best theory of matter. The second is Einstein’s general theory of relativity that explains how gravity works, and is the basis for the so-called standard model of Big Bang cosmology. It is our best theory of space, time and the Universe.

These two standard models explain everything we can see in the Universe. Yet they are deeply unsatisfying. The charismatic physicist Richard Feynman might have been a poor philosopher, but he wasn’t joking when he wrote in 1965: ‘I think I can safely say that nobody understands quantum mechanics.’ To work satisfactorily, Big Bang cosmology requires rather a lot of ‘dark matter’ and ‘dark energy’, such that ‘what we can see’ accounts for an embarrassingly small 5 per cent of everything we believe there is in the Universe. If dark matter is really matter of some kind, then it’s simply missing from our best theory of matter. Changing one or more of the constants that govern the physics of our Universe by even the smallest amount would render the Universe inhospitable to life, or even physically impossible. We have no explanation for why the laws and constants of physics appear so ‘fine-tuned’ to give rise to a Goldilocks universe that is just right.

These are very, very stubborn problems, and our best theories are full of explanatory holes. Bringing them together in a putative theory of everything has proved to be astonishingly difficult. Despite much effort over the past 50 years, there is no real consensus on how this might be done. And, to make matters considerably worse, we’ve run out of evidence. The theorists have been cunning and inventive. They have plenty of metaphysical ideas but there are no empirical signposts telling them which path they should take. They are ideas-rich, but data-poor.

They’re faced with a choice.

Do they pull up short, draw back and acknowledge that, without even the promise of empirical data to test their ideas, there is little or nothing more that can be done in the name of science? Do they throw their arms in the air in exasperation and accept that there might be things that science just can’t explain right now?

Or do they plough on regardless, publishing paper after paper filled with abstract mathematics that, in the absence of data, they interpret as explaining the physics, for example in terms of a multiverse? Do they not only embrace the metaphysics but also allow their theories to be completely overwhelmed by it? Do they pretend that they can think their way to real physics, ignoring Einstein’s caution:

> Time and again the passion for understanding has led to the illusion that man is able to comprehend the objective world rationally by pure thought without any empirical foundations – in short, by metaphysics.

I think you know the answer. But to argue that this is nevertheless still science requires some considerable mental gymnastics. Some just double down. The theoretical physicist David Deutsch has declared that the multiverse is as real as the dinosaurs once were, and we should just ‘get over it’. Martin Rees, Britain’s Astronomer Royal, declares that the multiverse is not metaphysics but exciting science, which ‘may be true’, and on which he’d bet his dog’s life. Others seek to shift or undermine any notion of a demarcation criterion by wresting control of the narrative. One way to do this is to call out all the problems with Popper’s falsifiability that philosophers of science acknowledged many years ago. Doing this allows them to make their own rules, while steering well clear of the real issue – the complete absence of even the promise of any tension between ideas and facts.

Sean Carroll, a vocal advocate for the Many-Worlds interpretation, prefers abduction, or what he calls ‘inference to the best explanation’, which leaves us with theories that are merely ‘parsimonious’, a matter of judgment, and ‘still might reasonably be true’. But whose judgment? In the absence of facts, what constitutes ‘the best explanation’?

Carroll seeks to dress his notion of inference in the cloth of respectability provided by something called Bayesian probability theory, happily overlooking its entirely subjective nature. It’s a short step from here to the theorist-turned-philosopher Richard Dawid’s efforts to justify the string theory programme in terms of ‘theoretically confirmed theory’ and ‘non-empirical theory assessment’. The ‘best explanation’ is then based on a choice between purely metaphysical constructs, without reference to empirical evidence, based on the application of a probability theory that can be readily engineered to suit personal prejudices.
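The worry about Bayesian reasoning without data can be made concrete with a toy calculation. This is an illustrative sketch only, not Carroll’s or Dawid’s actual argument; the function `posterior` and all the numbers are hypothetical. It applies Bayes’ theorem to a hypothesis H and its negation, and shows that when the available evidence is equally likely whether or not H is true, the posterior simply equals the prior: the conclusion is fixed entirely by where one chose to start.

```python
def posterior(prior_h, likelihood_e_given_h, likelihood_e_given_not_h):
    """Bayes' theorem for a hypothesis H and its negation:
    P(H|E) = P(E|H) P(H) / [P(E|H) P(H) + P(E|not H) P(not H)]."""
    numerator = likelihood_e_given_h * prior_h
    evidence = numerator + likelihood_e_given_not_h * (1 - prior_h)
    return numerator / evidence


# Hypothetical case: no observation can discriminate between H and not-H,
# so both likelihoods are equal (here set to 1). Each prior comes back unchanged.
for prior in (0.1, 0.5, 0.9):
    print(f"prior {prior:.1f} -> posterior {posterior(prior, 1.0, 1.0):.1f}")

# By contrast, evidence ten times likelier under H does shift belief.
print(f"prior 0.1 with discriminating evidence -> {posterior(0.1, 0.5, 0.05):.2f}")
```

Equal likelihoods leave every prior untouched; only evidence that is more probable under one hypothesis than the other moves the posterior, in this made-up example from 0.1 to about 0.53.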

Welcome to the oxymoron that is post-empirical science.

Still, what’s the big deal? So what if a handful of theoretical physicists want to indulge their inner metaphysician and publish papers that few outside their small academic circle will ever read? But look back to the beginning of this essay. Whether they intend it or not (and trust me, they intend it), this stuff has a habit of leaking into the public domain, dripping like acid into the very foundations of science. The publication of Carroll’s book Something Deeply Hidden, about the Many-Worlds interpretation, has been accompanied by an astonishing publicity blitz, including an essay on Aeon last month. A recent PBS News Hour piece led with the observation that: ‘The “Many-Worlds” theory in quantum mechanics suggests that, with every decision you make, a new universe springs into existence containing what amounts to a new version of you.’

Physics is supposed to be the hardest of the ‘hard sciences’. It sets standards by which we tend to judge all scientific endeavour. And people are watching.

The historian of science Helge Kragh has spent some considerable time studying the ‘higher speculations’ that have plagued foundational physics throughout its history. On the multiverse, in Higher Speculations (2011) he concluded (again, the italics are mine):

> But, so it has been argued, intelligent design is hardly less testable than many multiverse theories. To dismiss intelligent design on the ground that it is untestable, and yet to accept the multiverse as an interesting scientific hypothesis, may come suspiciously close to applying double standards. As seen from the perspective of some creationists, and also by some non-creationists, their cause has received unintended methodological support from multiverse physics.

Unsurprisingly, the folks at the Discovery Institute, the Seattle-based think-tank for creationism and intelligent design, have been following the unfolding developments in theoretical physics with great interest. The Catholic evangelist Denyse O’Leary, writing for the Institute’s Evolution News blog in 2017, suggests that: ‘Advocates [of the multiverse] do not merely propose that we accept faulty evidence. They want us to abandon evidence as a key criterion for acceptance of their theory.’ The creationists are saying, with some justification: look, you accuse us of pseudoscience, but how is what you’re doing in the name of science any different? They seek to undermine the authority of science as the last word on the rational search for truth.

The philosophers Don Ross, James Ladyman and David Spurrett have argued that a demarcation criterion is a matter for institutions, not individuals. The institutions of science impose norms and standards and provide sense-checks and error filters that should in principle exclude claims to objective scientific knowledge derived from pure metaphysics. But, despite efforts by the cosmologist George Ellis and the astrophysicist Joe Silk to raise a red flag in 2014 and call on some of these institutions to ‘defend the integrity of physics’, nothing has changed. Ladyman seems resigned: ‘Widespread error about fundamentals among experts can and does happen,’ he tells me. He believes a correction will come in the long run, when a real scientific breakthrough is made. But what damage might be done while we wait for a breakthrough that might never come?

Perhaps we should begin with a small first step. Let’s acknowledge that theoretical physicists are perfectly entitled to believe, write and say whatever they want, within reason. But is it asking too much that they make their assertions with some honesty? Instead of ‘the multiverse exists’ and ‘it might be true’, is it really so difficult to say something like ‘the multiverse has some philosophical attractions, but it is highly speculative and controversial, and there is no evidence for it’? I appreciate that such caveats get lost or become mangled when transferred into a popular media obsessed with sensation, but this would then be a failure of journalism or science writing, rather than a failure of scientific integrity.