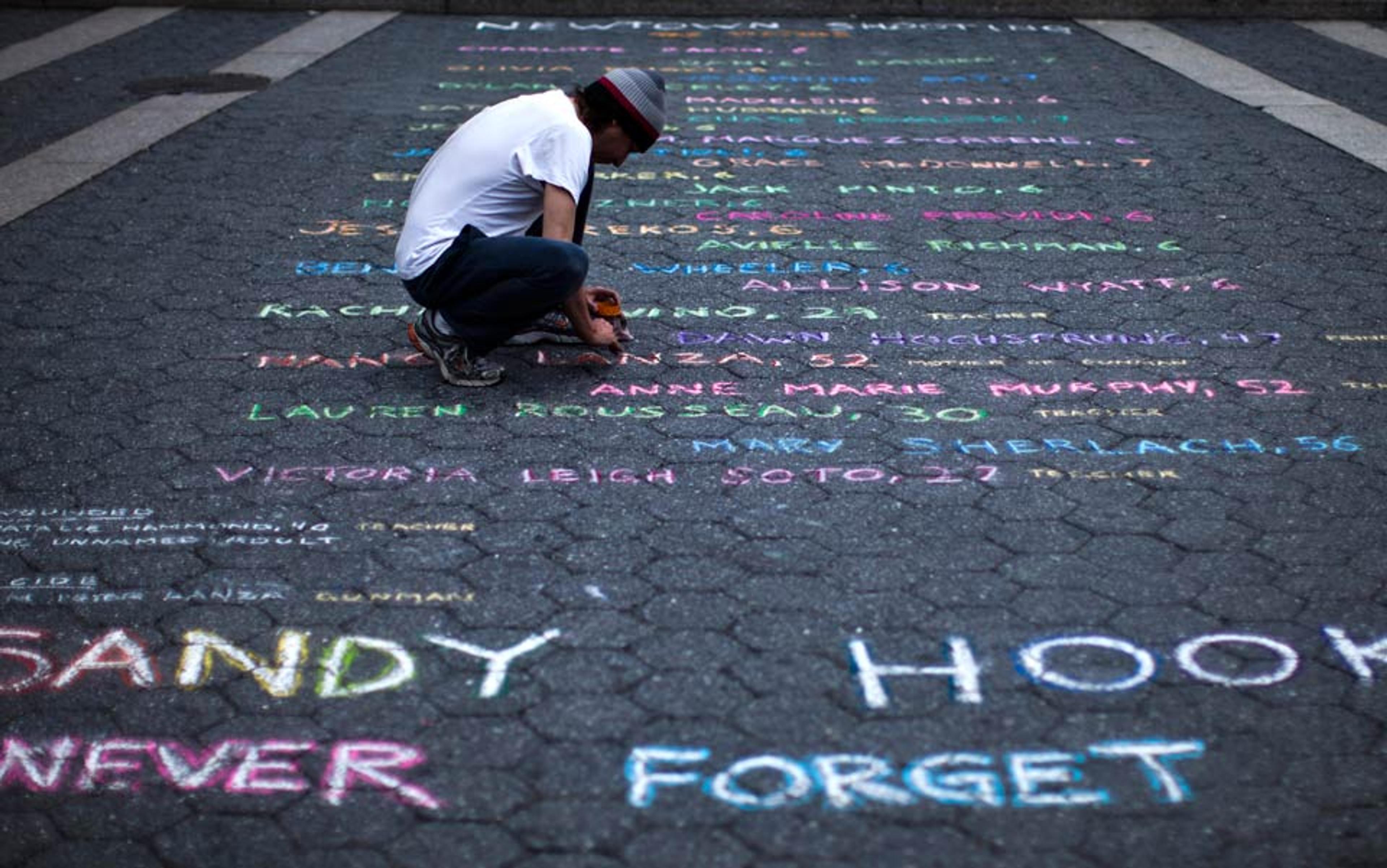

In 1992, Juan Rivera was arrested for the rape and murder of an 11-year-old girl in Waukegan, Illinois. On the night of the murder, Rivera was wearing an electronic ankle bracelet in connection with unrelated burglary charges, and this bracelet showed he’d been at home. Yet, based on a tip, police decided to arrest him.

Rivera had a low IQ and a history of emotional problems, which psychologists knew would make him highly suggestible. The police chose to ignore that when they grilled him for several days and lied to him about the results of his polygraph test. By the end of the fourth day, having endured more than 24 hours of round-robin questioning by at least nine different officers, Rivera signed a confession. His first confession was inaccurate, however, so police kept questioning him until he got it right.

Confessions have extraordinary power, so police believed Rivera’s despite a lack of physical evidence. So did a jury, which found Rivera guilty, and a judge, who sentenced him to life without parole. In 2005, DNA tests excluded Rivera as the source of the semen recovered from the body. But the prosecutor devised a theory that the 11-year-old victim had had sex with another man previously, and that Rivera did not ejaculate when he raped her after that. A jury found Rivera guilty a second time. Finally, after another appeal in 2011, Rivera’s lawyers were able to set him free. He had been wrongfully imprisoned for nearly 20 years.

Rivera’s case represents a tragic miscarriage of justice. Seen another way, it’s also the result of bad science and anti-scientific thinking – from the police’s coercive interview of a vulnerable person, to the jury’s acceptance of a false confession over physical evidence, including DNA.

Unfortunately, Rivera’s case is not unique. Hundreds of innocent people have been convicted by bad science, permitting an equal number of perpetrators to go free. It’s impossible to know how often this happens, but the growing number of DNA-related exonerations points to false convictions as the collateral damage of our legal system. Part of the problem involves faulty forensics: contrary to what we might see in the CSI drama shows on TV, few forensic labs are state-of-the-art, and they don’t always use scientific techniques. According to the US National Academy of Sciences, none of the traditional forensic techniques, such as hair comparison, bite-mark analysis or ballistics analysis, qualifies as rigorous, reproducible science. But it’s not just forensics: bad science is marbled throughout our legal system, from the way police interrogate suspects to the decisions judges make on whether to admit certain evidence in court.

More than 50 years ago, C P Snow, the British scientist and novelist, warned that the division between ‘two cultures’ – science and the humanities – makes it harder to solve the world’s problems. There’s a ‘two cultures’ problem in our legal systems too, in the contrasting methodologies of science and the law. The scientific method involves making observations, constructing a hypothesis, and testing that hypothesis with an experiment that others can repeat. You can trust your results if others can reproduce them.

The legal approach involves making an argument. Tasked with the job of arriving at a verdict, legal professionals gather information under strict rules of evidence and subject it to rigorous cross-examination. For guidance, they look to precedent: you can trust your legal theory if previous courts have approved it. In this way, legal practice tends to move forward more slowly than science. The gap between the two methodologies – enquiry versus argument, testing versus precedent – is where innocent people can sometimes fall.

Science and the law have always had an uneasy relationship. But with the growing importance of forensics in the courtroom and scientific methods in prosecuting crime, it’s become critical to marry the two disciplines. The question is, how?

Science has been intertwined with the law for almost as long as the jury trial has been in existence. Records show that as early as the 1600s physicians were brought to court to give expert opinions, such as how long after the death of her husband a woman could bear a child. By the mid-1800s, experts on both sides of the Atlantic were called on to testify about a variety of subjects, such as the chemical signs of poisoning and the mental state of accused murderers.

What really brought science into court, though, was the development of forensic science in the late 19th century. Led by the French physician Alexandre Lacassagne, a coterie of experts in Europe pioneered many of the techniques still used today, including footprint analysis, the use of markings on a bullet to match it to a gun, and chemical reagents to see if a stain was a biological fluid such as semen or blood.

Those developments became pivotal in criminal justice, and they caused verdicts to be ever more science-based. Lacassagne hoped the science would grow like other scholarly fields, conducted at institutes affiliated with universities. But instead of becoming part of the university system, forensic science moved to police departments and government laboratories. There, researchers focused on practical applications without questioning underlying hypotheses or imposing the rigour practised by classic science labs. When techniques such as bullet-matching and bite-mark analysis resulted in false convictions, the errors were seen as anomalies rather than signs that the science itself was wrong.

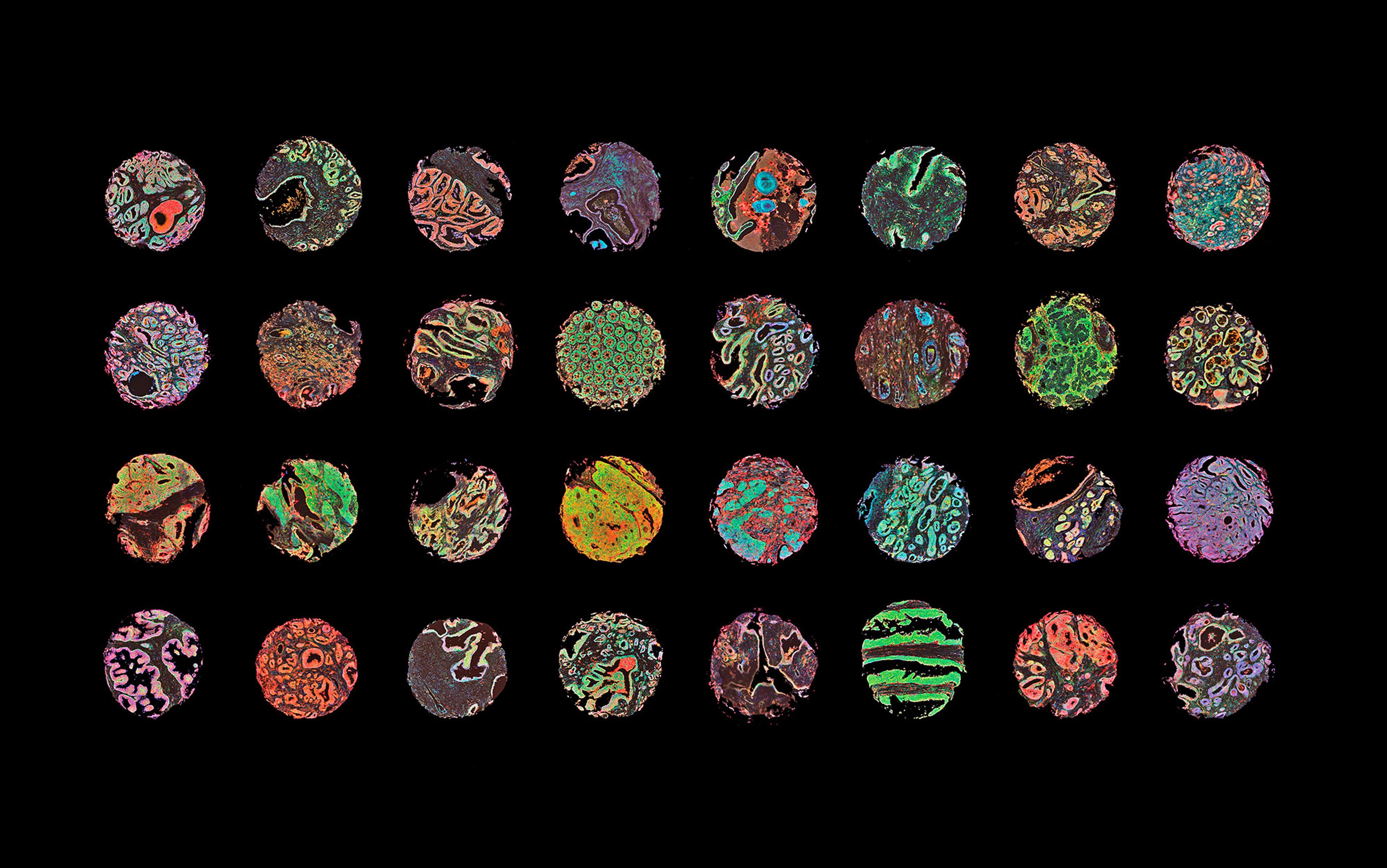

That changed with the DNA revolution of the 1990s. Unlike prior forensic sciences, DNA technology emerged directly from the laboratories of universities and scientific institutes, with all the statistical proofs and controls of real science. The technology allows scientists to spot certain sequences in our genetic material that occur with a known frequency in various populations. This means that scientists can state with statistical accuracy the chances of your DNA matching a sample from a crime scene – usually on the order of one in a few billion – something that traditional forensic scientists cannot do.

To see how deeply the DNA revolution cuts into traditional crime-solving techniques, consider the gold standard of evidence: fingerprints. The US Federal Bureau of Investigation (FBI) maintains the world’s largest database of fingerprints, with more than 70 million samples in its criminal master file, and uses advanced computer algorithms to help match them to samples gathered at crime scenes. It’s a highly sophisticated, multi-level analysis. In the end, though, the identification comes down to simple human judgment: a series of experts who eyeball the results. Such judgments are compromised because experts rarely get to compare complete sets of fingerprints. Police usually find partial prints at a crime scene – a smudge or only a portion of a print. They’ll examine several points on those fragments and see if they match similar points on a suspect’s fingerprints. Unlike with DNA, no one has done the statistical analysis to see if more than one person can have the same points of comparison.

The fact that DNA was a proven, quantified science made it a critical tool in reversing wrongful convictions – 321 in the US alone since 1992, when the Innocence Project was launched at Yeshiva University in New York. Innocence Projects have also sprung up in Canada, the United Kingdom, Ireland, France, Holland, Australia and New Zealand.

Beyond freeing innocent people, DNA exonerations have provided a kind of core sample into what goes wrong in our justice system. If DNA represents hard, scientific fact, then the evidence it contradicts is by definition wrong. In 2011, Brandon L Garrett, a law professor at the University of Virginia, dug into that issue by analysing thousands of pages of legal records and court transcripts from 220 of the then-250 exonerations. He found that an overwhelming majority of wrong decisions resulted from flawed science-related procedures, such as eyewitness errors, faulty lab procedures and false confessions.

Garrett is not alone: many legal scholars are using case histories from the DNA-exoneration database as a way of examining the systemic problems of our police departments and courts. They’re consistently finding that the law lags far behind science. As a result, certain practices remain accepted long past their expiration date – both in the investigation of crimes and in trials.

Consider the investigation of arson. For generations, arson investigators, usually former fire-fighters, were taught to look for the signs of arson by their predecessors, who based their knowledge on practical experience. A body of common knowledge developed, none of which was backed by laboratory science. An example: the dark splotches on the floor of a burnt building, or ‘spill patterns’, meant that someone had used gasoline or another accelerant to start the fire. But starting in the late 1980s, scientists who conducted extensive laboratory research found that fire behaved differently than previously thought. Spill patterns had more to do with the ventilation of a working fire than the liquid that might have started it.

In other words, probably hundreds of convictions have been based on incorrect findings of arson. The most notorious of these was the 2004 execution in Texas of Cameron Todd Willingham for the arson-related murder of his three daughters. Experts have since determined that the fire was probably accidental and that its cause could, at most, be classed as undetermined. Although scientists are working to bring about change, and have created national standards for investigation, the majority of investigators continue to be trained in the old ways.

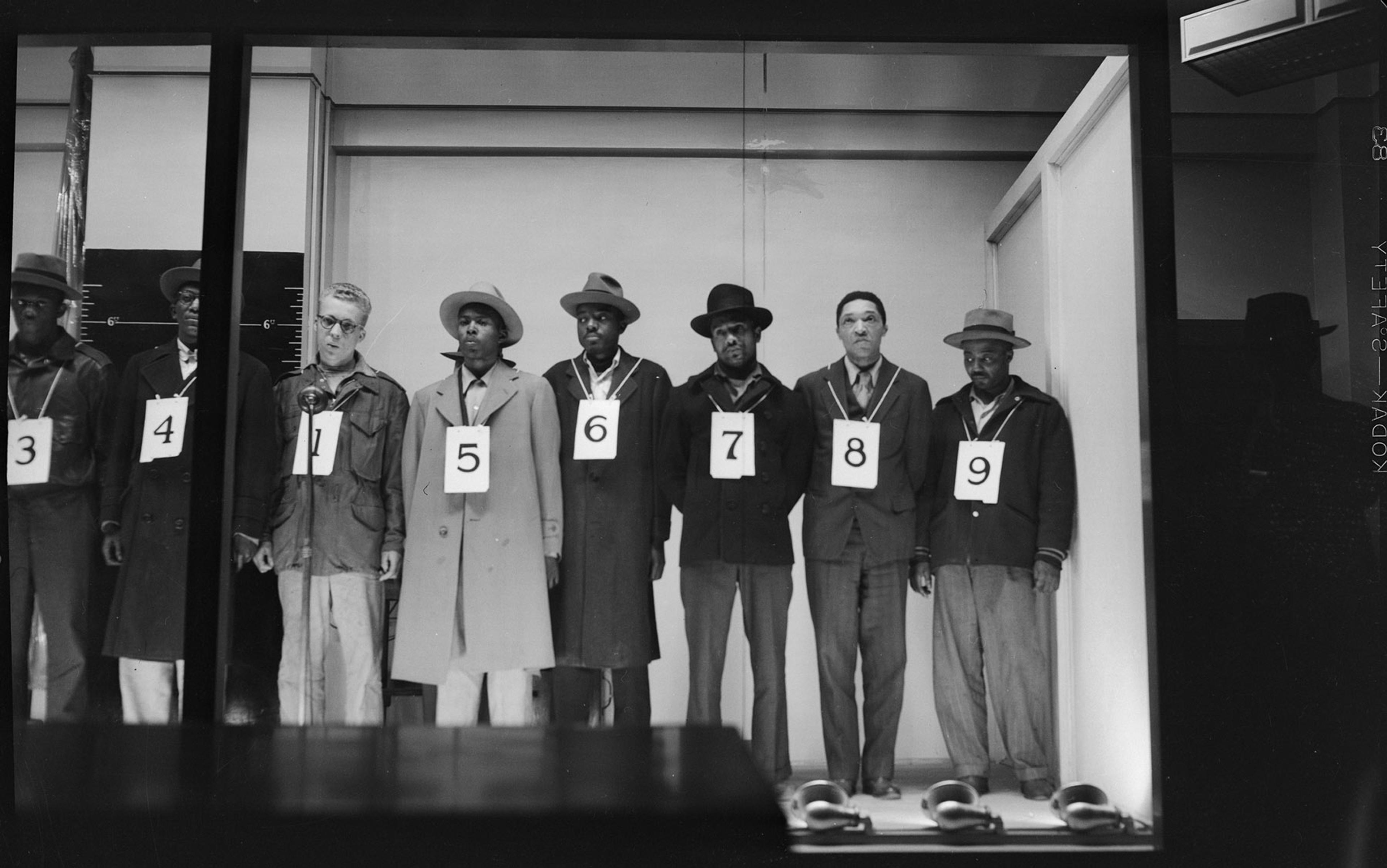

Other flawed science can influence investigations. Most surveys cite eyewitness identification as the most common cause of wrongful convictions, largely because the science of memory is misunderstood. For many years most people assumed that memory was like a videotape – that when you accessed someone’s memory you could get a fairly accurate description of what happened, and that the more times you accessed it, the more accurate the description would become. Psychologists now compare memory to trace evidence, easily contaminated by the process of collecting it, such as the nature of a line-up or the casual remarks a police officer might make. Each time the police re-interview a witness or congratulate her for identifying a culprit, they contaminate that memory a little bit more, while at the same time making the witness more confident. By the time a case goes to trial, the witness can be absolutely certain and absolutely wrong – a dangerously persuasive combination for a jury. The result has been some notorious cases of false identification in which people were imprisoned for decades before finally being freed by DNA evidence.

The damage doesn’t stop with a single mistake. What’s alarming about the use of faulty science during investigations is that one error begets others, steering investigators down the wrong track. For example, over the past couple of decades, psychologists have learned that the persistent interrogation method used by most police produces a certain percentage of false confessions. That’s not surprising: the method, called the Reid technique, is based on 1950s-era psychology that scientists have shown to be inherently coercive. But once a suspect confesses, a cascade of bad decisions can occur, according to Saul Kassin, who has been researching false confessions for more than 35 years. Kassin, a psychology professor at John Jay College in New York and at Williams College in Massachusetts, has found that confessions have a ripple effect: once a suspect admits to a crime, almost all other evidence changes to support it, from alibis to the interpretation of physical evidence. Not even retracting the confession can set things right.

That’s what happened in the case of Rivera, whose coerced confession was powerful enough to dissuade one jury from accepting DNA evidence. Another case involves Michael Ledford, a Virginia resident charged with setting a fire that injured his wife and killed his one-year-old son. Investigators originally classified the fire as of ‘undetermined’ origin because they could find no evidence to support arson. A month later, after working an all-night shift, Ledford underwent an exhaustive interrogation in which he was falsely told that he had failed a polygraph exam, and he confessed. (Unlike their counterparts in the UK and Canada, US police are permitted to lie during interrogations.) It was only after that confession that investigators re-classified the fire and managed to find evidence to support arson. You could say that the confession enabled investigators to bootstrap their work, but fire experts hired by the defence say it was a classic case of a confession distorting forensics. Ledford was found guilty and is serving a 50-year sentence.

An important part of the scientific process involves recognising human bias and preventing it from affecting results. For that reason, scientists use double-blind studies. When a drug is evaluated in a clinical trial, neither the doctors nor the patients know who has been given the experimental drug and who the placebo. It is only after the experiment has been concluded that the scientists are unblinded. That way their wishful thinking can’t influence their findings.

Not so with the legal profession, which accumulates biases every step of the way. Most police, when they show a line-up to a witness, know which face belongs to the suspect. Studies have shown that that simple knowledge can lead an officer to unconsciously influence the witness who, in turn, can pick the favoured suspect, who might not be the perpetrator.

Itiel Dror, a cognitive psychologist at University College London, has shown that the knowledge of a case can influence even fingerprint experts. In one well-known study in 2005, Dror and his colleagues recruited five UK fingerprint experts with a combined 85 years’ experience. He showed the experts two sets of prints that each of them had matched in actual cases five years earlier. Then he played a trick: rather than tell them where the prints really came from, he told them they came from a well-known case of a fingerprint mismatch. After examining the prints, three of the experts declared the prints a mismatch, reversing their own judgments from several years before. One expert couldn’t decide, and only one held firm to his previous position. Dror concluded that even fingerprint experts were vulnerable to ‘irrelevant and misleading contextual influences’.

Once faulty science gets into the legal system, the system rarely screens it out: although science has self-correcting mechanisms at each step of the process, the legal system has few. The most notable such mechanism in the US is the Daubert standard, which stems from a 1993 US Supreme Court decision and is supposed to enforce rigorous standards for science in the courtroom. That decision gives judges a gatekeeper role – to make sure that any expert evidence or testimony is screened for scientific reliability. In other words, the science can’t be merely generally accepted or grandfathered in; it must have been peer-reviewed and evaluated with a quantified error rate.

‘When a forensic technician says: “I know it when I see it,” the judge should say: “Wait a minute, is that all you have?”’ says David Faigman, professor of law at the University of California in San Francisco. ‘You have to at least articulate the variables.’

That hasn’t happened, mainly because defence lawyers rarely challenge forensic science. Those attorneys usually represent poor people, and so don’t have the money to hire their own experts. In contrast, lawyers in civil suits, who stand to make bundles of money, do.

Still, judges shouldn’t have to rely on defence attorneys to screen out weak science. Under the Daubert standard, judges can, and should, challenge the evidence. But many judges feel that they lack the scientific background to challenge the government’s experts. As a result, they fall back on lessons learned in law school: when in doubt, look back to precedent. But precedent, like old science, can be wrong. In one notable case, the judge of a district court in Denver agreed with the defence attorney that latent fingerprint comparison fails to meet scientific standards because it has no statistical error rate. Yet the judge admitted the evidence anyway. After all, wrote the judge, fingerprinting had been used ‘all over the world for almost a century’. That’s the kind of reasoning that justified bloodletting.

Then there’s the jury, our traditional common-sense arbiter of truth. Judges and lawyers have an almost religious belief in the adversarial system, in which two lawyers fight it out in front of the jury, and good judgment prevails. Yet that too fails the test of science. The jury system was designed centuries ago, when common knowledge could deal with just about any case that might come along. The world has become more complicated since then.

Furthermore, the adversarial system – in which one side is right and the other is wrong – forces expert witnesses to ‘rigidify’ their views, in the words of Susan Haack, a legal scholar at the University of Miami. Expert witnesses are more likely to say they’re 100 per cent certain than to present a nuanced or numbers-based conclusion. Intimidated by this process, jurors sometimes lean on their intuition, influenced by the confidence of eyewitnesses and the demeanour of experts. ‘He just looked guilty’ is the common refrain of jurors who find themselves confounded by the scientific evidence.

‘There’s no villain here,’ says Jennifer Mnookin, professor of law at the University of California, Los Angeles. ‘It’s more a system problem, when you get underfunded defence attorneys, zealous prosecutors, and judges who take refuge in the familiar and safe. It all adds up to a system that’s not very good at self-reflection or change.’

Fortunately, there’s been progress. This summer, the FBI and other US federal agencies adopted a policy of electronically recording all interrogations – a move that is certain to reduce the number of coercive interrogations and false confessions. Many police forces in the US have modified their line-up procedures to conform to modern psychological science, making them less likely to contaminate eyewitness memory. The US Department of Justice has established a national commission to convene panels of policy experts to tease out exactly which practices are science-based and how to make them more so. They’re also examining human biases, such as tunnel vision (seeing things only one way) and confirmation bias (in which you select evidence to confirm what you already think), that influence supposedly objective lab findings. Several other nations have taken similar measures.

The UK has made a bolder move. Stung by several false confession scandals in the 1990s, the UK Home Office organised a committee of psychologists, lawyers and police officials to completely remake their interrogation procedures and bring them in line with modern cognitive psychology. The new process did away with the US-style accusatory interrogations and substituted interviews that more resemble journalistic fact-finding. Unlike their US colleagues, UK police no longer push for confessions, which they consider inherently unreliable.

Progress is coming to the courtroom, too. In the US state of Texas, which leads the nation in both executions and exonerations, lawmakers have passed a ‘junk science writ’ that makes it possible to appeal a conviction if you can show it was based on outmoded science. This law saved the life of Robert Avila, who was scheduled for execution last January for stomping to death a 19-month-old boy he was babysitting. During Avila’s 2001 trial, medical experts testified that the toddler’s injuries could not have been caused by his four-year-old brother, who had been wrestling with him in the next room. Since then, the science of biomechanics has evolved: last year, experts showed that a 40 lb child falling on the toddler could indeed have caused the injuries that killed the toddler. Avila’s case is pending an appeal.

But those changes are piecemeal, and don’t address the fundamental schisms between science and the law. In the US, a group of legal scholars is hoping to fix that, by borrowing an idea from science-based industries such as aviation and medicine. The idea is to approach injustices as errors in the system, rather than one-off exceptions to the rule or individual mistakes. According to James Doyle, the Boston attorney who developed the idea, a systems analysis of the Rivera case would examine how community pressure, tunnel vision by the police, flawed interrogation training, laxity of the judge, and a host of other factors allowed bad science to work its way through the system and convict an innocent man.

Several nations are examining a novel Australian method of vetting science in the courtroom, called ‘hot tubbing’, or ‘concurrent evidence’. Rather than pit one scientific expert against another, the judge sits all the experts together, where they present their analyses, question each other, and eventually arrive at a consensus. Rather than a battlefield, the process resembles a scientific discussion.

None of this is to say that our legal system is hostile to scientific procedures. Indeed, we know as much as we do about false convictions today because evidence has become more scientific, not less. But the more that we can blend the two cultures by bringing science into police stations and courts – not only with specific techniques, but with the core principles of the scientific method – the more just our legal system will be.