Watching a rocket as it slowly starts to heave itself out of Earth’s deep gravity well and then streaks up into the blue, you suddenly grasp on a visceral level the energies involved in space exploration. One minute that huge cylinder is sitting quietly on its launching pad; the next, its engines fire up with a brilliant burst of light. Clouds of exhaust fill the sky, and the waves of body-shaking thunder never seem to end.

To get anywhere in space, you have to travel astounding distances. Even the Moon is about 400,000 km away. And yet the hardest part – energy-wise, anyway – is just getting off the ground. Clear that hurdle, slip the bonds of Earth, and you’re off. Gravity’s influence falls away and suddenly, travel becomes a lot cheaper.

So it might be surprising to hear that the most exciting new frontier in space exploration starts a mere 2,000 km above the terrestrial surface. We aren’t talking about manned missions, automatic rovers or even probes. We’re talking about satellites. Even more prosaically, we’re talking about communications satellites, in low Earth orbit. Yes, they’ll be fitted with precision laser equipment that sends and receives particles of light – photons – in their fundamental quantum states. But the missions will be an essentially commercial proposition, paid for, in all probability, by banks eager to protect themselves against fraud.

Perhaps that doesn’t sound very romantic. So consider this: those satellites could change the way we see our Universe as much as any space mission to date. For the first time, we will be able to test quantum physics in space. We’ll get our best chance yet to see how it meshes with that other great physical theory, relativity. And at this point, we have very little idea what happens next.

Let’s back up. Since its discovery in 1900 and its formalisation in the 1920s, quantum mechanics has remained unchallenged as our basic theory of the submicroscopic world. Everything we know about energy and matter can (in principle) be derived from its equations. In an extended form, known as quantum field theory, it underlies the ‘Standard Model’ – which is to say, all that we know about the elementary particles.

It’s difficult to overstate the explanatory power of the Standard Model. Physics has identified four fundamental forces at work in the Universe. The Standard Model accounts for three of them. It explains the electromagnetic force that holds atoms and molecules together; the strong force that binds quarks into protons and neutrons and clamps them together in atomic nuclei; and the weak force that releases electrons or positrons from a nucleus in the form of beta decay. The only thing the model leaves out is gravity, the weakest of the four. Gravity has a theory of its own – general relativity, which Albert Einstein published in 1916.

Many physicists believe we should be able to capture all our fundamental forces with a single theory. It’s fair to say that this has yet to be achieved. The problem is, quantum theory and relativity are based on utterly different premises. In the Standard Model, forces arise from the interchange of elementary particles. Electromagnetism is caused by the emission and absorption of photons. Other particles cause the strong and weak forces. In a way, the micro-scale world functions like a crowd of kids pelting each other with snowballs.

Gravity is different. In fact, according to general relativity, it’s not really a force at all.

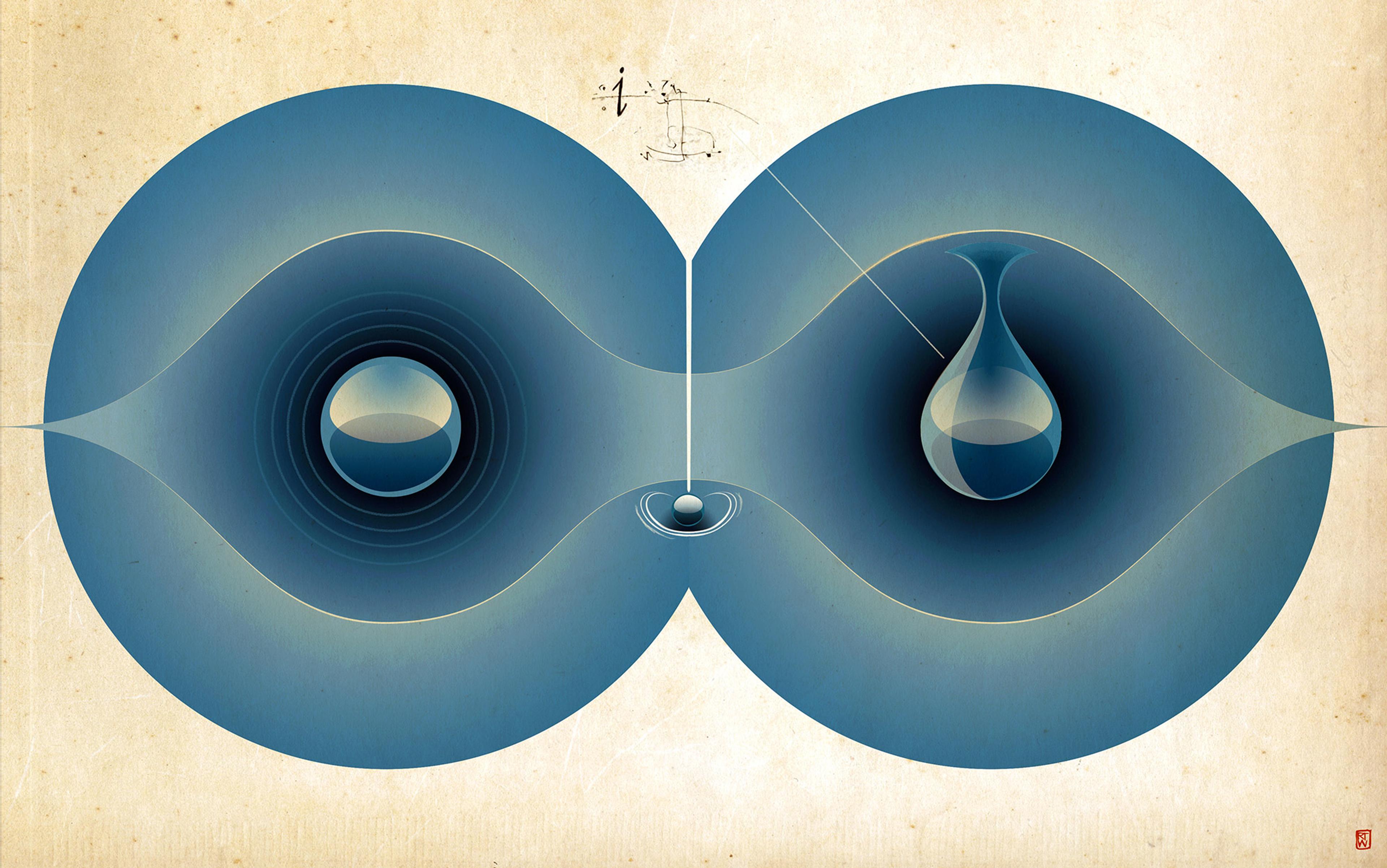

Picture an empty canvas hammock stretched out flat between two trees. In places where there are no big masses (stars, for example), spacetime is a bit like that. An apple placed on the hammock would stay put. Given a shove, it would roll across the canvas in a straight line (in the real Universe, that’s how bodies behave when they are far away from any large masses). But when you settle your mass into the hammock, you distort its flatness into a dip. Given a shove, the apple would swerve around the dip – or maybe just roll straight into your side. In much the same way, when a planet orbits a sun, there’s no force pulling it – it is simply following a curved path in distorted spacetime. Gravity is what we call that curvature. As the American physicist John Wheeler put it in 1990: ‘Mass tells spacetime how to curve, and spacetime tells mass how to move.’

This geometrical character sets general relativity apart from quantum mechanics. They might have been lumped together as ‘modern physics’ in the 20th century, but really, these theories merely coexist. As the British physicist J J Thomson wrote in 1925 in a different context, they are like a ‘tiger and shark, each is supreme in his own element but helpless in that of the other’. And yet the prospect of bringing them together remains irresistible. It might be true that we have working theories for all the fundamental forces, but until we can unite them within a single theory, important parts of the cosmic environment and its history remain obscure.

Such as? Well, using our existing theories we can trace the history of the Universe back almost to the Big Bang itself, 13.8 billion years ago. Almost but not quite: we know next to nothing about the first 10⁻⁴³ seconds. What we can say is that the cosmos must have been extremely small and hot at that point.

That earliest ‘Planck epoch’ is defined using tiny fundamental units invented by Max Planck – a length of about 10⁻³⁵ metres, a mass of about 10⁻⁸ kg, and a duration of about 10⁻⁴³ seconds. The Planck length, far smaller than any elementary particle or distance that we could measure, is the ultimate quantum uncertainty in location. It is the scale at which gravitational and quantum effects are equally strong. What that means is that, when the Universe was still that small, it could be understood only through a theory that includes both gravity and quantum mechanics.
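For readers who like to see where such numbers come from, here is a minimal sketch in Python. It assumes the standard textbook definitions of the Planck units as combinations of the reduced Planck constant, Newton's gravitational constant and the speed of light; the constant values are the published CODATA figures, and the function name is simply an illustration.

```python
import math

# Physical constants in SI units (CODATA values)
HBAR = 1.054571817e-34   # reduced Planck constant, J s
G = 6.67430e-11          # Newton's gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0        # speed of light, m/s

def planck_units():
    """Return (length in m, mass in kg, time in s) built from hbar, G and c."""
    length = math.sqrt(HBAR * G / C**3)
    mass = math.sqrt(HBAR * C / G)
    time = length / C    # time for light to cross one Planck length
    return length, mass, time

planck_length, planck_mass, planck_time = planck_units()
print(f"Planck length: {planck_length:.2e} m")
print(f"Planck mass:   {planck_mass:.2e} kg")
print(f"Planck time:   {planck_time:.2e} s")
```

The results land within an order of magnitude of the round figures quoted above, which is all those figures are meant to convey.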

Such a theory is also necessary for black holes, those cosmic zones where mass is so concentrated that not even light can escape its gravitational effects. Black holes are common – they exist at the centre of our galaxy and many others – and though general relativity predicts them, it does not fully describe them. In particular, it is silent about what happens at their centres, where spacetime becomes infinitely curved. So, a decent theory of quantum gravity would shine a light into some mysterious places.

Sadly, our best efforts to develop this theory remain unconvincing. One idea is that gravity is carried by hypothetical particles, just like the other three forces. These ‘gravitons’ are predicted by string theory, in which elementary particles are quantum states of tiny vibrating strings. But string theory itself is controversial, mainly because, despite the beauty of its mathematics, it fails to generate anything resembling a testable prediction (as Einstein said: ‘If you are out to describe the truth, leave elegance to the tailor’). A competing theory called loop quantum gravity, in which spacetime itself has a quantum nature, also lacks testable implications.

What to do, then? In physics, mathematical beauty and ingenuity are never enough by themselves. What is needed is data – especially new data. And it happens that the prospects for that are suddenly very good.

Recall that quantum mechanics describes the micro-world of fundamental particles. General relativity, meanwhile, describes how celestial bodies operate over great distances – it governs the vast expanses of the cosmos. What we don’t yet know is what happens to quantum phenomena over long distances. In short, we haven’t tried to do quantum experiments where relativity gets in the way. Yet.

Today, the opportunity to do just that looks likely to emerge from a plan to improve our current telecommunications infrastructure. At the moment, a lot of data – internet, TV and suchlike – is transmitted as pulses of light through a global fibre-optic network. Those pulses are made of photons, of course, but the network does not make use of the photon’s exotic quantum properties. The new idea is to see what happens when it does.

Let’s look at photons for a moment. Each one has an electric field that can be polarised – that is, it can be made to point in either of two directions at right angles to one another, which at the Earth’s surface are ‘horizontal’ and ‘vertical’. We could indicate the former with a ‘0’ and the latter with a ‘1’. And once we’re thinking about our photon that way, it’s only a short leap to seeing it as a binary bit, like a switch in a computer processor. But there’s a twist. A regular computer bit is always 0 or 1, with no other options. The quantum nature of a photon, by contrast, allows it to represent 0 and 1 simultaneously. It’s a sort of super-bit. We call it a ‘quantum bit’, or qubit for short.
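What ‘0 and 1 simultaneously’ means in practice can be sketched in a few lines of Python. This is a toy model, not a description of any real apparatus: it assumes a photon's polarisation state is written as two amplitudes, one for horizontal and one for vertical, and that a measurement returns 0 or 1 with probabilities given by the squared amplitudes. The function and variable names are hypothetical.

```python
import math
import random

def measure_polarisation(alpha, beta, rng):
    """Measure a photon in the state alpha|H> + beta|V> in the H/V basis.

    Returns 0 (horizontal) with probability |alpha|^2, otherwise 1 (vertical).
    The amplitudes are assumed to be normalised: |alpha|^2 + |beta|^2 = 1.
    """
    probability_horizontal = abs(alpha) ** 2
    return 0 if rng.random() < probability_horizontal else 1

rng = random.Random(1)

# An equal superposition: a photon polarised diagonally, at 45 degrees.
alpha = beta = 1 / math.sqrt(2)

results = [measure_polarisation(alpha, beta, rng) for _ in range(10_000)]
fraction_vertical = sum(results) / len(results)
print(f"Fraction measured as 1 (vertical): {fraction_vertical:.3f}")
```

Each individual photon gives a definite answer when measured, but which answer it gives is random; only the statistics over many photons reveal the superposition.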

Qubit-based computers, now being designed, are expected to far outperform ordinary computers for certain problems. And there’s one area where the use of qubits is anticipated with particular eagerness: data security. In any communications system, sensitive information such as financial data can be encoded and sent to a recipient who has the key to the code. The trouble is, it’s always possible for a third party to sneak into the network and secretly learn the key. It was this kind of breach, for example, that recently leaked the credit card numbers of millions of customers of US retail chains such as Target and Home Depot. Qubits should prevent that.

Using a procedure called ‘quantum key distribution’ (QKD for short), the innate quantum uncertainty about the polarisation of each photon allows us to generate a long random string of 0s and 1s, which can then be sent as a totally secure key. It’s secure because any interception would be detected – reading the bits changes them, thanks to the Heisenberg Uncertainty Principle.
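To see why interception shows up, consider a toy simulation of BB84, one well-known QKD scheme. The choice of BB84 is an assumption made here for illustration, since no particular protocol is specified above, and the model is idealised: no photon loss, no detector noise, and an eavesdropper who measures every photon and resends it.

```python
import random

def bb84(n_photons, eavesdrop, rng):
    """Toy BB84 run. Returns (sifted key length, error rate in the sifted key)."""
    kept = 0
    errors = 0
    for _ in range(n_photons):
        # The sender picks a random bit and a random basis:
        # '+' is horizontal/vertical, 'x' is the two diagonals.
        bit = rng.randint(0, 1)
        sender_basis = rng.choice("+x")
        photon_bit, photon_basis = bit, sender_basis

        if eavesdrop:
            spy_basis = rng.choice("+x")
            if spy_basis != photon_basis:
                photon_bit = rng.randint(0, 1)   # wrong basis: random outcome
            photon_basis = spy_basis             # the photon is resent in the spy's basis

        receiver_basis = rng.choice("+x")
        if receiver_basis == photon_basis:
            received_bit = photon_bit
        else:
            received_bit = rng.randint(0, 1)     # wrong basis: random outcome

        # Sifting: keep only the photons where sender and receiver chose the same basis.
        if receiver_basis == sender_basis:
            kept += 1
            errors += received_bit != bit
    return kept, errors / kept

for spy in (False, True):
    kept, error_rate = bb84(20_000, spy, random.Random(7))
    print(f"eavesdropper={spy}: kept {kept} bits, error rate {error_rate:.3f}")
```

In this idealised model an undisturbed channel produces no errors, while a spy who reads every photon corrupts roughly a quarter of the sifted bits. Comparing a sample of the key over an ordinary channel is enough to expose the intrusion.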

Well, that’s the theory. In practice, it turns out that photon qubits cannot reliably be sent through long stretches of optical fibre. So researchers are developing an audacious new plan for a secure global data network: they intend to transmit qubits between ground stations and space satellites in low Earth orbit (LEO) at altitudes up to 2,000 km.

There are groups working on this project at the Institute for Quantum Computing (IQC), part of the University of Waterloo in Canada, and at the Centre for Quantum Technologies in Singapore. The most advanced effort, supported by the Chinese Academy of Sciences, is based at the University of Science and Technology of China in Hefei (USTC). Its stated goal is to launch an LEO Quantum Science satellite in 2016, equipped to test both secure quantum communications and fundamental quantum effects.

Once qubits can be exchanged with this or any other satellite, we can begin examining quantum mechanics in space. Surely the first thing to look into would be the exotic effect of entanglement – what Einstein called ‘spooky action at a distance’. Entanglement means that, once two quantum particles have interacted, they remain linked no matter how far apart, so that measuring one instantly affects the other. We can, for instance, prepare a photon pair so that they are oppositely polarised (horizontal and vertical, 0 and 1), without knowing which is which. As soon as the polarisation of photon 1 is measured, no matter what the result, measurement of photon 2 will reveal the other value with no physical connection between the two.

The strangeness of quantum entanglement was first laid out in the 1935 ‘EPR’ paper by Einstein, Boris Podolsky and Nathan Rosen. EPR argued that entanglement contradicts ‘local realism’, which is the idea that objects have innate properties independent of measurement and that they cannot affect each other any sooner than light can travel between them. In an amazing tour de force in 1964, the Northern Irish theorist John Bell showed that, if data from two entangled particles violate a mathematical relationship called Bell’s Inequality (as it appears they do), they must violate local realism. And they must violate it ‘instantaneously’, through a quantum feature such that ‘the setting of one measuring device can influence the reading of another instrument however remote’ (emphasis mine).
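The gap between local realism and quantum mechanics can be put into numbers. The sketch below uses the CHSH form of Bell’s Inequality, a later variant chosen here because it is easy to compute; it is not Bell’s original 1964 expression. It assumes the textbook quantum prediction for oppositely polarised photon pairs, in which the correlation between two polariser settings depends only on the angle between them, and the measurement angles are the standard ones that maximise the effect.

```python
import math
from itertools import product

def quantum_correlation(a_deg, b_deg):
    """Predicted correlation for an oppositely polarised entangled pair,
    with polarisers set at angles a_deg and b_deg."""
    return -math.cos(2 * math.radians(a_deg - b_deg))

def chsh(correlation, a, a_alt, b, b_alt):
    """CHSH combination of four correlations, two settings per observer."""
    return (correlation(a, b) - correlation(a, b_alt)
            + correlation(a_alt, b) + correlation(a_alt, b_alt))

# Quantum prediction at the standard angle choices.
s_quantum = chsh(quantum_correlation, 0.0, 45.0, 22.5, 67.5)

# Local realism: every outcome is fixed in advance as +1 or -1.
# Trying all 16 possible assignments gives the largest value it allows.
s_local_max = max(
    abs(A * B - A * B_alt + A_alt * B + A_alt * B_alt)
    for A, A_alt, B, B_alt in product((-1, 1), repeat=4)
)

print(f"Largest |S| under local realism: {s_local_max}")
print(f"Quantum prediction for |S|:      {abs(s_quantum):.3f}")
```

Any set of pre-agreed answers is capped at 2, while the quantum prediction reaches 2√2, about 2.83. Experiments that measure a value above 2 are what rule out local realism.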

However remote? Entanglement has been tested up to a distance of 143 km, by Anton Zeilinger at the University of Vienna. In 2012, Zeilinger’s former student Jian-Wei Pan, who heads the USTC quantum satellite effort, achieved entanglement over distances nearly as large. And then, in an important ‘proof of principle’ demonstration in 2013, he managed to transmit photon qubits 800 km from a ground station to an orbiting German satellite and back. Results like these start to make quantum research in space look very doable.

So what can we hope to investigate? Conveniently, the Institute for Quantum Computing recently published a survey of the most exciting avenues. The first experiments to push into new regimes would use LEO satellites to test entanglement up to a distance of 2,000 km. If entanglement survives and Bell’s Inequality is still violated, that would give the strongest evidence yet against local realism. An important result. But if either failed within that distance, then the meaning of entanglement would have to be utterly rethought. That would be even more interesting.

LEO measurements could also support a test not possible on Earth. When an observer measures one of an entangled pair of photons, in principle that simultaneously determines the state of its partner as measured by a second observer. But in special relativity, the meaning of the word ‘simultaneous’ is complicated. Observers moving relative to one another who measure two physically separated events will disagree over who made the measurement first. The time difference is too small to measure at earthly speeds, but LEO satellites move fast enough that the apparent paradox could be examined to explore the supposedly instantaneous nature of entanglement.
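A rough calculation shows why satellite speeds matter. The sketch below applies the Lorentz transformation from special relativity to two events that are simultaneous for an observer on the ground. The orbital speed of 7.6 km/s and the 1,000 km separation are illustrative assumptions, not figures from any planned mission.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def simultaneity_offset(speed, separation):
    """Time gap, as seen by a moving observer, between two events that are
    simultaneous on the ground and `separation` metres apart along the
    direction of motion. Follows from t' = gamma * (t - v * x / c^2)."""
    gamma = 1.0 / math.sqrt(1.0 - (speed / C) ** 2)
    return -gamma * speed * separation / C**2

# Assumed example values: a walker on the ground and a satellite in low orbit.
walking = simultaneity_offset(1.0, 1_000.0)        # 1 m/s, events 1 km apart
satellite = simultaneity_offset(7_600.0, 1.0e6)    # 7.6 km/s, events 1,000 km apart

print(f"Walking pace: {abs(walking):.1e} s")
print(f"LEO satellite: {abs(satellite):.1e} s")
```

The satellite case comes out at tens of nanoseconds, within reach of modern timing electronics, whereas the everyday case is millions of times smaller. The sign of the offset flips with the direction of motion, which is exactly why two observers can disagree about who measured first.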

However, current quantum technology might be sufficient to transfer qubits and test entanglement still further out – to geostationary satellites 36,000 km above the Earth’s equator, and even 10 times further, to the Moon. At these distances, the curvature of spacetime starts to become important. For that reason, Thomas Jennewein, a lead author of the IQC paper, thinks experiments in this broader regime are most likely to discover something big.

Such as? One example concerns what is called gravitational redshift. We know that a photon’s wavelength moves towards the red end of the spectrum as the photon climbs upwards against gravity. The reason is that time dilates – that is, clocks literally run slower – the more intense the gravitational field becomes. Even in the short climb to a LEO satellite, photon qubits ought to be redshifted, which would mean we can determine whether time dilation applies at the quantum level – another important result. But if we try this and similar experiments at greater distances, we should see more intricate examples of quantum-gravitational interaction.
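How big is the redshift? A minimal estimate, assuming the standard weak-field formula from general relativity and a receiver that is hovering rather than orbiting (so the much larger Doppler shift from a real satellite's motion is ignored), looks like this. The Earth values are standard reference figures; the function name is hypothetical.

```python
GM_EARTH = 3.986004418e14  # Earth's gravitational parameter, m^3 s^-2
R_EARTH = 6.371e6          # mean radius of the Earth, m
C = 299_792_458.0          # speed of light, m/s

def fractional_redshift(altitude_m):
    """Fractional drop in frequency for a photon climbing from the ground
    to a static receiver at the given altitude (weak-field approximation)."""
    return (GM_EARTH / C**2) * (1.0 / R_EARTH - 1.0 / (R_EARTH + altitude_m))

for label, altitude in [("LEO, 2,000 km", 2.0e6),
                        ("geostationary, 36,000 km", 3.6e7)]:
    print(f"{label}: {fractional_redshift(altitude):.2e}")
```

The shift is a few parts in ten billion: tiny, but well within the resolution of precision optical measurements, and it grows as the receiver moves further out.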

For one thing, the curvature of spacetime would affect the polarisation of photons and hence any measurements of quantum entanglement. Near the Earth’s surface, photons follow straight lines, which means that their horizontal and vertical polarisation directions are fixed. This allows us to do clear-cut entanglement experiments in which measuring photon 1 as ‘horizontal’ makes photon 2 yield ‘vertical’, and vice-versa. But if two entangled photons follow different relativistic curved paths in space, the directions of their electric fields and polarisations would seem to depend on the details of the curvatures. In this way relativity becomes mixed into an innately quantum mechanical effect.

Polarised photons might also allow us to explore the hypothesis that spacetime itself is quantised – that is, not smooth as in general relativity, but a granular structure made of discrete Planck-sized cells. Evidence for this would be a huge step towards a theory of quantum gravity. The trouble is, we don’t currently have any hope of probing these tiny units directly. This difficulty has inspired physicists to come up with an alternative – the Holometer at the Fermi National Accelerator Laboratory near Chicago. This sensitive device is designed to detect the ‘jitter’ in laser beams as they are affected by the randomness inherent in a quantised spacetime. Some observers are sceptical that this will pay off, but data collection has started and could yield results relatively soon.

By contrast, an approach based on polarised photons in space came in 2010 from researchers at Imperial College, London. Each time a photon traverses a Planck cell, quantum randomness would slightly shift the direction of the photon’s electric field. The accumulation of many quantum ‘kicks’ as a photon travels a long distance through numerous cells would measurably change the polarisation, indicating a granular spacetime. The distance would need to be billions of kilometres at least – the size of the Solar System – which is far beyond present quantum technology. But it’s nice to dream big: the IQC paper classifies this experiment as ‘visionary’. Whether that means ‘potentially ground-breaking’ or ‘slightly off the reservation’ is unclear.

Similarly visionary experiments could use entanglement to examine the history of the Universe. In theory, as the cosmos grew from its tiny beginnings, the corresponding changes in spacetime curvature should have altered entanglement in ways that can be traced back to presently unknown details of cosmic development. Entanglement studies could even bear on an old cosmological question: are the physical laws that we have derived on Earth valid for the whole Universe? Since the time of Copernicus, scientists and philosophers have considered this question from different perspectives, but have had little direct evidence to draw on. If entanglement proves to be truly infinite in scope, it could be the ultimate tool to glean answers from distant cosmic locations.

These wild schemes would have to use spacecraft operating far from Earth. But we’ve done that before: NASA’s Voyager 1, launched in 1977 and now 20 billion km away, is still out there in interstellar space, sending back data (as we noted earlier, space travel is pretty cheap once you’ve got going).

Voyager was never equipped to handle qubits or entangled photons, of course, but Singapore’s Centre for Quantum Technologies has just tested a compact source of entangled photons suitable for spacecraft. There are huge technical challenges to overcome. It will be difficult to maintain entanglement over these distances. Even so, we can imagine putting such a source on a space probe and sending it off beyond the edge of the Solar System. Like Voyager, this vessel would have to travel for many years to reach a suitable distance. Its discoveries would lie a long way in our future.

More immediately, we can look forward to meaningful LEO satellite measurements, assuming that the underlying quantum network can be established. There are good reasons to think it can. The Chinese government is putting considerable resources into its quantum satellite programme. Western capitalism is well-motivated to weigh in, too. A recent IQC market study asserts that in a world where data security is under heavy attack, QKD represents a ‘potentially lucrative business opportunity’ because its fundamental quantum principles will always be proof against hackers. The study predicts that satellite-based QKD will form an important piece of a multibillion-dollar global quantum cryptography market. That ought to focus some energy.

Governments and financial institutions such as major banks will welcome the day when quantum satellites can securely transmit sensitive data. But the biggest winners of the quantum network will surely be researchers eager for new fundamental discoveries. And that day could well mark the first time in history when banks too big to fail test our best physical theories to destruction.