Tamaki Sono/Flickr

Many people view the internet as an unstoppable force with regenerative powers. They believe our insatiable appetite for information ensures that even if data gets removed from a particular website, it’s just a matter of time before the vanquished material reappears elsewhere. Just look at how the BBC resisted Google’s attempts to comply with the European Union’s so-called ‘Right to Be Forgotten’ of 2014. When links to its stories were removed from the search engine, the BBC aggregated and reposted the delisted information on its own website. From this perspective, the internet is a hydra, the mythical beast that could grow back any head that had been chopped off.

Don’t buy into this fallacy. In reality, the internet is more like a bustling city than a hydra. There are glitzy neighbourhoods: safe, family-friendly and with well-lit streets. But there are also seedy underbellies to be navigated only by those in the know, as well as plenty of dark alleys, forgotten corners and hidden haunts. Keep in mind, too, that many people, especially tourists, will choose where they go based on convenience. If it’s hard to get from A to B, fewer people will make the trip. And as any city planner will tell you, if you want more traffic, build a closer exit ramp. It’s not that we’re all lazy. We simply have limited attention spans and finite resources for gathering information and making decisions. Transaction costs affect behaviour, and this is as much the key to urban navigation as it is to preserving our privacy online.

When Google voluntarily delisted links to revenge porn from its search engine in June, it made the non-consensually published images more taxing, but not impossible, to find. It’s as if the main road to revenge porn had been closed. Sure, other routes can take you there, but they’re off the main thoroughfare. Google deserves credit for enhancing privacy protections by bolstering obscurity.

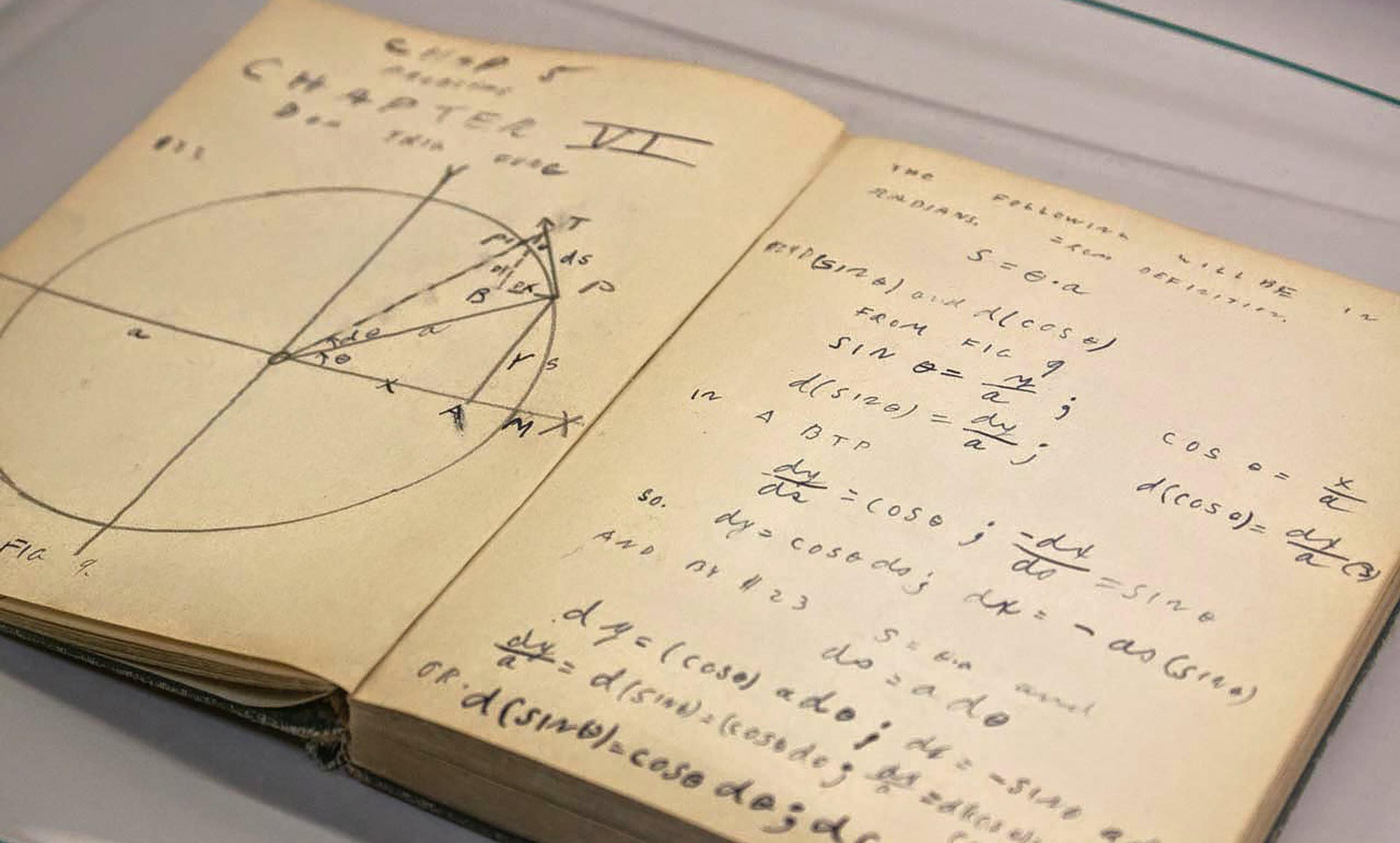

If information is hard to find or understand – when it’s obscure – it’s safer. We rely upon obscurity all the time to protect both trivial and significant personal information. Indeed, we grasp this point in our everyday lives by recognising that face-to-face conversations are protected by zones of obscurity which revolve around constraints such as our limited memories and the limited number of people who know what we look like.

Laws can foster obscurity by introducing transaction costs that make it expensive to find information. For example, the ‘Right to Be Forgotten’ is really more of a ‘Right to Be Obscure’. It facilitates delisting from search engines, though not full-blown take-downs from original publishers or erasure from databases. Similarly, California’s ‘Eraser Button’ Law ensures that minors can delete their own social media posts, even though its efficacy is limited when others continue to disseminate the information.

Technology can protect obscurity too. YouTube’s ‘face blurring’ tool can keep facial recognition technologies from identifying people who prefer to remain anonymous, such as protesters. And the robots.txt protocol was keeping web pages out of search engine indexes long before the ‘Right to Be Forgotten’ existed. Even social media privacy settings are better thought of as obscurity settings. They merely make information harder to find, and yet their modest privacy protections might be all we need to safeguard against most social disclosures.
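To make the robots.txt point concrete, here is a minimal sketch in Python of how a well-behaved crawler might consult such a file before visiting a page. The rules, the crawler name `ExampleBot`, the `example.com` addresses and the helper `may_crawl` are all hypothetical, invented for illustration. Only `urllib.robotparser` comes from the standard library.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an imaginary site: it asks every crawler
# to stay out of anything under /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def may_crawl(url: str, user_agent: str = "ExampleBot") -> bool:
    """Return True if the rules above permit user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://example.com/blog/post.html",
        "https://example.com/private/diary.html",
    ):
        print(url, "->", "allowed" if may_crawl(url) else "disallowed")
```

Note what the sketch does not do: the page under `/private/` stays online, and nothing stops a crawler that chooses to ignore the file. The protocol is a request that compliant search engines honour, which is why it adds obscurity rather than secrecy.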

Once you appreciate what obscurity is, you begin to get a sense of what’s wrong with the widely shared view that privacy interests are forfeited once information is publicly shared. As per the hydra view, if information is shared on the internet, you can’t limit who will see it. After all, it’s saved online, and you can’t take it back if someone or some company decides to spread, aggregate or highlight it. In a similar manner, if you leave your house, it’s incredibly easy to be recorded, studied and discussed. The surveilled information can be disseminated online and integrated with personal data that’s already on the internet.

But if privacy protections are rendered meaningless just because information is available to some audiences, there’s no point resisting facial recognition technology, drones, wearables such as Google Glass, data brokers and search engines! It’s also futile to even try to prevent personal information from being digitised and put online for all the world to study or nefarious industries to exploit. Of course, we shouldn’t want to live in a world where you have to forsake all privacy expectations the moment you step outside. You should be able to enjoy what a city has to offer without fearing that you’re losing something of yourself when you do.

All of these technologies and practices can be limited by laws, policies, norms and design choices that delay prying eyes and disperse personal information – at least if there’s enough will and ingenuity to back them up. For example, while determined hackers can’t be stopped from uploading illegitimate software to Glass, Google’s prohibition against facial recognition apps cut off facial recognition at the source. Bans remain a live option, too. Several states have prohibited, or are considering prohibiting, automatic licence‑plate readers that make collecting and aggregating the whereabouts of motorists much easier than eyewitness observation.

Yes, innovation will continue to challenge obscurity. But that doesn’t mean the entire enterprise should be abandoned. Instead, we should embrace our Sisyphean fate and wash our hands of the unrealistic hope of ever achieving perfect privacy protections. Delay. Disperse. Obfuscate. Et cetera. Repeat, over and over. Is this ideal? Of course not. But that’s okay. Privacy panaceas are as mythical as hydras.