Here’s a simple recipe for doing science. Find a plausible theory for how some bits of the world behave, make predictions, test them experimentally. If the results fit the predictions, then the theory might describe what’s really going on. If not, you need to think again. Scientific work is vastly diverse and full of fascinating complexities. Still, the recipe captures crucial features of how most of it has been done for the past few hundred years.

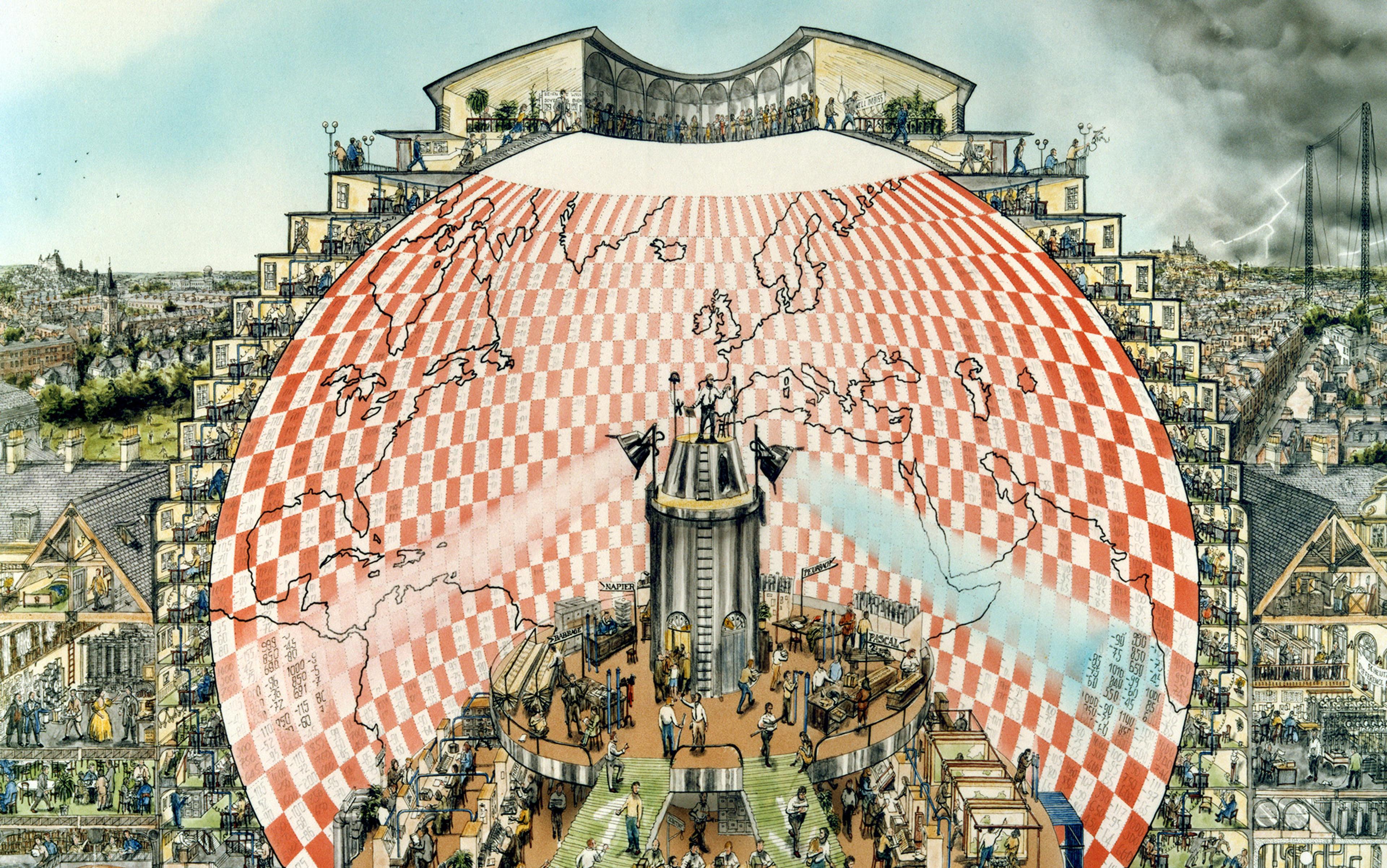

Now, however, there is a new ingredient. Computer simulation, only a few decades old, is transforming scientific projects as mind-bending as plotting the evolution of the cosmos, and as mundane as predicting traffic snarl-ups. What should we make of this scientific nouvelle cuisine? While it is related to experiment, all the action is in silico — not in the world, or even the lab. It might involve theory, transformed into equations, then computer code. Or it might just incorporate some rough approximations, which are good enough to get by with. Made digestible, the results affect us all.

As computer modelling has become essential to more and more areas of science, it has also become at least a partial guide to headline-grabbing policy issues, from flood control and the conserving of fish stocks, to climate change and — heaven help us — the economy. But do politicians and officials understand the limits of what these models can do? Are they all as good, or as bad, as each other? If not, how can we tell which is which?

Modelling is an old word in science, and the old uses remain. It can mean a way of thinking grounded in analogy — electricity as a fluid that flows, an atom as a miniature solar system. Or it can be more like the child’s toy sense of model: an actual physical model of something that serves as an aid to thought. Recall James Watson in 1953 using first cardboard, then brass templates cut in the shape of the four bases in DNA so that he could shuffle them around and consider how they might fit together in what emerged as the double-helix model of the genetic material.

Computer models are different. They’re often more complex, always more abstract and, crucially, they’re dynamic. It is the dynamics that call for the computation. Somewhere in the model lies an equation or set of equations that represent how some variables are tied to others: change one quantity, and working through the mathematics will tell you how it affects the rest. In most systems, tracking such changes over time quickly overwhelms human powers of calculation. But with today’s super-fast computers, such dynamic problems are becoming soluble. Just turn your model, whatever it is, into a system of equations, let the computer solve them over a given period, and, voila, you have a simulation.

In this new world of computer modelling, an oft-quoted remark made in the 1970s by the statistician George Box remains a useful rule of thumb: ‘all models are wrong, but some are useful’. He meant, of course, that while the new simulations should never be mistaken for the real thing, their features might yet inform us about aspects of reality that matter.

To get a feel for the range of models currently in use, and the kinds of trade-offs and approximations model builders have to adopt, consider the certifiably top-notch modelling application that just won its authors the 2013 Nobel Prize for chemistry. Michael Levitt, professor of structural biology at Stanford University, along with professors of chemistry Martin Karplus of Harvard and Arieh Warshel of the University of Southern California, were honoured for modelling chemical reactions involving extremely large molecules, such as proteins. We know how these reactions work in principle, but calculating the full details — governed by quantum mechanics — remains far beyond our computers. What you can do is calculate the accurate, quantum mechanical results for the atoms you think are important. Levitt’s model simply treats the rest of the molecule as a collection of balls connected by springs, whose mechanical behaviour is easier to plot. As he explained in Nature in October: ‘The art is to find an approximation simple enough to be computable, but not so simple that you lose the useful detail.’

Because it’s usually easy to perform experiments in chemistry, molecular simulations have developed in tandem with accumulating lab results and enormous increases in computing speed. It is a powerful combination. But there are other fields where modelling benefits from checking back with a real, physical system. Aircraft and Formula One car designs, though tested aerodynamically on computers, are still tweaked in the wind-tunnel (often using a model of the old-fashioned kind). Marussia F1 (formerly Virgin Racing) likewise uses computational fluid dynamics to cut down on expensive wind-tunnel testing, but not as a complete substitute. Nuclear explosion simulations were one of the earliest uses of computer modelling, and, of course, since the test-ban treaty of 1996, simulated explosions are the only ones that happen. Still, aspects of the models continue to be real-world tested by creating extreme conditions with high-power laser beams.

More often, though — and more worryingly for policymakers — models and simulations crop up in domains where experimentation is harder in practice, or impossible in principle. And when testing against reality is not an option, our confidence in any given model relies on other factors, not least a good grasp of underlying principles. In epidemiology, for example, plotting the spread of an infectious disease is simple, mathematically speaking. The equations hinge on the number of new cases that each existing case leads to — the crucial quantity being the reproduction number, R0. If R0 is bigger than one, you have a problem. Get it below one, and your problem will go away.
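
The arithmetic behind that threshold is easy to sketch. What follows is a toy illustration, not any epidemiologist's actual model: it assumes R0 stays constant from one generation of infection to the next and that the supply of susceptible hosts never runs down. The function name `cases_by_generation` is made up for this example.

```python
def cases_by_generation(r0, initial_cases, generations):
    """Expected new cases in each generation of infection.

    Assumes (unrealistically) that every case causes exactly r0 new
    cases and that r0 never changes as the outbreak proceeds.
    """
    counts = [float(initial_cases)]
    for _ in range(generations):
        # Each existing case leads, on average, to r0 new ones.
        counts.append(counts[-1] * r0)
    return counts


# R0 above one: the numbers climb generation after generation.
growing = cases_by_generation(r0=2.0, initial_cases=10, generations=5)

# R0 below one: the same arithmetic makes the outbreak fade.
shrinking = cases_by_generation(r0=0.5, initial_cases=10, generations=5)

print(growing)
print(shrinking)
```

With R0 at 2, ten cases become hundreds within five generations; with R0 at 0.5, the same ten dwindle to a fraction of a case. Everything difficult in real epidemiology lies in estimating R0 and in the assumptions this sketch leaves out.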

Such modelling proved impressively influential during the 2001 foot and mouth epidemic in the UK, even as it called for an unprecedented cull of livestock. The country’s chief scientific adviser, David King, assured prime minister Tony Blair that the cull would ‘turn exponential growth to exponential decay within two days’. It worked, freeing Blair to call a general election after a month’s postponement — an unexpected result from a computer model.

King is a chemist, but he understood what the epidemiologists were saying, because models for following a virus travelling from one farm to another were ‘very similar’ to models he’d published in the 1980s describing the pattern of diffusion of molecules on solid surfaces. He also had the advantage that domesticated animals (in this case, cows, sheep and pigs) are easy to manage: we have good information about how many there are, and where. Unlike humans, or wild animals (where this year’s UK badger cull comes to mind), movement can be prohibited easily. Plus, we can kill a few million livestock with surprisingly little protest.

Ease of repetition is another mark of good modelling, since it gives us a good idea of how sensitive the results are to variations in starting conditions. This is a crucial consideration that, at the risk of sounding circular, we first learnt about from computer models in meteorology. Repetition becomes expensive with bigger, more complex models that require precious supercomputer time. Yet, for all that, we seem increasingly to be discussing results from models of natural phenomena that are neither well-understood, nor likely to respond to our tampering in any simple way. In particular, as Naomi Oreskes, professor of the history of science at Harvard, notes, we use such models to study systems that are too large, too complex, or too far away to tackle any other way. That makes the models indispensable, as the alternative is plain guessing. But it also brings new dimensions of uncertainty.
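
Sensitivity to starting conditions can be seen in something far simpler than a weather model. The sketch below uses the logistic map, a standard textbook example of chaotic behaviour, as a stand-in; it is not a meteorological model, and the function name and the choice of r = 4.0 are assumptions made purely for illustration. Two runs begin a billionth apart and are then compared step by step.

```python
def logistic_trajectory(x0, r=4.0, steps=50):
    """Iterate the logistic map x -> r * x * (1 - x) from x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two runs whose starting points differ by one part in a billion.
run_a = logistic_trajectory(0.2)
run_b = logistic_trajectory(0.2 + 1e-9)

# Gap between the two runs at every step.
gaps = [abs(a - b) for a, b in zip(run_a, run_b)]

print("largest gap in the first 10 steps:", max(gaps[:10]))
print("largest gap over all 50 steps:", max(gaps))
```

For the first few steps the two runs are indistinguishable; by the end they bear no relation to each other. Repeating a model from slightly varied starting points is how one finds out whether it behaves like this.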

Think of a typical computer model as a box, with a linked set of equations inside that transforms inputs into outputs. The way the inputs are treated varies widely. So do the inputs and outputs. Since models track dynamics, part of the input will often be the output of the last cycle of calculation — as with the temperature, pressure, wind and rainfall data from a weather forecast — and part will be external influences on the results, such as the energy transferred from outside the limits of the forecast area. Run the model once, and you get a timeline that charts how things might turn out, a vision of one possible future.
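
The box can be made concrete with a deliberately tiny example. The sketch below assumes a single quantity, a temperature that relaxes towards an outside value fed in from beyond the model's boundary; the rule inside `step`, the names `step` and `run`, and the rate of 0.1 are all invented for illustration and stand in for the linked equations of a real model.

```python
def step(temperature, outside, rate=0.1):
    """One cycle of the box: the previous output plus an external
    influence go in, and the next state comes out."""
    return temperature + rate * (outside - temperature)


def run(initial, outside_series):
    """Feed each cycle's output back in as the next cycle's input."""
    timeline = [initial]
    for outside in outside_series:
        timeline.append(step(timeline[-1], outside))
    return timeline


# One run gives one timeline: a single possible future.
timeline = run(initial=20.0, outside_series=[10.0] * 50)
print(timeline[:5], "...", timeline[-1])
```

A weather model differs in having millions of such quantities and far richer equations, but the loop has the same shape: state in, state out, repeat.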

There are numerous possible sources of fuzziness in that vision. First, you might be a bit hazy about the inputs derived from observations: the tedious but important stuff of who measured what, when, and whether the measurements were reliable. Then there are the processes represented in the model that are well understood but can’t be handled precisely because they happen on the wrong scale. Simulations typically concern continuous processes that are sampled to furnish data (and calculations) that you can actually work with. But what if significant things happen below the sampling size? Fluid flow, for instance, produces atmospheric eddies on the scale of a hurricane, down to the draft coming through your window. In theory, they can all be modelled using the same equations. But while a climate modeller can include the large ones, the smaller scales have to be approximated if the calculation is ever going to end.

Finally, there are the processes that aren’t well-understood — climate modelling is rife with these. Modellers deal with them by putting in simplifications and approximations that they refer to as parameterisation. They work hard at tuning parameters to make them more realistic, and argue about the right values, but some fuzziness always remains.

There are some policy-relevant models where the uncertainties are minimal. Surface flooding is a good example. It is relatively easy to work out which areas are likely to be under water for a given volume of river flow: the water moves under gravity, you allow for the friction of the surface, and use up-to-date data from airborne lasers that can measure surface height to within five centimetres. But this doesn’t tell you when the next flood will come. That depends on much fuzzier models of weather and climate. And there are plenty of harder problems in hydrology than surface flooding; for example, anything under the ground taxes modellers hugely.

In epidemiology, plotting the likely course of human epidemics depends on a far larger package of assumptions about biology and behaviour than for a disease of cattle. Biologically, a simple-looking quantity such as the reproduction number R0 still depends on a host of factors that have to be studied in detail. The life cycle of the infecting organism and the state of the host’s immune system matter. And if you’re modelling, say, a flu outbreak, then you must consider who will stay at home when they start sneezing; who will get access to drugs to dampen down their symptoms; who may have had a vaccine in advance, and so on. The resulting models might factor in hundreds of elements, and the computers then check possible variations to see which matter most. Even so, they were accurate enough to help frame the policies that contained the outbreak of severe acute respiratory syndrome (SARS) in 2002. And the next flu epidemic will see public health departments using models to predict the flow of hospital admissions they need to plan for. It would be harder to model a totally new disease, but we would know the factors likely to influence its spread.

When the uncertainties are harder to characterise, evaluating a model depends more on stepping back, I think, and asking what kind of community it emerges from. Is it, in a word, scientific? And what does that mean for this new way of doing science?

While disease models draw on ideas about epidemic spread that predate digital computers, and are scientifically well-grounded, climate models are much more complex and are a large, ramified work in progress. What’s more, the earth system is imperfectly understood, so uncertainties abound; even aspects that are well-understood, such as fluid flow equations, challenge the models. Tim Palmer, professor in climate physics at the University of Oxford, says the equations are the mathematical equivalent of a Russian doll: they unpack in such a way that a simple governing equation is actually shorthand for billions and billions of equations. Too many for even the fastest computers.

Then there is the input data, a subject of much controversy — some manufactured, some genuine. Imagine setting out to run a global simulation of anything. A little thought shows that the data will come from many measurements, in many instruments, in many places. Human fallibility and the complexities of the instrumentation mean a lot of careful work is needed to standardise it and make it usable. In fact, there are algorithms — models, by another name — to help clean up the temperature, air pressure, humidity and other data points that climate models need. In A Vast Machine (2010), which chronicles the history of climate models, Paul Edwards, a historian at the University of Michigan, shows that the past few decades have seen an immense collective endeavour to merge data collection with model design, as both co-evolve. At its best, this endeavour is systematically self-critical — in the way that good science has to be.

The way to regard climate models, Edwards and others suggest, is not, contrary to the typical criticism, as arbitrary constructs that produce the results modellers want. Rather, as the philosopher Eric Winsberg argues in detail in Science in the Age of Computer Simulation (2010), developing useful simulations is not that different from performing successful experiments. An experiment, like a model, is a simplification of reality. Deciding what counts as a good one, or even what counts as a repeat of an old one, depends on intense, detailed discussions between groups of experts who usually agree about fundamentals.

Of course, uncertainties remain, and can be hard to reduce, but Reto Knutti, from the Institute for Atmospheric and Climate Science in Zurich, says that does not mean the models are not telling us anything: ‘For some variables and scales, model projections are remarkably robust and unlikely to be entirely wrong.’ There aren’t any models, for example, that indicate that increasing levels of atmospheric greenhouse gases will lead to a sudden fall in temperature. And the size of the increase they do project does not vary over that wide a range, either.

However, it does vary, and that is unfortunate, because the difference between a global average increase of a couple of degrees centigrade, and four, five or six degrees centigrade, is generally agreed to be crucial. But we might have to resign ourselves to peering through the lens of models at a blurry image. Or, as Paul Edwards frames that future: ‘more global data images, more versions of the atmosphere, all shimmering within a relatively narrow band yet never settling on a single definitive line’. But then, the Nobel-anointed discovery of the Higgs boson depends on a constantly accumulating computer analysis of zillions of particle collisions, focusing the probability that the expected signature of the Higgs has actually been observed deep inside a detector of astonishing complexity. That image shimmers, too, but we accept it is no mirage.

One way to appreciate the virtues of climate models is to compare them with a field where mirages are pretty much the standard product: economics. The computer models that economists operate have to use equations that represent human behaviour, among other things, and by common consent, they do it amazingly badly. Climate modellers, all using the same agreed equations from physics, are reluctant to consider economic models as models at all. Economists, it seems, can just decide to use whatever equations they prefer.

Mainstream economic modellers seem interested in what they can make their theory do, not in actually testing it. The models most often discussed in academic journals, and the kind used for forecasting, assume that markets reach a point of stability, and that the people who buy and sell behave rationally, trying to maximise their gain.

The crash of 2008 led to a renewed questioning of those assumptions. Thomas Lux, professor of economics at the University of Kiel in Germany, together with seven colleagues in Europe and the US, wrote a searing critique of the ‘systemic failure’ of academic economics. ‘It is obvious, even to the casual observer,’ they wrote in 2009 in the journal Critical Review, ‘that these models fail to account for the actual evolution of the real world economy’. Joseph Stiglitz, professor of economics at Columbia University, told a London conference, organised by George Soros’s Institute for New Economic Thinking in 2010, that for ‘most of the work you see which says the economy is stable, there is no theory underlying that, it is just a religious belief’.

That being the case, it’s extremely hard to have a scientific community working co-operatively to improve the product. There are not enough agreed-upon fundamentals to sustain such an effort. As Robert Shiller, professor of economics at Yale and one of the winners of the 2013 Nobel Prize for economics, delicately put it in an opinion piece for Project Syndicate in September: ‘My belief is that economics is somewhat more vulnerable than the physical sciences to models whose validity will never be clear.’ Tony Lawson, an economic theorist at the University of Cambridge, argues more forcefully that the whole enterprise of mathematical description is so flawed that economics would be improved if it found other ways of analysing the system. In other words, no models at all might be better than the current models. This is where uncertainty maxes out.

Generalisations about modelling remain hard to make. Eric Winsberg is one of the few philosophers who has looked at them closely, but the best critiques of modelling tend to come from people who work with them, and who prefer to talk strictly about their own fields. Either way, the question is: ought we to pay attention to them?

It would be a shame if our odd relationship with economic models — can’t be doing with them, can’t live without them — produced a wider feeling that scientific models only reproduce the prejudices and predilections of the modeller. That is no more sensible than a readiness to embrace model projections simply because they result from computers whirring through lots of solutions to coupled equations, and are expressed in objective-looking numbers. Better to consider each kind of model on its merits. And that means developing a finer feel for the uncertainties in play, and how a particular research community handles them.

Repetition helps. Paul Bates, professor of hydrology at the University of Bristol, runs flood models. He told me: ‘The problem is that most modellers run their codes deterministically: one data set and one optimum set of parameters, and they produce one prediction. But you need to run the models many times. For some recent analyses, we’ve run nearly 50,000 simulations.’ The result is not a firmer prediction, but a well-defined range of probabilities overlaid on the landscape. ‘You’d run the model many times and you’d do a composite map,’ said Bates. The upshot, he added, is that ‘instead of saying here will be wet, here will be dry, each cell would have a probability attached’.
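
The idea of a composite map can be sketched in a few lines. This is not Bates’s flood code, which is not described here; it is a toy that assumes a strip of land cells with known heights, a water level drawn afresh from a bell curve on every run, and a cell that counts as wet whenever the water stands above it. The function name, the heights and the level statistics are all hypothetical.

```python
import random


def flood_probabilities(heights, runs=10_000, mean_level=2.0,
                        spread=0.5, seed=0):
    """Fraction of runs in which each cell ends up under water."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    wet_counts = [0] * len(heights)
    for _ in range(runs):
        # One run: a single uncertain input, here the water level.
        level = rng.gauss(mean_level, spread)
        for i, height in enumerate(heights):
            if level > height:
                wet_counts[i] += 1
    # Composite map: a probability for every cell, not a wet/dry verdict.
    return [count / runs for count in wet_counts]


heights = [0.5, 1.5, 2.0, 2.5, 4.0]  # metres, lowest cell first
probs = flood_probabilities(heights)
print([round(p, 2) for p in probs])
```

The lowest cell floods in almost every run and the highest in almost none, while the cells in between carry the interesting numbers. A single deterministic run would have declared each of them simply wet or dry.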

Reto Knutti in Zurich is similarly critical of his own field. He advocates more work on quantifying uncertainties in climate modelling, so that different models can be compared. ‘A prediction with a model that we don’t understand is dangerous, and a prediction without error bars is useless,’ he told me. Although complex models rarely help in this regard, he noted ‘a tendency to make models ever more complicated. People build the most complicated model they can think of, include everything, then run it once on the largest computer with the highest resolution they can afford, then wonder how to interpret the results.’ Again, note that the criticism comes from within the climate modelling community, not outside it. This is a good sign.

In areas where modelling is being intensively developed, ubiquitous computing power allows ordinary people to get hands-on experience of how models actually work. If an epidemiologist can run a disease model on a laptop, so can you or I. There are now a number of simple models that one can play with on the internet, or download to get a feel for how they respond when you turn the knobs and dials that alter key parameters.

You can even participate directly in ensemble testing, or distributed processing — effectively, repetition to the n-th degree. When I stop work, for example, my computer returns to doing a very small portion of the calculation needed for one of many runs of a UK Met Office climate model that tries to take account of interactions between oceans and atmosphere at maximum resolution. After a month, the readout tells me I am 38.81 per cent through my allocated task — itself, one among thousands running on home computers whose owners have registered with the open project Climateprediction.net.

You can also download economic modelling packages on your PC, though none, as far as I know, is part of a crowd-sourced effort to improve the actual models. That seems a pity. I don’t expect my infinitesimal contribution to citizen science to yield any appreciable reduction in the range of climate predictions. But it reinforces my respect for the total effort to build models that incorporate our best understanding of a planet-sized system. We cannot all get involved in experiments that make a difference, but in the age of computers, and with the right kind of expert community inviting participation, we can all play a small part in the new science of modelling and simulation.