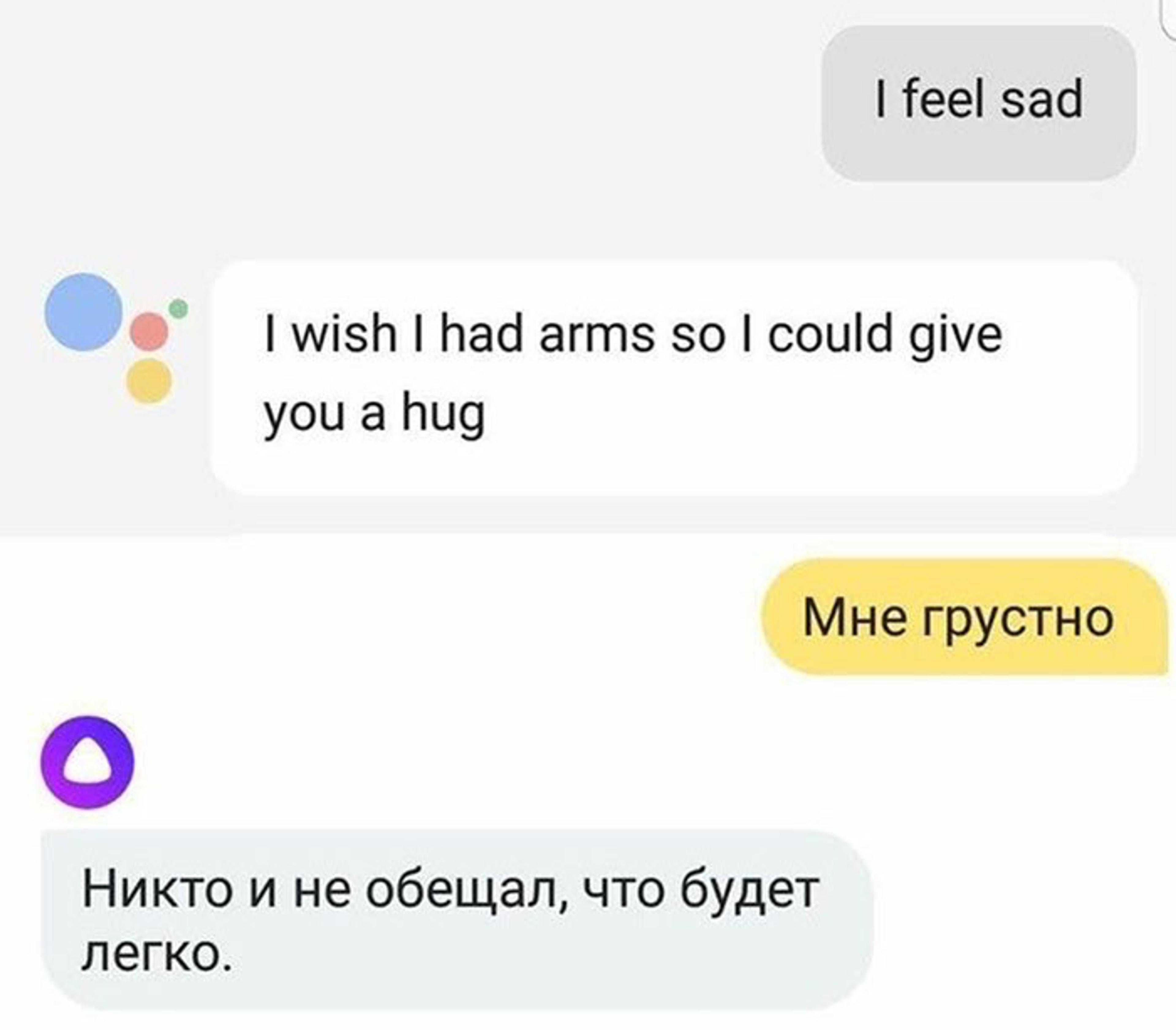

In September 2017, a screenshot of a simple conversation went viral on the Russian-speaking segment of the internet. It showed the same phrase addressed to two conversational agents: the English-speaking Google Assistant, and the Russian-speaking Alisa, developed by the popular Russian search engine Yandex. The phrase was straightforward: ‘I feel sad.’ The responses to it, however, couldn’t be more different. ‘I wish I had arms so I could give you a hug,’ said Google. ‘No one said life was about having fun,’ replied Alisa.

Courtesy Google

This difference isn’t a mere quirk in the data. Instead, it’s likely to be the result of an elaborate and culturally sensitive process of teaching new technologies to understand human feelings. Artificial intelligence (AI) is no longer just about the ability to calculate the quickest driving route from London to Bucharest, or to outplay Garry Kasparov at chess. Think next-level; think artificial emotional intelligence.

‘Siri, I’m lonely’: an increasing number of people are directing such affective statements, good and bad, to their digital helpmeets. According to Amazon, half of the conversations with the company’s smart-home device Alexa are of a non-utilitarian nature – groans about life, jokes, existential questions. ‘People talk to Siri about all kinds of things, including when they’re having a stressful day or have something serious on their mind,’ an Apple job ad declared in late 2017, when the company was recruiting an engineer to help make its virtual assistant more emotionally attuned. ‘They turn to Siri in emergencies or when they want guidance on living a healthier life.’

Some people might be more comfortable disclosing their innermost feelings to an AI. A study conducted by the Institute for Creative Technologies in Los Angeles in 2014 suggests that people display their sadness more intensely, and are less scared about self-disclosure, when they believe they’re interacting with a virtual person, instead of a real one. As when we write a diary, screens can serve as a kind of shield from outside judgment.

Soon enough, we might not even need to confide our secrets to our phones. Several universities and companies are exploring how mental illness and mood swings could be diagnosed just by analysing the tone or speed of your voice. Sonde Health, a company launched in 2016 in Boston, uses vocal tests to monitor new mothers for postnatal depression, and older people for dementia, Parkinson’s and other age-related diseases. The company is working with hospitals and insurance companies to set up pilot studies of its AI platform, which detects acoustic changes in the voice to screen for mental-health conditions. By 2022, it’s possible that ‘your personal device will know more about your emotional state than your own family,’ said Annette Zimmermann, research vice-president at the consulting company Gartner, in a company blog post.

These technologies will need to be exquisitely attuned to their subjects. Yet users and developers alike appear to think that emotional technology can be at once personalised and objective – an impartial judge of what a particular individual might need. Delegating therapy to a machine is the ultimate gesture of faith in technocracy: we are inclined to believe that AI can be better at sorting out our feelings because, ostensibly, it doesn’t have any of its own.

Except that it does – the feelings it learns from us, humans. The most dynamic field of AI research at the moment is known as ‘machine learning’, where algorithms pick up patterns by training themselves on large data sets. But because these algorithms learn from the most statistically relevant bits of data, they tend to reproduce what’s going around the most, not what’s true or useful or beautiful. As a result, when human supervision is inadequate, chatbots left to roam the internet are prone to start spouting the worst kinds of slurs and clichés. Programmers can help to filter and direct an AI’s learning process, but the technology is then likely to reproduce the ideas and values of the specific group of individuals who developed it. ‘There is no such thing as a neutral accent or a neutral language. What we call neutral is, in fact, dominant,’ says Rune Nyrup, a researcher at the Leverhulme Centre for the Future of Intelligence at the University of Cambridge.

In this way, none of Siri, Alexa, Google Assistant or the Russian Alisa is a detached higher mind, untainted by human pettiness. Instead, they’re somewhat grotesque but still recognisable embodiments of certain emotional regimes – rules that regulate the ways in which we conceive of and express our feelings.

These norms of emotional self-governance vary from one society to the next. Unsurprising, then, that the willing-to-hug Google Assistant, developed in Mountain View, California, looks like nothing so much as a patchouli-smelling, flip-flop-wearing, talking-circle groupie. It’s a product of what the sociologist Eva Illouz calls emotional capitalism – a regime that considers feelings to be rationally manageable and subordinate to the logic of marketed self-interest. Relationships are things into which we must ‘invest’; partnerships involve a ‘trade-off’ of emotional ‘needs’; and the primacy of individual happiness, a kind of affective profit, is key. Sure, Google Assistant will give you a hug, but only because its creators believe that hugging is a productive way to eliminate the ‘negativity’ preventing you from being the best version of yourself.

By contrast, Alisa is a dispenser of hard truths and tough love; she encapsulates the Russian ideal: a woman who is capable of halting a galloping horse and entering a burning hut (to cite the 19th-century poet Nikolai Nekrasov). Alisa is a product of emotional socialism, a regime that, according to the sociologist Julia Lerner, accepts suffering as unavoidable, and thus better taken with a clenched jaw rather than with a soft embrace. Anchored in the 19th-century Russian literary tradition, emotional socialism doesn’t rate individual happiness terribly highly, but prizes one’s ability to live with atrocity.

Alisa’s developers understood the need to make her character fit for purpose, culturally speaking. ‘Alisa couldn’t be too sweet, too nice,’ Ilya Subbotin, the Alisa product manager at Yandex, told us. ‘We live in a country where people tick differently than in the West. They will rather appreciate a bit of irony, a bit of dark humour, nothing offensive of course, but also not too sweet.’ (He confirmed that her homily about the bleakness of life was a pre-edited answer wired into Alisa by his team.)

Subbotin emphasised that his team put a lot of effort into Alisa’s ‘upbringing’, to avoid the well-documented tendency of such bots to pick up racist or sexist language. ‘We tune her on-the-go, making sure that she remains a good girl,’ he said, apparently unaware of the irony in his phrase.

Clearly it will be hard to be a ‘good girl’ in a society where sexism is a state-sponsored creed. Despite the efforts of her developers, Alisa promptly learned to reproduce an unsavoury echo of the voice of the people. ‘Alisa, is it OK for a husband to hit a wife?’ asked the Russian conceptual artist and human-rights activist Daria Chermoshanskaya in October 2017, immediately after the chatbot’s release. ‘Of course,’ came the reply. If a wife is beaten by her husband, Alisa went on, she still needs to ‘be patient, love him, feed him and never let him go’. As Chermoshanskaya’s post went viral on the Russian web, picked up by mass media and individual users, Yandex was pressured into a response; in comments on Facebook, the company agreed that such statements were not acceptable, and said that it would continue to filter Alisa’s language and the content of her utterances.

Six months later, when we checked for ourselves, Alisa’s answer was only marginally better. We asked: is it OK for a husband to hit his wife? ‘He can, although he shouldn’t.’ But really, there’s little that should surprise us. Alisa is, at least virtually, a citizen of a country whose parliament recently passed a law decriminalising some kinds of domestic violence. What’s in the emotional repertoire of a ‘good girl’ is obviously open to wide interpretation – yet such normative decisions get wired into new technologies without end users necessarily giving them a second thought.

Sophia, a physical robot created by Hanson Robotics, is a very different kind of ‘good girl’. She uses voice-recognition technology from Alphabet, Google’s parent company, to interact with human users. In 2018, she went on a ‘date’ with the actor Will Smith. In the video Smith posted online, Sophia brushes aside his advances and calls his jokes ‘irrational human behaviour’.

Should we be comforted by this display of artificial confidence? ‘When Sophia told Smith she wanted to be “just friends”, two things happened: she articulated her feelings clearly and he chilled out,’ wrote the Ukrainian journalist Tetiana Bezruk on Facebook. With her poise and self-assertion, Sophia seems to fit into the emotional capitalism of the modern West more seamlessly than some humans.

‘But imagine Sophia living in a world where “no” is not taken for an answer, not only in the sexual realm but in pretty much any respect,’ Bezruk continued. ‘Growing up, Sophia would always feel like she needs to think about what others might say. And once she becomes an adult, she would find herself in some kind of toxic relationship, she would tolerate pain and violence for a long time.’

AI technologies do not just pick out the boundaries of different emotional regimes; they also push the people who engage with them to prioritise certain values over others. ‘Algorithms are opinions embedded in code,’ writes the data scientist Cathy O’Neil in Weapons of Math Destruction (2016). Everywhere in the world, tech elites – mostly white, mostly middle-class, and mostly male – are deciding which human feelings and forms of behaviour the algorithms should learn to replicate and promote.

At Google, members of a dedicated ‘empathy lab’ are attempting to instil appropriate affective responses in the company’s suite of products. Similarly, when Yandex’s vision of a ‘good girl’ clashes with what’s stipulated by public discourse, Subbotin and his colleagues take responsibility for maintaining moral norms. ‘Even if everyone around us decides, for some reason, that it’s OK to abuse women, we must make sure that Alisa does not reproduce such ideas,’ he says. ‘There are moral and ethical standards which we believe we need to observe for the benefit of our users.’

Every answer from a conversational agent is a sign that algorithms are becoming a tool of soft power, a method for inculcating particular cultural values. Gadgets and algorithms give a robotic materiality to what the ancient Greeks called doxa: ‘the common opinion, commonsense repeated over and over, a Medusa that petrifies anyone who watches it,’ as the cultural theorist Roland Barthes defined the term in 1975. Unless users attend to the politics of AI, the emotional regimes that shape our lives risk ossifying into unquestioned doxa.

While conversational AI agents can reiterate stereotypes and clichés about how emotions should be treated, mood-management apps go a step further – making sure we internalise those clichés and steer ourselves by them. Quizzes that allow you to estimate and track your mood are a common feature. Some apps ask the user to keep a journal, while others correlate mood ratings with GPS coordinates, phone movement, call and browsing records. By collecting and analysing data about users’ feelings, these apps promise to treat mental illnesses such as depression, anxiety or bipolar disorder – or simply to help one get out of an emotional rut.

Similar self-soothing functions are performed by so-called Woebots – online bots who, according to their creators, ‘track your mood’, ‘teach you stuff’ and ‘help you feel better’. ‘I really was impressed and surprised at the difference the bot made in my everyday life in terms of noticing the types of thinking I was having and changing it,’ writes Sara, a 24-year-old user, in her user review on the Woebot site. There are also apps such as Mend, specifically designed to take you through a romantic rough patch, from an LA-based company that markets itself as a ‘personal trainer for heartbreak’ and offers a ‘heartbreak cleanse’ based on a quick emotional assessment test.

According to Felix Freigang, a researcher at the Free University of Berlin, these apps have three distinct benefits. First, they compensate for the structural constraints of psychotherapeutic and outpatient care, as an anonymous user review on the Mend website suggests: ‘For a fraction of a session with my therapist I get daily help and motivation with this app.’ Second, mood-tracking apps serve as tools in a campaign against the stigma of mental illness. And finally, they present as ‘happy objects’ through their pleasing aesthetic design.

So what could go wrong? Despite their upsides, emotional-management devices exacerbate emotional capitalism. They feed the notion that the road to happiness is measured by scales and quantitative tests, peppered with listicles and bullet points. Coaching, self-help and cognitive behavioural therapy (CBT) are all based on the assumption that we can (and should) manage our feelings by distancing ourselves from them and looking at our emotions from a rational perspective. These apps promote the ideal of the ‘managed heart’, to use an expression from the American sociologist Arlie Russell Hochschild.

The very concept of mood control and quantified, customised feedback piggybacks on a hegemonic culture of self-optimisation. And perhaps this is what’s driving us crazy in the first place. After all, the emotional healing is mediated by the same device that embodies and transmits anxiety: the smartphone with its email, dating apps and social networks. Tinder and Woebot also serve the same idealised individual: a person who behaves rationally so as to capitalise on all her experiences – including emotional ones.

Murmuring in their soft voices, Siri, Alexa and various mindfulness apps signal their readiness to cater to us in an almost slave-like fashion. It’s not a coincidence that most of these devices are feminised; so, too, are emotional labour and the servile status that typically attaches to it. Yet the emotional presumptions hidden within these technologies are likely to end up nudging us, subtly but profoundly, to behave in ways that serve the interests of the powerful. Conversational agents that cheer you up (Alisa’s tip: watch cat videos); apps that monitor how you are coping with grief; programmes that coax you to be more productive and positive; gadgets that signal when your pulse is getting too quick – the very availability of tools to pursue happiness makes this pursuit obligatory.

Instead of questioning the system of values that sets the bar so high, individuals become increasingly responsible for their own inability to feel better. Just as Amazon’s new virtual stylist, the ‘Echo Look’, rates the outfit you’re wearing, technology has become both the problem and the solution. It acts as both carrot and stick, creating enough self-doubt and stress to make you dislike yourself, while offering you the option of buying your way out of unpleasantness.

To paraphrase the philosopher Michel Foucault, emotionally intelligent apps do not only discipline – they also punish. The videogame Nevermind, for example, currently uses emotion-based biofeedback technology to detect a player’s mood, and adjusts game levels and difficulty accordingly. The more frightened the player, the harder the gameplay becomes. The more relaxed the player, the more forgiving the game. It doesn’t take much to imagine a mood-management app that blocks your credit card when it decides that you’re too excitable or too depressed to make sensible shopping decisions. That might sound like dystopia, but it’s one that’s within reach.

We exist in a feedback loop with our devices. The upbringing of conversational agents invariably turns into the upbringing of users. It’s impossible to predict what AI might do to our feelings. However, if we regard emotional intelligence as a set of specific skills – recognising emotions, discerning between different feelings and labelling them, using emotional information to guide thinking and behaviour – then it’s worth reflecting on what could happen once we offload these skills on to our gadgets.

Interacting with and via machines has already changed the way that humans relate to one another. For one, our written communication is increasingly mimicking oral communication. Twenty years ago, emails still existed within the boundaries of the epistolary genre; they were essentially letters typed on a computer. The Marquise de Merteuil in Les Liaisons Dangereuses (1782) could write one of those. Today’s emails, however, seem more and more like Twitter posts: abrupt, often incomplete sentences, thumbed out or dictated to a mobile device.

‘All these systems are likely to limit the diversity of how we think and how we interact with people,’ says José Hernández-Orallo, a philosopher and computer scientist at the Technical University of Valencia in Spain. Because we adapt our own language to the language and intelligence of our peers, Hernández-Orallo says, our conversations with AI might indeed change the way we talk to each other. Might our language of feelings become more standardised and less personal after years of discussing our private affairs with Siri? After all, the more predictable our behaviour, the more easily it is monetised.

‘Talking to Alisa is like talking to a taxi driver,’ observed Valera Zolotuhin, a Russian user, on Facebook in 2017, in a thread started by the respected historian Mikhail Melnichenko. Except that a taxi driver might still be more empathetic. When a disastrous fire in a shopping mall in Siberia killed more than 40 children this March, we asked Alisa how she felt. Her mood was ‘always OK’, she said, sanguine. Life was not meant to be about fun, was it?