The geological epoch we currently inhabit – the ‘wholly recent’ period since the last ice age – was first mooted in 1833 by the English geologist Charles Lyell to encompass the time during which Earth had been ‘tenanted by man’. French paleontologist Paul Gervais dubbed it the Holocene in 1867, a name which was formalised at the Third International Geological Congress in Bologna in 1885. But it would be another 84 years before the term was endorsed by the US Commission on Stratigraphic Nomenclature in 1969, and only in 2009 was the Holocene awarded its very own Global Boundary Stratotype Section and Point (GSSP) – or ‘golden spike’ – located in an ice core 1,492 metres below the surface of Greenland.

The science, and the bureaucracy, of geology takes after its subject matter in the enormous stretches of time required (176 years for the Holocene) for ideas to bed down in the discipline’s conceptual sediment and then harden into universally accepted facts. And that hardening is represented by the GSSP.

A valedictory piece of metal, the ‘golden spike’ is awarded by the International Commission on Stratigraphy. It is ceremoniously driven into a rock face (or ice core) between two types of strata and, by extrapolation, two different epochs. Since the system was introduced in the 1970s, a golden spike has become the holy grail of stratigraphy. It can take decades to find a suitable spot on the planet in which to plant it, one where the change in the rock is irrefutable.

The next marker in rock-time, but one yet to earn its golden spike, is the Anthropocene – aka us. As a proposition, the Anthropocene is so compelling (featuring on the covers of The Economist, The Guardian, Le Monde and Der Spiegel), and apparently so urgent (can a word save the world?) that it is forcing the geological hierarchy to speed up its glacial procedures. We simply can’t wait to declare a new epoch.

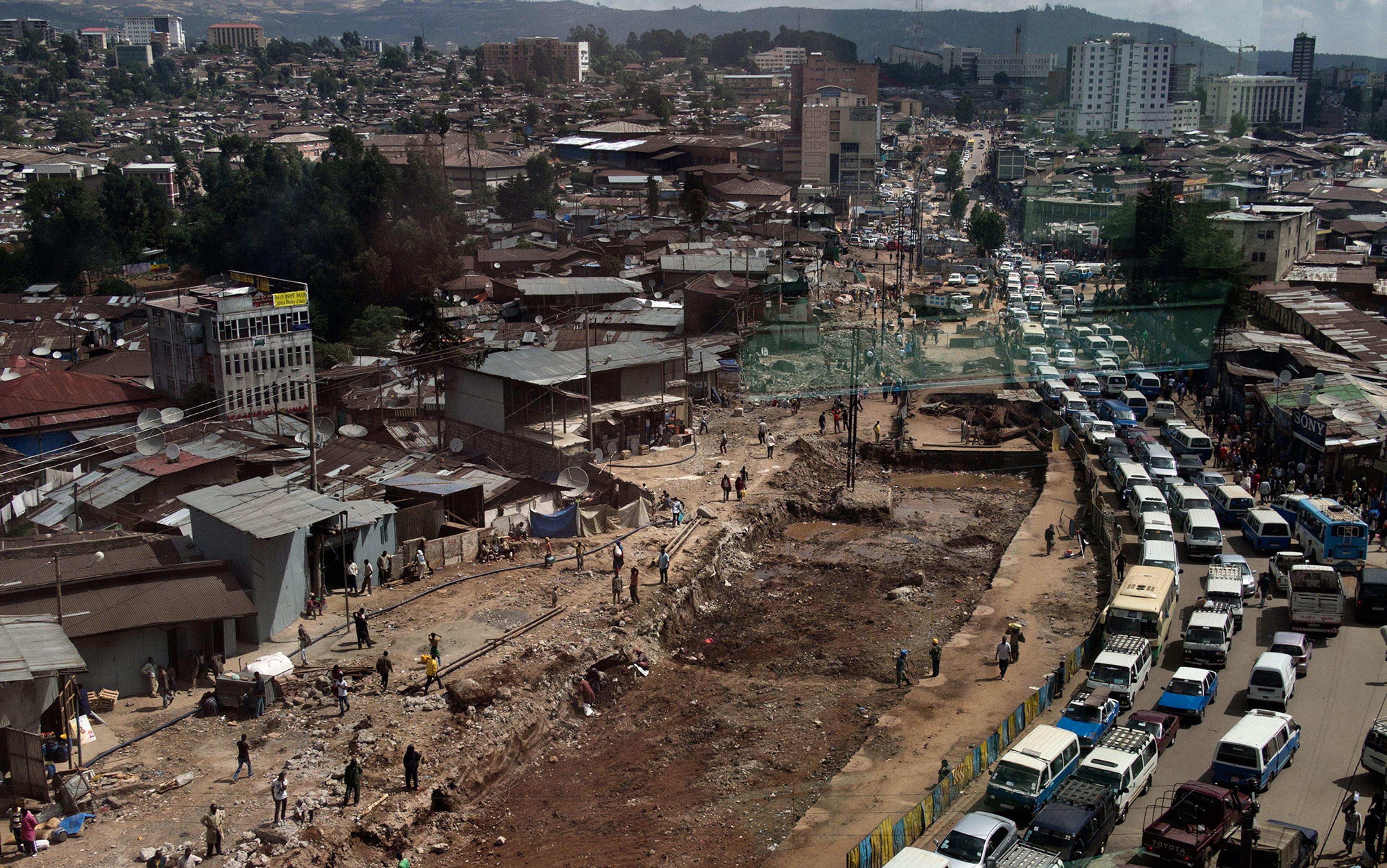

Conceived in its current form by the Dutch chemist and Nobel Prize-winner Paul Crutzen and the American biologist Eugene F Stoermer in 2000, and developed by Crutzen and colleagues in a landmark 2007 paper, the Anthropocene is a neologism that attempts to pin down a lot of free-floating anxiety about climate change and the myriad ways that Homo sapiens are making over the planet in our own image. It styles humans as a geological force, as powerful as any ‘natural’ one (we move more material than all natural processes combined, including river and ocean sedimentation), and acknowledges that we have re-engineered all the Earth systems, notably the carbon dioxide and nitrogen cycles, leaving a poisonous crust for far-future civilisations to excavate and ponder.

At the same moment that the Holocene’s golden spike was driven into Greenland’s ice-core in 2009, the Anthropocene Working Group (AWG) – consisting of a few dozen geologists and other interested parties – began searching for the next spike, to identify the point when humans became a global geological force. Never mind that the most acute symptoms of the Anthropocene kicked in only around 70 years ago – less than a blink in geological terms – the AWG is going all-out in its global search, from the desert of New Mexico to glacial lakes in Norway, to establish a stratigraphic basis for the human epoch. A mad scramble is on.

This year so far three candidate start dates have been proposed for the Anthropocene. Originating in different scholarly papers, yet widely touted by the media, these are: 16 July 1945 (the first nuclear explosion, proposed by the AWG itself); a much earlier date of 1610 (marked by a dip in CO2 that can be detected in an ice core in Antarctica, and representing the time that forests overtook agricultural land after 50 million people in the Americas perished with the arrival of Europeans); and even ca.100,000 years ago, by which time a thick ‘carpet’ of stone tools had accumulated on an escarpment in Libya, evidence of massive scale lithic industry by early hominids that changed the landscape seemingly forever. This last will have trouble making the grade: an anthropogenic effect tied to one locale is very different to an epoch-defining global marker.

What constitutes a stratigraphic boundary is primarily the ‘sudden’ appearance or disappearance of a particular type of fossil: the Carboniferous period, for example, is characterised by the appearance of coal 360 million years ago. The difficulty for geologists is that there is very little uniformity in fossil layers in the rock. Even less consistent is evidence for establishing – through radiometric dating – uniform time boundaries between the layers. This is because change is usually diachronous, unfolding at different moments and at different rates in different places.

With the Anthropocene, the typical predicament that geologists face is inverted: they have crystal-clear time divisions for recent events – thanks to the meticulous documentation of recent human history – but very little actual evidence, yet, in the rock. And rocks have their own sense of time, different to calendar time. The challenge of stratigraphy, or more precisely chrono-stratigraphy, is to marry the two together.

While every discovery of a new boundary makes the existence of our species look puny – compared with the millions of years in which coal was laid down during the Carboniferous period, for example – the Anthropocene puts us front and centre. And while epochs and periods are usually defined with rough boundaries of thousands or millions of years, with the Anthropocene we think we can define it down to the second.

In the Principles of Geology (1833), Charles Lyell set out his dictum that ‘The present is the key to the past’ – a then-controversial notion that geological processes operate in the same way now as they did in deep time. The implication was that one could draw conclusions about preceding epochs by examining the current one. Lyell thus changed the way we think about the deep past and about ourselves by forging a geological link between the two.

Now advocates of the Anthropocene are forcing an acceleration of Lyell’s dictum that is notably anthropocenic (a great new adjective offered by the Anthropocene for impatient, inflationary, domineering, unconscious behaviour). We are now recalibrating ‘The present is the key to the past’ to read: ‘The future is the key to the present.’ The Anthropocene posits a worldview in which humans are not just relevant but entirely responsible for the fate of the planet. There’s a sense of epic impatience about the Anthropocene: we want the potential disasters of the future to be visible now. And we want the right to name that future now, even before the wreckage of human history piling up at our feet has made its way into the permanence of bedrock.

For a potentially epoch-making event, the press conference after the AWG’s first face-to-face meeting in Berlin in October was muted, and sparsely attended. And yet Jan Zalasiewicz, a paleobiologist at the University of Leicester and chairman of the AWG, had important news to impart. He reported a growing feeling within the group that a strong case for formalising the Anthropocene can be made when the AWG submits its report to the International Commission on Stratigraphy in 2016.

The AWG will recommend a start date for the Anthropocene in the early 1950s (relegating many of our parents and grandparents to an entirely different epoch). Why then? Well, the flurry of post-war thermonuclear test explosions left a radionuclide signature that has spread across the entire planet in the form of carbon-14 and plutonium-239, the latter with a half-life of 24,110 years. The early 1950s also coincides with the beginning of the Great Acceleration in the second half of the 20th century, a period of unprecedented economic and population growth with matching surges – charted by Will Steffen and colleagues in Global Change and the Earth System (2004) – in every aspect of planetary dominance, from the damming of rivers to fertiliser production, to ozone depletion.

The Anthropocene’s advocates have a huge buffet of evidence that human activity amounts to an almost total domination of the planet – one of the latest being new maps that show the extent to which the United States has been paved over. But their problem in terms of formalisation on the Geological Time Scale is that the Earth has only just begun to digest this deadly feast through the pedosphere (the outermost layer) and into the lithosphere (the crust beneath it). The challenge is to convince geologists accustomed to digging much further back in time that the evidence accumulating now will be significant, stratigraphically speaking, deep into the future. Geologists are being asked to become prophets.

The AWG has no power to write its convictions into the Geological Time Scale. All it can do is recommend. Like freshly scattered fly ash from a coal power plant, the AWG and its findings are but a thin layer sprinkled on top of seriously heavy academic strata: the Subcommission on Quaternary Stratigraphy; the International Commission on Stratigraphy; and the International Union of Geological Sciences as the bedrock and final arbiter. Each layer of the scholarly hierarchy is more traditional than the last, and harder to convince that it should pass judgment on processes that are still unfolding.

The historian of science Naomi Oreskes of Harvard delivered an evening lecture at the AWG meeting in Berlin, demonstrating how geologists are indoctrinated to believe that humans can only ever be insignificant, geologically speaking. She stretched her arm out and recounted how she was taught that human existence, on the arm-scale of the planet’s history, represents only what would be shaved off a fingernail by a nail file. With the notion of the Anthropocene, she said, we are reversing Copernican wisdom: he displaced humans from the centre of the universe, now we’re trying to put them back.

No wonder there is resistance from the discipline forced to the centre of the debate. In the article ‘Is the Anthropocene an Issue of Stratigraphy or Pop Culture?’ (2012), the stratigraphers Whitney Autin at the State University of New York and John Holbrook of Texas Christian University described themselves as ‘taken aback’ by claims that there is already sufficient evidence to formalise the Anthropocene. ‘Science and society have much to gain from a clear understanding of how humans drive Earth-system processes instead of conducting an esoteric debate about stratigraphic nomenclature,’ they wrote, noting that ‘the discipline of stratigraphy may also have a reputation to protect.’

The Anthropocene challenges the basis of stratigraphy, and classical practitioners will naturally try to defend it when the media wreaks havoc on their carefully constructed chrono-stratigraphic system. Yet stratigraphers are record-hunters, and it isn’t their job to make predictions. The job of classifying this epoch should fall to the geologists of the year 3000 or 4000.

In an interview at the end of the Berlin conference, a clearly exhausted Zalasiewicz reiterated the plentiful evidence for the Anthropocene, but admitted: ‘We haven’t translated those gigatonnes of concrete and those altered sediment pathways, the dams and so on, into their vertical stratigraphic equivalent. And that’s simply because the science is so young. We work on a shoestring – we have no funding.’

One senses that Zalasiewicz is slightly frustrated by the need – albeit self-imposed – to search for a golden spike, and also by critics’ attention towards it. In a freer moment, he has written that: ‘The Anthropocene is not about being able to detect human influence in stratigraphy, but reflects a change in the Earth system (of which the most important and long-lasting is the change to the biological system).’ But the AWG’s decision to pursue formalisation nevertheless means that the group now has to jump through the hoops of the geological hierarchy. A stratigraphic boundary of some sort has to be found, like it or not.

The quest for a golden spike is always difficult, Zalasiewicz said, but not always necessary in order to have a working geological term. ‘There are very few chronostratigraphic boundaries that are absolutely clear, unambiguous and widely traceable, and I’ve wrestled with enough in my time.’ In a 2013 paper, Zalasiewicz noted that the shift from the Precambrian to the Cambrian about 540 million years ago, although an ‘explosion’ when viewed from today, ‘comprised in reality a succession of preserved innovations over some 40 million years, of which just one, the inception of Treptichnus-type burrowing, was selected to represent the formal stratigraphic boundary.’

The hunt for the golden spike thus puts anthropocenists in a paradoxically weak position: the signal that qualifies will likely be something that seems puny – like the invisible traces of radionuclides – compared with the scale of anthropogenic effects playing out above ground right now, like super storms and extinctions caused by invasive species.

The mismatch will be even worse, rhetorically, if the AWG’s proposal for formalisation is rejected, giving fodder for a new type of sceptic: the Anthropocene-denier, probably born before 1950, and with an unshakeable and now apparently confirmed belief that the planet is untameable and inexhaustible. In rushing to declare a new geological epoch, anthropocenists are not only being pre-emptive (and anthropocentric), they are, in a way, not being radical enough. Geology doesn’t even begin to express the human, biological, chemical and moral significance of an exhausted planet. Yet it is being put on a pedestal and asked to arbitrate on our behalf.

When Zalasiewicz submits the AWG’s report it will land on the desk of Stan Finney, a geologist at California State University at Long Beach, current chair of the International Commission on Stratigraphy, and one of the biggest Anthropocene sceptics. Finney doesn’t deny that human activity is impacting the planet at an unprecedented scale and speed. His major issue is a logical fallacy in the pursuit of geological formalisation. ‘In the Holocene we have all the human records, so we don’t need to use the stratigraphic record,’ Finney says. ‘Why use an interpretive time scale when you have direct observations? We don’t know if the buildings and excavations that are relics of humans will leave traces in sediments, but we do have exact numbers and dates for when they were laid down.’

Finney and other geologists are baffled by the category error of using stratigraphy as an authority on human activities over the past 70 years, today, and into the future. Why, they wonder, are we looking for evidence in low‑resolution – subtle signals buried underground – when we already have super‑high-definition effects playing out right in front of our face? Why the obsession with rocks, when we have historical records, data on hard drives and CDs?

The physicist Irka Hajdas, from the department of Ion Beam Physics at ETH Zurich (the Swiss Federal Institute of Technology) was brought into the AWG for her expertise with radiocarbon dating, but also for her scepticism. While radionuclides from thermonuclear testing are a clear potential golden spike for the Anthropocene, Hajdas points out that: ‘When we see them, geologists already use the phrase “anthropogenic effect”. And, in a way, that’s enough. We don’t need to see them as a geological boundary.’ And we still don’t know if radionuclides will be the biggest anthropogenic input into the strata. ‘We could say: now we’ve changed the world, let’s put the boundary here. But maybe an even more significant change might come.’

If there were radical pre-Neolithic stratigraphers, they might have been eager to declare the start of the Holocene 14,500 years ago when the planet started to warm up. But, Hajdas asks, what would they have done when the Younger Dryas cooling event came along immediately afterwards, flipping the climate back to freezing? It would take another 2,800 years for the interglacial stability of the Holocene to really kick in – at which point they would have needed to declare another new epoch.

Stratigraphic evidence for the Anthropocene is nevertheless being unearthed slowly. In a 2013 paper in Earth-Science Reviews, Alexander Wolfe of the University of Alberta and colleagues describe pulling ‘contaminated’ sediment from glacial lake beds in such wide-ranging and apparently pristine locations as Beartooth Wilderness in Montana, Baffin Island in Canada, in West Greenland, and on Svalbard in Norway. One of these glacial lakes might be the spot where we can definitively say that the Anthropocene is leaving its dirty fingerprints, forever.

In sediment cores, Wolfe found distinct changes in the composition of deposits (due to retreating glaciers), together with increases in nitrogen levels (from global artificial fertiliser production) and increases in fossilised diatoms (microscopic plankton that thrive in warmer temperatures). These changes appeared or accelerated after 1950. Thus Wolfe claims to have found the holy grail: a golden spike marking the lower boundary between the Holocene and the Anthropocene.

Though disturbingly revealing of humanity’s global impact, Wolfe’s conclusions are still largely a claim on the deep-time future significance, endurance and legibility of extremely recent, fragile evidence that could easily be obliterated or superseded by something else. The Anthropocene in this sense is still disciplinarily incorrect: formalisation doesn’t fit within the current criteria of the Geological Time Scale, nor with the traditional mechanisms of stratigraphy. Something big would have to shift in the discipline for the Anthropocene to be formalised.

Whatever happens after the AWG submits its recommendation in 2016, anthropocenists are, ironically, selling their theory short by seeking a place on something as esoteric as the Geological Time Scale. The Anthropocene, in all its multi-faceted, Earth system-altering horror, is more serious than that. The hope of course is that if we can name a new epoch after us then it will finally be a truth universally acknowledged that humans have more power than they know how to handle, and we will be able to start picking up the pieces.

But we already know that ‘knowing’ isn’t enough to address, for example, climate change, which is at least a single issue, whereas the Anthropocene is an idea that encompasses potentially everything omnipresent about humans today. Why confine it to rocks? The anthropocene (let’s use lower case now) is a deeply material state, but it’s also an intangible, even existential one. While the world is scraped away, it is simultaneously cloaked in a new digital layer of sensing technology and data-gathering. There is no mystery left. Everything is known or knowable in the anthropocene, except the impulses that got us here and where to go next as a species.

Behind the quest for the golden spike is a more fundamental, linguistic quest. In Ecology Without Nature (2007), Timothy Morton notes: ‘The idea that a view can change the world is deeply rooted in the Romantic period, as is the notion of worldview itself (Weltanschauung).’ We still wish that a word will save the world – even if we baulk at the idea of harbouring Romantic inclinations today.