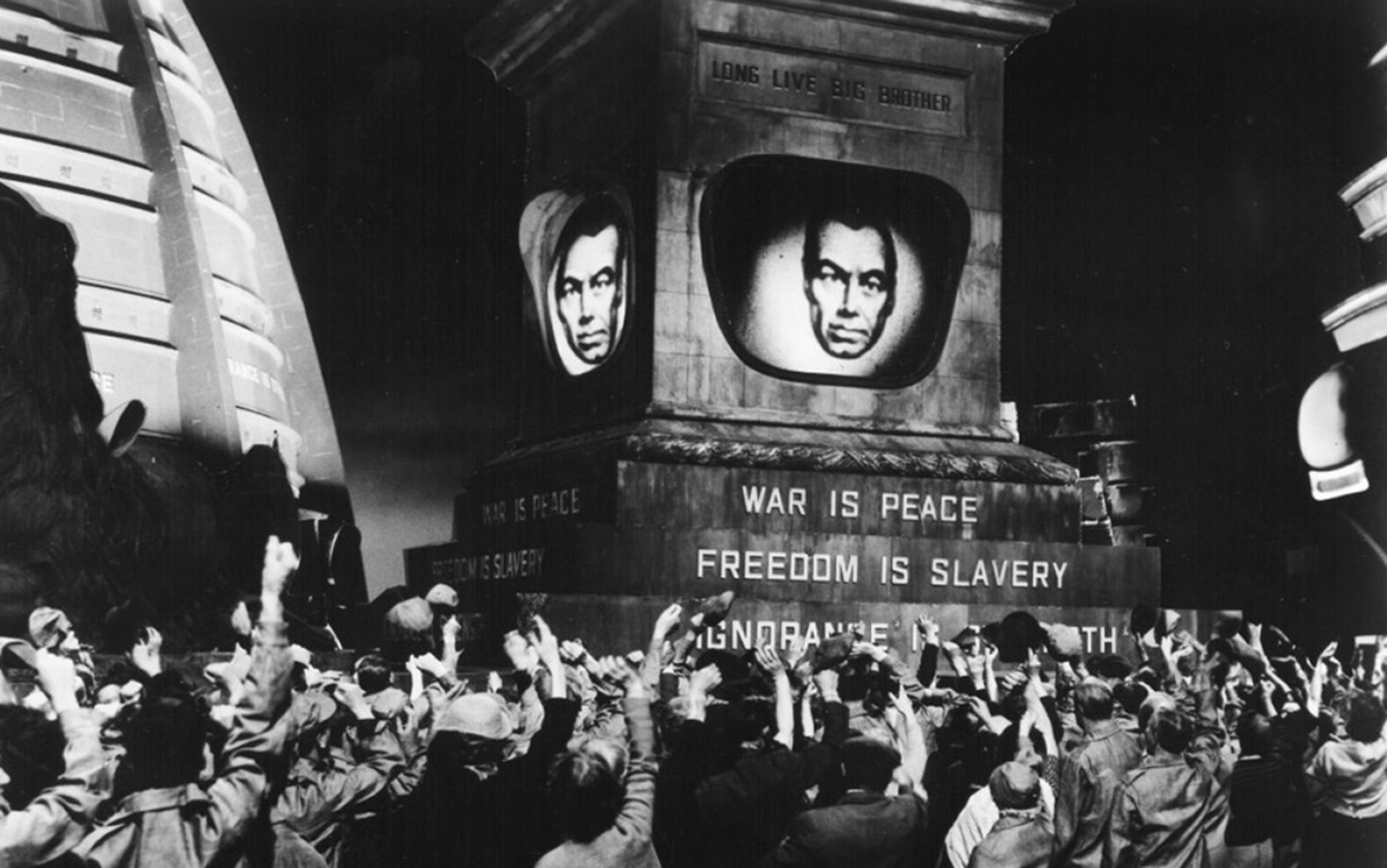

Everyone remembers Newspeak, the straitjacketed version of English from George Orwell’s novel 1984 (1949). In that dystopia, Newspeak was a language designed by ideological technicians to make politically incorrect thoughts literally inexpressible. Fewer people know that Orwell also worried about the poverty of our ordinary, unregimented vocabulary. Too often, he believed, we lack the words to say exactly what we mean, and so we say something else, something in the general neighbourhood, usually a lot less nuanced than what we had in mind; for example, he observed that ‘all likes and dislikes, all aesthetic feeling, all notions of right and wrong… spring from feelings which are generally admitted to be subtler than words’. His solution was ‘to invent new words as deliberately as we would invent new parts for a motor-car engine’. This, he suggested in an essay titled ‘New Words’ (1940), might be the occupation of ‘several thousands of… people.’

Now, I don’t have anything against the invention of new words when it’s appropriate. But Orwell was badly mistaken, and not just for ignoring the fact that English already picks up new words on a daily basis. His reasons for wanting that extra expressive power are, uncharacteristically, poorly thought-out. Language just doesn’t work in the way that either 1984’s Ministry of Truth or the more benign bureau of verbal inventors in ‘New Words’ presumes. And understanding why that is will put us in a position to explain a lot of what goes under the heading of metaphysics.

Imagine you really did have a repertoire of concepts and names that allowed you to say exactly what you meant, pretty much whatever you noticed, or whatever occurred to you. Adrienne Lehrer, a linguist at the University of Arizona, wrote Wine and Conversation (2009), a book about wine vocabulary: ‘earthy’, ‘full-bodied’, ‘flowery’, ‘cloying’, ‘disciplined’, ‘mossy’, and so on. Many, many such adjectives turn up in wine commentary, though evidently not enough of them to live up to Orwell’s ideal. Imagine really having precise terms for all those flavour notes. Orwell was especially worried about capturing our inner lives, so imagine also having words for the day-to-day events that remind you of particular experiences that only you have undergone. Feeling a little swamped?

It’s not simply that your mind would be submerged in conceptual clutter; it’s not just that it wouldn’t be possible to learn most of these words, or to communicate with them. In fact, Lehrer found that people don’t manage to communicate very well with their wine vocabularies; if subjects are asked to pick a wine out of a lineup on the basis of someone else’s description of it, they mostly can’t do it. Presumably wine talk isn’t really about communication, but it’s also something of an exception.

For the most part, our repertoire of concepts and labels for individuals is important because we use it in our reasoning. Descriptions are useful in that we can draw conclusions from them. In the most basic case, you would use a rule: when certain conditions are met (for example, when you’re making the American chef Deborah Madison’s mashed potatoes and turnips), certain implications follow (an appropriate pairing would be a Sancerre in the summer, or a Cabernet Franc from the Loire in fall or winter). Your ever-so-precise mot juste might capture exactly what you see or feel but, if there’s no inference you can fit it to, then there’s nothing you can do with it. Descriptions that you can’t fold into your reasoning are useless.

The thing is, the useful ones don’t come easy. Back in the 18th century, the philosopher David Hume thought that we were mostly led from one belief to another by causes, and he thought we learned that As cause Bs by seeing lots of As followed by lots of Bs. That’s somewhat oversimplified, but it’ll do as a stand-in. It would mean that in order to add a causal connection to your intellectual repertoire, somebody or other would have to take the time to observe all those As and Bs. If we do this systematically, that’s science. (It’s not always science: if we do it with recipes, it’s kitchen testing.) Now, science takes time and money, and as the US Congress keeps reminding the National Science Foundation and NASA, there’s only so much to go around. The point generalises: those inferential connections are expensive, and we can afford only so many of them. So if you want to think effectively, you have to be selective. You have to confine your descriptions of the world around you, of your inner life and all the rest of it, to the descriptions that someone has invested in, doing what was needed to embed them in a network of usable inferential links.

Most of the time, you don’t have much of a say in where those investments are made, and so you end up with a descriptive vocabulary that doesn’t quite fit what you want to think about, or tell other people about. But, in those cases, the right thing to do is exactly what Orwell doesn’t like. Go ahead, use words that misdescribe whatever it is. Choose terms that let you draw conclusions. But (and maybe this would improve matters by his lights) be upfront about what you’re doing.

Tag what you said as pretty much true, or almost entirely true, or true enough, or technically true, or maybe just good enough for government work. Or use a hedge like the one from a few lines back: somewhat oversimplified, but it’ll do as a stand-in. Maybe Orwell was right that we need to invent more words. I do think we could use more qualifiers such as these.

Philosophers in the English-speaking world for the most part think of truth as an all-or-nothing matter. (Here’s a typical expression of this attitude, borrowed from Charles Travis’ Unshadowed Thought (2000): ‘“Approximately”, like “basically”, is a polite negation. If what I say is (only) approximately true, then it is, strictly speaking, false.’) I think otherwise. It’s actually more important to be able to register how something you said was partly true than it is to announce that something is simply true. (Most of the time when you do that, it’s repetition for emphasis.) We can’t avoid cutting intellectual corners, forcing our experience to fit our language and then squinting to see whether the conclusions we draw still stand. In other words, we’re in the business of using approximations and idealisations.

In that case, it’s natural to look for recipes – that is, routinised ways of generating those self-aware misdescriptions, preferably ones that you can apply in a great many cases. If you’ve taken an introductory physics class where the problem sets seemed to assume that the world was made of point masses and rigid bodies and frictionless planes, or if you took intro econ where markets were populated by perfectly rational and informed buyers and sellers, you’ve encountered recipes like that. But these aren’t just tricks of the trade in one scientific discipline or another. We have a great many recipes for misdescription that work similarly in everyday life. Often we’re so used to them that we don’t even notice how they get put to work.

Aristotle came up with a simplifying technique of this sort, one that has made its way into the standard intellectual toolkit that everybody picks up as a child. I’m sure you don’t need reminding that people can look very different from one another, and that this is doubly the case when they’re at different life stages. It would be very tricky to take account of all that variation; a nine-pound baby and a 270-pound football player have to be handled very differently on many occasions, for instance when you’re selling them plane tickets, and you’d think they might count differently. Shouldn’t the football player, who takes up two seats, count for two, and the infant on a lap for some fraction? But Aristotle’s descriptive recipe lets us say that if you’re a human being, you’re exactly one human. When I was born, I was one human, and when I was big enough to try out for the team, I was one human; if I gain a few pounds, I don’t clock in at 1.03 humans, and if I lose a few, I don’t become merely 0.94 of a person.

This is a good idea for all sorts of purposes. It makes our world much more straightforward, in that it lets us just count off people – but not just people: also cats and so on – without worrying about those extra pounds. It’s also metaphysics. We translate Aristotle’s word for these individual, countable beings as ‘substances’, and philosophers generally agree that Aristotle’s doctrine of substance is metaphysics. Maybe it’s even the paradigm of metaphysics. But I’m suggesting that most metaphysics, not just Aristotle’s, consists of such recipes, formulae for simplifying your descriptions and making your thinking more straightforward. Metaphysics is intellectual ergonomics.

It’s unusual to hear philosophers agree to that last part. In the main, they take their job as metaphysicians to be arguing over whether, say, Aristotelian substances really exist. They tie themselves up in knots worrying about what persons really are. But maybe that’s because personhood is an idealisation. The properly Orwellian question here is the same one we would ask about the idealised point masses of physics or the perfect markets of economic theory: should we stick with our Aristotelian Newspeak and use that to misdescribe our surroundings? Or is it getting in our way? Is our present situation a good occasion to modify it or replace it with something else?

Even if all that sounds pretty good, there’s a cost to acknowledge. Orwell went to fight in the Spanish Civil War, and came back deeply disturbed by how the press subordinated its reportage to one or another party line. And, he noticed, it wasn’t just the journalists and publishers. There were a great many alleged human rights violations, and almost everyone seemed to believe that one or another of them had taken place only if it suited their party’s tactical needs. Or rather, they only ‘believed’ it, because the moment it was convenient, those beliefs would turn on a dime – even when they concerned what were very clearly matters of fact, not decisions or policies. Orwell turned these observations into the basis for 1984’s doublethink.

Doublethink isn’t just something we have to cope with in politics. It is the price of metaphysics, and metaphysics – if what I’ve told you so far comes close enough to being right – is everywhere. So it seems we have a choice between two kinds of doublethink.

If you’re thinking with the help of useful idealisations such as these, then the world doesn’t really match your descriptions of it. Lose track of that fact, come to believe that your metaphysics is just true, and you’ll have to develop a selective blindness – in both your intellectual and your day-to-day lives – to what’s actually going on around you. It will be like the myopia of the left- and right-wingers of Orwell’s own day, or the perversely oblivious characters in 1984. The economist who still insists that people really are economically rational agents displays a familiar contemporary version of that blindness. But since we all have to get along in a world that doesn’t work quite as our idealised misdescriptions say it ought to, we will somehow manage both to see things and not to see them.

Getting by in this way runs the risk of looking (and maybe not just looking) dishonest. And with this dishonesty there might come a certain guilty pleasure. It’s a little reminiscent of the thrill of 1950s utopian sci-fi: imagining that you live in a much more orderly, understandable world than you really do.

The alternative to this kind of fantastical dogmatism is always to accompany what you announce with hedges. You see that a conclusion follows from your idealised description, and you remind yourself not to draw it on this occasion. You warn other people that what you just told them is only roughly true, and to watch out for the exceptions. You’re honest, but only because you’re always attaching those reminders that you don’t exactly mean whatever it was you just said.

I don’t think the choice is clean, and sometimes there are ways around it. For example, misdescriptions can be a bit like self-fulfilling prophecies. Emotional investment sometimes follows those inferential investments; as you get used to thinking and talking with other people using some set of crisply articulated concepts, your interest can gradually shift to the common ground. A good number of philosophers originally took up the subject because they had what some people call existential concerns – that is, they were worried about the meaning of life. But in the field, there hasn’t been a lot of research investment in that concept. The nearest thing is probably value theory, or maybe metaethics. And so, decades later, those philosophers often find that they care about issues in value theory and metaethics, and have entirely forgotten that they originally wanted to know about the meaning of life.

Perhaps that sounds a bit iffy. Haven’t those philosophers lost their way? Probably, but not everyone who lives through these shifts of interest necessarily has. Sometimes it really is important that what you reason about and what you care about are exactly the same things. And sometimes you can get them to match up by gradually changing what you care about. Going back to our earlier illustration, for certain purposes anyway, shouldn’t you care about, precisely, people, and not so much about the messy real-world things we all are, which are only approximately people?

I don’t think we can do without metaphysics, understood as the kind of intellectual ergonomics I’ve just described; the world is far too messy a place. And mostly we don’t want to quietly forget about the bits and pieces of the mess that matter to us. So we’re going to have to live with doublethink. The only question is, which kind?

I can feel the temptations of the first option. Wouldn’t it be so orderly to live in the British philosopher Timothy Williamson’s world, where the vague concepts all come with clean, sharp boundaries? (It’s just that we’ll never know where they are.) Wouldn’t it be reassuring to live in the world of the American philosophers W V O Quine and Donald Davidson, where people are never illogical, and are mostly right about most things? (If it seems otherwise, you must be mistranslating them.)

Maybe it’s just me, but I prefer honest doublethink, where you’re upfront with yourself and with other people about your misrepresentations. The other sort – where you tell yourself that the world is as you say, but then somehow simultaneously cope with and ignore the ways it isn’t – is probably the norm in many walks of life: it certainly is in the world of professional philosophy. But I don’t want, as Orwell put it, ‘to know and not to know, to be conscious of complete truthfulness while telling carefully constructed lies’ – no doubt in part for the same reasons that his readers were horrified by his descriptions of the cynical apparatchiks of 1984.