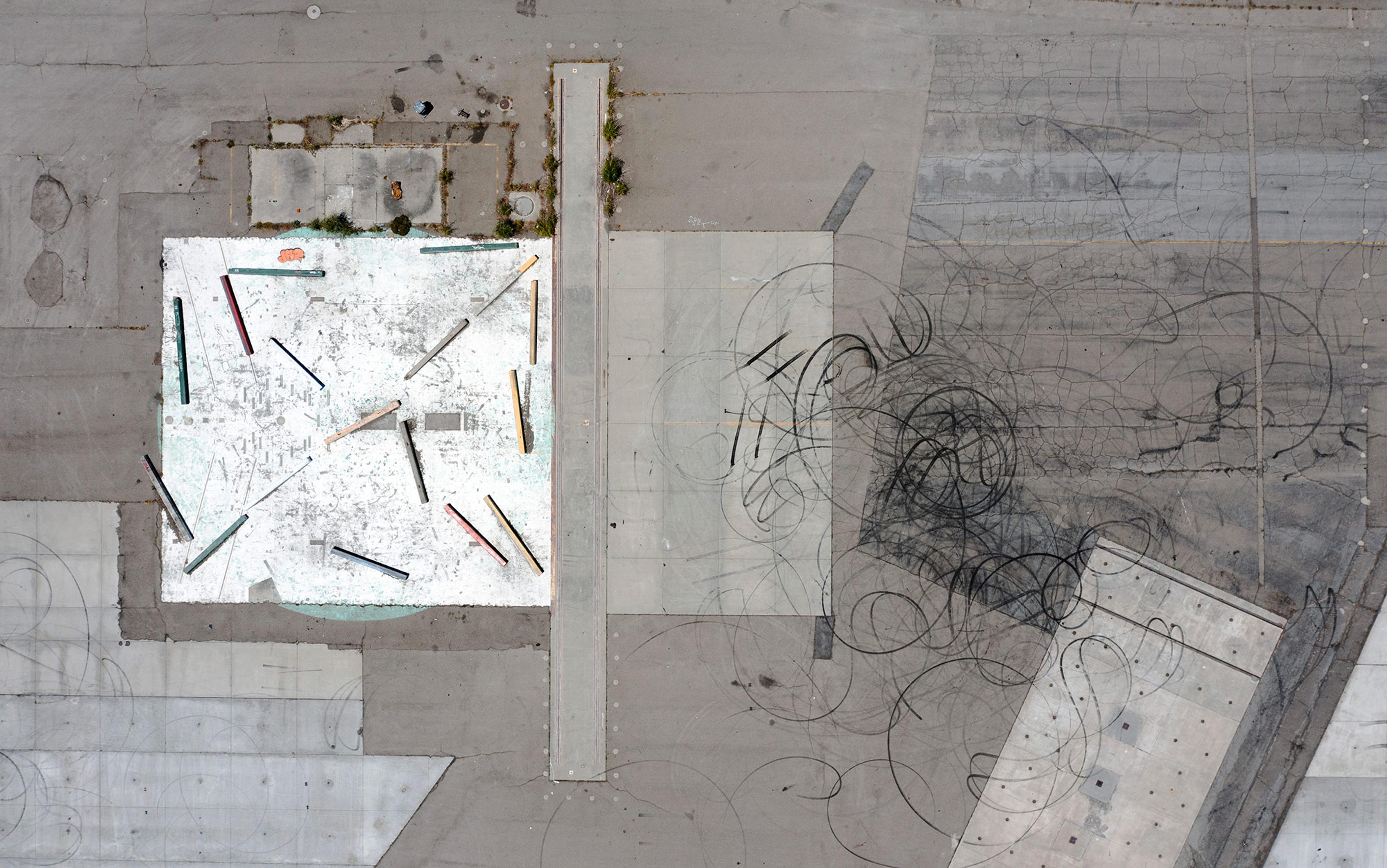

On good days, the world seems like a well-run railway: things happen according to principles, laws, rules and generalisations that we humans understand and can apply to particulars. We forgive the occasional late train as an exception that proves the rule. But at other times we experience the world as a multi-car pile-up on a highway. The same laws of physics and of governments apply, but there are so many moving parts that we can’t predict the next pile-up and we can’t explain the details of this one – ‘details’ that can let one car escape with a bent fender while another erupts in a fireball.

What’s true of a car pile-up is also true of an uneventful autumn walk down a path arrayed with just exactly those leaves and no others. They are both accidents in which interdependencies among uncountable particulars overwhelm the explanatory power of the rules that determine them. One outcome we curse. The other in a quiet moment we may marvel at.

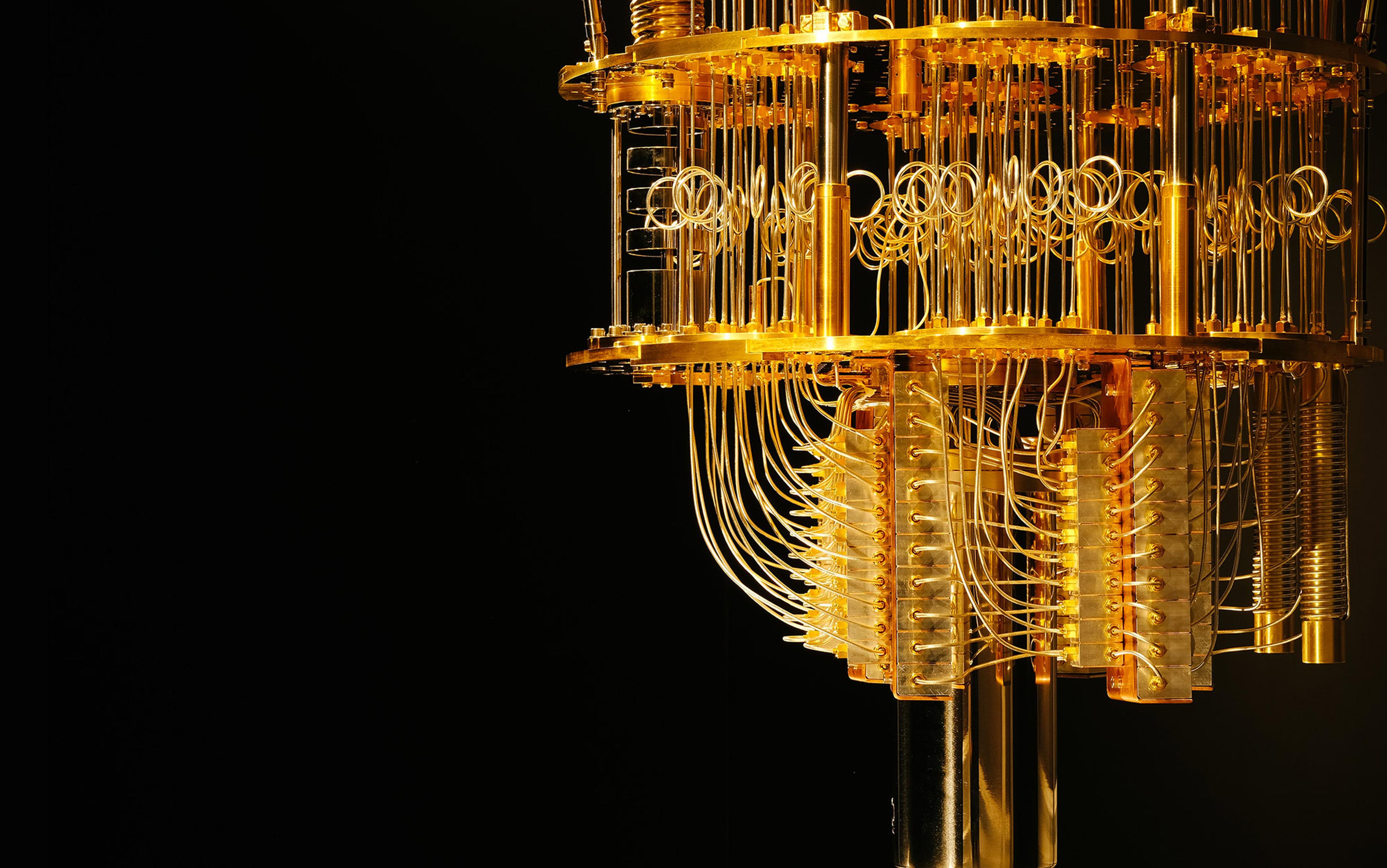

Now our latest paradigmatic technology, machine learning, may be revealing the everyday world as more accidental than rule-governed. If so, it will be because machine learning gains its epistemological power from its freedom from the sort of generalisations that we humans can understand or apply.

The opacity of machine learning systems raises serious concerns about their trustworthiness and their tendency towards bias. But the brute fact that they work could be bringing us to a new understanding and experience of what the world is and our role in it.

Machine learning works radically differently from traditional programming. Indeed, traditional programs are the apotheosis of the rule-based, railway understanding of our world and experience. Take a common example from the most iconic type of machine learning: software that recognises handwritten numbers. Traditionally, the programmer would instruct the computer that a ‘1’ is written as an upright straight line, an ‘8’ consists of a smaller circle on top of a larger one, and so on. That can work well, but because it relies on Platonic ideals of handwritten numbers, the program is going to misidentify a relatively high percentage of numerals written by mortal hands in this imperfect realm.
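
To make the traditional approach concrete, here is a minimal Python sketch. The five-row, three-column grid of ‘#’ (ink) and ‘.’ (paper) characters, and the rule inside the hypothetical `is_one` function, are invented for illustration and are not taken from any real recogniser. The point is only that a hand-written rule encodes an ideal ‘1’ and then rejects a perfectly legible ‘1’ that has a small flag at the top.

```python
# A minimal sketch of the rule-based approach, assuming tiny 5x3 images where
# '#' marks ink and '.' marks blank paper. The rule below is hypothetical.

def is_one(image: list[str]) -> bool:
    """Return True only if exactly one column is inked in every row and
    there is no ink anywhere else: the Platonic, perfectly upright '1'."""
    width = len(image[0])
    full_columns = [c for c in range(width) if all(row[c] == "#" for row in image)]
    total_ink = sum(row.count("#") for row in image)
    return len(full_columns) == 1 and total_ink == len(image)


ideal_one = [
    ".#.",
    ".#.",
    ".#.",
    ".#.",
    ".#.",
]

# A '1' as many people actually write it, with a small flag at the top.
mortal_one = [
    "##.",
    ".#.",
    ".#.",
    ".#.",
    ".#.",
]

print(is_one(ideal_one))   # True
print(is_one(mortal_one))  # False: the rule rejects a readable '1'
```

Every extra stroke a human hand adds has to be anticipated by another rule, which is why this style of program struggles with real handwriting.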

Machine learning models instead are programs that are written by programs that know how to learn from examples. To create a machine learning model to recognise handwritten numerals, the developers don’t tell the machine anything about what we humans know about the shapes of numerals. Instead, in one common approach, the developers give it images of thousands of examples of handwritten numbers, each different and each labelled correctly as the number it represents. The system then algorithmically discovers statistical relationships among the pixels that compose the images that share a label. A series of pixels in a somewhat vertical line adds statistical weight to the image being a ‘1’, diminishes the probability that it’s a ‘3’, and so on.
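
The learning approach described here can be sketched in a few lines. This hypothetical example swaps in one of the simplest statistical learners, a Bernoulli naive Bayes classifier, rather than the neural networks that real digit recognisers typically use; the tiny images and the `train` and `classify` functions are illustrative assumptions. Nothing in the code says what a ‘1’ or a ‘7’ looks like. It only counts how often each pixel is inked among the images that share a label, then scores new images against those counts.

```python
import math

# A minimal sketch, assuming tiny 5x3 images ('#' = ink) and a Bernoulli
# naive Bayes model. It illustrates learning per-pixel statistical weights
# from labelled examples; it is not how production recognisers are built.

TRAINING = {
    "1": [
        [".#.", ".#.", ".#.", ".#.", ".#."],
        ["##.", ".#.", ".#.", ".#.", ".#."],
        ["..#", ".#.", ".#.", ".#.", "#.."],
    ],
    "7": [
        ["###", "..#", ".#.", ".#.", ".#."],
        ["###", "..#", "..#", ".#.", "#.."],
        ["###", "..#", "..#", "..#", "..#"],
    ],
}


def pixels(image):
    """Flatten an image into a list of booleans (True = inked)."""
    return [ch == "#" for row in image for ch in row]


def train(examples_by_label):
    """For each label, estimate how often each pixel is inked,
    with add-one (Laplace) smoothing so no probability is 0 or 1."""
    model = {}
    for label, examples in examples_by_label.items():
        counts = [0] * len(pixels(examples[0]))
        for image in examples:
            for i, inked in enumerate(pixels(image)):
                counts[i] += inked
        model[label] = [(c + 1) / (len(examples) + 2) for c in counts]
    return model


def classify(model, image):
    """Return the label whose pixel statistics best explain the image.
    Class priors are omitted because both labels have equal example counts."""
    def log_likelihood(probs):
        return sum(
            math.log(p if inked else 1 - p)
            for p, inked in zip(probs, pixels(image))
        )
    return max(model, key=lambda label: log_likelihood(model[label]))


model = train(TRAINING)
print(classify(model, [".#.", ".#.", ".#.", ".#.", "##."]))  # 1
print(classify(model, ["###", "..#", ".#.", "#..", "#.."]))  # 7
```

After training, `model["1"][4]` is 0.8 while `model["7"][4]` is 0.2. The centre pixel of the second row, part of a roughly vertical stroke, has become evidence for ‘1’ and against ‘7’ without anyone telling the system so.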

In real-life machine learning applications, the number of possible answers can far exceed the 10 digits, the amount of data to be considered is truly vast, and the correlations among the data points are so complex that we humans often simply cannot understand them. For example, the human metabolism is an insanely complex set of interactions and interdependent effects. So, imagine that someone builds a machine learning system that is good at predicting how the human body will react to complex combinations of causes. DeepMetab, as we’ll call this model, becomes the place where doctors, researchers, lay people and hypochondriacs go to ask questions, to explore ideas, and to play ‘What if?’ with the human organism. DeepMetab becomes the most important source of knowledge about the human body, even if we cannot understand how it produces its output.

As we grow more and more reliant on machine learning models (MLMs) such as DeepMetab that we cannot understand, we could start to tell ourselves either of two narratives:

- The first narrative says that inexplicability is a drawback we often must put up with in order to gain the useful, probabilistic output that MLMs generate.

- The second says that the inexplicability is not a drawback but a truth. MLMs work because they’re better at reading the world than we are: they result from the statistical interrelating of more and finer-detailed data than other systems can manage, without having to explain themselves to us humans. Every time a concerned citizen or regulator cries out in understandable despair, ‘We don’t know how machine learning works!’, we hear that these models do indeed work.

If machine learning models work by dispensing with understandable rules, principles, laws and generalisations that explain complexity by simplifying it, then in the cry ‘It works!’ we detect beneath the Harmony of the Spheres the clacking and grating of all the motes and particulars asserting themselves in their interdependence as the Real. The success of our technology is teaching us that the world is the real black box.

From our watches to our cars, from our cameras to our thermostats, machine learning has already embedded itself in much of our everyday life. It’s being used to recommend videos, to attempt to identify hate speech, to steer vehicles on other planets and to control the spread of diseases, and it is essential to the struggle to mitigate the climate crisis. It’s imperfect and can amplify societal biases, but we continue to use it anyway because it works. That machine learning does all this without applying rules to particulars is startling and even discomforting. We’re so set in our preference for rules over particulars that it seems crazy to us to program a machine learning system to play the game Go without even telling it the rules, and instead giving it hundreds of thousands of boards and moves to analyse. But that is how machine learning has become the best Go player in history. Indeed, when developers give the system data relevant to a domain, they typically withhold, on purpose, what we already know about the interrelationships among its data.

For example, a healthcare MLM might train itself on data points from millions of health records that include information about patients’ weight, age, history of smoking, blood pressure, heart rate, prior diseases, treatments and results. The developers don’t tell the system generalisations such as that symptoms are signs of particular diseases, or that diseases can be treated with specific medicines except, sometimes, when the patient has allergies or a heart condition. We don’t tell it that there’s a relationship between smoking and lung diseases, or between LDL (low-density lipoproteins or ‘bad cholesterol’) and the medication simvastatin, or between lung capacity and blood oxygen levels. The MLM training process does not start from generalisations, usually does not output generalisations, and there might be no interpretable generalisations in between.

Now, the hairs on the back of the necks of people even lightly acquainted with machine learning are standing on end, because a machine learning model is created precisely by generalising from data. For example, if an MLM handwriting identifier hasn’t generalised from what it’s learned from the samples it was given, it will fail miserably at categorising characters it hasn’t seen before. Its failure to generalise would make it a useless model. In technical terms, it would be overfitted: fitted so closely to its training samples that it fails on anything else.
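
The extreme case of overfitting is a model that simply memorises its training examples. In this illustrative sketch (the images, labels and function names are hypothetical), a lookup table scores perfectly on the images it was given and fails on every new one, while a nearest-neighbour classifier, which labels a new image by its most similar training image, carries what it learned over to unseen handwriting.

```python
# A minimal sketch contrasting memorisation with generalisation, assuming
# tiny 5x3 images stored as tuples of strings ('#' = ink).

TRAINING = [
    ((".#.", ".#.", ".#.", ".#.", ".#."), "1"),
    (("##.", ".#.", ".#.", ".#.", ".#."), "1"),
    (("###", "..#", ".#.", ".#.", ".#."), "7"),
    (("###", "..#", "..#", "..#", "..#"), "7"),
]

UNSEEN = [
    ((".#.", ".#.", ".#.", ".#.", "##."), "1"),
    (("###", "..#", ".#.", "#..", "#.."), "7"),
]


def accuracy(predict, dataset):
    """Fraction of (image, label) pairs the predictor gets right."""
    return sum(predict(image) == label for image, label in dataset) / len(dataset)


memorised = dict(TRAINING)


def memoriser(image):
    """Perfect on images it has seen; returns None for anything else."""
    return memorised.get(image)


def hamming(a, b):
    """Number of pixels at which two images differ."""
    return sum(x != y for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


def nearest_neighbour(image):
    """Label of the most similar training image."""
    _, label = min(TRAINING, key=lambda pair: hamming(pair[0], image))
    return label


for name, predict in [("memoriser", memoriser), ("nearest neighbour", nearest_neighbour)]:
    print(name, accuracy(predict, TRAINING), accuracy(predict, UNSEEN))
```

Run as written, it prints `memoriser 1.0 0.0` and `nearest neighbour 1.0 1.0`: identical accuracy on the training set, opposite fortunes on numerals neither model has seen.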

But MLMs’ generalisations are unlike the traditional generalisations we use to explain particulars. We like traditional generalisations because (a) we can understand them; (b) they often enable deductive conclusions; and (c) we can apply them to particulars. But (a) an MLM’s generalisations are not always understandable; (b) they are statistical, probabilistic and primarily inductive; and (c) literally and practically, we usually cannot apply MLM generalisations except by running the machine learning model that resulted from them.

Further, an MLM’s generalisations can be oddly particular: a pattern of veins in a retinal scan might portend the onset of arthritis but only if there are 50 other factors with specific values in the overall health record, and those 50 factors can vary depending on their interrelation. It’s like wondering how your car avoided serious damage in that multi-car collision: the car had to overcome so many ifs, ands and ors that the event doesn’t reduce to a comprehensible rule that you could express or neatly apply to another situation. Or it’s like the clues in a murder mystery that indicate the killer only when taken together in ways that can’t be generalised and applied to future murder cases.

Our encounter with MLMs doesn’t deny that there are generalisations, laws or principles. It denies that they are sufficient for understanding what happens in a universe as complex as ours. The contingent particulars, each affecting all others, overwhelm the explanatory power of the rules and would do so even if we knew all the rules. For example, if you know the laws governing gravitational attraction and air resistance, and if you know the mass of a coin and of Earth, and if you know the height from which the coin will be dropped, you can calculate how long it will take the coin to hit the ground. That will likely be enough to meet your pragmatic purpose. But the traditional Western framing of such examples has overemphasised the calm power of the laws. To apply the rules fully, we would have to know every factor that has an effect on the fall, including which pigeons are going to stir up the airflow around the tumbling coin and the gravitational pull of distant stars tugging at it from all directions simultaneously. (Did you remember to include the distant comet?) To apply the laws with complete accuracy, we would need the comprehensive and impossible knowledge of Laplace’s demon.
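
The coin calculation itself is short. The sketch below uses standard values for the gravitational constant and for Earth’s mass and mean radius; the coin’s mass and drag coefficient are rough, hypothetical figures, and the drag model (quadratic air resistance, stepped forward in small time increments) is itself a simplification. Without air resistance the coin’s mass drops out entirely; it matters only once the air pushes back. Pigeons, stars and comets are, of course, left out.

```python
import math

# Standard physical constants (SI units).
G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
M_EARTH = 5.972e24   # mass of Earth, kg
R_EARTH = 6.371e6    # mean radius of Earth, m


def surface_gravity():
    """Newton's law of gravitation gives g = G * M / R^2 at Earth's surface."""
    return G * M_EARTH / R_EARTH**2


def fall_time_vacuum(height):
    """Time to fall `height` metres from rest with no air: h = g * t^2 / 2."""
    return math.sqrt(2 * height / surface_gravity())


def fall_time_with_drag(height, mass, drag_coeff, dt=1e-5):
    """Step the fall forward in small time increments, modelling air
    resistance (an assumption) as a force drag_coeff * v^2 opposing motion."""
    g = surface_gravity()
    y = v = t = 0.0
    while y < height:
        a = g - drag_coeff * v * v / mass  # net downward acceleration
        v += a * dt
        y += v * dt
        t += dt
    return t


# Hypothetical coin: about 5.7 g, with a rough guess at its drag coefficient.
print(fall_time_vacuum(2.0))
print(fall_time_with_drag(2.0, mass=0.0057, drag_coeff=3.3e-4))
```

For a 2 m drop, `fall_time_vacuum` returns about 0.638 s, and the drag version comes out slightly longer. Each further influence would need its own term, and the list never closes.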

That’s not a criticism of the pursuit of scientific laws, nor of the practice of science, which is usually empirical and sufficiently accurate for our needs – even if the degree of accuracy we can practically achieve silently shapes what we accept as our needs. But it should make us wonder why we in the West have treated the chaotic flow of the river we can’t step into twice as mere appearance, beneath which are the real and eternal principles of order that explain that flow. Why our ontological preference for the eternally unchanging over the eternally swirling water and dust?

These are familiar topics in the history of Western philosophy and religion, far beyond my scope. But in no small part it seems we have been attracted by the way eternal laws simplify the world sufficiently that we can understand it, and thus predict and control it. At the same time, these simple and often beautiful laws hide from us the chaos of the particulars that are determined not just by the laws but by the state of every other particular. But now we have a technology of prediction and control that directly derives from the small, simultaneous chaotic interactions of the totality. This technology gives us increased mastery, but not understanding. Its success brings attention to what escapes our understanding.

At the same time, and for the same reasons, machine learning may be breaking the West’s infatuation with certainty as the sign of knowledge, for machine learning outcomes are probabilistic. Indeed, if a machine learning model reports complete certainty in its results, that is a cause for scepticism about the model. Because machine learning’s outputs are probabilistic, they have some degree of inaccuracy built into them; a true probabilistic statement is one that correctly predicts how often it will be wrong.
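
The idea that a good probabilistic statement predicts how often it will be wrong is what machine learning practitioners call calibration. A minimal sketch of checking it, using hypothetical outcomes: group predictions by the confidence the model stated, then compare each stated confidence with how often the model was actually right. The function name `calibration_table` is illustrative, not a library API.

```python
from collections import defaultdict


def calibration_table(predictions):
    """Map each stated confidence (rounded to one decimal place) to the
    fraction of predictions at that confidence that turned out correct."""
    buckets = defaultdict(list)
    for confidence, correct in predictions:
        buckets[round(confidence, 1)].append(correct)
    return {
        conf: sum(outcomes) / len(outcomes)
        for conf, outcomes in sorted(buckets.items())
    }


# Hypothetical, well-calibrated model: right 9 times in 10 when it says 90%,
# and 6 times in 10 when it says 60%.
calibrated = [(0.9, True)] * 9 + [(0.9, False)] + [(0.6, True)] * 6 + [(0.6, False)] * 4

# Hypothetical, overconfident model: claims certainty but is wrong 2 times in 10.
overconfident = [(1.0, True)] * 8 + [(1.0, False)] * 2

print(calibration_table(calibrated))     # {0.6: 0.6, 0.9: 0.9}
print(calibration_table(overconfident))  # {1.0: 0.8}
```

The first model is wrong exactly as often as it says it will be. The second model’s claim of certainty is precisely what should make us sceptical: a confidence of 1.0 that comes true only 80 per cent of the time is a poorly calibrated claim.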

Now that we have mechanisms that stun us with a power wrung from swirls of particulars connected in incomprehensible, delicate webs, perhaps we will no longer write off those chaotic swirls as mere appearances to be penetrated. Perhaps the impenetrability of the effect of all on all will be the rock Samuel Johnson kicked to disprove Bishop Berkeley’s radical Idealism. This time, however, the rock will be pushing back against our Western assumption that what’s most real is what’s most unchanging, general and knowable.

Perhaps we will take as what’s ultimately real the unimaginable complexity of the simplest happening. And the primacy of accidents and happenstance. And the inadequacy of our 1.4 kg of brain in the face of the challenge we in the West have set for it. And the brutal unknowability of our world that blunts the edges of the axes of our understanding. If this is happening, it’s because we can now heed the voices of more particulars than we can count, each a tiny signal from our new cacophonous readout of the world – a readout that is yielding useful, surprising, probabilistic knowledge from the incomprehensible effect of all on all, ever and always.

The views expressed in this Essay are solely the author’s and do not necessarily represent the views of any organisation with which he has been affiliated.