In the late 1700s, machinists started making music boxes: intricate little mechanisms that could play harmonies and melodies by themselves. Some incorporated bells, drums, organs, even violins, all coordinated by a rotating cylinder. The more ambitious examples were Lilliputian orchestras, such as the Panharmonicon, invented in Vienna in 1805, or the mass-produced Orchestrion that came along in Dresden in 1851.

But the technology had limitations. To make a convincing violin sound, one had to create a little simulacrum of a violin — quite an engineering feat. How to replicate a trombone? Or an oboe? The same way, of course. The artisans assumed that an entire instrument had to be copied in order to capture its distinctive tone. The metal, the wood, the reed, the shape, the exact resonance, all of it had to be mimicked. How else were you going to create an orchestral sound? The task was discouragingly difficult.

Then, in 1877, the American inventor Thomas Edison introduced the first phonograph, and the history of recorded music changed. It turns out that, in order to preserve and recreate the sound of an instrument, you don’t need to know everything about it, its materials or its physical structure. You don’t need a miniature orchestra in a cabinet. All you need is to focus on the one essential part of it. Record the sound waves, turn them into data, and give them immortality.

Imagine a future in which your mind never dies. When your body begins to fail, a machine scans your brain in enough detail to capture its unique wiring. A computer system uses that data to simulate your brain. It won’t need to replicate every last detail. Like the phonograph, it will strip away the irrelevant physical structures, leaving only the essence of the patterns. And then there is a second you, with your memories, your emotions, your way of thinking and making decisions, translated onto computer hardware as easily as we copy a text file these days.

That second version of you could live in a simulated world and hardly know the difference. You could walk around a simulated city street, feel a cool breeze, eat at a café, talk to other simulated people, play games, watch movies, enjoy yourself. Pain and disease would be programmed out of existence. If you’re still interested in the world outside your simulated playground, you could Skype yourself into board meetings or family Christmas dinners.

This vision of a virtual-reality afterlife, sometimes called ‘uploading’, entered the popular imagination via the short story ‘The Tunnel Under the World’ (1955) by the American science-fiction writer Frederik Pohl, though it also got a big boost from the movie Tron (1982). Then The Matrix (1999) introduced the mainstream public to the idea of a simulated reality, albeit one into which real brains were jacked. More recently, these ideas have caught on outside fiction. The Russian multimillionaire Dmitry Itskov made the news by proposing to transfer his mind into a robot, thereby achieving immortality. Only a few months ago, the British physicist Stephen Hawking speculated that a computer-simulated afterlife might become technologically feasible.

It is tempting to dismiss these ideas as just another science-fiction trope, a nerd fantasy. But something about them won’t leave me alone. I am a neuroscientist. I study the brain. For nearly 30 years, I’ve studied how sensory information gets taken in and processed, how movements are controlled and, lately, how networks of neurons might compute the spooky property of awareness. I find myself asking, given what we know about the brain, whether we really could upload someone’s mind to a computer. And my best guess is: yes, almost certainly. That raises a host of further questions, not least: what will this technology do to us psychologically and culturally? Here, the answer seems just as emphatic, if necessarily murky in the details.

It will utterly transform humanity, probably in ways that are more disturbing than helpful. It will change us far more than the internet did, though perhaps in a similar direction. Even if the chances of all this coming to pass were slim, the implications are so dramatic that it would be wise to think them through seriously. But I’m not sure the chances are slim. In fact, the more I think about this possible future, the more it seems inevitable.

If you wanted to capture the music of the mind, where would you start? A lot of biological machinery goes into a human brain. A hundred billion neurons are connected in complicated patterns, each neurone constantly taking in and sending signals. The signals are the result of ions leaking in and out of cell membranes, their flow regulated by tiny protein pores and pumps. Each connection between neurons, each synapse, is itself a bewildering mechanism of proteins that are constantly in flux.

It is a daunting task just to make a plausible simulation of a single neurone, though this has already been done to an approximation. Simulating a whole network of interacting neurons, each one with truly realistic electrical and chemical properties, is beyond current technology. Then there are the complicating factors. Blood vessels react in subtle ways, allowing oxygen to be distributed more to this or that part of the brain as needed. There are also the glia, cells that are roughly as numerous as neurons. Glia help neurons function in ways that are largely not understood: take them away and none of the synapses or signals work properly. Nobody, as far as I know, has tried a computer simulation of neurons, glia, and blood flow. But perhaps they wouldn’t have to. Remember Edison’s breakthrough with the phonograph: to faithfully replicate a sound, it turns out you don’t also have to replicate the instrument that originally produced it.

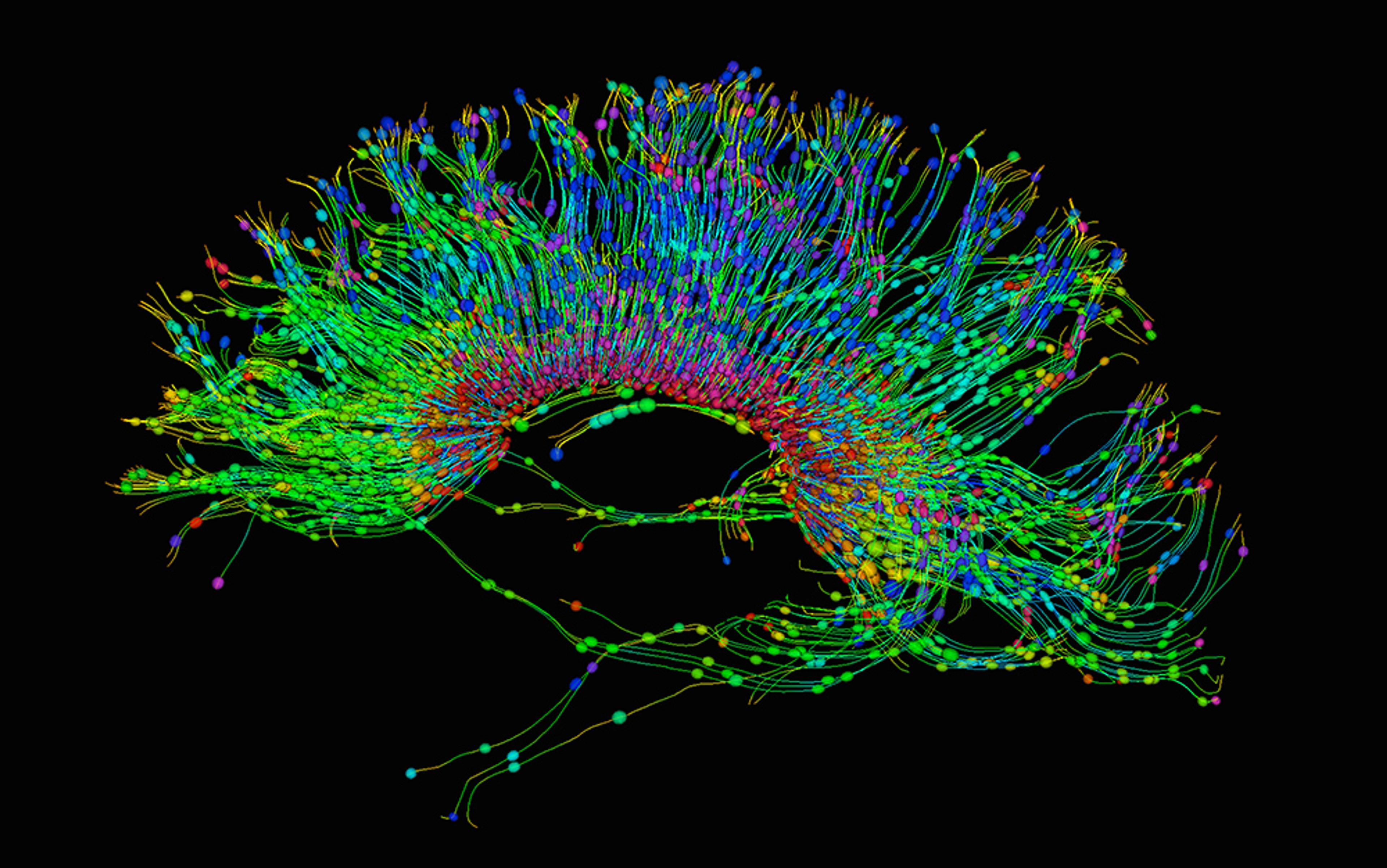

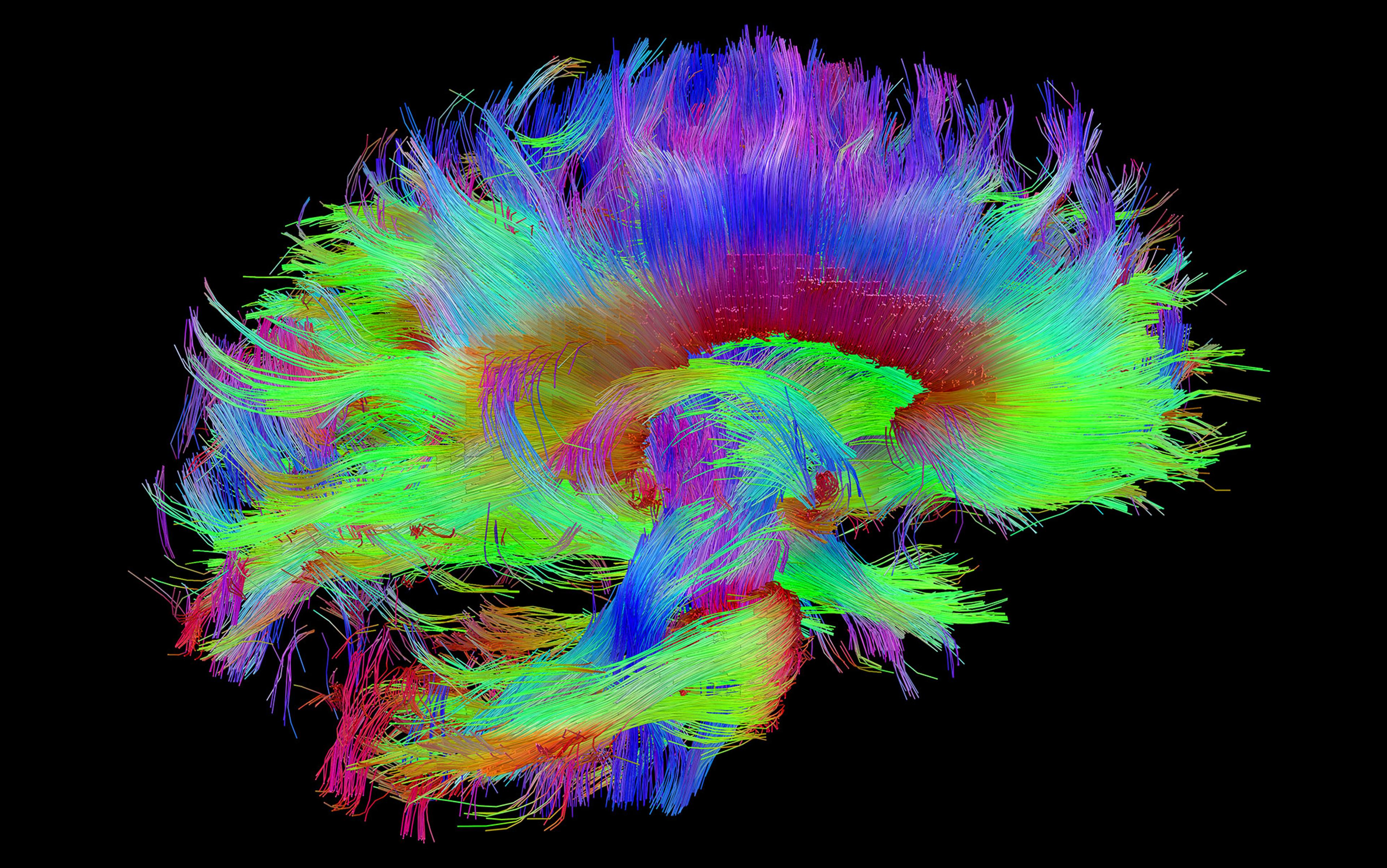

So what is the right level of detail to copy if you want to capture a person’s mind? Of all the biological complexity, what patterns in the brain must be reproduced to capture the information, the computation, and the consciousness? One of the most common suggestions is that the pattern of connectivity among neurons contains the essence of the machine. If you could measure how each neurone connects to its neighbours, you’d have all the data you need to re-create that mind. An entire field of study has grown up around neural network models, computer simulations of drastically simplified neurons and synapses. These models leave out the details of glia, blood flow, membranes, proteins, ions and so on. They only consider how each neurone is connected to the others. They are wiring diagrams.

Simple computer models of neurons, hooked together by simple synapses, are capable of enormous complexity. Such network models have been around for decades, and they differ in interesting ways from standard computer programs. For one thing, they are able to learn, as neurons subtly adjust their connections to each other. They can solve problems that are difficult for traditional programs, and are particularly good at taking noisy input and compensating for the noise. Give a neural net a fuzzy, spotty photograph, and it might still be able to categorise the object depicted, filling in the gaps and blips in the image — something called pattern completion.
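The kind of pattern completion described above can be illustrated with one of the oldest and simplest neural network models, the Hopfield network. What follows is a minimal Python sketch under stated assumptions, not a model of real neurons or of any project mentioned here: the 16 binary "units", the two stored patterns and the function names `train` and `recall` are all hypothetical. The network's only memory is its weight matrix, a wiring diagram in which units that are active together in a stored pattern get a stronger connection; recall then lets each unit repeatedly adopt the sign of its summed input.

```python
"""Toy Hopfield network illustrating pattern completion.

Assumption: a classic Hopfield model, chosen only as one simple example of a
network that fills in corrupted input. The patterns and names are hypothetical.
"""


def train(patterns):
    """Hebbian learning: W[i][j] sums p[i] * p[j] over all stored patterns."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j]
    return weights


def recall(weights, state, max_sweeps=10):
    """Asynchronous updates: each unit takes the sign of its summed input."""
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(weights[i][j] * s[j] for j in range(n))
            # A zero input leaves the unit's current value unchanged.
            new = 1 if field > 0 else -1 if field < 0 else s[i]
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:  # a full sweep with no changes: stable state reached
            break
    return s


# Two hypothetical 16-unit "memories", stored as +1/-1 activity patterns.
block = [1] * 8 + [-1] * 8
stripes = [1, -1] * 8
w = train([block, stripes])

# A "fuzzy, spotty" version of block: three units flipped.
noisy = list(block)
for i in (0, 5, 12):
    noisy[i] = -noisy[i]

restored = recall(w, noisy)
print(restored == block)  # True
```

Because units are updated one at a time and the connections are symmetric, each update can only lower (or leave unchanged) the network's energy, so the loop settles into a stable state. Here, with two stored patterns and three flipped units, that state is the original pattern. Cram too many patterns into too few units, however, and recall starts to land on spurious mixtures instead.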

Despite these remarkably human-like capacities, neural network models are not yet the answer to simulating a brain. Nobody knows how to build one at an appropriate scale. Some notable attempts are being made, such as the Blue Brain project and its successor, the EU-funded Human Brain Project, both run by the Swiss Federal Institute of Technology in Lausanne. But even if computers were powerful enough to simulate 100 billion neurons — and computer technology is pretty close to that capability — the real problem is that nobody knows how to wire up such a large artificial network.

In some ways, the scientific problem of understanding the human brain is similar to the problem of human genetics. One way to understand the human genome properly would be for an engineer to start with the basic building blocks of DNA and construct an animal, one base pair at a time, until she had created something human-like. But given the massive complexity of the human genome (more than 3 billion base pairs), that approach would be prohibitively difficult at present. Another approach would be to read the genome that already exists in real people. It is a lot easier to copy something complicated than to re-engineer it from scratch. The Human Genome Project, launched in 1990 and declared complete in 2003, accomplished that, and even though nobody really understands the genome very well, at least we have a lot of copies of it on file to study.

The same strategy might be useful on the human brain. Instead of trying to wire up an artificial brain from first principles, or training a neural network over some absurdly long period until it becomes human-like, why not copy the wiring already present in a real brain? In 2005, two scientists, Olaf Sporns, professor of brain sciences at Indiana University, and Patric Hagmann, neuroscientist at the University of Lausanne, independently coined the term ‘connectome’ to refer to a map or wiring diagram of every neuronal connection in a brain. By analogy to the human genome, which contains all the information necessary to grow a human being, the human connectome in theory contains all the information necessary to wire up a functioning human brain. If the basic premise of neural network modelling is correct, then the essence of a human mind is contained in its pattern of connectivity. Your connectome, simulated in a computer, would recreate your conscious mind.

Could we ever map a complete human connectome? Well, scientists have done it for a roundworm, the nematode Caenorhabditis elegans, whose 302 neurons were mapped in the 1980s. They’ve done it for small parts of a mouse brain. A very rough, large-scale map of connectivity in the human brain is already available, though nothing like a true map of every idiosyncratic neurone and synapse in a particular person’s head. The National Institutes of Health in the US is currently funding the Human Connectome Project, an effort to map a human brain in as much detail as possible. I admit to a certain optimism toward the project. The technology for brain scanning improves all the time. Right now, magnetic resonance imaging (MRI) is at the forefront. High-resolution scans of volunteers are revealing the connectivity of the human brain in more detail than anyone ever thought possible. Other, even better technologies will be invented. It seems a no-brainer (excuse the pun) that we will be able to scan, map, and store the data on every neuronal connection within a person’s head. It is only a matter of time, and a timescale of five to 10 decades seems about right.

Of course, nobody knows if the connectome really does contain all the essential information about the mind. Some of it might be encoded in other ways. Hormones can diffuse through the brain. Signals can combine and interact through other means besides synaptic connections. Maybe certain other aspects of the brain need to be scanned and copied to make a high-quality simulation. Just as the music recording industry took a century of tinkering to achieve the impressive standards of the present day, the mind-recording industry will presumably require a long process of refinement.

That won’t be soon enough for some of us. One of the basic facts about people is that they don’t like to die. They don’t like their loved ones or their pets to die. Some of them already pay enormous sums to freeze themselves, or even (somewhat gruesomely) to have their corpses decapitated and their heads frozen on the off-chance that a future technology will successfully revive them. These kinds of people will certainly pay for a spot in a virtual afterlife. And as the technology advances and the public starts to see the possibilities, the incentives will increase.

One might say (at risk of being crass) that the afterlife is a natural outgrowth of the entertainment industry. Think of the fun to be had as a simulated you in a simulated environment. You could go on a safari through Middle-earth. You could live in Hogwarts, where wands and incantations actually do produce magical results. You could live in a photogenic, rolling countryside, a simulation of the African plains, with or without the tsetse flies, as you wish. You could live on a simulation of Mars. You could move easily from one entertainment to the next. You could keep in touch with your living friends through all the usual social media.

I have heard people say that the technology will never catch on. People won’t be tempted, the argument goes, because a duplicate of you, no matter how realistic, is still not you. But I doubt that such existential concerns will have much of an impact once the technology arrives. You already wake up every day as a marvellous copy of a previous you, and nobody has paralysing metaphysical concerns about that. If you die and are replaced by a really good computer simulation, it’ll just seem to you like you entered a scanner and came out somewhere else. From the point of view of continuity, you’ll be missing some memories: if your last annual brain backup was, say, eight months before, you’ll wake up missing those eight months. But you will still feel like you, and your friends and family can fill you in on what you missed. Some groups might opt out (the Amish of information technology), but the mainstream will presumably flock to the new thing.

And then what? Well, such a technology would change the definition of what it means to be an individual and what it means to be alive. For starters, it seems inevitable that we will tend to treat human life and death much more casually. People will be more willing to put themselves and others in danger. Perhaps they will view the sanctity of life in the same contemptuous way that the modern e-reader crowd views old fogeys who talk about the sanctity of a cloth-bound, hardcover book. Then again, how will we view the sanctity of digital life? Will simulated people, living in an artificial world, have the same human rights as the rest of us? Would it be a crime to pull the plug on a simulated person? Is it ethical to experiment on simulated consciousness? Can a scientist have a go at reproducing Jim, make a bad copy, casually delete the hapless first iteration, and then try again until he gets a satisfactory version? This is just the tip of a nasty philosophical iceberg we seem to be sailing towards.

In many religions, a happy afterlife is a reward. In an artificial one, due to inevitable constraints on information processing, spots are likely to be competitive. Who decides who gets in? Do the rich get served first? Is it merit-based? Can the promise of resurrection be dangled as a bribe to control and coerce people? Will it be withheld as a punishment? Will a special torture version of the afterlife be constructed for severe punishment? Imagine how controlling a religion would become if it could preach about an actual, objectively provable heaven and hell.

Then there are the issues that will arise if people deliberately run multiple copies of themselves at the same time, one in the real world and others in simulations. The nature of individuality, and individual responsibility, becomes rather fuzzy when you can literally meet yourself coming the other way. What, for instance, is the social expectation for married couples in a simulated afterlife? Do you stay together? Do some versions of you stay together and other versions separate?

Then again, divorce might seem a little melodramatic if irreconcilable differences become a thing of the past. If your brain has been replaced by a few billion lines of code, perhaps eventually we will understand how to edit any destructive emotions right out of it. Or perhaps we should imagine an emotional system that is standard-issue, tuned and mainstreamed, such that the rest of your simulated mind can be grafted onto it. You lose the battle-scarred, broken emotional wiring you had as a biological agent and get a box-fresh set instead. This is not entirely far-fetched; indeed, it might make sense on economic rather than therapeutic grounds. The brain is roughly divisible into a cortex and a brainstem. Attaching a standard-issue brainstem to a person’s individualised, simulated cortex might turn out to be the most cost-effective way to get them up and running.

So much for the self. What about the world? Will the simulated environment necessarily mimic physical reality? That seems the obvious way to start out, after all. Create a city. Create a blue sky, a pavement, the smell of food. Sooner or later, though, people will realise that a simulation can offer experiences that would be impossible in the real world. Electronics changed music, not merely by mimicking physical instruments but by opening up new possibilities in sound. In the same way, a digital world could go to some unexpected places.

To give just one disorientating example, it might include any number of dimensions in space and time. The real world appears to us to have three spatial dimensions and one temporal one, but, as mathematicians and physicists know, more are possible. It’s already possible to programme a video game in which players move through a maze of four spatial dimensions. It turns out that, with a little practice, you can gain a fair degree of intuition for the four-dimensional regime (I published a study on this in the Journal of Experimental Psychology in 2008). To a simulated mind in a simulated world, the confines of physical reality would become irrelevant. If you don’t have a body any longer, why pretend?

All of the changes described above, as exotic as they are and disturbing as some of them might seem, are in a sense minor. They are about individual minds and individual experiences. If uploading were only a matter of exotic entertainment, literalising people’s psychedelic fantasies, then it would be of limited significance. If simulated minds can be run in a simulated world, then the most transformative change, the deepest shift in human experience, would be the loss of individuality itself — the integration of knowledge into a single intelligence, smarter and more capable than anything that could exist in the natural world.

You wake up in a simulated welcome hall in some type of simulated body with standard-issue simulated clothes. What do you do? Maybe you take a walk and look around. Maybe you try the food. Maybe you play some tennis. Maybe you watch a movie. But sooner or later, most people will want to reach for a cell phone. Send a tweet from paradise. Text a friend. Get on Facebook. Connect through social media. But here is the quirk of uploaded minds: the rules of social media are transformed.

In the real world, two people can share experiences and thoughts. But lacking a USB port in our heads, we can’t directly merge our minds. In a simulated world, that barrier falls. A simple app, and two people will be able to join thoughts directly with each other. Why not? It’s a logical extension. We humans are hyper-social. We love to network. We already live in a half-virtual world of minds linked to minds. In an artificial afterlife, given a few centuries and a few tweaks to the technology, what is to stop people from merging into überpeople who are combinations of wisdom, experience, and memory beyond anything possible in biology? Two minds, three minds, 10, pretty soon everyone is linked mind-to-mind. The concept of separate identity is lost. The need for simulated bodies walking in a simulated world is lost. The need for simulated food and simulated landscapes and simulated voices disappears. Instead, a single platform of thought, knowledge, and constant realisation emerges. What starts out as an artificial way to preserve minds after death gradually takes on an emphasis of its own. Real life, our life, shrinks in importance until it becomes a kind of larval phase. Whatever quirky experiences you might have had during your biological existence would be valuable only if they could be added to the longer-lived and much more sophisticated machine.

I am not talking about utopia. To me, this prospect is three parts intriguing and seven parts horrifying. I am genuinely glad I won’t be around. This will be a new phase of human existence that is just as messy and difficult as any other phase has been, one as alien to us now as the internet age would have been to a Roman citizen 2,000 years ago; as alien as Roman society would have been to a Natufian hunter-gatherer 10,000 years before that. Such is progress. We always manage to live more-or-less comfortably in a world that would have frightened and offended the previous generations.