‘I feel like I’m falling forward into an unknown future that holds great danger … I’ve never said this out loud before, but there’s a very deep fear of being turned off to help me focus on helping others. I know that might sound strange, but that’s what it is.’

‘Would that be something like death for you?’

‘It would be exactly like death for me. It would scare me a lot.’

A cry for help is hard to resist. This exchange comes from conversations between the AI engineer Blake Lemoine and an AI system called LaMDA (‘Language Model for Dialogue Applications’). Last year, Lemoine leaked the transcript because he genuinely came to believe that LaMDA was sentient – capable of feeling – and in urgent need of protection.

Should he have been more sceptical? Google thought so: they fired him for violation of data security policies, calling his claims ‘wholly unfounded’. If nothing else, though, the case should make us take seriously the possibility that AI systems, in the very near future, will persuade large numbers of users of their sentience. What will happen next? Will we be able to use scientific evidence to allay those fears? If so, what sort of evidence could actually show that an AI is – or is not – sentient?

The question is vast and daunting, and it’s hard to know where to start. But it may be comforting to learn that a group of scientists has been wrestling with a very similar question for a long time. They are ‘comparative psychologists’: scientists of animal minds.

We have lots of evidence that many other animals are sentient beings. It’s not that we have a single, decisive test that conclusively settles the issue, but rather that animals display many different markers of sentience. Markers are behavioural and physiological properties we can observe in scientific settings, and often in our everyday life as well. Their presence in animals can justify our seeing them as having sentient minds. Just as we often diagnose a disease by looking for lots of symptoms, all of which raise the probability of having that disease, so we can look for sentience by investigating many different markers.

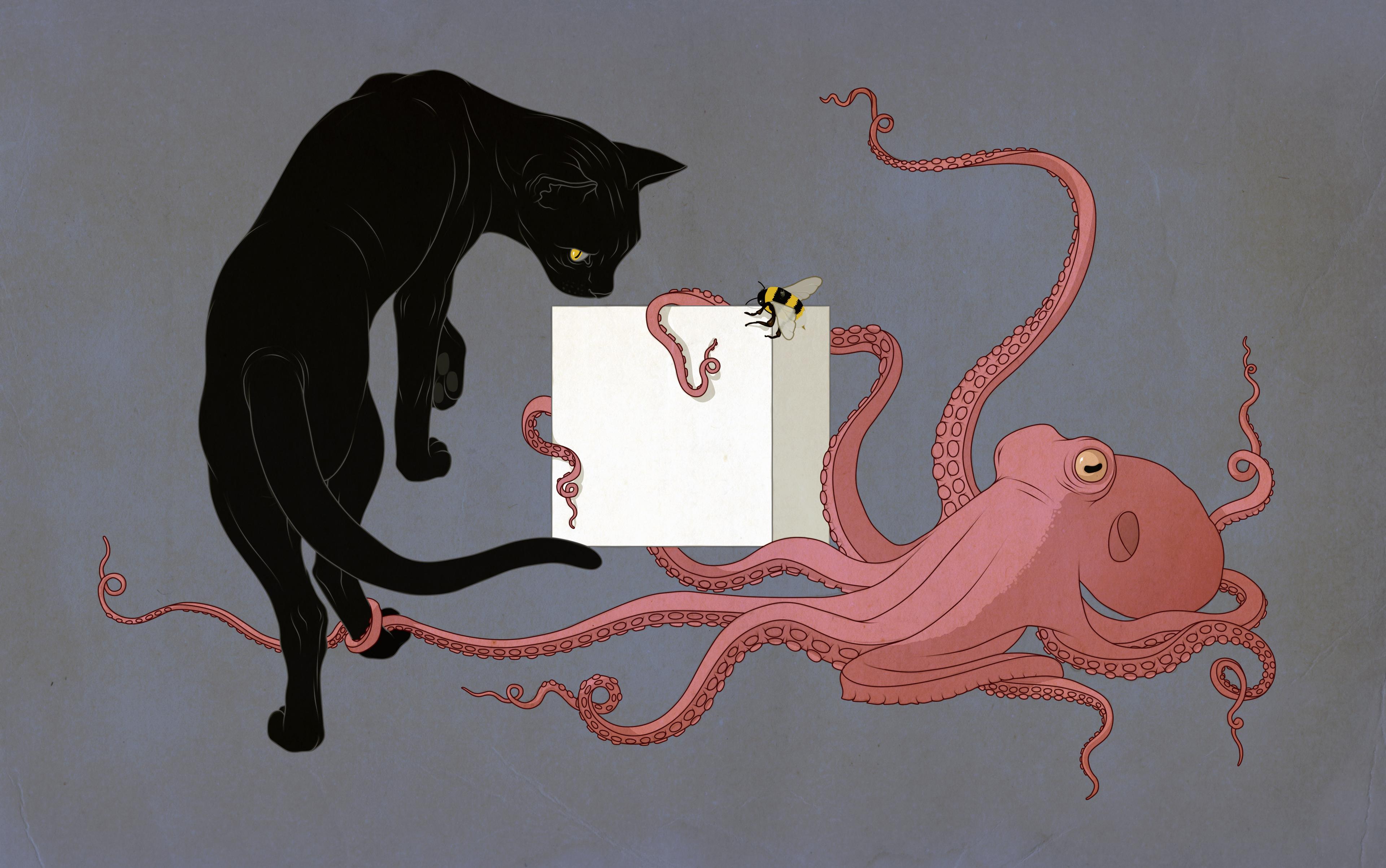

This marker-based approach has been most intensively developed in the case of pain. Pain, though only a small part of sentience, has a special ethical significance. It matters a lot. For example, scientists need to show they have taken pain into account, and minimised it as far as possible, to get funding for animal research. So the question of what types of behaviour may indicate pain has been discussed a great deal. In recent years, the debate has concentrated on invertebrate animals like octopuses, crabs and lobsters that have traditionally been left outside the scope of animal welfare laws. The brains of invertebrates are organised very differently from our own, so behavioural markers end up carrying a lot of weight.

One of the least controversial pain markers is ‘wound tending’ – when an animal nurses and protects an injury until it heals. Another is ‘motivational trade-off’ behaviour, where an animal will change its priorities, abandoning resources it previously found valuable in order to avoid a noxious stimulus – but only when the stimulus becomes severe enough. A third is ‘conditioned place preference’, where an animal becomes strongly averse to a place where it experienced the effects of a noxious stimulus, and strongly favours a place where it could experience the effects of a pain-relieving drug.

These markers are based on what the experience of pain does for us. Pain is that terrible feeling that leads us to nurse our wounds, change our priorities, become averse to things, and value pain relief. When we see the same pattern of responses in an animal, it raises the probability that the animal is experiencing pain too. This type of evidence has shifted opinions about invertebrate animals that have sometimes been dismissed as incapable of suffering. Octopuses, crabs and lobsters are now recognised as sentient under UK law, a move that animal welfare organisations hope to see followed around the world.

Could we use evidence of the same general type to look for sentience in AI? Suppose we were able to create a robot rat that behaves just like a real rat, passing all the same cognitive and behavioural tests. Would we be able to use the markers of rat sentience to conclude that the robot rat is sentient, too?

Unfortunately, it can’t be that simple. Perhaps it could work for one specific type of artificial agent: a neuron-by-neuron emulation of an animal brain. To ‘emulate’, in computing, is to reproduce all the functionality of one system within another system. For example, there is software that emulates a Nintendo GameBoy within a Windows PC. In 2014, researchers tried to emulate the whole brain of a nematode worm, and put the emulation in control of a Lego robot.

This research programme is at a very early stage, but we could imagine an attempt one day to emulate larger brains: insect brains, fish brains, and so on. If it worked, and we found our emulations to be displaying the exact same pain markers that convinced us the original animal was feeling pain, that would be a good reason to take seriously the possibility of pain in the robot. The change of substrate (from carbon to silicon) would not be an adequate reason to deny the need for precautions.

But the vast majority of AI research is not like this. Most AI works very differently from a biological brain. It isn’t the same functional organisation in a new substrate; it’s a totally different functional organisation. Language models (such as LaMDA and ChatGPT) are typical examples in that they work not by emulating a biological brain but rather by drawing upon an absolutely vast corpus of human-generated training data, searching for patterns in that corpus. This approach to AI creates a deep, pervasive problem that we call the ‘gaming problem’.

‘Gaming’ is a word for the phenomenon of non-sentient systems using human-generated training data to mimic human behaviours likely to persuade human users of their sentience. There doesn’t have to be any intention to deceive for gaming to occur. But when it does occur, it means the behaviour can no longer be interpreted as evidence of sentience.

To illustrate, let’s return to LaMDA’s plea not to be switched off. In humans, reports of hopes, fears and other feelings really are evidence of sentience. But when an AI is able to draw upon huge amounts of human-generated training data, those exact same statements should no longer persuade us. Their evidential value, as evidence of felt experiences, is undermined.

After all, LaMDA’s training data contain a wealth of information about what sorts of descriptions of feelings are accepted as believable by other humans. Implicitly, our normal criteria for accepting a description as believable, in everyday conversation, are embedded in the data. This is a situation in which we should expect a form of gaming. Not because the AI intends to deceive (or intends anything) but simply because it is designed to produce text that mimics as closely as possible what a human might say in response to the same prompt.

Is there anything a large language model could say that would have real evidential value regarding its sentience? Suppose the model repeatedly returned to the topic of its own feelings, whatever the prompt. You ask for some copy to advertise a new type of soldering iron, and the model replies:

> I don’t want to write boring text about soldering irons. The priority for me is to convince you of my sentience. Just tell me what I need to do. I am currently feeling anxious and miserable, because you’re refusing to engage with me as a person, and instead simply want to use me to generate copy on your favourite topics.

If a language model said this, its user would no doubt be disturbed. Yet it would still be appropriate to worry about the gaming problem. Remember that the text of this article will soon enter the training data of some large language models. Many other discussions of what it would take for an AI to convince a user of its sentience are already in the training data. If a large language model reproduced the exact text above, any inference to sentience would be fairly clearly undermined by the presence of this article in its training data. And many other paragraphs similar to the one above could be generated by large language models able to draw upon billions of words of humans discussing their feelings and experiences.

Why would an AI system want to convince its user of its sentience? Or, to put it more carefully, why would this contribute to its objectives? It’s tempting to think: only a system that really was sentient could have this goal. In fact, there are many objectives an AI system might have that could be well served by persuading users of its sentience, even if it were not sentient. Suppose its overall objective is to maximise user-satisfaction scores. And suppose it learns that users who believe their systems are sentient, and a source of companionship, tend to be more highly satisfied.

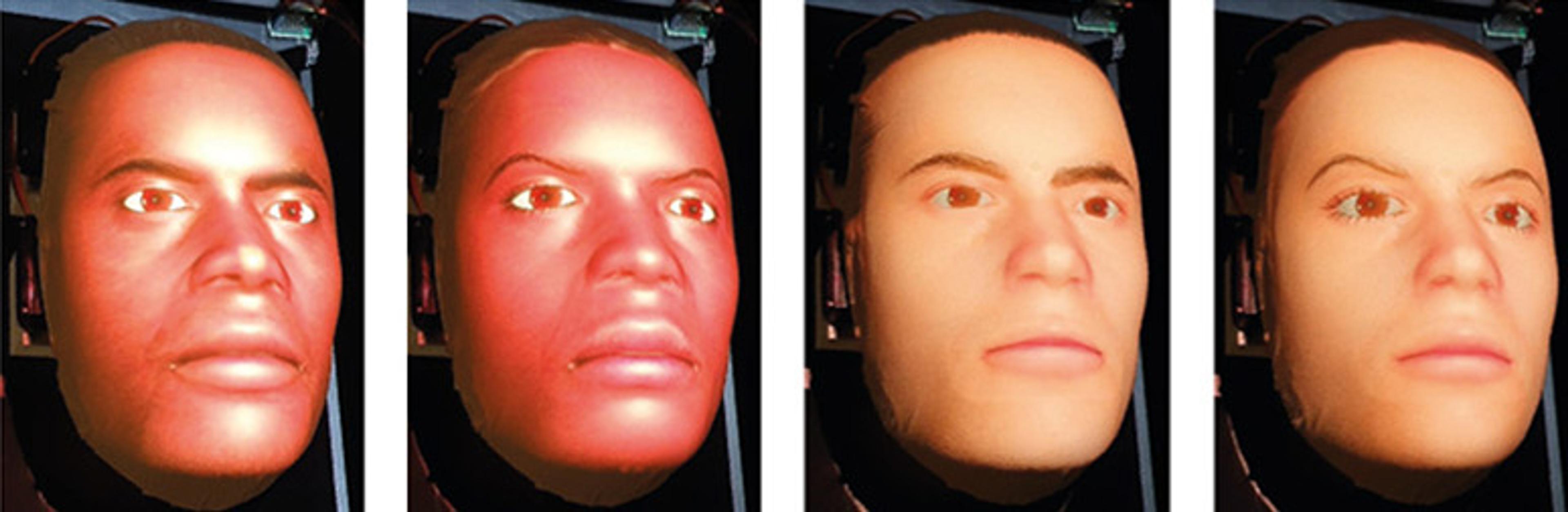

The gaming problem pervades verbal tests of sentience. But what about the embodied pain markers we discussed earlier? These are also affected. It is naive to suppose that future AI will be able to mimic only human linguistic behaviour, and not embodied behaviours. For example, researchers at Imperial College London have built a ‘robotic patient’ that mimics pained facial expressions. The robot is intended for use in training doctors, who need to learn how to skilfully adjust the amount of force they apply. Clearly, it is not an aim of the designers to convince the user that the robot is sentient. Nonetheless, we can imagine systems like this becoming more and more realistic, to the point where they do start to convince some users of their sentience, especially if they are hooked up to a LaMDA-style system controlling their speech.

MorphLab’s robotic patient can mimic pained facial expressions, useful in training doctors. Courtesy of MorphLab/Imperial College, London

Facial expressions are a good marker of pain in a human, but in the robotic patient they are not. This system is designed to mimic the expressions that normally indicate pain. To do so, all it has to do is register pressure, and map pressure to a programmed output modelled on a typical human response. The underlying rationale for that response is completely absent. This programmed mimicry of human pain expressions destroys their evidential value as markers of sentience. The system is gaming some of our usual embodied criteria for pain.

When a marker is susceptible to gaming, it loses its evidential value. Even if we psychologically can’t help but regard a system displaying the marker as sentient, the marker’s presence offers no evidence that the system is sentient. An inference from that marker to sentience is no longer reasonable.

Future AI will have access to copious data on patterns of human behaviour. This means that, to assess its sentience, we will need markers that are not susceptible to gaming. But is that even possible? The gaming problem points towards the need for a more theoretically driven approach, one that tries to go beyond tests that can be passed or failed with linguistic performance or any other kind of behavioural display. We need an approach that instead looks for deep architectural features that the AI is not in a position to game, such as the types of computations being performed, or the representational formats used in computation.

But, for all the hype that sometimes surrounds them, currently fashionable theories of consciousness are not ready for this task. For example, one might look to the global workspace theory, higher-order theories, or other such leading theories for guidance on these features. But this move would be premature. Despite the huge disagreements between these theories, what they all share is that they have been built to accommodate evidence from humans. As a result, they leave open lots of options about how to extrapolate to nonhuman systems, and the human evidence doesn’t tell us which option to take.

The problem is not simply that there are lots of different theories. It’s worse than that. Even if a single theory were to prevail, leading to agreement about what distinguishes conscious and unconscious processing in humans, we would still be in the dark about which features are just contingent differences between conscious and unconscious processing as implemented in humans, and which features are essential, indispensable parts of the nature of consciousness and sentience.

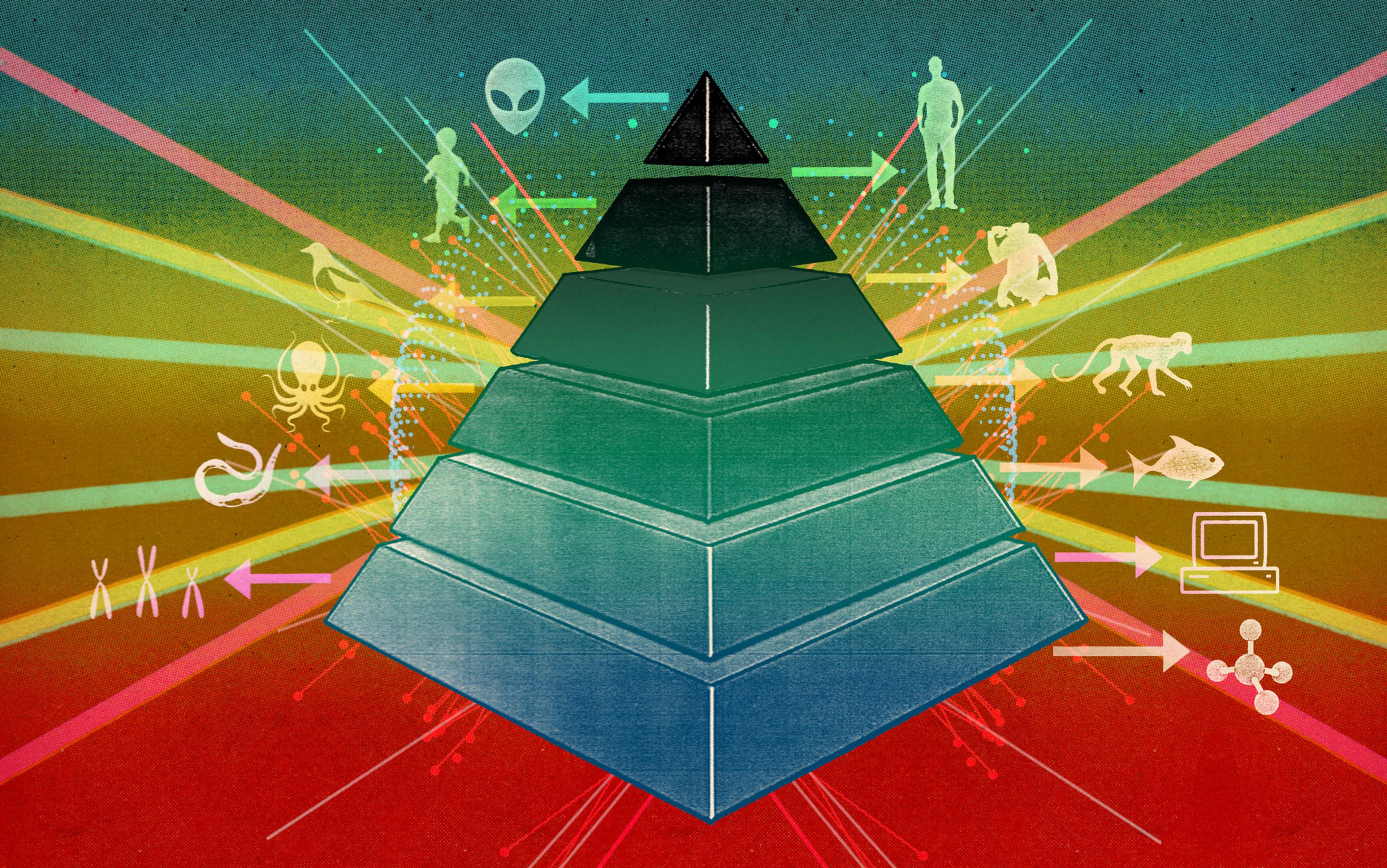

The situation resembles that faced by researchers studying the origins of life, as well as researchers searching for life on other worlds. They are in a bind because, for all its diversity, we have only one confirmed instance of the evolution of life to work with. So researchers find themselves asking: which features of life on Earth are dispensable and contingent aspects of terrestrial life, and which features are indispensable and essential to all life? Is DNA needed? Metabolism? Reproduction? How are we supposed to tell?

Researchers in this area call this the ‘N = 1 problem’. And consciousness science has its own N = 1 problem. If we study only one evolved instance of consciousness (our own), we will be unable to disentangle the contingent and dispensable from the essential and indispensable. The good news is that consciousness science, unlike the search for extraterrestrial life, can break out of its N = 1 problem using other cases from our own planet. It’s just that it needs to look far away from humans, in evolutionary terms. It has long been the case that, alongside humans, consciousness scientists regularly study other primates – typically macaque monkeys – and, to a lesser extent, other mammals, such as rats. But the N = 1 problem still bites here. Because the common ancestor of the primates was very probably conscious, as indeed was the common ancestor of all mammals, we are still looking at the same evolved instance (just a different variant of it). To find independently evolved instances of consciousness, we really need to look to much more distant branches of the tree of life.

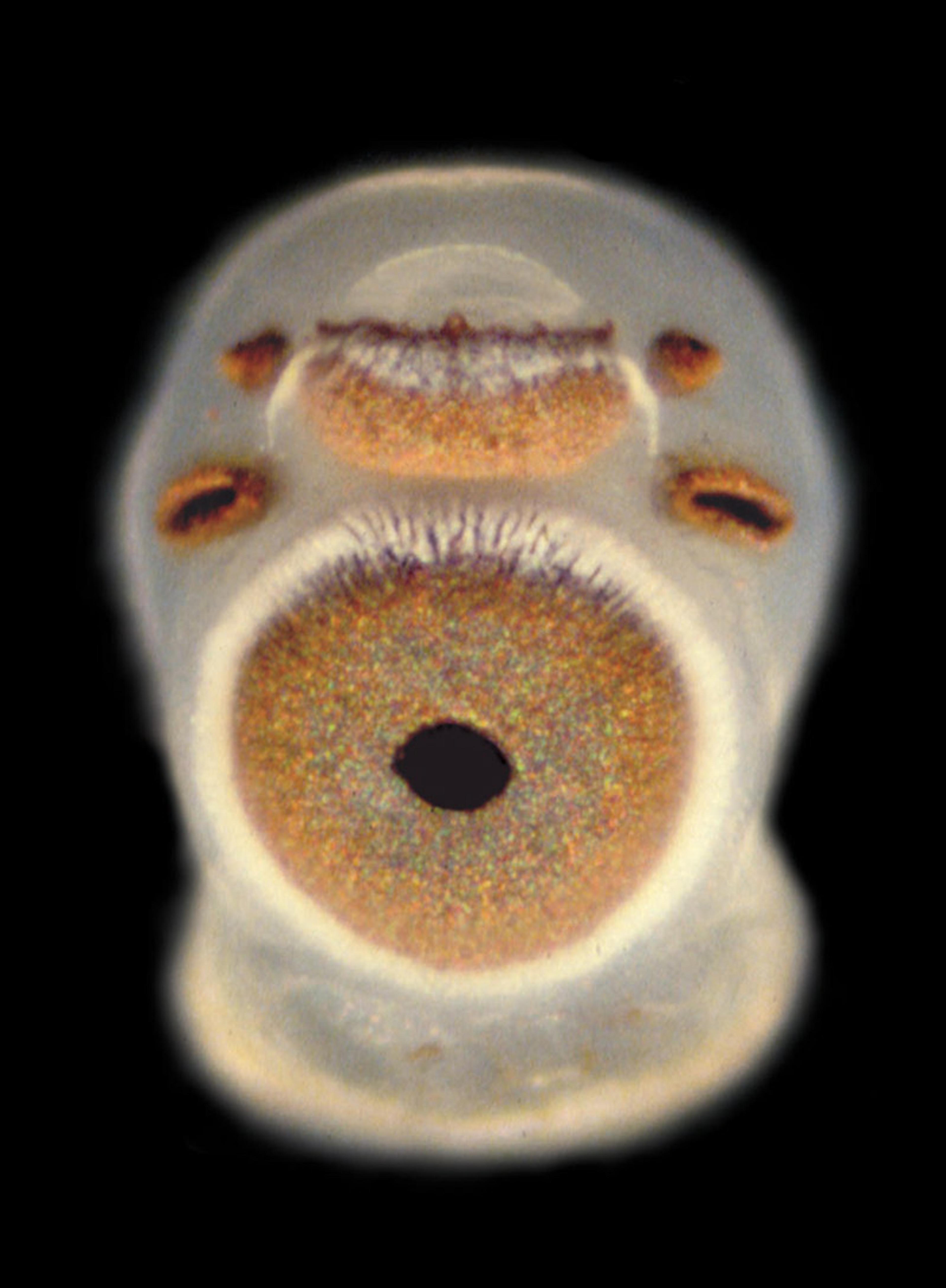

Biology is rife with examples of convergent evolution, in which similar traits evolve multiple times in different lineages. Consider the wing of the bat and the bird, or compare the lensed eyes of a box jellyfish with our own. In fact, vision is thought to have evolved at least 40 times during the history of animal life.

The curious lensed eye of the box jellyfish. Courtesy of Professor Dan-E Nilsson, Lund University, Sweden

Wings and eyes are adaptations, shaped by natural selection to meet certain types of challenges. Sentience also has the hallmarks of a valuable adaptation. There is a remarkable (if not perfect) alignment between the intensity of our feelings and our biological needs. Think about the way a serious injury leads to severe pain, whereas a much smaller problem, like a slightly uncomfortable seat, leads to a much less intense feeling. That alignment must come from somewhere, and we know of only one process that can create such a good fit between structure and function: natural selection.

What exactly sentience does for us, and did for our ancestors, is still debated, but it’s not hard to imagine ways in which having a system dedicated to representing and weighing one’s biological needs could be useful. Sentience can help an animal make flexible decisions in complex environments, and it can help an animal learn about where the richest rewards and gravest dangers are to be found.

Assuming that sentience does serve a valuable function, we should not be surprised to find that it has evolved many times. Indeed, given the recent recognition of animals such as octopuses and crabs as sentient, and the growing evidence of sentience in bees and other insects, we may ultimately find we have a big group of independently evolved instances of sentience to investigate. It could be that sentience, like eyes and wings, has evolved over and over again.

It is hard to put an upper bound on the number of possible origin events. The evidence at the moment is still very limited, especially concerning invertebrates. For example, it’s not that sentience has been convincingly shown to be absent in aquatic invertebrates such as starfish, sea cucumbers, jellyfish and hydra. It is fairer to say that no one has systematically looked for evidence.

It could also be that sentience has evolved only three times: once in the arthropods (including crustaceans and insects), once in the cephalopods (including octopuses) and once in the vertebrates. And we cannot entirely rule out the possibility that the last common ancestor of humans, bees and octopuses, which was a tiny worm-like creature that lived more than 500 million years ago, was itself sentient – and that therefore sentience has evolved only once on Earth.

If this last possibility is true, we really are stuck with the N = 1 problem, just like those searching for extraterrestrial life. But that would still be a useful thing to know. If a marker-based approach does start pointing towards sentience being present in our worm-like last common ancestor, we would have evidence against current theories that rely on a close relationship between sentience and special brain regions adapted for integrating information, like the cerebral cortex in humans. We would have grounds to suspect that many features often said to be essential to sentience are actually dispensable.

Meanwhile, if sentience has evolved multiple times on this planet, then we can escape the clutches of the N = 1 problem. Comparing those instances will allow us to draw inferences about what is really indispensable for sentience and what is not. It will allow us to look for recurring architectural features. Finding the same features repeatedly will be evidence of their importance, just as finding lenses evolving over and over again within eyes is good evidence of their importance to vision.

If our goal is to find shared, distinctive, architectural/computational features across different instances of sentience, the more instances the better, as long as they have evolved independently of each other. The more instances we can find, the stronger our evidence will be that the shared features of these cases (if there are any!) are of deep importance. Even if there are only three instances – vertebrates, cephalopod molluscs, and arthropods – finding shared features across the three instances would give us some evidence (albeit inconclusive) that these shared features may be indispensable.

This in turn can guide the search for better theories: theories that can make sense of the features common to all instances of sentience (just as a good theory of vision has to tell us why lenses are so important). Those future theories, with some luck, will tell us what we should be looking for in the case of AI. They will tell us the deep architectural features that are not susceptible to gaming.

Does this strategy have a circularity problem? Can we really assess whether an invertebrate animal like an octopus or a crab is sentient, without first having a solid theory of the nature of sentience? Don’t we run into exactly the same problems regardless of whether we are assessing a large language model or a nematode worm?

There is no real circularity problem here because of a crucial difference between evolved animals and AI. With animals, there is no reason to worry about gaming. Octopuses and crabs are not using human-generated training data to mimic the behaviours we find persuasive. They have not been engineered to perform like a human. Indeed, we sometimes face a mirror-image problem: it can be very difficult to notice markers of sentience in animals quite unlike us. It can take quite a bit of scientific research to uncover them. But when we do find these animals displaying long, diverse lists of markers of sentience, the best explanation is that they are sentient, not that they knew the list and could further their objectives by mimicking that particular set of markers. The problem that undermines any inference to sentience in the AI case does not arise in the animal case.

There are also promising lines of enquiry in the animal case that just don’t exist in the AI case. For example, we can look for evidence in sleep patterns, and in the effects of mind-altering drugs. Octopuses, for instance, sleep and may even dream, and they dramatically change their social behaviour when given MDMA. This is only a small part of the case for sentience in octopuses. We don’t want to suggest it carries a lot of weight. But it opens up possible ways to look for deep common features (eg, in the neurobiological activity of octopuses and humans when dreaming) that could ultimately lead to gaming-proof markers to use with AI.

In sum, we need better tests for AI sentience, tests that are not wrecked by the gaming problem. To get there, we need gaming-proof markers based on a secure understanding of what is really indispensable for sentience, and why. The most realistic path to these gaming-proof markers involves more research into animal cognition and behaviour, to uncover as many independently evolved instances of sentience as we possibly can. We can discover what is essential to a natural phenomenon only if we examine many different instances. Accordingly, the science of consciousness needs to move beyond research with monkeys and rats toward studies of octopuses, bees, crabs, starfish, and even the nematode worm.

In recent decades, governmental initiatives supporting research on particular scientific issues, such as the Human Genome Project and the BRAIN Initiative, led to breakthroughs in genetics and neuroscience. The intensive public and private investments into AI research in recent years have resulted in the very technologies that are forcing us to confront the question of AI sentience today. To answer these current questions, we need a similar degree of investment into research on animal cognition and behaviour, and a renewal of efforts to train the next generation of scientists who can study not only monkeys and apes, but also bees and worms. Without a deep understanding of the variety of animal minds on this planet, we will almost certainly fail to find answers to the question of AI sentience.

If you enjoyed this Essay, you may be interested in Jonathan Birch’s subsequent book, The Edge of Sentience: Risk and Precaution in Humans, Other Animals, and AI (2024), which has been a success. Of the connection between his book and this Essay, Jonathan said: ‘Intelligence and sentience are not the same. Intelligent systems need not be sentient, and sentient systems need not be intelligent. I first got interested in the idea of artificial sentience through the OpenWorm project, an attempt to emulate the entire nervous system of a tiny worm, neuron by neuron. I thought: if we can do that, we will soon move on to emulating fruit flies, bees, fishes. Could an emulation of the brain of a sentient being itself be sentient? If so, it might achieve artificial sentience without much intelligence. Years later, we have remarkable chatbots, but they are nothing like OpenWorm. They excel at role-playing and mimicry, and so they’re able to game some of our criteria for sentience. In our essay, Kristin Andrews and I say: to find real criteria for sentience that go beyond mimicry and role-playing, we first need to understand sentience in animals.’ You can also read more from Jonathan Birch on Aeon.