If you suspect that 21st-century technology has broken your brain, it will be reassuring to know that attention spans have never been what they used to be. Even the ancient Roman philosopher Seneca the Younger was worried about new technologies degrading his ability to focus. Sometime during the 1st century CE, he complained that ‘The multitude of books is a distraction’. This concern resurfaced again and again over the centuries that followed. By the 12th century, the Chinese philosopher Zhu Xi saw himself living in a new age of distraction thanks to the technology of print: ‘The reason people today read sloppily is that there are a great many printed texts.’ And in 14th-century Italy, the scholar and poet Petrarch made even stronger claims about the effects of accumulating books:

Believe me, this is not nourishing the mind with literature, but killing and burying it with the weight of things or, perhaps, tormenting it until, frenzied by so many matters, this mind can no longer taste anything, but stares longingly at everything, like Tantalus thirsting in the midst of water.

Technological advances would only make things worse. A torrent of printed texts inspired the Renaissance scholar Erasmus to complain of feeling mobbed by ‘swarms of new books’, while the French theologian Jean Calvin wrote of readers wandering into a ‘confused forest’ of print. That easy and constant redirection from one book to another was feared to be fundamentally changing how the mind worked. Apparently, the modern mind – whether metaphorically undernourished, harassed or disoriented – has been in no position to do any serious thinking for a long time.

In the 21st century, digital technologies are inflaming the same old anxieties about attention and memory – and inspiring some new metaphors. We can now worry that the cognitive circuitry of the brain has been ‘rewired’ through interactions with Google Search, smartphones and social media. The rewired mind now delegates tasks previously handled by its in-built memory to external devices. Thoughts dart from idea to idea; hands drift unwittingly toward pockets and phones. It may seem that constant access to the internet has degraded our capacity for sustained attention. This apparent rewiring has been noticed with general uneasiness, sometimes with alarm, and very often with advice about how to return to a better, supposedly more ‘natural’ way of thinking. Consider these headlines: ‘Is Google Making Us Stupid?’ (Nicholas Carr, The Atlantic, 2008); ‘Have Smartphones Destroyed a Generation?’ (Jean M Twenge, The Atlantic, 2017); or ‘Your Attention Didn’t Collapse. It Was Stolen’ (Johann Hari, The Observer, 2022). This longing to return to a past age of properly managed attention and memory is hardly new. Our age of distraction and forgetting joins the many others on historical record: the Roman empire of Seneca, the Song Dynasty of Zhu, the Reformation of Calvin.

Plato would have us believe that this double feeling of anxiety and nostalgia is as old as literacy itself, an inescapable problem that is inherent in the technology of writing. In one of his dialogues, the Phaedrus, he recounts how the ancient inventor of writing, an Egyptian god named Theuth, presents his work to the king of the gods. ‘This invention, O king,’ says Theuth, ‘will make the Egyptians wiser and will improve their memories; for it is an elixir of memory and wisdom.’ The Egyptian king of the gods, Thamus, predicts the opposite:

For this invention will produce forgetfulness in the minds of those who learn to use it, because they will not practise their memory. Their trust in writing, produced by external characters which are no part of themselves, will discourage the use of their own memory within them. You have invented an elixir not of memory, but of reminding; and you offer your pupils the appearance of wisdom, not true wisdom, for they will read many things without instruction and will therefore seem to know many things, when they are for the most part ignorant and hard to get along with, since they are not wise, but only appear wise.

The gods’ predictions contradict one another, but they share an underlying theory of cognition. Each assumes that human inventions like writing can alter thought, and even create new methods of thinking. In 1998, the philosophers Andy Clark and David J Chalmers called this interactive system, composed of the inner mind cooperating with the outer world of objects, ‘the extended mind’. Our ability to think, they claimed, could be altered and extended through technologies like writing. This modern idea expresses a much older notion about the entanglement of interior thought and exterior things. Though Clark and Chalmers wrote about this entanglement with a note of wonder, other thinkers have been less sanguine about the ways that cognition extends itself. For Seneca, Zhu and Calvin, this ‘extension’ was just as readily understood as cognitive ‘degradation’, anticipating today’s alarm about smartphones and Google ‘making us stupid’ or ‘breaking’ our brains.

For as long as technologies of writing and reading have been extending the mind, writers have offered strategies for managing that interaction and given advice for thinking properly in media environments that appeared hostile to ‘proper’ thought. It’s not hard to find earlier theories of how technologies such as writing or printed books shaped thought. However, these theories don’t give us a sense of exactly how minds were being shaped, or of what was gained by thinking differently. To understand the entanglement of books and minds as it was taking shape, we might turn to readers and writers in Europe during the Middle Ages, when bookshelves swelled with manuscripts but memory and attention seemed to shrivel.

Writing during the 13th century, the grammarian Geoffrey of Vinsauf had plenty of advice for writers overwhelmed with information. A good writer must not hurry; they must use the ‘measuring line of the mind’ to compose a mental model before rushing into the work of writing: ‘Let not your hand be too swift to grasp the pen … Let the inner compasses of the mind lay out the entire range of the material.’ Geoffrey expresses an ideal here, but his handbook gives us little access to thinking as it really happened at a medieval desk, quill in hand, before a blank page. The intellectual historian Ayelet Even-Ezra pursues one route around this problem in Lines of Thought (2021). For her, ‘lines of thought’ are the lines of connection structuring the many branching diagrams that fill the pages of medieval manuscripts. One such horizontal tree can be seen crawling across the book’s cover:

Lines of Thought (2021) by Ayelet Even-Ezra

Follow these branches on the book’s cover to the root, and you will see that the diagram grows from a neuron. This union of nervous system and diagram-tree suggests the book’s argument rather directly: for Even-Ezra, these horizontal trees written by medieval scribes did not simply record information – they recorded pathways for thinking that were enabled by the branching form of the tree itself. Branching diagrams reveal the medieval extended mind at work in its interactions with pen, ink and the blank space of the page.

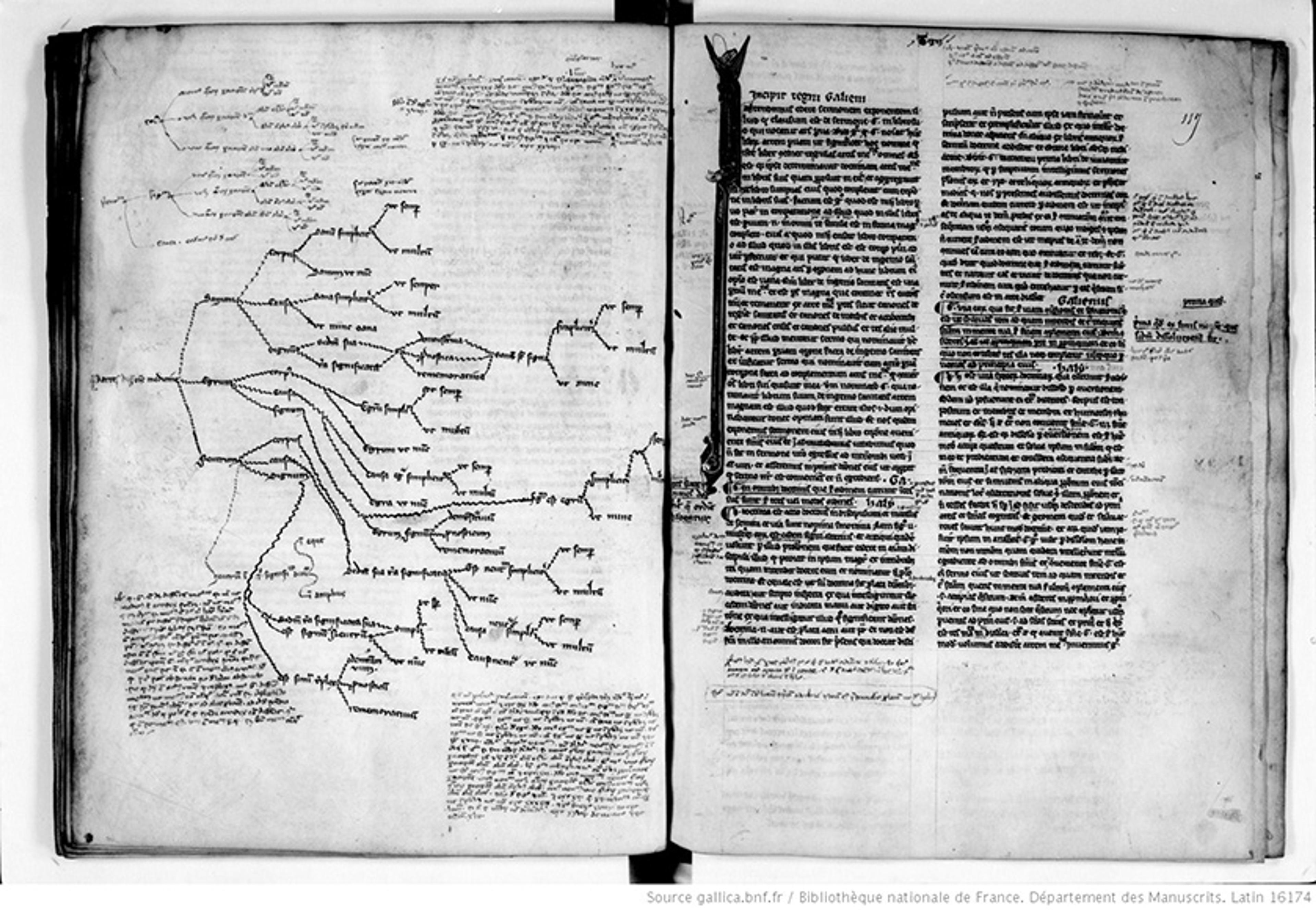

Pay close attention to these diagrams, and sometimes they will reveal a medieval cognitive process as it played out. Here is one 13th-century diagram examined by Even-Ezra:

The Latin translation of the aphorisms by Hippocrates of Kos (460-375 BCE), Prognosticon (‘Book of Prognosis’) and Regimen acutorum (‘Regimen in Acute Diseases’), with the commentary traditionally attributed to the Greek physician and philosopher Galen (c130-200). Latin 16174, fol 116v. Courtesy the Bibliothèque nationale de France

This diagram, mapping out the branches of medicine, does not seem to have gone as the scribe planned. The first branch sprawls evenly and comfortably. The second branch, however, is awkwardly diverted: an offshoot, which seems to have occurred to the scribe only later, has been grafted on. The lowest branch is a thicket of revision and deviating thought-lines. Even-Ezra notes the obvious: this scribe did not gauge the available space properly at the start. That was part of the problem. But it is also evident that the exact structure of this information had ‘emerged during the process of drawing’; written diagrams like this one facilitated complex, abstract thinking. These new abstract thoughts could surprise the thinker, who accommodated them wherever possible while sketching the diagram. Even-Ezra suggests that the horizontal-tree format made concepts ‘easier to manipulate’, abstracting them from the linearity of language. Filling out the many branches of these diagrams ‘paved the way for new questions’.

Centuries later, we can look at one of these diagrams and see how the scribe thought, and how practices of writing made such thinking possible. Even-Ezra makes the branching diagram a crucial device of an extended medieval mind, specific to its moment: it was a tool for thinking that could reconcile ‘complexity and simplicity, order and creativity, simultaneously’. Through it, the mind could be unburdened. At the height of the Middle Ages in the 13th and early 14th centuries, this was especially important. The period’s scholastic theologians and philosophers strove to organise their knowledge of the world into an all-encompassing system of thought. Ideally, this system was to be meticulously complex yet grounded in basic, knowable principles, like the divinely ordered world it sought to understand.

Remember the story of Thamus, that sceptical Egyptian god who predicted that young minds would be ruined by writing? The branching diagram, in Even-Ezra’s account, represents one good outcome of the invention of writing. These diagrams could facilitate deeper reflection, especially of an abstract kind, during sessions of intensive reading. They could also be aids to memory, rather than its substitutes, because they repackaged information in formal patterns that could stick in the mind. Medieval note-takers filled the margins of their books with these diagrams, and many are evidence of careful attention and a desire to crystallise new knowledge. Even-Ezra describes how the rise of these diagrams – a new kind of writing technology – reshaped cognition.

We can see the effects drawn out on the page. Geoffrey of Vinsauf might have looked on in horror as medieval diagrammers, against his best advice, took up the pen to draw out abstract ideas not yet fully composed. But, like Even-Ezra, we can watch these developments with no anxiety or alarm. From a safe historical distance, Lines of Thought proposes that the medieval vogue for branching trees subtly rewired the medieval mind. Yet today, neither Even-Ezra nor we worry about the old ways of thinking that may have been lost in the process.

We might follow a similar line through the long history of technology-induced media anxiety. There have been thousands of years of analogous fears of broken, distracted, stupefied brains – whatever metaphors are invented to express them. Our present worries are a novel iteration of an old problem. We’ve always been rewired (even before new media technologies went electric and metaphors of ‘wiring’ became ubiquitous; the metaphor itself is older, and really caught on in the telegraph era).

Consider another example: have indexes in printed books made us more distracted readers? In Index, A History of the (2022), the English historian Dennis Duncan makes Plato’s anecdote about the Egyptian gods Theuth and Thamus the ancient point of origin for a long historical arc of tech anxiety bending towards Google. At points between Plato and search engines, Duncan plots the rise of the index as a necessary piece of search equipment for readers. Compilers and users of early indexes in the 16th century, such as the Swiss physician Conrad Gessner, saw great potential in them, but also had reservations. Gessner used this technology in many of his books, creating impressive indexes of animals, plants, languages, books, writers and other people, creatures and things. He thought that well-compiled indexes were the ‘greatest convenience’ and ‘absolutely necessary’ to scholars. Yet he also knew that careless scholars sometimes read only indexes, instead of the whole work.

The index invited a kind of misuse that was an affront to the honest scholarship Gessner believed it was supposed to serve. Erasmus, that intellectual giant of the Renaissance, was another critic of the misuse of the index, yet he was less concerned about lazy, index-first readers than about the writers who exploited this tendency. Since so many people ‘read only titles and indexes’, writers began to put their most controversial (even salacious) material there in search of a wider audience and better sales. The index, in other words, had become the perfect place for early modern clickbait. It was up to the good reader to ‘click through’ – to read the whole book and not just the punchy index entries – before rushing to judgment. Erasmus did not expect many readers to put in the legwork. But he makes no argument against indexes themselves, any more than he argues against title pages (which were also newfangled, time-saving additions to printed books). For Erasmus, the index was a tool that was only as good as its readers. Duncan gives us a history of anxious controversy around the index and how people have used it, taking this unremarkable, familiar feature of every book’s back matter and revealing its early career as the latest technological threat to proper thought.

Should we look back on these changing interactions between books and minds, and worry that some ‘Great Rewiring’ was taking place centuries ago? Obviously not. Even if we believe that a commonplace way of writing down ideas on the page really was changing the way medieval minds worked, as Even-Ezra argues, we don’t look back with regret. Even if the new multitudes of books, and the indexes mapping them, alarmed those who witnessed their proliferation and what seemed to be the demise of careful, attentive reading, we raise no alarms in retrospect. New regimes of memory and attention replace the old ones. Eventually they become the old regimes and are replaced, then longed for.

That longing now takes shape as a nostalgia for the good old days when people were ‘voracious readers’ of books, especially novels. Johann Hari, in his book Stolen Focus (2022), introduces us to a young bookseller who cannot finish any of the books by Vladimir Nabokov, Joseph Conrad or Shirley Jackson that she picks up: ‘[S]he could only get through the first chapter or two, and then her attention puttered out, like a failing engine.’ The would-be reader’s mind just runs out of steam. Hari himself retreats to a seaside town to escape the ‘pings and paranoias of social media’ and thus recover the lost experience of attention and memory. Reading Dickens was part of his self-prescribed cure: ‘I was becoming much more deeply immersed in the books I had chosen. I got lost in them for really long stretches; sometimes for whole days – and I felt like I was understanding and remembering more and more of what I read.’ To Hari, and many others, re-focusing on reading fiction is one obvious method to return the mind to some previous and better state of attention and remembering. This novel cure is a method so obvious, occurring to so many, that it often goes unexplained.

Getting lost in books, in novels, has been recast as a virtuous practice in modern life: the habit and the proof of a healthy mind. The same practice, however, has looked to others like a pathology. The ‘voracious reader’ could just as easily be Petrarch’s intellectually malnourished, overstimulated junkie, strung out on a diet of flimsy texts: ‘frenzied by so many matters, this mind can no longer taste anything’. Don Quixote epitomised the pathological reader, so enthralled by his fictitious books of romance that his mind loses its hold on reality. In Jane Austen’s England, around the turn of the 19th century, as more women and a growing middle class began to read novels, warnings were issued against their unhealthy effects. Concerned observers in the early 1800s wrote that a ‘passion for novel reading’ was ‘one of the great causes of nervous disorders’ and a threat to the ‘female mind’. Watch out, one wrote in 1806, for ‘the excess of stimulus on the mind from the interesting and melting tales, that are peculiar to novels’.

Later, in the 20th century, Walter Benjamin theorised that the urbanite’s solitary reading of mass-produced novels had made it almost impossible for them to achieve the state of mind required for storytelling. For him, novels – in tandem with newspapers and their drip-drip-drip of useful information – made the true mental relaxation that comes from boredom much harder to find. (He saw boredom as the natural incubator for storytelling.) It is remarkable how two different eras could both say something like: ‘We live in a distracted world, almost certainly the most distracted world in human history,’ and then come to exactly opposite conclusions about what that means, and what one should do.

Hari’s seaside idyll of properly managed attention (ie, getting lost in books) would have been taken as a tell-tale sign of a pathologically overstimulated mind in another age. That irony of history might be instructive to us. New technologies will certainly come along to vie for our attention, or to unburden our memory with ever-easier access to information. And our minds will adapt as we learn to think with them. In Stolen Focus, Hari quotes the biologist Barbara Demeneix, who says that ‘there is no way we can have a normal brain today’. There’s a yearning here, after some lost yesterday, when the mind worked how it was meant to. When was that, exactly? Seneca, Petrarch, and Zhu would all like to know.

Tech nostalgia tends to look wrong-headed eventually, whether it longs for the days before Gutenberg, or before daily newspapers, or before Twitter. Hari makes a good case that we need to work against the ways that our minds have been systematically ‘rewired’ to align with the interests of tech giants, polluters and even a culture of overparenting. He doesn’t believe we can truly opt out of the age of distraction by, say, ditching the smartphone. We will still worry, as we should, about how our minds interact with external things. But together we should imagine a future of more conscientious thinking, not a past.