I am sitting in my daughter’s hospital room – she is prepping for a common procedure – when the surgeon pulls up a chair. I expect he will review the literal order of operations and offer the comforting words parents and children require even in the face of routine procedures. Instead, he asks us which of two surgical techniques we think would be best. I look at him incredulously and then manage to say: ‘I don’t know. I’m not that kind of doctor.’ After a brief discussion, my husband and I tell him what, to us, seems obvious: the doctor should choose the procedure that, in his professional opinion, carries the greatest chance of success and the least risk. He should act as if our daughter is his.

In truth, this encounter should not have surprised me. I have for several years been working on a book about risk as a form of social and political logic: a lens for apprehending the world and a set of tools for taming uncertainty within it. It is impossible to tell the contemporary story of risk without considering what scholars call responsibilisation or individuation – essentially, the practice of thrusting increasing amounts of responsibility onto individuals who become, as the scholar Tina Besley wrote, ‘morally responsible for navigating the social realm using rational choice and cost-benefit calculations’. In the United States, business groups and politicians often describe offloading more responsibilities onto citizens as ‘empowering’ them. It is perhaps telling that this jargon prevails in the private healthcare sector, just as moves to privatise social security in the US are cast as empowering employees to invest their retirement savings however they see fit.

In Individualism and Economic Order (1948), F A Hayek wrote: ‘if the individual is to be free to choose, it is inevitable that he should bear the risk attaching to that choice,’ further noting that ‘the preservation of individual freedom is incompatible with a full satisfaction of our views of distributive justice.’ Over the past several decades, Hayek’s position has gone mainstream but also become somewhat emaciated, such that individual freedom substantively means consumer choice – the presumed sanctity of which must be preserved across all sectors. But devolved responsibility favours those with more capacity to evaluate and make decisions about complex phenomena – those of us, for instance, with high levels of education and social access to doctors and investment managers to call for advice. And indeed, it’s striking that the embrace of responsibilisation as a form of individual ‘empowerment’ has accompanied the deepening of inequality in Western democracies.

This trend persists despite growing recognition from psychologists and economists that most of us are not rational decision-makers and that we are particularly terrible at assessing risk. Whether it is the likelihood of dying in a terrorist attack or succumbing to COVID-19, research shows that people tend to overestimate high-profile threats and underestimate everyday ones like driving a car. A cottage industry has sprung up to cater to this observable gap between perceived and actual risk. Books like Gerd Gigerenzer’s Risk Savvy (2014) and Dan Gardner’s Risk: The Science and Politics of Fear (2008) try to guide individuals toward more rational risk assessments; online tools invite users to calculate their chance of heart disease or becoming a crime victim; and websites like oddsofdying.com compile data on dozens of deadly threats – from earthquakes and plane crashes to bee stings and malaria. Yet there is an appreciable disconnect between what the data say and how many of us feel. As Gardner’s book puts it: ‘We are the safest humans who ever lived – the statistics prove it. So why has anxiety become the stuff of daily life? Why do we live in a culture of fear?’

How can we account for this disconnect? Why, given our demonstrable lack of capacity, are we still charged with becoming better risk calculators? And what type of political subject is the actuarial self?

Given that few objects elicit yawns quite like an actuarial table, it is worth underscoring what is at stake in conversations about risk: death, fear, and our ability to control what befalls us in the future. In his triumphal history Against the Gods: The Remarkable Story of Risk (1996), Peter Bernstein argued that risk management could not emerge without ‘the notion that the future is more than a whim of the gods.’ According to Bernstein, the idea of risk made the world modern by allowing humans to slough off centuries of theological baggage and chart their own paths. One can see how this notion contributed to the philosophical edifice of Western individualism, and why this conceptualisation of individual autonomy and responsibility has remained attractive down to our own time.

Bernstein traced the origins of risk to the 17th century, when these new ideas about human agency began to circulate alongside the mathematics of probability, which posited that past events could be systematically analysed to yield insights about the future. Such notions could arise only in post-Renaissance Europe, he argued, which explains why ‘the fatalistic Muslims were not yet ready to take the leap’ despite their mathematical sophistication. In Bernstein’s telling, the exceptionalism of European culture – not shifts in governance or the rise of capitalism – accounts for how the West broke the bonds of fatalism.

While positive associations still adhere to entrepreneurs and other ‘risk-takers’ today, they have become a minority discourse. This is one reason why Bernstein’s End-of-History-era euphoria sounds like a dispatch from another planet. For many, the language of risk instead denotes everything that could go wrong: climate risk, health risks, cyber risk, financial risk, terrorism risk, AI risk, and so forth. Outside certain pockets of Silicon Valley – home to self-described techno-optimists such as Marc Andreessen – thoughts of the future are more likely to elicit anxiety or dread than excitement.

Human attempts to scientifically manage risk have expanded dramatically alongside this dimming of hopes for the future. While many risk-management practitioners stress that theirs is a young field – no more than 40 to 45 years old, according to Terje Aven, judging by when ‘the first scientific journals, papers and conferences covering fundamental ideas and principles on how to appropriately assess and manage risk’ emerged – certain patterns date back several centuries.

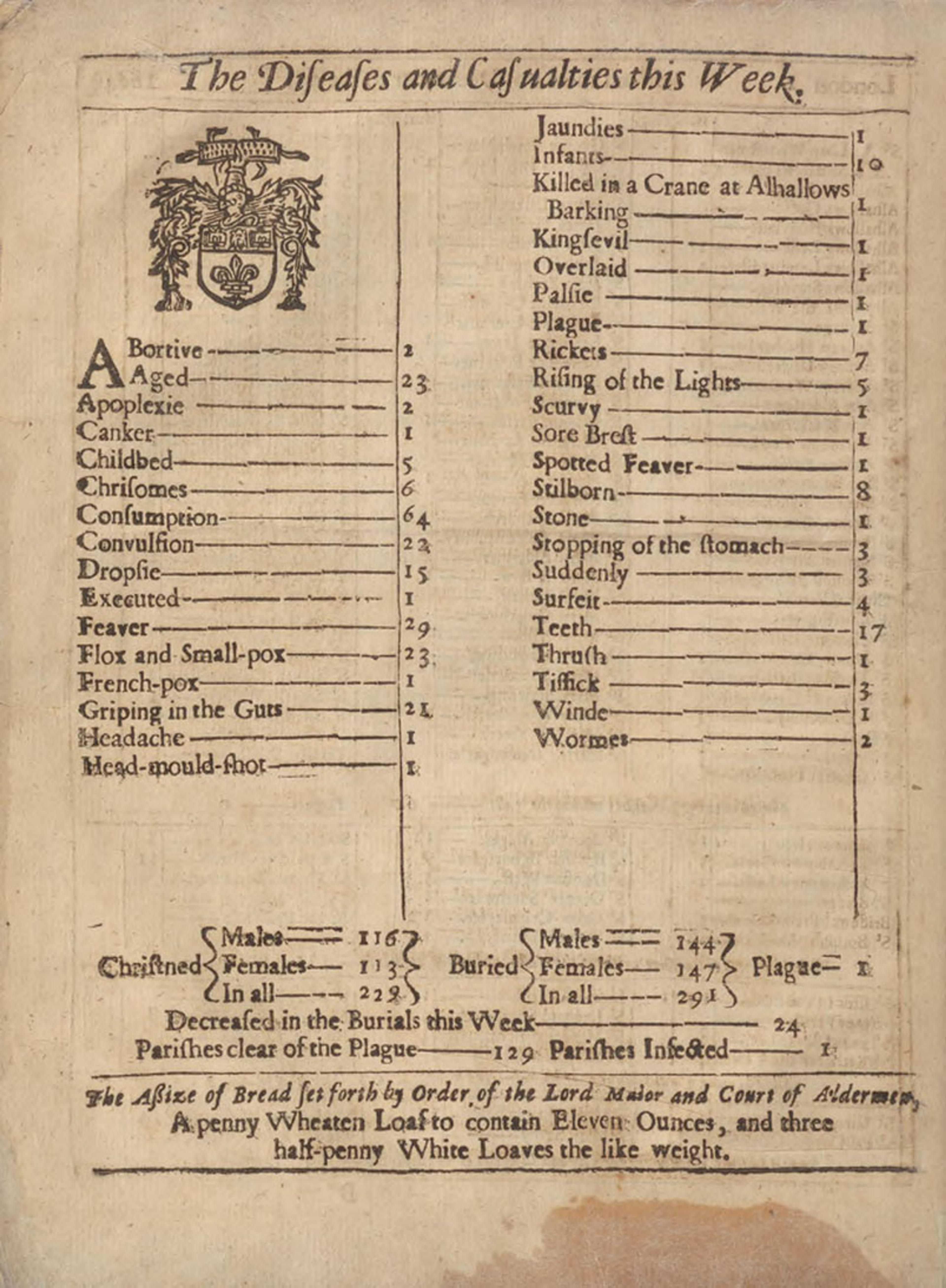

In 1603, in the midst of a plague outbreak that would kill a fifth of the population, the City of London began producing weekly bills of mortality. It was the duty of parish clerks to record and report the total number of burials, plague deaths and christenings for their congregation (in an early instance of sampling bias, the data thus excluded information about nonconformers). By the 1660s, these bills came to include a range of ailments that one might succumb to: ‘aged’, ‘childbed’, ‘dropsie’, ‘executed’, ‘griping in the guts’, ‘infants’, ‘rickets’, ‘sore brest’ [sic], ‘teeth’, and dozens more (see below).

London’s Dreadful Visitation, or, A Collection of All the Bills of Mortality for this Present Year (1665). Courtesy of the Public Domain Review

As the data shows, deaths from consumption represented just over 20 per cent of the total burials, while fever, ‘flox and small-pox’, old age and ‘convulsion’ together accounted for another third. The table also underscores the high rate of infant mortality, with 10 deaths, eight stillborn, and two abortions recorded. As public health records – indeed, as artefacts of the emerging idea that health was something that existed beyond individuals and required management at population level – the London mortality bills are invaluable. They also helped give rise to a new type of figure in public life: the data-wielding expert who compared human fears about death with the actual human experience of death, according to numbers.

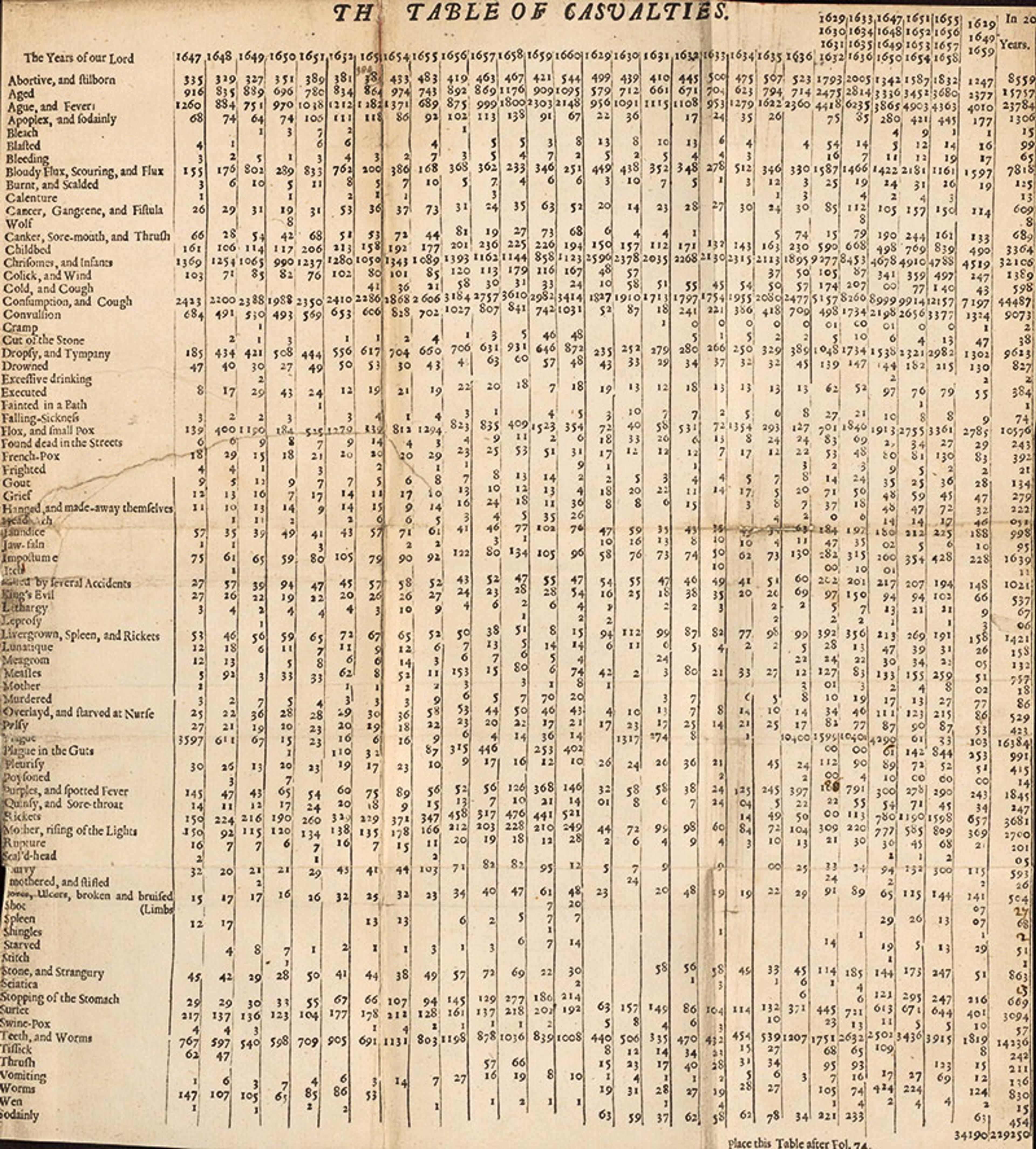

John Graunt (1620-74) was a London haberdasher turned demography pioneer who published the work Natural and Political Observations … Made Upon the Bills of Mortality (1662). Alongside his friend and sometimes collaborator, William Petty, Graunt is considered one of the founding fathers of statistics. Sensing that the mortality bills might be good for something beyond warning the rich when to retreat to their country homes, Graunt used them to tabulate and compare casualties for the years 1629-60, as well as the total number of burials and christenings from 1604-61. Applying a series of crude mathematical assumptions, Graunt estimated the total population of London – and, crucially, the number of military-aged men – as well as the likelihood of dying from various causes. As he explained his task, ‘whereas many persons live in great fear, and apprehension of some of the more formidable, and notorious diseases following; I shall only set down how many died of each, being compared to the total 229,250, those persons may the better understand the hazard they are in.’ With his data, Graunt assured readers that the chance of succumbing to much-feared toads or snakes was slim, and likewise that any man at present ‘well in his Wits’ had less than a 1/1000 chance of dying ‘a Lunatick in Bedlam’ over the next seven years.

Graunt’s attempts to better understand the objective risk of harm resemble those found in the Port Royal Logic (1662), published in France the same year that Graunt’s Observations appeared in England. The work of Antoine Arnauld and Pierre Nicole, philosopher-theologians associated with the Port Royal Abbey, the Port Royal Logic charted a path between scepticism and credulity in an effort ‘to make us more reasonable in our hopes and fears’. The authors considered the case of a princess who, having heard of someone being crushed by a falling ceiling, refused to enter any home without first having it inspected. ‘The flaw in this reasoning is that in order to decide what we ought to do to obtain some good or avoid some harm, it is necessary to consider not only the good or harm in itself, but also the probability that it will or will not occur,’ they wrote. For instance, though many feared thunder because of ‘the danger of dying by lightning … it is easy to show it is unreasonable. For out of 2 million people, at most there is one who dies in this way … So, then, our fear of some harm ought to be proportional not only to the magnitude of the harm, but also to the probability of the event.’

Despite the apparent similarities in tone, the Port Royal Logic and Graunt’s mortality tables advanced very different approaches to risk. The Port Royal authors had no empirical data to guide them about the frequency of lightning or anything else. Their project was rather a rationalist attempt to hone man’s common sense which, they noted, was none too common. They provided a set of tools and guidelines aimed at increasing the practical reasoning capabilities of the reader so that they could make better judgments. In contrast, Graunt was among the first empiricists to argue that real-world data could lead us to truth if only we would follow the numbers. The point was not to perfect human judgment, but to obviate it. In this way, his mortality tables provide an early example of the ‘quantitative authority’ (to borrow from the sociologist Wendy Espeland) that still adheres to numbers in our own time.

Natural and Political Observations Mentioned in a Following Index, and Made Upon the Bills of Mortality (1662) by John Graunt. Courtesy of the NIH Digital Collections

Compare, for instance, Graunt’s Observations with a COVID-era edition of David Leonhardt’s New York Times newsletter. In March 2022, as the Omicron wave of COVID-19 was cresting in much of the US, Leonhardt examined COVID fears among the self-identified ‘very liberal’. Drawing on recent polling data, he noted that nearly 50 per cent of that subgroup believed that COVID presented a ‘great risk’ both to their personal and their children’s health. He further noted that the ‘very liberal’ were disproportionately urban professionals who embraced a risk-averse style of parenting: ‘Parents seek out the healthiest food, sturdiest car seats and safest playgrounds. They do not let their children play tackle football, and they worry about soccer concussions.’ While this mode of parenting had produced some notable gains – two cheers for bicycle helmets! – Leonhardt noted it also came with downsides: ‘It can lead people to obsess over small, salient risks while ignoring bigger ones.’ With regard to COVID, that meant ‘there is abundant evidence that the most liberal Americans are exaggerating the risks to the vaccinated and to children’ while ignoring more routine ones: ‘the flu kills more children in a typical year and car crashes kill about five times as many.’ Leonhardt thus joined the ranks of those who believe the main problem with the actuarial self is that most of us remain poor risk-calculators.

Few academic fields have done more to promote the idea of a gap between perceived and ‘objective’ risk than behavioural economics, which is arguably the most important academic export of the past 30 years. Besides popular bestsellers like Dan Ariely’s Predictably Irrational (2008), Daniel Kahneman’s Thinking, Fast and Slow (2011) and, perhaps most famously, Richard Thaler and Cass Sunstein’s Nudge (2008), the field has made significant public policy inroads. In 2010, David Cameron’s government established the ‘Nudge Unit’ to apply the field’s insights to UK social and economic problems ranging from obesity to online scams. As of 2019, the unit reported having organised 780 projects in dozens of countries since its inception. The US president Barack Obama followed suit in 2015 with the creation of the Social and Behavioral Sciences Team.

Pioneered by Kahneman and fellow Israeli cognitive psychologist Amos Tversky, behavioural economics considers the real-life departures of human decision-making from the rationalist model associated with Homo economicus, or economic man. Homo economicus expresses the idea that humanity is composed of autonomous individual actors who are rational and narrowly concerned with maximising their own benefit. This idea is often traced to Adam Smith’s The Wealth of Nations (1776), which argued that the pursuit of selfish interests actually serves the common good: ‘It is not from the benevolence of the butcher, the brewer, or the baker that we expect our dinner, but from their regard to their own interest.’ Economic man reached his apex in the mid-20th century at the hands of the game theorists John von Neumann and Oskar Morgenstern – who tried to sketch a model of how the rational actor should behave under conditions of uncertainty – and remains a mainstay of mainstream economics.

Behavioural economists take aim at Homo economicus by uncovering the biases, heuristics and logical flaws that cloud human decision-making – which is not, from their perspective, fully rational. For example, if your judgment about a $40 T-shirt is swayed by seeing one costing $60, you might be ‘anchoring’. If that doesn’t sound like something you would do, rest assured there are dozens of other biases and sins of logic to choose from. Behavioural economics does not, however, question the individual as the foundational economic and social unit. Just as for their neoclassical economic ancestors, autonomous, self-interested individuals remain the building blocks of society, and maintaining consumer choice (‘freedom’) remains the greatest good. The innovation comes in arguing that, because humans are not fully rational, they require outside encouragement – ‘nudges’ – to help them make better choices. The intent is not to free people entirely to make their own decisions (remember, we’re bad at it), but rather for elite experts to guide them toward the choices they deem best. That might sound reassuring until you meet the experts.

Thaler and Sunstein are primary exponents of what’s come to be known as nudge theory. Their 2008 book urged companies, governments and public institutions to create ‘choice architectures’ that encourage ‘appropriate’ choices among individuals trying to navigate the maze of modern life: ‘A good system of choice architecture helps people to improve their ability to map choices onto outcomes and hence select options that will make them better off.’ For example, placing fresh fruit at eye level in a school cafeteria can nudge students to eat healthier foods, and automatically enrolling employees in 401(k) retirement plans may result in higher levels of personal savings. Crucially, nudges cannot be coercive, and thus cannot reduce the range of options available, even if some of them might be harmful. While Sunstein and Thaler admit that products like extended warranties take advantage of consumers and should be avoided, as self-described libertarian paternalists they stop short of recommending legislation that would outlaw them. For higher-stakes products including credit cards, mortgages and insurance policies, they recommend a light regulatory approach called RECAP (record, evaluate, and compare alternative prices). RECAP requires more robust disclosures and transparent pricing information so citizen-consumers can make informed choices.

As these cases illustrate, nudgers reject strong regulatory alternatives, what Thaler and Sunstein call ‘command-and-control’ regulations: ‘we libertarian paternalists do not favour bans. Instead, we prefer an improvement in choice architecture that will help people make better choices…’ This means, in effect, opposing financial regulations like those that constituted the New Deal regulatory regime, which expressed a government mandate not merely to encourage better choices among consumers but to offer protections to citizens. So too, the nudgers worry that command-and-control environmental regulations are a slippery slope to totalitarianism. ‘Such limitations [eg, on vehicle emissions],’ write Thaler and Sunstein, ‘have sometimes been effective; the air is much cleaner than it was in 1970. Philosophically, however, such limitations look uncomfortably similar to Soviet-style five-year plans.’ This judgment is particularly striking when considered alongside the extremely high and consistent levels of public support – ranging between 70 and 80 per cent of Americans across the political spectrum – for mandated vehicle efficiency standards since they were introduced in 1975. Never mind their actual popularity: ambitious standards related to vehicle emissions, clean air and clean water are precisely the sort of ‘1970s environmentalism’ that, argues Sunstein in Risk and Reason (2002), states need to move beyond in favour of cost-benefit analyses and ‘free market environmentalism’.

In lieu of public mandates and restrictive legislation, Thaler and Sunstein endorse economic incentives and market-based solutions, such as cap-and-trade deals that encourage industrial polluters to reduce their emissions. Yet, as even they acknowledged, such ‘solutions’ come with loopholes: ‘If a polluter wants to increase its level of activity, and hence its level of pollution, it isn’t entirely blocked. It can purchase a permit via the free market.’ And indeed, several studies have now shown the critical flaws in cap-and-trade and other market-based solutions, which have actually enabled major polluters to increase their emissions and concentrate pollution in low-income neighbourhoods. In 2009, President Obama appointed Sunstein head of the White House’s Office of Information and Regulatory Affairs – essentially, the country’s top regulator.

Nudgers are in essence tweakers who eschew the sort of major policy shifts that might, for instance, ensure senior citizens are able to retire even without a 401(k). As Nudge’s subtitle – ‘Improving Decisions about Health, Wealth, and Happiness’ – makes clear, the project’s utmost concern is to preserve the individual decision-maker as the primary social actor. Indeed, the champions of behavioural economics share many structural assumptions with their neoclassical forebears about the supremacy of efficiency, the relative benevolence of the private market, and the need to maximise individual choice as a bulwark against serfdom. This framework has particularly perverse consequences when it comes to navigating risk in the 21st century.

Take financial risk, for instance. As reported by the Financial Times in May 2023, the UK bank TSB found that 80 per cent of fraud activity took place through Facebook, WhatsApp and Instagram. Yet the UK government’s 2023 anti-fraud strategy had ‘scrapped plans to force tech companies to compensate victims of online financial scams’, opting instead for a voluntary ‘online fraud charter’ and better reporting tools. So, rather than require platforms to police their content, the onus now falls on individuals to become better fraud detectors.

Or consider the various ‘green’ behaviours individuals are directed to perform in an effort to stave off the climate crisis. My five-year-old’s class is picking up litter ‘to save the Earth’, all while the leading carbon-intensive industries – fossil fuels, transportation, fashion – continue with business as usual. The limitations are particularly galling when it comes to the ways people in the US are encouraged to navigate health risks. While nudgers offer a variety of life hacks to eat better, and favour user-friendly ways to navigate the private insurance market, such fixes ignore the far larger, structural risks that stem from Americans’ lack of affordable and proximate healthcare. Yet we know that an estimated 45,000 working-age Americans die each year because of a lack of medical insurance, that the uninsured are less likely to receive preventative care and cancer screenings, and that two-thirds of bankruptcy filings are attributable to medical debt. Only a comically impoverished theoretical framework could consider health risks in the US and deduce that Americans need to eat more salads.

These cases underscore the mismatch between the systemic nature of the risks humans face and the individualistic tools promoted to manage them. No wonder many feel that, despite their conscientious attempts to reduce various risks, nothing is working. Cultivating the actuarial self as a political subject shifts the conversation away from public, structural, effective solutions in favour of tips and hacks that can never address the root of problems.

Years ago, I attended a National Rifle Association personal safety course called ‘Refuse To Be A Victim’. While the appeal to gun ownership was never far below the surface, the course was primarily a crash course in personal risk assessment, powered by the data point that a violent crime occurs every 25 seconds in the US. As I learned during my three-hour training, refusing to be a victim requires cultivating a posture of constant vigilance – one that carefully surveys situations, exercises caution, and never, ever trusts strangers. The course conjured a world in which security was an individual responsibility and, perversely, victimhood a personal failure. Uncertainty, which is both a gift and a challenge, was weaponised to make racialised paranoia seem like the only responsible choice. Protecting myself and my children required thinking like the retired counterterrorism officer who taught my course – becoming, in short, my own personal risk assessor.

However extreme this case may seem, I’ve realised that Refuse To Be A Victim exists on a broad continuum of practices that envision, and even idealise, the individual qua actuary. Whether you find (like Thaler and Sunstein) that humans are poor decision-makers who need to be nudged toward virtue, or affirm (like Gigerenzer) that better public numeracy can improve our risk calculations, an antisocial logic clings to the actuarial self. Security becomes an individual privilege procured through the marketplace rather than a public right achieved at the social level. When it comes to personal safety, people of means are encouraged to manage risk by engaging in various kinds of social insulation (what I have called security hoarding), while those without are largely transformed into the ‘risks’ themselves. The uptick in the US in vigilante acts and paranoid shootings of people who ring the wrong doorbell is a symptom of this antisocial logic taken to its natural, bloody end. The privatisation of security and of violence is also a form of responsibilisation – one where we can clearly see the costs associated with this form of ‘freedom’.

The good news is that there are wonderful alternatives to the hyper-individualised world of the actuarial self: apprehensions of security that think at the level of communities, neighbourhoods, towns, cities or nations. In a world facing rising temperatures, pandemics and a globalised financial system, many have come to recognise the highly individualised approach to risk as a relic of an irresponsible and iniquitous era. Building more capacious forms of security and networks of care will require looking beyond the actuarial self and the fundamentally conservative political agenda it serves.