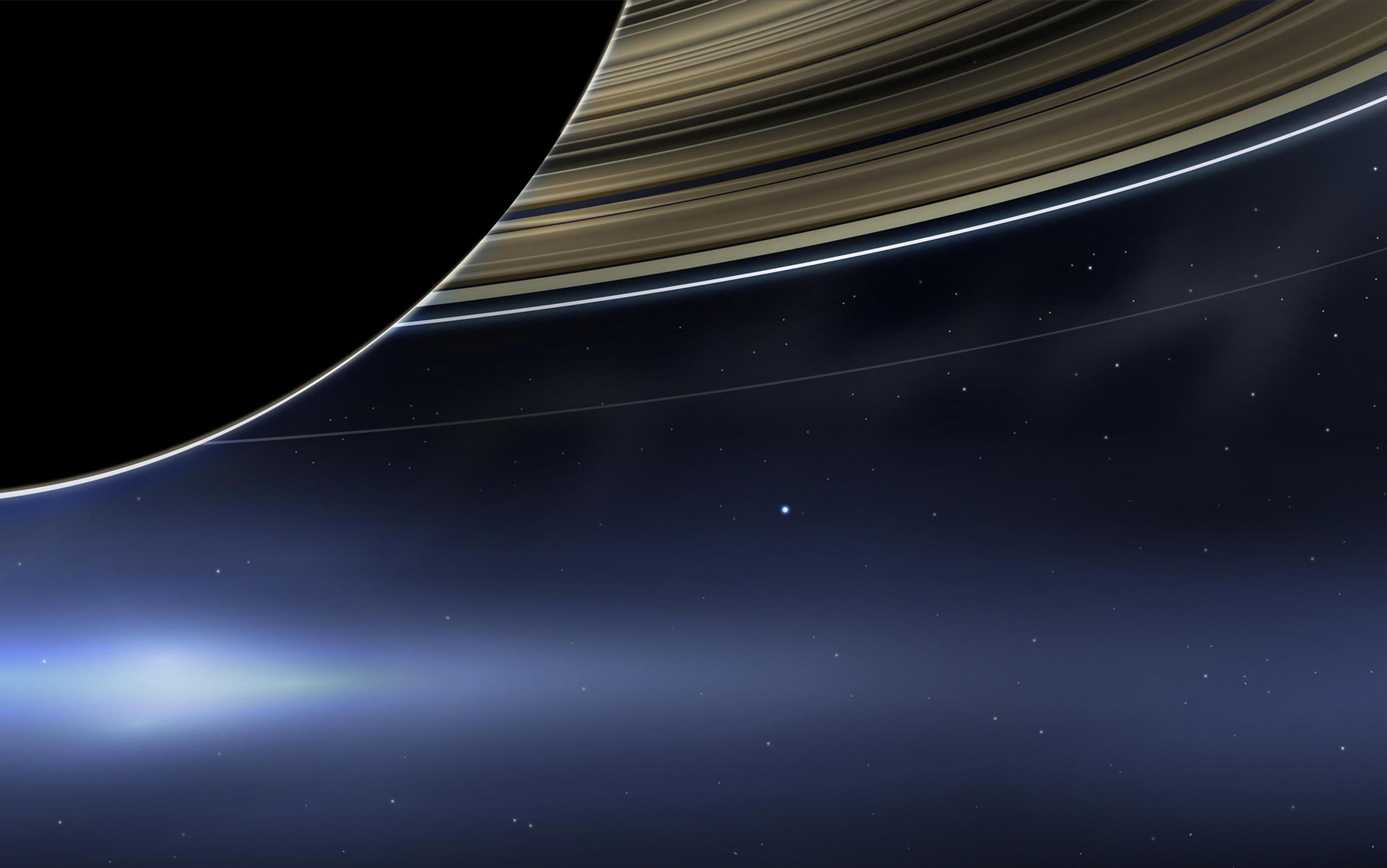

If you visit the Paris Observatory on the left bank of the Seine, you’ll see a plaque on its wall announcing that the speed of light was first measured there in 1676. The odd thing is, this result came about unintentionally. Ole Rømer, a Dane who was working as an assistant to the Italian astronomer Giovanni Domenico Cassini, was trying to account for certain discrepancies in eclipses of one of the moons of Jupiter. Rømer and Cassini discussed the possibility that light has a finite speed (it had typically been thought to move instantaneously). Eventually, following some rough calculations, Rømer concluded that light rays must take 10 or 11 minutes to cross a distance ‘equal to the half-diameter of the terrestrial orbit’.

Cassini himself had had second thoughts about the whole idea. He argued that if finite speed was the problem, and light really did take time to get around, the same delay ought to be visible in measurements of Jupiter’s other moons – and it wasn’t. The ensuing controversy came to an end only in 1728, when the English astronomer James Bradley found an alternative way to take the measurement. And as many subsequent experiments have confirmed, the estimate that came out of Rømer’s original observations was about 25 per cent off. We have now fixed the speed of light in a vacuum at exactly 299,792.458 kilometres per second.
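To see where a figure of roughly 25 per cent comes from, here is a minimal sketch in Python. It assumes that the 'half-diameter of the terrestrial orbit' can be taken as one modern astronomical unit, a value Rømer did not have; the function names are illustrative only.

```python
# Compare Rømer's light-crossing time with the modern value.
C_KM_PER_S = 299_792.458   # speed of light in a vacuum, km/s (exact by definition)
AU_KM = 149_597_870.7      # astronomical unit in km (assumed stand-in for the orbit's radius)


def implied_speed_km_s(crossing_minutes: float) -> float:
    """Speed of light implied by a given time to cross one orbital radius."""
    return AU_KM / (crossing_minutes * 60.0)


def percent_error(speed_km_s: float) -> float:
    """Signed percentage difference from the modern value of c."""
    return 100.0 * (speed_km_s - C_KM_PER_S) / C_KM_PER_S


# Time light actually needs to cross one astronomical unit, in minutes.
modern_minutes = AU_KM / C_KM_PER_S / 60.0

if __name__ == "__main__":
    print(f"modern crossing time: {modern_minutes:.2f} min")
    for minutes in (10, 11):
        speed = implied_speed_km_s(minutes)
        print(f"{minutes} min -> {speed:,.0f} km/s ({percent_error(speed):+.1f}%)")
```

On these assumptions, the modern crossing time is a little over 8 minutes, and the 11-minute figure implies a speed about 24 per cent too low.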

Why this particular speed and not something else? Or, to put it another way, where does the speed of light come from?

Electromagnetic theory gave a first crucial insight 150 years ago. The Scottish physicist James Clerk Maxwell showed that when electric and magnetic fields change in time, they interact to produce a travelling electromagnetic wave. Maxwell calculated the speed of the wave from his equations and found it to be exactly the known speed of light. This strongly suggested that light was an electromagnetic wave – as was soon definitively confirmed.

A further breakthrough came in 1905, when Albert Einstein showed that c, the speed of light through a vacuum, is the universal speed limit. According to his special theory of relativity, nothing can move faster. So, thanks to Maxwell and Einstein, we know that the speed of light is connected with a number of other (on the face of it, quite distinct) phenomena in surprising ways.

But neither theory fully explains what determines that speed. What might? According to new research, the secret of c can be found in the nature of empty space.

Until quantum theory came along, electromagnetism was the complete theory of light. It remains tremendously important and useful, but it raises a question. To calculate the speed of light in a vacuum, Maxwell used empirically measured values for two constants that define the electric and magnetic properties of empty space. Call them, respectively, ε₀ and μ₀.
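The relation behind Maxwell's calculation is c = 1/√(ε₀μ₀). As a rough illustration, the sketch below plugs in modern CODATA 2018 values for the two constants. These are an assumption made for the example, not the figures Maxwell had to hand, and the function name is hypothetical.

```python
import math

# CODATA 2018 recommended values (modern measurements, not Maxwell's own inputs).
EPSILON_0 = 8.8541878128e-12   # electric constant, farads per metre
MU_0 = 1.25663706212e-6        # magnetic constant, newtons per ampere squared


def wave_speed(epsilon: float, mu: float) -> float:
    """Speed of an electromagnetic wave, in metres per second, from c = 1/sqrt(epsilon * mu)."""
    return 1.0 / math.sqrt(epsilon * mu)


c = wave_speed(EPSILON_0, MU_0)

if __name__ == "__main__":
    print(f"c = {c / 1000:,.3f} km/s")
```

The result agrees with the defined value of 299,792.458 kilometres per second to well within one part in a million.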

The thing is, in a vacuum, it’s not clear that these numbers should mean anything. After all, electricity and magnetism actually arise from the behaviour of charged elementary particles such as electrons. But if we’re talking about empty space, there shouldn’t be any particles in there, should there?

This is where quantum physics enters. In the advanced version called quantum field theory, a vacuum is never really empty. It is the ‘vacuum state’, the lowest energy of a quantum system. It is an arena in which quantum fluctuations produce evanescent energies and elementary particles.

What’s a quantum fluctuation? Heisenberg’s Uncertainty Principle states that there is always some indefiniteness associated with physical measurements. According to classical physics, we can know exactly the position and momentum of, for example, a billiard ball at rest. But this is precisely what the Uncertainty Principle denies. According to Heisenberg, we can’t accurately know both at the same time. It’s as if the ball quivered or jittered slightly relative to the fixed values we think it has. These fluctuations are too small to make much difference at the human scale; but in a quantum vacuum, they produce tiny bursts of energy or (equivalently) matter, in the form of elementary particles that rapidly pop in and out of existence.

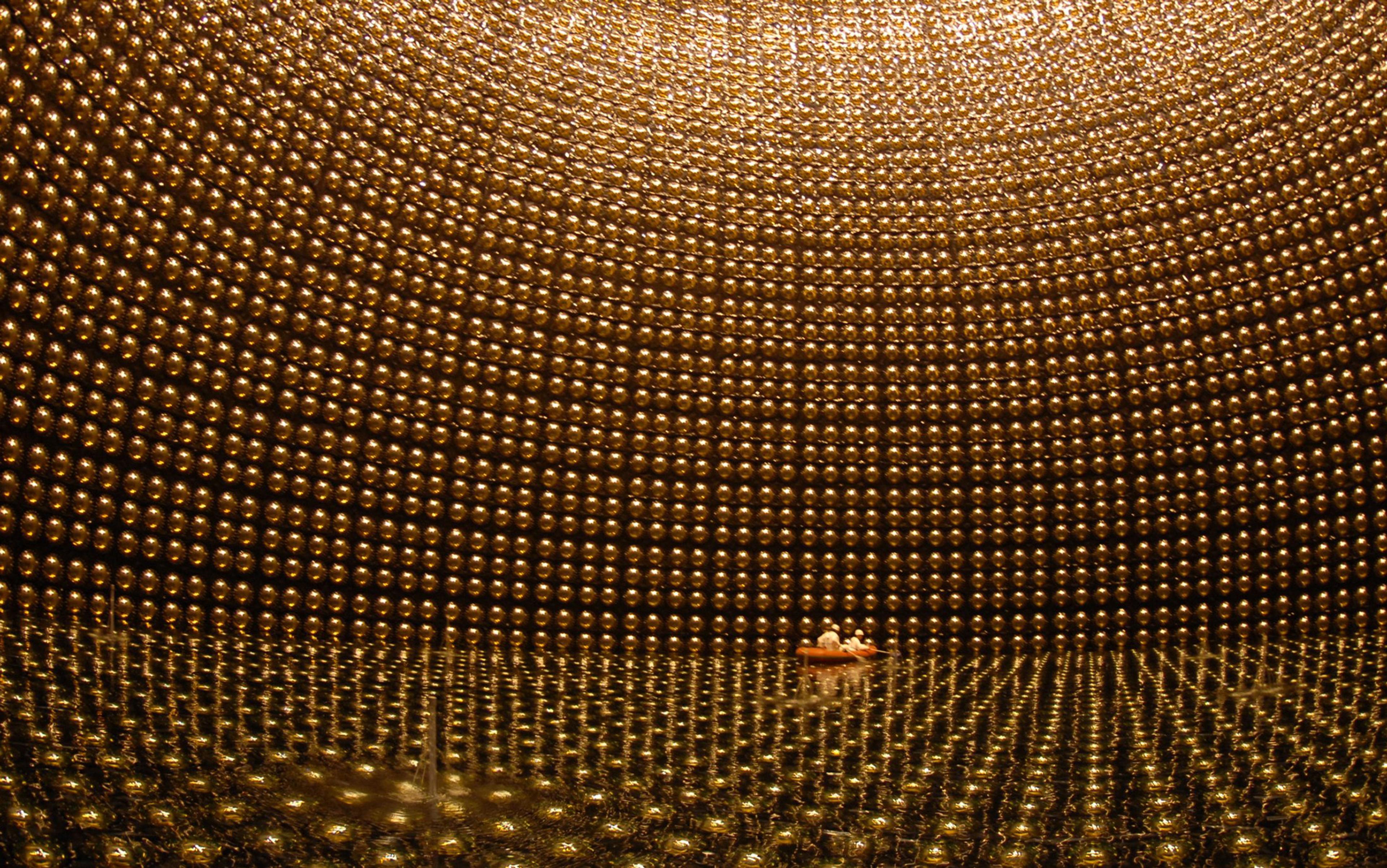

Leuchs is fascinated by the connection between classical electromagnetism and quantum fluctuations

These short-lived phenomena might seem to be a ghostly form of reality. But they do have measurable effects, including electromagnetic ones. That’s because these fleeting excitations of the quantum vacuum appear as pairs of particles and antiparticles with equal and opposite electric charge, such as electrons and positrons. An electric field applied to the vacuum distorts these pairs to produce an electric response, and a magnetic field affects them to create a magnetic response. This behaviour gives us a way to calculate, not just measure, the electromagnetic properties of the quantum vacuum and, from them, to derive the value of c.

In 2010, the physicist Gerd Leuchs and colleagues at the Max Planck Institute for the Science of Light in Germany did just that. They used virtual pairs in the quantum vacuum to calculate the electric constant ε₀. Their greatly simplified approach yielded a value within a factor of 10 of the correct value used by Maxwell – an encouraging sign! This inspired Marcel Urban and colleagues at the University of Paris-Sud to calculate c from the electromagnetic properties of the quantum vacuum. In 2013, they reported that their approach gave the correct numerical value.

This result is satisfying. But it is not definitive. For one thing, Urban and colleagues had to make some unsupported assumptions. It will take a full analysis and some experiments to prove that c can really be derived from the quantum vacuum. Nevertheless, Leuchs tells me that he continues to be fascinated by the connection between classical electromagnetism and quantum fluctuations, and is working on a rigorous analysis under full quantum field theory. At the same time, Urban and colleagues suggest new experiments to test the connection. So it is reasonable to hope that c will at last be grounded in a more fundamental theory. And then – mystery solved?

Well, that depends on your point of view.

The speed of light is, of course, just one of several ‘fundamental’ or ‘universal’ physical constants. These are believed to apply to the entire Universe and to remain fixed over time. The gravitational constant G, for example, defines the strength of gravity throughout the Universe. At small scales, Planck’s constant h sets the size of quantum effects and the tiny charge on the electron e is the basic unit of electricity.

The numerical values of these and other constants are known to excruciating precision. For instance, h is measured as 6.626070040 × 10⁻³⁴ joule-second (to within 10⁻⁶ per cent!). But all these quantities raise a host of unsettling questions. Are they truly constant? In what way are they ‘fundamental’? Why do they have those particular values? What do they really tell us about the physical reality around us?

Whether the ‘constants’ are really constant throughout the Universe is an ancient philosophical controversy. Aristotle believed that the Earth was differently constituted from the heavens. Copernicus held that our local piece of the Universe is just like any other part of it. Today, science follows the modern Copernican view, assuming that the laws of physics are the same everywhere in spacetime. But an assumption is all this is. It needs to be tested, especially for G and c, to make sure we are not misinterpreting what we observe in the distant Universe.

It was the Nobel Laureate Paul Dirac who raised the possibility that G might vary over time. In 1937, cosmological considerations led him to suggest that it decreases by about one part in 10 billion per year. Was he right? Probably not. Observations of astronomical bodies under gravity do not show this decrease, and so far there is no sign that G varies in space. Its measured value accurately describes planetary orbits and spacecraft trajectories throughout the solar system, and distant cosmic events, too. Radio astronomers recently confirmed that G as we know it correctly describes the behaviour of a pulsar (the rapidly rotating remnant of a supernova) 3,750 light years away. Similarly, there seems to be no credible evidence that c varies in space or time.

So, let’s assume that these constants really are constant. Are they fundamental? Are some more fundamental than others? What do we even mean by ‘fundamental’ in this context? One way to approach the issue would be to ask what is the smallest set of constants from which the others can be derived. Sets of two to 10 constants have been proposed, but one useful choice has been just three: h, c and G, collectively representing relativity and quantum theory.

In 1899, Max Planck, who founded quantum physics, examined the relations among h, c and G and the three basic aspects or dimensions of physical reality: space, time, and mass. Every measured physical quantity is defined by its numerical value and its dimensions. We don’t quote c simply as 300,000, but as 300,000 kilometres per second, or 186,000 miles per second, or 0.984 feet per nanosecond. The numbers and units are vastly different, but the dimensions are the same: length divided by time. In the same way, G and h have, respectively, dimensions of [length³/(mass × time²)] and [mass × length²/time]. From these relations, Planck derived ‘natural’ units, combinations of h, c and G that yield a Planck length, mass and time of 1.6 × 10⁻³⁵ metres, 2.2 × 10⁻⁸ kilogrammes, and 5.4 × 10⁻⁴⁴ seconds. Among their admirable properties, these Planck units give insights into quantum gravity and the early Universe.
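The combinations in question can be sketched in a few lines of Python. One caveat the text glosses over: the quoted values correspond to the modern convention of using the reduced constant ħ = h/2π rather than h itself. The constants below are modern reference values (CODATA 2018 for G, the exact 2019 SI value for h, which differs slightly from the measured figure quoted earlier), and the function name is illustrative.

```python
import math

H = 6.62607015e-34        # Planck constant, joule-seconds (exact in the SI since 2019)
HBAR = H / (2 * math.pi)  # reduced Planck constant, used in the modern Planck units
C = 299_792_458.0         # speed of light, metres per second (exact)
G = 6.67430e-11           # gravitational constant, m^3 kg^-1 s^-2 (CODATA 2018)


def planck_units(action: float) -> tuple[float, float, float]:
    """Return (length in m, mass in kg, time in s) built from an action constant, C and G."""
    length = math.sqrt(action * G / C**3)  # [length^2] once dimensions cancel
    mass = math.sqrt(action * C / G)       # [mass^2] once dimensions cancel
    time = math.sqrt(action * G / C**5)    # [time^2] once dimensions cancel
    return length, mass, time


planck_length, planck_mass, planck_time = planck_units(HBAR)

if __name__ == "__main__":
    print(f"Planck length: {planck_length:.2e} m")
    print(f"Planck mass:   {planck_mass:.2e} kg")
    print(f"Planck time:   {planck_time:.2e} s")
```

Passing `H` instead of `HBAR` scales each result by √(2π), about 2.5, which is why the choice of convention matters when comparing with the quoted figures.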

But some constants involve no dimensions at all. These are so-called dimensionless constants – pure numbers, such as the ratio of the proton mass to the electron mass. That is simply the number 1836.2 (which is thought to be a little peculiar because we do not know why it is so large). According to the physicist Michael Duff of Imperial College London, only the dimensionless constants are really ‘fundamental’, because they are independent of any system of measurement. Dimensional constants, on the other hand, ‘are merely human constructs whose number and values differ from one choice of units to the next’.
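A small sketch can make the point about unit independence concrete. It computes the proton-to-electron mass ratio twice, once from masses in kilogrammes and once from rest energies in MeV, using CODATA 2018 values (an assumption of this example, not figures taken from the text). The individual numbers change with the units; the ratio does not.

```python
# CODATA 2018 values for the same two quantities in two different unit systems.
PROTON_MASS_KG = 1.67262192369e-27
ELECTRON_MASS_KG = 9.1093837015e-31

PROTON_REST_ENERGY_MEV = 938.27208816
ELECTRON_REST_ENERGY_MEV = 0.51099895000

# Dividing like by like cancels the units, leaving a pure number.
ratio_from_kg = PROTON_MASS_KG / ELECTRON_MASS_KG
ratio_from_mev = PROTON_REST_ENERGY_MEV / ELECTRON_REST_ENERGY_MEV

if __name__ == "__main__":
    print(f"ratio from kilogrammes: {ratio_from_kg:.2f}")
    print(f"ratio from MeV:         {ratio_from_mev:.2f}")
```

Both routes give approximately 1836.15, the figure rounded to 1836.2 in the text.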

Perhaps the most intriguing of the dimensionless constants is the fine-structure constant α. It was first determined in 1916, when quantum theory was combined with relativity to account for details or ‘fine structure’ in the atomic spectrum of hydrogen. In the theory, α is the speed of the electron orbiting the hydrogen nucleus divided by c. It has the value 0.0072973525698, or almost exactly 1/137.
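In modern notation, which the text does not spell out, the fine-structure constant is usually written α = e²/(4πε₀ħc). The sketch below evaluates that expression with current reference values (CODATA 2018 for ε₀, exact SI values for e, h and c). It is offered as an illustration of the arithmetic, not as the 1916 derivation.

```python
import math

E_CHARGE = 1.602176634e-19            # elementary charge, coulombs (exact in the SI)
EPSILON_0 = 8.8541878128e-12          # electric constant, farads per metre (CODATA 2018)
HBAR = 6.62607015e-34 / (2 * math.pi) # reduced Planck constant, joule-seconds
C = 299_792_458.0                     # speed of light, metres per second (exact)


def fine_structure_constant() -> float:
    """Evaluate alpha = e^2 / (4 * pi * epsilon_0 * hbar * c), a dimensionless number."""
    return E_CHARGE**2 / (4 * math.pi * EPSILON_0 * HBAR * C)


alpha = fine_structure_constant()

if __name__ == "__main__":
    print(f"alpha   = {alpha:.10f}")
    print(f"1/alpha = {1 / alpha:.3f}")
```

The inverse comes out close to 137.036, so 'almost exactly 1/137' is a good approximation but not an identity.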

Today, within quantum electrodynamics (the theory of how light and matter interact), α defines the strength of the electromagnetic force on an electron. This gives it a huge role. Along with gravity and the strong and weak nuclear forces, electromagnetism defines how the Universe works. But no one has yet explained the value 1/137, a number with no obvious antecedents or meaningful links. The Nobel Prize-winning physicist Richard Feynman wrote that α has been ‘a mystery ever since it was discovered… a magic number that comes to us with no understanding by man. You might say the “hand of God” wrote that number, and “we don’t know how He pushed his pencil”.’

Whether it was the ‘hand of God’ or some truly fundamental physical process that formed the constants, it is their apparent arbitrariness that drives physicists mad. Why these numbers? Couldn’t they have been different?

One way to deal with this disquieting sense of contingency is to confront it head-on. This path leads us to the anthropic principle, the philosophical idea that what we observe in the Universe must be compatible with the fact that we humans are here to observe it. A slightly different value for α would change the Universe, for instance by making it impossible for stellar processes to produce carbon, meaning that our own carbon-based life would not exist. In short, the reason we see the values that we see is that, if they were very different, we wouldn’t be around to see them. QED. Such considerations have been used to limit α to between 1/170 and 1/80, since anything outside that range would rule out our own existence.

But these arguments also leave open the possibility that there are other universes in which the constants are different. And though it might be the case that those universes are inhospitable to intelligent observers, it’s still worth imagining what one would see if one were able to visit.

For example, what if c were faster? Light seems pretty quick to us, because nothing is quicker. But it still creates significant delays over long distances. Space is so vast that aeons can pass before starlight reaches us. Since our spacecraft are much slower than light, this means that we might never be able to send them to the stars. On the plus side, the time lag turns telescopes into time machines, letting us see distant galaxies as they were billions of years ago.

If c were, say, 10 times bigger, a lot of things would change. Earthly communications would improve. We’d cut the time lag for radio signals over big distances in space. NASA would gain better control over its unmanned spacecraft and planetary explorers. On the other hand, the higher speed would mess up our ability to peer back into the history of the Universe.

Or imagine slow light, so sluggish that we could watch it slowly creep out of a lamp to fill a room. While it wouldn’t be useful for much in everyday life, the saving grace is that our telescopes would carry us back to the Big Bang itself. (In a sense, ‘slow light’ has been achieved in the lab. In 1999, researchers brought laser light to the speed of a bicycle, and later to a dead stop, by passing it through a cloud of ultra-cold atoms.)

These possibilities are entertaining to think about – and they might well be real in adjacent universes. But there’s something very intriguing about how tightly constructed the laws of our own Universe appear to be. Leuchs points out that linking c to the quantum vacuum would show, remarkably, that quantum fluctuations are ‘subtly embedded’ in classical electromagnetism, even though electromagnetic theory preceded the discovery of the quantum realm by 35 years. The linkage would also be a shining example of how quantum effects influence the whole Universe.

And if there are multiple universes, unfolding according to different laws, using different constants, anthropic reasoning might well suffice to explain why we observe the particular regularities we find in our own world. In a sense it would just be the luck of the draw. But I’m not sure this would succeed in banishing mystery from the way things are.

Presumably the different parts of the multiverse would have to connect to one another in specific ways that follow their own laws – and presumably it would in turn be possible to imagine different ways for those universes to relate. Why should the multiverse work like this, and not that? Perhaps it isn’t possible for the intellect to overcome a sense of the arbitrariness of things. We are close here to the old philosophical riddle, of why there is something rather than nothing. That’s a mystery into which perhaps no light can penetrate.