Whenever we try to make an inventory of humankind’s store of knowledge, we stumble into an ongoing battle between what CP Snow called ‘the two cultures’. On one side are the humanities, on the other are the sciences (natural and physical), with social science and philosophy caught somewhere in the middle. This is more than a turf dispute among academics. It strikes at the core of what we mean by human knowledge.

Snow brought this debate into the open with his essay The Two Cultures and the Scientific Revolution, published in 1959. He started his career as a scientist and then moved to the humanities, where he was dismayed at the attitudes of his new colleagues. ‘A good many times,’ he wrote, ‘I have been present at gatherings of people who, by the standards of the traditional culture, are thought highly educated and who have with considerable gusto been expressing their incredulity at the illiteracy of scientists. Once or twice I have been provoked and have asked the company how many of them could describe the Second Law of Thermodynamics. The response was cold: it was also negative. Yet I was asking something which is the scientific equivalent of: Have you read a work of Shakespeare’s?’

That was more than half a century ago. If anything, the situation has got worse. Throughout the 1990s, postmodernist, deconstructionist and radical feminist authors (the likes of Michel Foucault, Jacques Derrida, Bruno Latour and Sandra Harding) wrote all sorts of nonsense about science, clearly without understanding what scientists actually do. The feminist philosopher Harding once boasted: ‘I doubt that in our wildest dreams we ever imagined we would have to reinvent both science and theorising itself’. That’s a striking claim given the dearth of novel results arising from feminist science. The last time I checked, there were no uniquely feminist energy sources on the horizon.

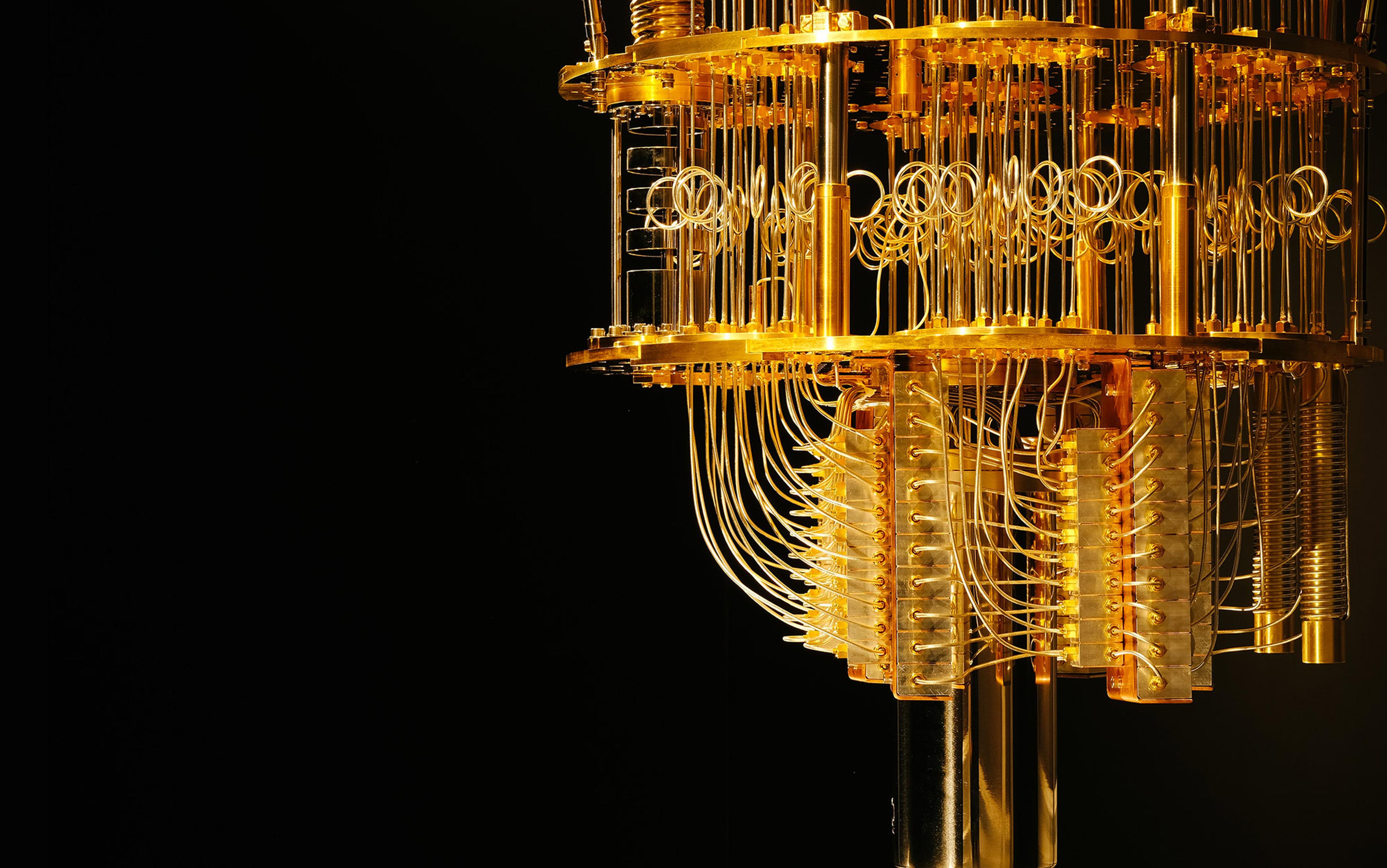

In order to satirise this kind of pretentiousness, in 1996 the physicist Alan Sokal submitted a paper to the postmodernist journal Social Text. He called it ‘Transgressing the Boundaries: Toward a Transformative Hermeneutics of Quantum Gravity’. There is no such thing as a hermeneutics of quantum gravity, transformative or not, and the paper consisted entirely of calculated nonsense. Nevertheless, the journal published it. The moral, Sokal concluded, was that postmodern writing on science depended on ‘radical-sounding assertions’ that can be given ‘two alternative readings: one as interesting, radical, and grossly false; the other as boring and trivially true’.

Blame for the culture wars doesn’t lie squarely on the shoulders of humanists, however. Scientists have employed their own overblown rhetoric to aggrandise their doings and dismiss what they haven’t read or understood. Their target, interestingly, is often philosophy. Stephen Hawking began his 2010 book The Grand Design by declaring philosophy dead, though he neglected to provide evidence or argument for such a startling conclusion. Earlier this year, the theoretical physicist Lawrence Krauss told The Atlantic magazine that philosophy ‘reminds me of that old Woody Allen joke: those that can’t do, teach, and those that can’t teach, teach gym. And the worst part of philosophy is the philosophy of science; the only people, as far as I can tell, that read work by philosophers of science are other philosophers of science. It has no impact on physics whatsoever’.

To begin with, it is fair to point out that the only people who read works in theoretical physics are theoretical physicists, so by Krauss’s own reasoning both fields are irrelevant to everybody else (they aren’t, of course). Secondly, Krauss, and Hawking for that matter, seem to miss the fact that the business of philosophy is not to solve scientific problems — we’ve got science for that. Objecting to philosophy on these grounds is like complaining that historians of science haven’t solved a single puzzle in theoretical physics. That’s because historians do history, not science. When was the last time a theoretical physicist solved a problem in history? And as the philosopher Daniel Dennett wrote in Darwin’s Dangerous Idea (1995), a book that has been very popular among scientists: ‘There is no such thing as philosophy-free science; there is only science whose philosophical baggage is taken on board without examination’. Whether or not they realise it, Hawking and Krauss need philosophy as a background condition for what they do.

Perhaps the most ambitious contemporary attempt at reconfiguring the relationship between the sciences and the humanities comes from the biologist EO Wilson. In his 1998 book, Consilience: The Unity of Knowledge, he proposed nothing less than to explain the whole of human experience in terms of the natural sciences. Beginning with the premise that we are biological beings, he attempted to make sense of society, the arts, ethics and religion in terms of our evolutionary heritage. ‘I remember very well the time I was captured by the dream of unified learning,’ he wrote. ‘I discovered evolution. Suddenly — that is not too strong a word — I saw the world in a wholly new way’.

Wilson claims that we can engage in a process of ‘consilience’ that leads to an intellectually and aesthetically satisfactory unity of knowledge. Here is how he defines two versions of consilience: ‘To dissect a phenomenon into its elements … is consilience by reduction. To reconstitute it, and especially to predict with knowledge gained by reduction how nature assembled it in the first place, is consilience by synthesis’.

Despite the unfamiliar name, this is actually a standard approach in the natural sciences, and it goes back to Descartes. In order to understand a complex problem, we break it down into smaller chunks, get a grasp on those, and then put the whole thing back together. The strategy is called reductionism and it has been highly successful in fundamental physics, though its success has been more limited in biology and other natural sciences. The overall image that Wilson seems to have in mind is of a downward spiral wherein complex aspects of human culture — literature, for example — are understood first in terms of the social sciences (sociology, psychology), and then more mechanistically by the biological sciences (neurobiology, evolutionary biology), before finally being reduced to physics. After all, everything is made of quarks (or strings), isn’t it?

Before we can see where Wilson and his followers go wrong, we need to make a distinction between two meanings of reductionism. There is ontological reduction, which has to do with what exists, and epistemic reduction, which has to do with what we know. The first one is the idea that the bottom level of reality (say, quarks, or strings) is causally sufficient to account for everything else (atoms, cells, you and me, planets, galaxies and so forth). Epistemic reductionism, on the other hand, claims that knowledge of the bottom level is sufficient to reconstruct knowledge of everything else. It holds that we will eventually be able to derive a quantum mechanical theory of planetary motions and of the genius of Shakespeare.

The notion of ontological reductionism is widely accepted in physics and in certain philosophical quarters, though there really isn’t any compelling evidence one way or the other. Truth be told, we don’t know whether the laws that control the behaviour of quarks scale up to the level of societies and galaxies, or whether large complex systems exhibit novel behaviour that can’t be reduced to lower ontological levels. I am, therefore, agnostic about ontological reductionism. Fortunately for the purposes of this discussion, it doesn’t matter one way or the other. The real game lies in the other direction.

Epistemic reductionism is obviously false. We do not have — nor are we ever likely to have — a quantum mechanical theory of planets or of human behaviour. Even if possible in principle, such a theory would be too complicated to compute or to understand. Chemistry might have become a branch of physics via a successful reduction, and neurobiology certainly informs psychology. But not even the most ardent physicist would attempt to produce an explanation of, say, ecosystems in terms of subatomic particles. The impossibility of this sort of epistemic reductionism therefore puts one significant constraint on Wilson-type consilience. The big question, then, is how far we can push the programme.

Let’s begin in the obvious place. If culture has to be understood in terms of biology, then genes must have quite a bit to do with it. Wilson, however, is too sophisticated to fall into straightforward genetic determinism. Instead he tells us: ‘Genes prescribe epigenetic rules, which are the regularities of sensory perception and mental development that animate and channel the acquisition of culture’. As it happens, I have worked on epigenetics. The word actually refers to all the molecular processes that mediate the effects of genes during plant and animal development. The problem from Wilson’s point of view is this: biologists don’t know what ‘epigenetic rules’ are. They don’t know how to quantify them or how to study them. For explanatory purposes, they are vacuous.

Wilson’s next move is to invoke Richard Dawkins’s idea of ‘memes’, or units of cultural evolution. If culture is made of discrete units that can replicate in the environment of human society, perhaps there is a way to bring evolutionary theory to bear directly on culture. Instead of genes (or epigenes), we apply Darwinian principles to memes. Unfortunately for consilience, the research programme of memetics is in big trouble. Scientists and philosophers have cast doubt on the usefulness, even the coherence, of the very concept. As my evolutionary biology colleague Jerry Coyne has said, it is ‘completely tautological, unable to explain why a meme spreads except by asserting, post facto, that it had qualities enabling it to spread’. We don’t know how to define memes in a way that is operationally useful to the practising scientist, we don’t know why some memes are successful and others not, and we have no clue as to the physical substrate, if any, of which memes are made. Tellingly, the Journal of Memetics closed a few years ago for lack of submissions.

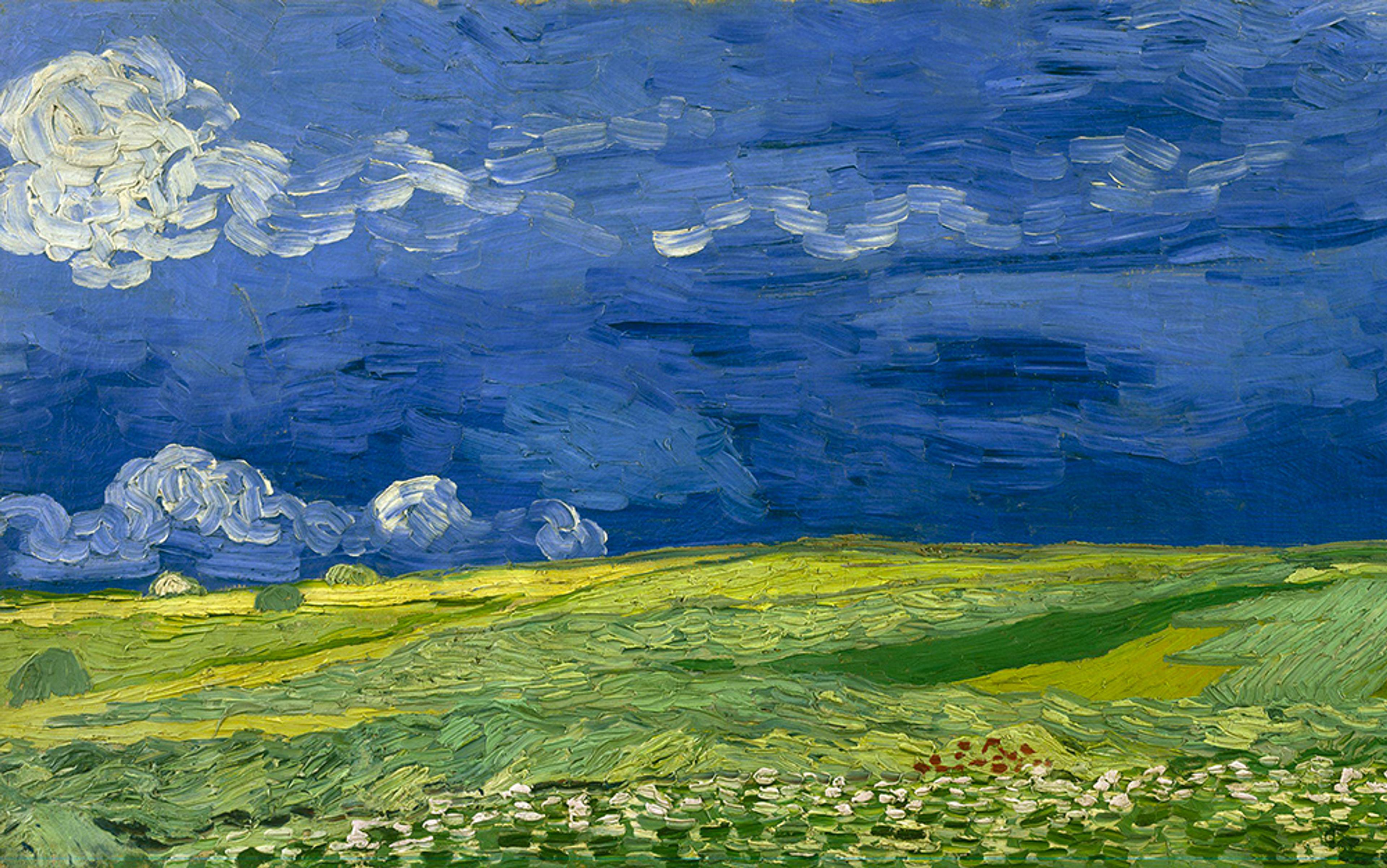

None of the above, of course, is to say that biology is irrelevant to human culture. We are indeed biological entities, so lots of what we do is connected with food, sex and social status. But we are also physical entities, and humanity has found cultural ways to exploit or get around physics. We built aeroplanes to fly despite the limitations imposed by gravity, and we invented endless variations on the basic biological themes, from Shakespeare’s sonnets to Picasso’s paintings. In each case, the supposedly fundamental sciences give us only a very partial picture of the whole.

If we take the idea of unity of knowledge seriously, there are some broad categories of inquiry that we should try to integrate into our picture. This turns out to be harder than we might think. Take mathematics and logic. Wilson is keen on these disciplines. ‘The dream of objective truth peaked,’ he writes, ‘with logical positivism’ — that is, with a philosophical movement of the 1920s and ’30s that attempted to capture the essence of scientific statements using logic. Mathematics, too, is central to his scheme. Because of its effectiveness in the natural sciences, it ‘seems to point arrowlike toward the ultimate goal of objective truth’.

Let’s leave aside the pretty well-established fact that human beings aren’t in the business of ‘ultimate objective truth’. When we come down to it, is scientific knowledge the same kind of thing as mathematical-logical knowledge? They are, I think, quite different. Look at what counts as a ‘fact’ in science: for instance, the statement that there are four natural satellites of Jupiter that can be seen through small telescopes from Earth. These satellites were discovered by Galileo Galilei in the 17th century, and represented the first example of a solar-like system within our own Sun-centred one. Indeed, Galileo used this as a major reason to take seriously the then-highly controversial Copernican theory.

By contrast, take a mathematical ‘fact’, such as the demonstration of the Pythagorean theorem. Or a logical fact, such as a truth table that tells you the conditions under which particular combinations of premises yield true or false conclusions according to the rules of deduction. These two latter sorts of knowledge do resemble one another in certain ways; some philosophers regard mathematics as a type of logical system. Yet neither looks anything like a fact as it is understood in the natural sciences. Therefore, ‘unifying knowledge’ in this area looks like an empty aim: all we can say is that we have natural sciences over here and maths over there, and that the latter is often useful (for reasons that are not at all clear, by the way) to the former.
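
To make the contrast concrete, here is a minimal sketch in Python of how a truth table settles a logical ‘fact’. The helper names (`implies`, `is_valid`) are my own illustrative assumptions, not part of any standard library. The sketch checks two textbook argument forms by listing every combination of truth values and looking for a row in which all the premises are true and the conclusion is false.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q


def is_valid(premises, conclusion, n_vars: int) -> bool:
    """An argument form is valid if no row of the truth table makes
    every premise true while the conclusion is false."""
    for row in product([True, False], repeat=n_vars):
        if all(prem(*row) for prem in premises) and not conclusion(*row):
            return False  # found a counterexample row
    return True


# Modus ponens: from "if P then Q" and "P", infer "Q".
modus_ponens = is_valid(
    premises=[lambda p, q: implies(p, q), lambda p, q: p],
    conclusion=lambda p, q: q,
    n_vars=2,
)

# Affirming the consequent: from "if P then Q" and "Q", infer "P".
affirming_consequent = is_valid(
    premises=[lambda p, q: implies(p, q), lambda p, q: q],
    conclusion=lambda p, q: p,
    n_vars=2,
)

print(modus_ponens)          # True
print(affirming_consequent)  # False
```

Nothing in this check looks at the world. The verdict follows from the rules alone, whereas counting Jupiter’s moons requires a telescope.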

Let’s consider yet another type of fact, more germane to the project of reducing the humanities to the sciences. I happen to have a strong conviction that the music of Ludwig van Beethoven is better than that of Britney Spears. To me, that’s an aesthetic fact. I hope it’s also clear that this is a ‘fact’ (based on my ‘knowledge’ of music) that has a different structure and content from both logical-mathematical and natural-scientific facts. Indeed, it isn’t a fact at all: it’s an aesthetic judgment, one to which I have a strong emotional attachment.

Now, I do not doubt that my ability to make aesthetic judgments in general is influenced by the kind of biological being that I am. I need to have a particular type of auditory system even to hear Beethoven and Spears, and that system presumably accounts for why musicians rarely produce pieces outside a certain range of sound frequencies. Still, it seems hard to deny that my particular judgment about Beethoven versus Spears is primarily the result of my culture and psychology and upbringing. People in different times and cultures, or with different temperaments, have disagreed and will disagree with me — and they might feel just as strongly about their tastes as I do about mine (of course, they would be ‘wrong’). Clearly, there are aspects of human culture in which the very notion of ‘objective and ultimate truth’ is a category mistake.

Let’s set aside the goal of unifying all knowledge. How are we doing in the millennia-long quest for absolute and objective truth? Not so well, it seems, and that is largely because of the devastating contributions of a few philosophers and logicians, particularly David Hume, Bertrand Russell and Kurt Gödel.

In the 18th century, Hume formulated what is now known as the problem of induction. He noted that both in science and everyday experience we use a type of reasoning that philosophers call induction, which consists in generalising from examples. Hume also pointed out that we do not seem to have a logical justification for the inductive process itself. Why then do we believe that inductive reasoning is reliable? The answer is that it has worked so far. Ah, but to say so is to deploy inductive reasoning to justify inductive reasoning, which seems circular. Plenty of philosophers have tried to solve the problem of induction without success: we do not have an independent, rational justification for the most common type of reasoning employed by laypeople and professional scientists. Hume didn’t say that we should therefore all quit and go home in desperation. Indeed, we don’t have an alternative but to keep using induction. But it ought to be a sobering thought that our empirical knowledge is based on no solid foundation other than that ‘it works’.

What about maths and logic? At the beginning of the 20th century, a number of logicians, mathematicians and philosophers of mathematics were trying to establish firm logical foundations for mathematics and similar formal systems. The most famous such attempt was made by Bertrand Russell and Alfred North Whitehead, and it resulted in their Principia Mathematica (1910-13), one of the most impenetrable reads of all time. It failed.

In 1931 the logician Kurt Gödel explained why. His two ‘incompleteness theorems’ proved, logically, that any sufficiently complex mathematical or logical system will contain truths that cannot be proven from within that system. Russell conceded this fatal blow to his enterprise, as well as the larger moral that we have to be content with unprovable truths even in mathematics. If we add to Gödel’s results the well-known fact that logical proofs and mathematical theorems have to start from assumptions (or axioms) that are themselves unprovable (or, in the case of some deductive reasoning like syllogisms, are derived from empirical observations and generalisation; ie, from induction), it seems that the quest for true and objective knowledge is revealed as a mirage.

At this point one might wonder what exactly is at stake here. Why are Wilson and his followers in search of a unified theory of everything, a single way to understand human knowledge? Wilson gives the answer explicitly in his book, and I think it also applies implicitly to some of his fellow travellers, for instance the physicist Steven Weinberg in his book Dreams of a Final Theory (1992). The motive is philosophical. More specifically, it is aesthetic. Some scientists really value simplicity and elegance of explanations, and use these criteria in evaluating the relative worth of different theories. Wilson calls this ‘the Ionian enchantment’, and names the first chapter of Consilience accordingly. But the irony here is obvious. Neither simplicity nor elegance is an empirical concept: they are philosophical judgments. There is no reason to believe a priori that the universe can be explained by simple and elegant theories, and indeed the historical record of physics includes several instances when the simplest of competing theories turned out to be wrong.

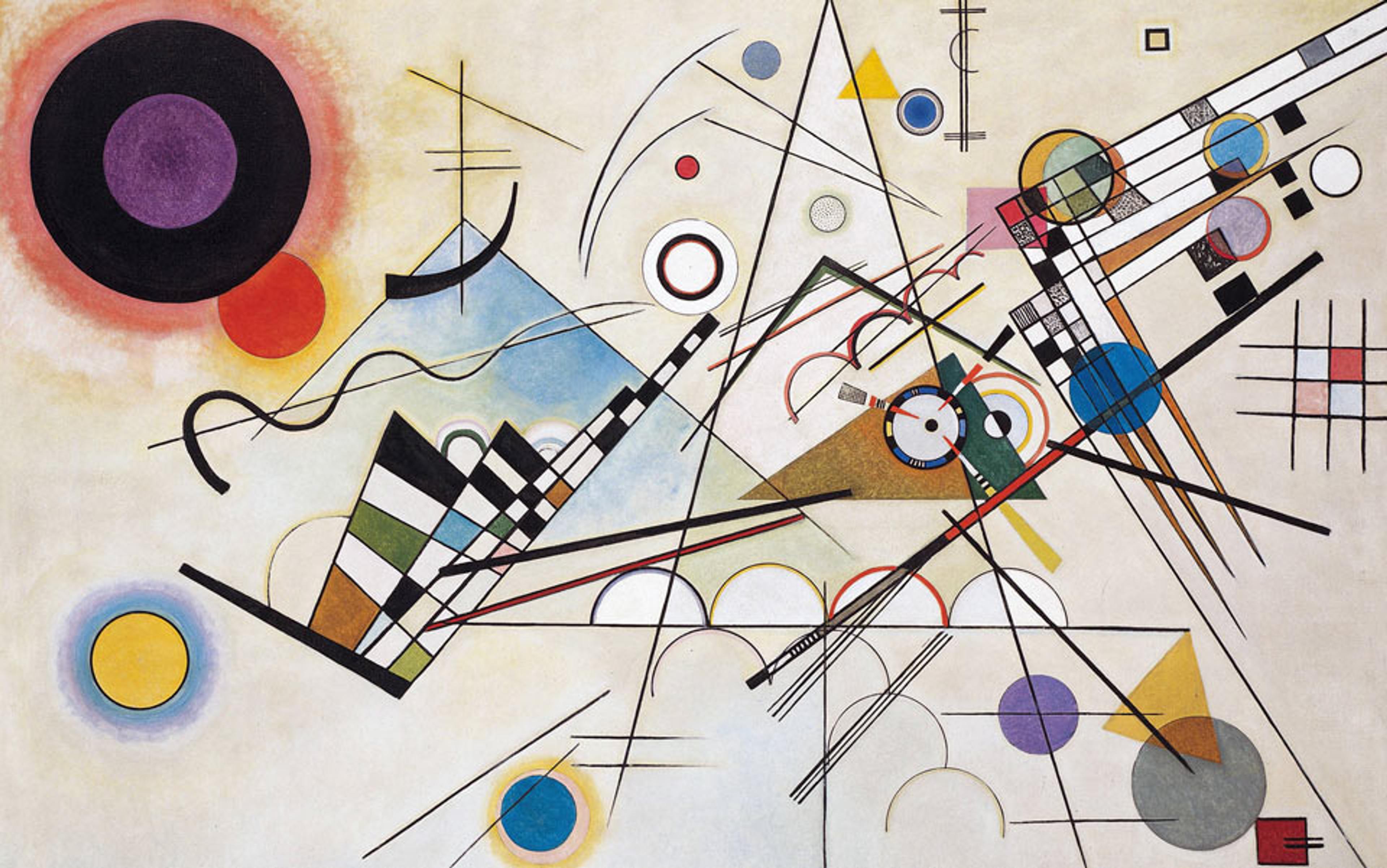

Enough with the demolition project. Is it possible to reconstruct something like Wilson’s consilience, but in a more reasonable manner? Think about visual art. Its history includes prehistoric cave paintings, Michelangelo, Picasso, and contemporary abstraction. It is reasonable to think that science — perhaps a combination of evolutionary biology and cognitive science — can tell us something about why our ancestors started painting to begin with, as well as why we like certain types of patterns: symmetrical figures, for instance, and repetitions of a certain degree of complexity. Yet these sorts of explanations massively underdetermine the variety of ways of doing visual art, both across centuries and across cultures. Picasso’s cubism is not about symmetry, for instance; indeed, it’s about breaking symmetry. And it is hard to imagine an explanation of the rise of, say, the Impressionist movement that doesn’t invoke the specific cultural circumstances of late 19th century France, and the biographies and psychologies of individual artists.

We find a similar situation with maths. It is plausible that our ability to count and do simple arithmetic gave us an evolutionary advantage and was therefore the result of natural selection. (Notice, however, that this is a speculative argument: we don’t have access to the kind of evidence needed to test the hypothesis.) But what on earth is the possible adaptive value of highly abstract mathematics? Why would evolution produce brains such as Andrew Wiles’s, capable of solving Fermat’s last theorem? Biology sets the background conditions for such feats of human ingenuity, since a brain of a particular type is necessary to accomplish them. But biology by itself has little else to say about how some human cultures took a historical path that ended up producing a small group of often socially awkward people who devote their lives to solving abstruse mathematical problems.

Or, finally, take morality, perhaps the most important aspect of what it means to be human. Much has been written on the evolutionary origins of morality, and many good and plausible ideas have been proposed. Our moral sense might well have originated in the context of social life as intelligent primates: other social primates do show behaviours consistent with the basic building blocks of morality such as fairness toward other members of the group, even when they aren’t kin. But it is a very long way from that to Aristotle’s Nicomachean Ethics, or Jeremy Bentham and John Stuart Mill’s utilitarianism. These works and concepts were possible because we are biological beings of a certain kind. Nevertheless, we need to take cultural history, psychology and philosophy seriously in order to account for them.

Here’s a final thought. Wilson’s project depends on the assumption that there is such a thing as human knowledge as a unifiable category. For him, disciplinary boundaries are accidents of history that need to be eliminated. But what if they helped to explain some further fact? An intriguing view has been proposed in different contexts by the linguist Noam Chomsky, in his Reflections on Language (1975), and the philosopher Colin McGinn, in The Problem of Consciousness (1991). The basic idea is to take seriously the fact that human brains evolved to solve the problems of life on the savannah during the Pleistocene, not to discover the ultimate nature of reality. From this perspective, it is delightfully surprising that we learn as much as science lets us and ponder as much as philosophy allows. All the same, we know that there are limits to the power of the human mind: just try to memorise a sequence of a million digits. Perhaps some of the disciplinary boundaries that have evolved over the centuries reflect our epistemic limitations.

Seen this way, the differences between philosophy, biology, physics, the social sciences and so on might not be the result of the arbitrary caprice of academic administrators and faculty; they might instead reflect a natural way in which human beings understand the world and their role in it. There might be better ways to organise our knowledge in some absolute sense, but perhaps what we have come up with is something that works well for us, as biological-cultural beings with a certain history.

This isn’t a suggestion to give up, much less a mystical injunction to go ‘beyond science’. There is nothing beyond science. But there is important stuff before it: there are human emotions, expressed by literature, music and the visual arts; there is culture; there is history. The best understanding of the whole shebang that humanity can hope for will involve a continuous dialogue between all our various disciplines. This is a more humble take on human knowledge than the quest for consilience, but it is one that, ironically, is more in synch with what the natural sciences tell us about being human.