The subtleties: an original edition of Swann’s Way from the personal archives of Marcel Proust. Photo by Getty

Years ago, on a flight from Amsterdam to Boston, two American nuns seated to my right listened to a voluble young Dutchman who was out to discover the United States. He asked the nuns where they were from. Alas, Framingham, Massachusetts was not on his itinerary, but, he noted, he had ‘shitloads of time and would be visiting shitloads of other places’.

The jovial young Dutchman had apparently gathered that ‘shitloads’ was a colourful synonym for the bland ‘lots’. He had mastered the syntax of English and a rather extensive vocabulary but lacked experience of the appropriateness of words to social contexts.

This memory sprang to mind with the recent news that the Google Translate engine would move from a phrase-based system to a neural network. Both methods rely on training the machine with a ‘corpus’ consisting of sentence pairs: an original and a translation. The computer then generates rules for inferring, based on the sequence of words in the original text, the most likely sequence of words in the target language.

The procedure is an exercise in pattern matching. Similar pattern-matching algorithms are used to interpret the syllables you utter when you ask your smartphone to ‘navigate to Brookline’ or when a photo app tags your friend’s face. The machine doesn’t ‘understand’ faces or destinations; it reduces them to vectors of numbers, and processes them.
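To make the idea of learning from sentence pairs concrete, here is a deliberately crude sketch. It is neither Google's phrase-based system nor its neural network: the four-sentence corpus is invented, the scoring rule (a Dice coefficient over co-occurrence counts) is an assumption chosen for simplicity, and the toy translates word by word, ignoring word order entirely.

```python
from collections import Counter, defaultdict

# Invented toy corpus of (French, English) sentence pairs; not real training data.
CORPUS = [
    ("le chat dort", "the cat sleeps"),
    ("le chien dort", "the dog sleeps"),
    ("le chat mange", "the cat eats"),
    ("un chien mange", "a dog eats"),
]


def train(pairs):
    """Count how often each source word appears alongside each target word."""
    cooc = defaultdict(Counter)   # cooc[source_word][target_word] -> count
    src_totals = Counter()        # occurrences of each source word
    tgt_totals = Counter()        # occurrences of each target word
    for src, tgt in pairs:
        src_words, tgt_words = src.split(), tgt.split()
        src_totals.update(src_words)
        tgt_totals.update(tgt_words)
        for s in src_words:
            cooc[s].update(tgt_words)
    return cooc, src_totals, tgt_totals


def best_match(word, model):
    """Return the target word with the highest Dice score for `word`."""
    cooc, src_totals, tgt_totals = model
    if word not in cooc:
        return word  # unseen word: pass it through unchanged

    def dice(t):
        return 2 * cooc[word][t] / (src_totals[word] + tgt_totals[t])

    return max(cooc[word], key=dice)


def translate(sentence, model):
    """Word-by-word 'translation': pure pattern matching, no understanding."""
    return " ".join(best_match(w, model) for w in sentence.split())


MODEL = train(CORPUS)
print(translate("le chien mange", MODEL))
```

The sentence "le chien mange" never appears in the toy corpus, yet the counts are enough to produce "the dog eats". Nothing in the counts records who is speaking to whom, which is the gap the rest of this essay is about.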

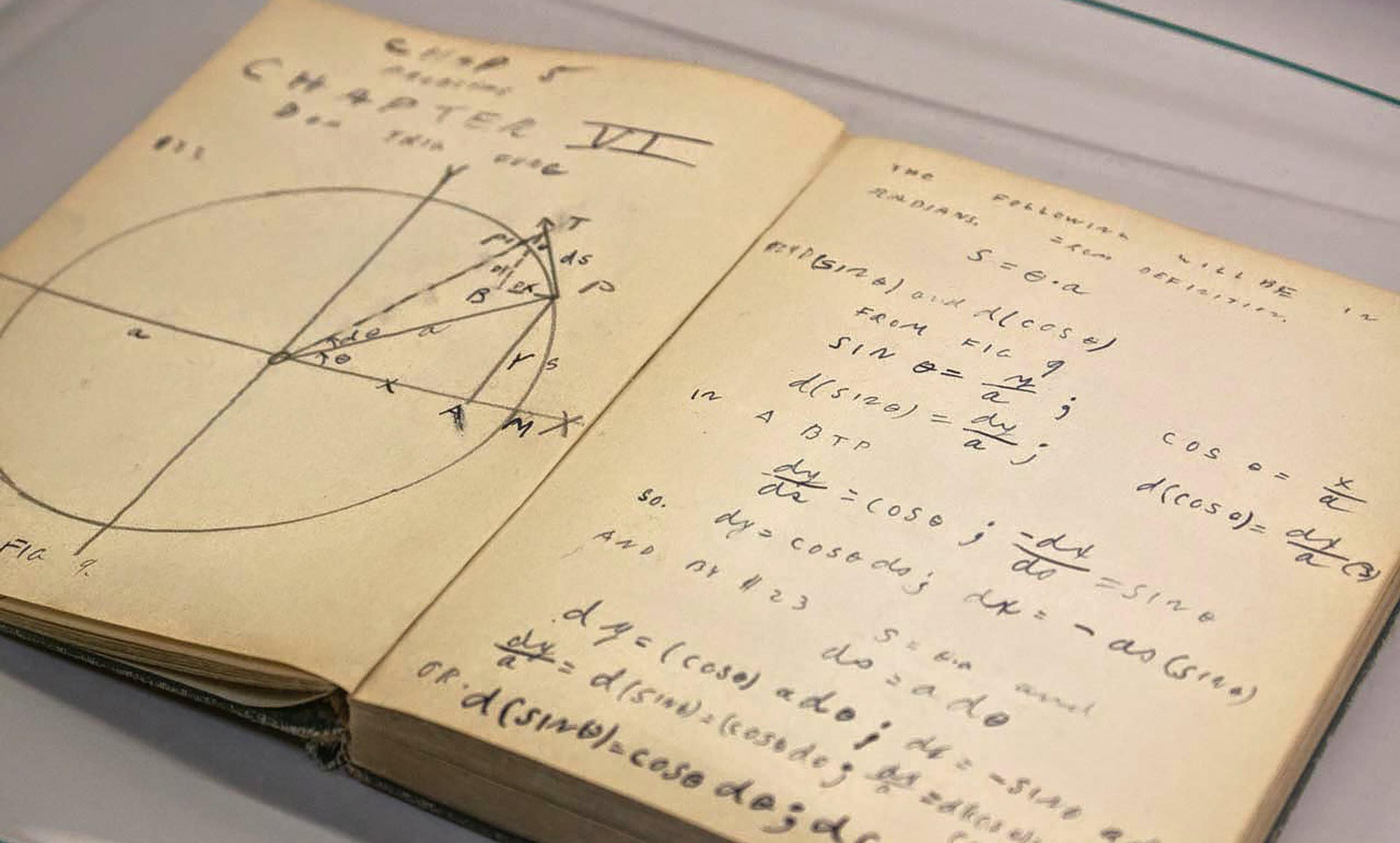

I am a professional translator, having translated some 125 books from the French. One might therefore expect me to bristle at Google’s claim that its new translation engine is almost as good as a human translator, scoring 5.0 on a scale of 0 to 6, whereas humans average 5.1. But I’m also a PhD in mathematics who has developed software that ‘reads’ European newspapers in four languages and categorises the results by topic. So, rather than be defensive about the possibility of being replaced by a machine translator, I am aware of the remarkable feats of which machines are capable, and full of admiration for the technical complexity and virtuosity of Google’s work.

My admiration does not blind me to the shortcomings of machine translation, however. Think of the young Dutch traveller who knew ‘shitloads’ of English. The young man’s fluency demonstrated that his ‘wetware’ – a living neural network, if you will – had been trained well enough to intuit the subtle rules (and exceptions) that make language natural. Computer languages, on the other hand, have context-free grammars. The young Dutchman, however, lacked the social experience with English to grasp the subtler rules that shape the native speaker’s diction, tone and structure. The native speaker might also choose to break those rules to achieve certain effects. If I were to say ‘shitloads of places’ rather than ‘lots of places’ to a pair of nuns, I would mean something by it. The Dutchman blundered into inadvertent comedy.

Google’s translation engine is ‘trained’ on corpora ranging from news sources to Wikipedia. The bare description of each corpus is the only indication of the context from which it arises. From such scanty information it would be difficult to infer the appropriateness or inappropriateness of a word such as ‘shitloads’. If translating into French, the machine might predict a good match to beaucoup or plusieurs. This would render the meaning of the utterance but not the comedy, which depends on the socially marked ‘shitloads’ in contrast to the neutral plusieurs. No matter how sophisticated the algorithm, it must rely on the information provided, and clues as to context, in particular social context, are devilishly hard to convey in code.

Take the French petite phrase. Phrase can mean ‘sentence’ or ‘phrase’ in English. When Marcel Proust uses it in a musical context in his novel À la recherche du temps perdu (1913-27), in the line ‘la petite phrase de Vinteuil’, it has to be ‘phrase’, because ‘sentence’ makes no sense. Google Translate (the old phrase-based system; the new neural network is as yet available only for Mandarin Chinese) does remarkably well with this. If you put in petite phrase alone, it gives ‘short sentence’. If you put in la petite phrase de Vinteuil (Vinteuil being the name of a character who happens to be a composer), it gives ‘Vinteuil’s little phrase’, echoing published Proust translations. The rarity of the name ‘Vinteuil’ provides the necessary context, which the statistical algorithm picks up. But if you put in la petite phrase de Sarkozy, it spits out ‘little phrase Sarkozy’ instead of the correct ‘Sarkozy’s zinger’ – because in the political context indicated by the name of the former president, une petite phrase is a barbed remark aimed at a political rival – a zinger rather than a musical phrase. But the name Sarkozy appears in such a variety of sentences that the statistical engine fails to register it properly – and then compounds the error with an unfortunate solecism.
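A small sketch may show why a rare name helps and a common one does not. The counts below are invented purely for illustration; they are not measurements of any real corpus or of Google's system. The assumption is that a statistical engine picks whichever rendering it has seen most often alongside a given context word, and that the share of the winning rendering is a rough measure of how strong the signal is.

```python
from collections import Counter

# Invented counts, for illustration only: how often a hypothetical corpus
# renders "petite phrase" each way when a given name appears nearby.
OBSERVED = {
    "Vinteuil": Counter({"little phrase": 12}),
    "Sarkozy": Counter({"little phrase": 5, "short sentence": 4, "zinger": 3}),
}
DEFAULT = "short sentence"  # assumed fallback when no context is available


def render(context_name):
    """Return (most frequent rendering, its share of all observations)."""
    counts = OBSERVED.get(context_name)
    if not counts:
        return DEFAULT, 0.0
    choice, n = counts.most_common(1)[0]
    return choice, n / sum(counts.values())


for name in ("Vinteuil", "Sarkozy", ""):
    print(repr(name), render(name))
```

With these made-up numbers, ‘Vinteuil’ points to a single rendering every time, whereas ‘Sarkozy’ spreads its weight over three, and the correct ‘zinger’ loses the vote. The frequencies say nothing about the political setting that makes ‘zinger’ right.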

The problem, as with all previous attempts to create artificial intelligence (AI) going back to my student days at MIT, is that intelligence is incredibly complex. To be intelligent is not merely to be capable of inferring logically from rules or statistically from regularities. Before that, one has to know which rules are applicable, an art requiring awareness of and sensitivity to situation. Programmers are very clever, but they are not yet clever enough to anticipate the vast variety of contexts from which meaning emerges. Hence even the best algorithms will miss things – and as Henry James put it, the ideal translator must be a person ‘on whom nothing is lost’.

This is not to say that mechanical translation is not useful. Much translation work is routine. At times, machines can do an adequate job. Don’t expect miracles, however, or felicitous literary translations, or aptly rendered political zingers. Overconfident claims have dogged AI research from its earliest days. I don’t say this out of fear for my job: I’ve retired from translating and am devoting part of my time nowadays to… writing code.