When René Descartes was 31 years old, in 1627, he began to write a manifesto on the proper methods of philosophising. He chose the title Regulae ad Directionem Ingenii, or Rules for the Direction of the Mind. It is a curious work. Descartes originally intended to present 36 rules divided evenly into three parts, but the manuscript trails off in the middle of the second part. Each rule was to be set forth in one or two sentences followed by a lengthy elaboration. The first rule tells us that ‘The end of study should be to direct the mind to an enunciation of sound and correct judgments on all matters that come before it,’ and the third rule tells us that ‘Our enquiries should be directed, not to what others have thought … but to what we can clearly and perspicuously behold and with certainty deduce.’ Rule four tells us that ‘There is a need of a method for finding out the truth.’

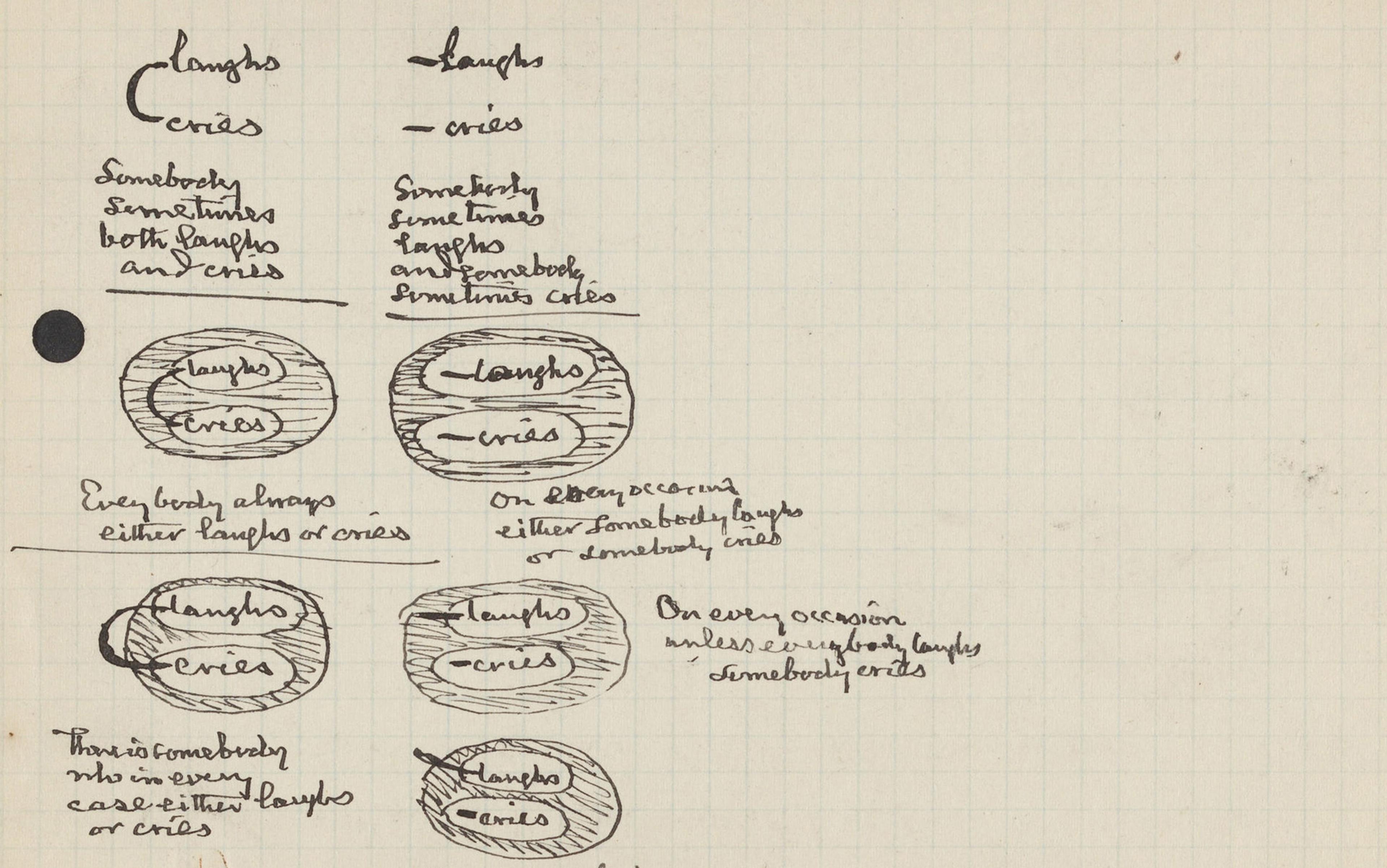

But soon the manuscript takes an unexpectedly mathematical turn. Diagrams and calculations creep in. Rule 19 informs us that proper application of the philosophical method requires us to ‘find out as many magnitudes as we have unknown terms, treated as though they were known’. This will ‘give us as many equations as there are unknowns’. Rule 20 tells us that, ‘having got our equations, we must proceed to carry out such operations as we have neglected, taking care never to multiply where we can divide’. Reading the Rules is like sitting down to read an introduction to philosophy and finding yourself, an hour later, in the midst of an algebra textbook.

The turning point occurs around rule 14. According to Descartes, philosophy is a matter of discovering general truths by finding properties that are shared by disparate objects, in order to understand the features that they have in common. This requires comparing the degrees to which the properties occur. A property that admits degrees is, by definition, a magnitude. And, from the time of the ancient Greeks, mathematics was understood to be neither more nor less than the science of magnitudes. (It was taken to encompass both the study of discrete magnitudes, that is, things that can be counted, and the study of continuous magnitudes, that is, things that can be represented as lengths.) Philosophy is therefore the study of things that can be represented in mathematical terms, and the philosophical method becomes virtually indistinguishable from the mathematical method.

Similar intimations of a close relationship between philosophy and mathematics can be found in antiquity, for example in the Pythagorean dictum that ‘all is number’. The Pythagorean discovery that the square root of two is irrational heralded the birth of Western philosophy by uncovering a fundamental limit in one approach to quantifying our experiences and opening the door to a richer conception of measurement and number. The nature of the continuum – the continuous magnitudes that are used to model time and space – has been a source of fruitful interaction between philosophers and mathematicians ever since. Plato held mathematics in great esteem, and argued that, in an ideal state, all citizens, from the guardians to the philosopher kings, would be trained in arithmetic and geometry. In The Republic, his protagonist Socrates maintains that mathematics ‘has a very great and elevating effect’, and that its abstractions ‘draw the soul towards truth, and create the spirit of philosophy’.

Galileo, a contemporary of Descartes, also blurred the distinction between mathematical and philosophical method. An excerpt from his essay ‘Il Saggiatore’ (1623), or The Assayer, is often cited for advancing a revolutionary mathematisation of physics:

Philosophy is written in this grand book – I mean the Universe – which stands continually open to our gaze, but it cannot be understood unless one first learns to comprehend the language and interpret the characters in which it is written. It is written in the language of mathematics, and its characters are triangles, circles and other geometrical figures, without which it is humanly impossible to understand a single word of it; without these, one is wandering around in a dark labyrinth.

In this quotation, it is philosophy that is written in the language of mathematics. It is no mere linguistic coincidence that Isaac Newton’s monumental development of calculus and modern physics was titled Philosophiæ Naturalis Principia Mathematica (1687), that is, Mathematical Principles of Natural Philosophy. The goal of philosophy is to understand the world and our place in it, and to determine the methods that are appropriate to that task. Physics, or natural philosophy, was part of that project, and Descartes, Galileo and Newton – and philosophers before and after – were keenly attentive to the role that mathematics had to play.

Gottfried Leibniz, another towering 17th-century figure in both mathematics and philosophy, was similarly interested in establishing proper method. In 1677 he wrote:

The true Method taken in all of its scope is to my mind a thing hitherto quite unknown, and it has not been practised except in mathematics.

Earlier, in his doctoral dissertation of 1666, he had set the goal of developing a symbolic language capable of expressing any rational thought, and a symbolic calculus powerful enough to decide the truth of any such statement. This lofty proposal served as a rallying cry for the field of symbolic logic centuries later. But Leibniz made it clear that application of the method is not limited to mathematics:

If those who have cultivated the other sciences had imitated the mathematicians … we should long since have had a secure Metaphysics, as well as an ethics depending on Metaphysics since the latter includes the sort of knowledge of God and the soul which should rule our life.

Here mathematics grounds not only science, but also ethics, metaphysics and knowledge of God and the soul. The mathematical approaches adopted by Descartes, Galileo, Newton and Leibniz were major philosophical advances, and this helps explain philosophers’ longstanding fascination with mathematics: understanding our capacities for mathematical thought is an important part of understanding our capacities to think philosophically.

The philosophy of mathematics reached its heyday in the middle of the 20th century, buoyed by the previous decades’ successes in mathematical logic. Logicians had finally begun to make good on Leibniz’s promise of a calculus of thought, developing systems of axioms and rules that are expressive enough to account for the vast majority of mathematical argumentation. Among the various mathematical foundations on offer, one known as Zermelo-Fraenkel set theory has proved to be especially robust. It provides natural and effective encodings of ordinary mathematical arguments, supported by basic logical constructs and axioms that describe abstract mathematical entities known as sets. Set theory provides a compelling description of mathematical practice in terms of a small number of fundamental concepts and rules. In the 1930s, the Austrian logician Kurt Gödel proved important results known as the incompleteness theorems, which identify inherent limits to the ability of the axiomatic method to settle all mathematical truths. Via the mathematical modelling of mathematical practice itself, logic therefore gave us a clear account of the nature and extent of mathematical reasoning.

Logic brought philosophical progress on other fronts as well, such as the nature of truth. In the 1930s, the Polish logician Alfred Tarski offered a mathematical analysis of truth, again providing a positive account while at the same time identifying inherent limits to its range of applicability. The 1930s also brought a clear mathematical analysis of the notion of computability. This gave a compelling account of the kinds of algorithmic methods that were sought by the likes of Descartes and Leibniz, while once again uncovering limitations.

These theories were quintessentially mathematical, set forth in the mathematician’s style of presenting definitions, theorems and proofs. But they were also motivated and informed by philosophical debate and understood to be worthy of philosophical scrutiny. As in the 17th century, the line between mathematics and philosophy was not sharp, and it was hard to deny that important progress had been made. Both the positive results and the negative, limitative results were valuable: having a clear understanding of what a particular methodological approach can and cannot be expected to achieve serves to focus enquiry and suggest new avenues for research.

The successes were so striking that, for a while, it seemed that every other branch of philosophy wanted to be like the philosophy of mathematics. Philosophers of science imported the logician’s vocabulary for talking about mathematical theories, so that a scientific theory was understood to be something like a mathematical theory supplemented by additional observation predicates that served to connect it to the empirical world. It was so common for papers in the philosophy of science to begin an analysis with the phrase ‘let T be a theory’ that the contemporary philosopher Mark Wilson has described this style of philosophy as ‘theory T syndrome’.

In a similar way, philosophers of language imported notions of meaning, reference and truth from the logician’s study of mathematics. For all the complexity of the subject, the structure of mathematical language is disarmingly simple. There are no modes or tenses, since mathematicians do not typically worry about when seven became an odd number and what the world would have been like had it been even. The truth of a mathematical statement does not rely on historical context or the circumstances of the speaker, and the communicative norms of mathematics are fairly staid, without subtle presuppositions and implicatures. So a promising strategy for linguists and philosophers of language was to start with the modelling of mathematical language, where the mechanics are more easily understood, and then adapt the models to accommodate a broader range of linguistic constructs.

Philosophers of mind, meanwhile, brought logical scaffolding to the study of propositional attitudes. Roughly speaking, if we are able to know something, believe something, doubt something or wish for something, then that thing must be a kind of entity that is available to thought, perhaps via some sort of mental representation. Such representations, as they were treated in the literature, had a lot in common with the symbolic notation used to express mathematical definitions and assertions.

Philosophical subjects such as these orbited the philosophy of mathematics, drawing heat and light from the logical account of mathematical practice. The successes in the philosophy of mathematics offered striking examples of what philosophy could achieve. But today the subject has lost its lustre, and no longer has the same gravitational pull. What went wrong?

In part, the philosophy of mathematics was a victim of its own success. For a subject traditionally concerned with determining the proper grounds for mathematical knowledge, modern logic offered such a neat account of mathematical proof that there was almost nothing left to do. Except, perhaps, one little thing: if mathematics amounts to deductive reasoning using the axioms and rules of set theory, then to ground the subject all we need to do is to figure out what sort of entities sets are, how we can know things about them, and why that particular kind of knowledge tells us anything useful about the world. Such questions about the nature of abstract objects have therefore been the central focus of the philosophy of mathematics from the middle of the 20th century to the present day.

In other branches of philosophy, where no neat story was available, philosophers had to deal with the inherently messy nature of language, science and thought. This required them to grapple with serious methodological issues. From the 1950s on, philosophers of language engaged with linguists to make sense of the Chomskyan revolution in thinking about the structure of language and human capacities for understanding and generating speech. Philosophers of mind interacted with psychologists and computer scientists to forge cognitive science, the new science of the mind. Philosophers of biology struggled with methodological issues related to evolution and the burgeoning field of genetics, and philosophers of physics worried about the coherence of the fundamental assumptions of quantum mechanics and general relativity. Meanwhile, philosophers of mathematics were chiefly concerned with the question of whether numbers and other abstract objects really exist.

This fixation has not been healthy. It has almost nothing to do with everyday mathematical practice, since mathematicians generally do not harbour doubts about whether what they are doing is meaningful and useful – and, regardless, philosophy has had little reassurance to offer in that respect. It turns out that there simply aren’t that many interesting things to say about abstract mathematical objects in and of themselves. Insofar as it is possible to provide compelling justification for doing mathematics the way we do, it will not come from making general pronouncements but, rather, from undertaking a careful study of the goals and methods of the subject and exploring the extent to which the methods are suited to the goals. When we begin to ask why mathematics looks the way it does and how it provides us with such powerful means of solving problems and explaining scientific phenomena, we find that the story is rich and complex.

The problem is that set-theoretic idealisation idealises too much. Mathematical thought is messy. When we dig beneath the neatly composed surface we find a great buzzing, blooming confusion of ideas, and we have a lot to learn about how mathematics channels these wellsprings of creativity into rigorous scientific discourse. But that requires doing hard work and getting our hands dirty. And so the call of the sirens is pleasant and enticing: mathematics is set theory! Just tell us a really good story about abstract objects, and the secrets of the Universe will be unlocked. This siren song has held the philosophy of mathematics in thrall, leaving it to drift onto the rocky shores.

The field’s narrow focus on logic suggests another explanation for its decline. Given that the philosophy of mathematics has been closely aligned with logic for the past century or so, one would expect the fortunes of the two subjects to rise and fall in tandem. Over that period, logic has grown into a bona fide branch of mathematics in its own right, and in 1966 Paul Cohen won a Fields Medal, the most prestigious prize in mathematics, for solving two longstanding open problems in set theory. But there hasn’t been another Fields Medal in logic since, and although the subject enjoys some interactions with other branches of mathematics, it has not found its way into the mathematical mainstream.

Many of philosophy’s traditional concerns about language, knowledge and thought now find a home in computer science, where the goal is to design systems that emulate these faculties. If the philosopher’s goal is to clarify concepts and shore up foundations, and the scientist’s goal is to gather data and refine the models, then the computer scientist aims to implement the findings and put them to good use. Ideally, information should flow back and forth, with philosophical understanding informing implementation, and practical results and challenges informing philosophical study. So it makes sense to consider the role that logic has played in computer science as well.

From the mid-1950s, cognitive science and artificial intelligence (AI) were dominated by what the American philosopher of mind John Haugeland dubbed GOFAI – ‘good old-fashioned AI’ – an approach that relies on symbolic representations and logic-based algorithms to produce intelligent behaviour. A rival approach, with its origins in the 1940s, incorporates neural networks, a computational model whose state is encoded by the activation strength of very large numbers of simple processors connected together like neurons in the brain. The early decades of AI were dominated by the logic-based approach, but in the 1980s researchers demonstrated that neural networks could be trained to recognise patterns and classify images without a manifest algorithm or encoding of features that would explain or justify the decision. This gave rise to the field of machine learning. Improvements to the methods and increased computational power have yielded great success and explosive growth in the past few years. In 2017, a system known as AlphaGo trained itself to play the strategy game Go well enough to sweep the world’s highest-ranked Go player in a three-game match. The approach, known as deep learning, is now all the rage.

Logic has also lost ground in other branches of automated reasoning. Logic-based methods have yet to yield substantial success in automating mathematical practice, whereas statistical methods of drawing conclusions, especially those adapted to the analysis of extremely large data sets, are highly prized in industry and finance. Computational approaches to linguistics once involved mapping out the grammatical structure of language and then designing algorithms to parse utterances and reduce them to their logical form. These days, however, language processing is generally a matter of statistical methods and machine learning, which underwrite our daily interactions with Siri and Alexa.

In 1994, the electrical engineer and computer scientist Lotfi Zadeh of the University of California, Berkeley used the phrase ‘soft computing’ to describe such approaches. Mathematics seeks precise and certain answers, but in real life obtaining them is often intractable or outright impossible. In such circumstances, what we really want are algorithms that return reasonable approximations to the right answers in an efficient and reliable manner. Real-world models also tend to rely on assumptions that are inherently uncertain and imprecise, and our software needs to handle such uncertainty and imprecision in robust ways.

Many of philosophy’s central objects of study – language, cognition, knowledge and inference – are soft in this sense. The structure of language is inherently amorphous. Concepts have fuzzy boundaries. Evidence for a scientific theory is rarely definitive but, rather, supports the hypotheses to varying degrees. If the appropriate scientific models in these domains require soft approaches rather than crisp mathematical descriptions, philosophy should take heed. We need to consider the possibility that, in the new millennium, the mathematical method is no longer fundamental to philosophy.

But the rise of soft methods does not mean the end of logic. Our conversations with Siri and Alexa, for instance, are never very deep, and it is reasonable to think that more substantial interactions will require more precise representations under the hood. In an article in The New Yorker in 2012, the cognitive scientist Gary Marcus provided the following assessment:

Realistically, deep learning is only part of the larger challenge of building intelligent machines. Such techniques lack ways of representing causal relationships (such as between diseases and their symptoms), and are likely to face challenges in acquiring abstract ideas like ‘sibling’ or ‘identical to’. They have no obvious ways of performing logical inferences, and they are also still a long way from integrating abstract knowledge, such as information about what objects are, what they are for, and how they are typically used.

For some purposes, soft methods are blatantly inappropriate. If you go online to change an airline reservation, the system needs to follow the relevant policies and charge your credit card accordingly, and there is no room for imprecision. Computer programs themselves are precise artifacts, and the question as to whether a program meets a design specification is fairly crisp. Getting the answer right is especially important when that software is used to control an airplane, a nuclear reactor or a missile launch site. Even soft methods sometimes call for an element of hardness. In 2017, the AI expert Manuela Veloso of Carnegie Mellon University in Pittsburgh was quoted in Communications of the ACM as locating the weakness of contemporary AI systems in their lack of transparency:

They need to explain themselves: why did they do this, why did they do that, why did they detect this, why did they recommend that? Accountability is absolutely necessary.

The question, then, is not whether the acquisition of knowledge is inherently hard or soft but, rather, where each approach is appropriate, and how the two can be combined. Leslie Valiant, a winner of the celebrated Turing Award in computer science, has observed:

A fundamental question for artificial intelligence is to characterise the computational building blocks that are necessary for cognition. A specific challenge is to build on the success of machine learning so as to cover broader issues in intelligence. This requires, in particular, a reconciliation between two contradictory characteristics – the apparent logical nature of reasoning and the statistical nature of learning.

Valiant himself has proposed a system of robust logic to achieve such a reconciliation.

What about the role of mathematical thought, beyond logic, in our philosophical understanding? The influence of mathematics on science, which has only increased over time, is telling. Even soft approaches to acquiring knowledge are grounded in mathematics. Statistics is built on a foundation of mathematical probability, and neural networks are mathematical models whose properties are analysed and described in mathematical terms. To be sure, the methods make use of representations that are different from conventional representations of mathematical knowledge. But we use mathematics to make sense of the methods and understand what they do.

Mathematics has been remarkably resilient when it comes to adapting to the needs of the sciences and meeting the conceptual challenges that they generate. The world is uncertain, but mathematics gives us the theory of probability and statistics to cope. Newton solved the problem of calculating the motion of two orbiting bodies, but soon realised that the problem of predicting the motion of three orbiting bodies is computationally intractable. (His contemporary John Machin reported that Newton’s ‘head never ached but with his study on the Moon’.) In response, the modern theory of dynamical systems provides a language and framework for establishing qualitative properties of such systems even in the face of computational intractability. At the extreme, such systems can exhibit chaotic behaviour, but once again mathematics helps us to understand how and when that happens. Natural and designed artifacts can involve complex networks of interactions, but combinatorial methods in mathematics provide means of analysing and understanding their behaviour.

Mathematics has therefore soldiered on for centuries in the face of intractability, uncertainty, unpredictability and complexity, crafting concepts and methods that extend the boundaries of what we can know with rigour and precision. In the 1930s, the American theologian Reinhold Niebuhr asked God to grant us the serenity to accept the things we cannot change, the courage to change the things we can, and the wisdom to know the difference. But to make sense of the world, what we really need is the serenity to accept the things we cannot understand, courage to analyse the things we can, and wisdom to know the difference. When it comes to assessing our means of acquiring knowledge and straining against the boundaries of intelligibility, we must look to philosophy for guidance.

Great conceptual advances in mathematics are often attributed to fits of brilliance and inspiration, about which there is not much we can say. But some of the credit goes to mathematics itself, for providing modes of thought, cognitive scaffolding and reasoning processes that make the fits of brilliance possible. This is the very method that was held in such high esteem by Descartes and Leibniz, and studying it should be a source of endless fascination. The philosophy of mathematics can help us understand what it is about mathematics that makes it such a powerful and effective means of cognition, and how it expands our capacity to know the world around us.

Ultimately, mathematics and the sciences can muddle along without academic philosophy, with insight, guidance and reflection coming from thoughtful practitioners. In contrast, philosophical thought doesn’t do anyone much good unless it is applied to something worth thinking about. But the philosophy of mathematics has served us well in the past, and can do so again. We should therefore pin our hopes on the next generation of philosophers, some of whom have begun to find their way back to the questions that really matter, experimenting with new methods of analysis and paying closer attention to mathematical practice. The subject still stands a chance, as long as we remember the reasons we care so much about it.