If you don’t speak Japanese but would like, momentarily, to feel like a linguistic genius, take a look at the following words. Try to guess their meaning from the two available options:

1. nurunuru (a) dry or (b) slimy?

2. pikapika (a) bright or (b) dark?

3. wakuwaku (a) excited or (b) bored?

4. iraira (a) happy or (b) angry?

5. guzuguzu (a) moving quickly or (b) moving slowly?

6. kurukuru (a) spinning around or (b) moving up and down?

7. kosokoso (a) walking quietly or (b) walking loudly?

8. gochagocha (a) tidy or (b) messy?

9. garagara (a) crowded or (b) empty?

10. tsurutsuru (a) smooth or (b) rough?

The answers are: 1(b); 2(a); 3(a); 4(b); 5(b); 6(a); 7(a); 8(b); 9(b); 10(a).

If you think this exercise is futile, you’re in tune with traditional linguistic thinking. One of the founding axioms of linguistic theory, articulated by Ferdinand de Saussure in the early 20th century, is that any particular linguistic sign – a sound, a mark on the page, a gesture – is arbitrary, and dictated solely by social convention. Save those rare exceptions such as onomatopoeias, where a word mimics a noise – eg, ‘cuckoo’, ‘achoo’ or ‘cock-a-doodle-doo’ – there should be no inherent link between the way a word sounds and the concept it represents; unless we have been socialised to think so, nurunuru shouldn’t feel ‘slimy’ any more than it feels ‘dry’.

Yet many world languages contain a separate set of words that defies this principle. Known as ideophones, they are considered to be especially vivid and evocative of sensual experiences. Crucially, you do not need to know the language to grasp a hint of their meaning. Studies show that participants lacking any prior knowledge of Japanese, for example, often guess the meanings of the above words better than chance alone would allow. For many people, nurunuru really does feel ‘slimy’; wakuwaku evokes excitement, and kurukuru conjures visions of circular rather than vertical motion. That should simply not be possible if the sound-meaning relationship were indeed arbitrary. (The experiment is best performed using real audio clips of native speakers.)
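To make ‘better than chance’ concrete for the ten-item quiz above, here is a minimal sketch in Python. It assumes each answer is an independent guess between two options, which is an illustration only and not the design of the studies mentioned; the function name `p_at_least` is hypothetical.

```python
from math import comb


def p_at_least(k: int, n: int = 10, p: float = 0.5) -> float:
    """Probability of getting k or more answers right out of n by pure guessing.

    Assumes independent guesses, each correct with probability p
    (0.5 for a two-option question). This is a plain binomial tail sum.
    """
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Ten two-option questions, as in the quiz above.
for score in (5, 8, 10):
    print(f"{score}/10 or better by guessing: {p_at_least(score):.4f}")
```

Under these assumptions, a pure guesser scores 5 or more about 62 per cent of the time, but 8 or more only about 5 per cent of the time, so a single high score on ten items is suggestive rather than conclusive.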

How and why do ideophones do this? Despite their prevalence in many languages, ideophones were once considered linguistic oddities of marginal interest. As a consequence, linguists, psychologists and neuroscientists have only recently started to unlock their secrets.

Their results pose a profound challenge to the foundations of Saussurean linguistics. According to this research, language is embodied: a process that involves subtle feedback, for both listener and speaker, between the sound of a word, the vocal apparatus and our own experience of human physicality. Taken together, this dynamic helps to create a connection between certain sounds and their attendant meanings. These associations appear to be universal across all human societies.

This understanding of language as an embodied process can illuminate the marvel of language acquisition during infancy. It might even cast light on the evolutionary origins of language itself – potentially representing a kind of ‘proto-world’, a vestige of our ancestors’ first utterances.

How should we define an ideophone? All languages contain powerfully emotive or sensual words, after all. But ideophones share a couple of characteristics that make them unique. For one, they constitute their own distinct class, marked with their own linguistic rules – in the same way that, say, nouns or verbs also follow their own rules in how they are formed and used. In Japanese, for instance, ideophones are easy to recognise because they most often take the form of a two-syllable root that is then repeated – such as gochagocha (messy), nurunuru (slimy) or tsurutsuru (smooth).

Native speakers also have to agree that words in this class, as a whole, depict sensual scenes with an intensity that cannot be expressed by vocabulary outside the class. ‘So you could just describe it in dry, arbitrary words, but what [ideophones] do is enable you to experience it yourself,’ Mark Dingemanse at Radboud University in the Netherlands told me.

Ideophones are more prevalent in some languages than others. English has relatively few; by comparison, more than 4,000 Japanese words are classed as ideophones, meaning that they’re a hugely important part of anyone’s vocabulary. They can also be found across Asia, Africa, South America and Australasia. (My personal favourite ideophone is ribuy-tibuy, which means ‘the sound, sight, or motion of a person’s buttocks rubbing together as they walk’ in Mundari, spoken in Eastern India and Bangladesh.)

Some of the earliest formal studies of ideophones come from anthropologists who travelled in Africa in the late 19th and early 20th centuries. Analysing Bobangi, a language spoken in Congo, the linguist John Whitehead in 1899 described ‘colouring’ words that were ‘among the most graphic in the language’, adding that ‘often they have such force that sentence after sentence can be constructed by means of them, without the use of a single verb.’

Even more influential was the German missionary Diedrich Westermann, who described the Lautbilder or ‘picture words’ of Ewe, spoken in present-day Ghana and Togo. Like Whitehead, Westermann emphasised the fact that these words could depict vivid and complex scenes. For example, zɔ hlóyihloyi captured the manner of someone ‘walking with many dangling objects’; another, zɔ ʋɛ̃ʋɛ̃, the ‘gait of a fat and stiff person’.

Part of their power comes from how the words themselves are produced: ideophones are often spoken in a dramatic tone of voice and accompanied by visual gestures to help you visualise the scene. The Ewe word ɖaboɖabo, for instance, translates as ‘duck’; in addition, the repetition of its basic syllables evokes an uneven walk, and the sounds are accompanied by an exaggerated waddling motion. ‘It’s a really good example of an ideophone as a word that imitates not just sound but a whole sensory scene,’ Dingemanse, who speaks Ewe, told me.

That observation is significant in itself. In the West, we have tended to think of language as primarily one modality – words, as either speech or writing – but there is now a growing appreciation that bodily gestures and hand signs are also incredibly important for meaning-making.

Yet Westermann’s later research found that there was also something special in ideophones’ phonological features. Comparing the ideophones of half a dozen West African languages, he noted that certain ‘front’ or ‘closed’ vowels – such as the [i] sound in the English word ‘cheese’ – tended to be used to represent concepts that were light, fine or bright, for instance; while ‘back’ or ‘open’ vowels – such as the [ɔ] sound in ‘talk’ or the [ɑ] in ‘past’ – tended to be associated with a sense of slowness, heaviness and darkness. Meanwhile, voiced consonants such as ‘b’ or ‘g’ – so-called because they require the vocal cords to resonate – were associated with greater weight and softness, while voiceless consonants, such as ‘p’ or ‘k’, tended to be used to represent lighter weights and harsher surfaces. So, for example, in Ewe, someone who is kputukpluu is thinner than someone who is gbudugbluu.

He found similar relationships with linguistic tones. In many languages, the pitch at which a syllable is spoken can change a word’s meaning. Westermann found that words representing slowness, darkness and heaviness tended to have lower tones than those depicting speed, agility and brightness, which were formed of higher tones. Many also include the repetition of syllables, which can be used to signify number and a continuous action or state. So, for instance, in Ewe, kpata is used to denote one drop falling, but kpata kpata depicts many drops falling.

Such ‘sound symbolism’ – as it is known in the literature – should not be seen as a one-to-one, rigid ‘coding’; a particular phoneme can be associated with a whole range of sensations to do with weight, shape, brightness or darkness and motion. But Westermann’s research offered some of the first concrete evidence that certain linguistic sounds might be somehow better-suited to evoking certain concepts for listeners than others.

These patterns have now been observed in the ideophones of many other languages. To take a couple of examples from Basque – a language isolate that also has more than 4,000 ideophones – tiki taka, with its closed, frontal [i] sound, means taking quick, light steps, while taka taka, with a more open [a] sound, denotes taking heavier steps; tilin tilin means ‘small toll’, and tulun tulun ‘big toll’.

Meanwhile, in Japanese you have gorogoro, which, with its voiced ‘g’ sounds, represents a heavy object rolling continuously, while korokoro, with a voiceless ‘k’, represents a lighter rolling object. Similarly, bota means a ‘thick/much liquid hitting a solid surface’, while pota means a ‘thin/little liquid hitting a solid surface’. If you are a Pokémon fan, you might even notice these sound-meaning relationships in your favourite characters. Pikachu, for instance, is named after the Japanese ideophone pikapika, which means sparkle. As Westermann had noted, the voiceless consonants and front [i] vowels are often associated with brightness in a number of languages.

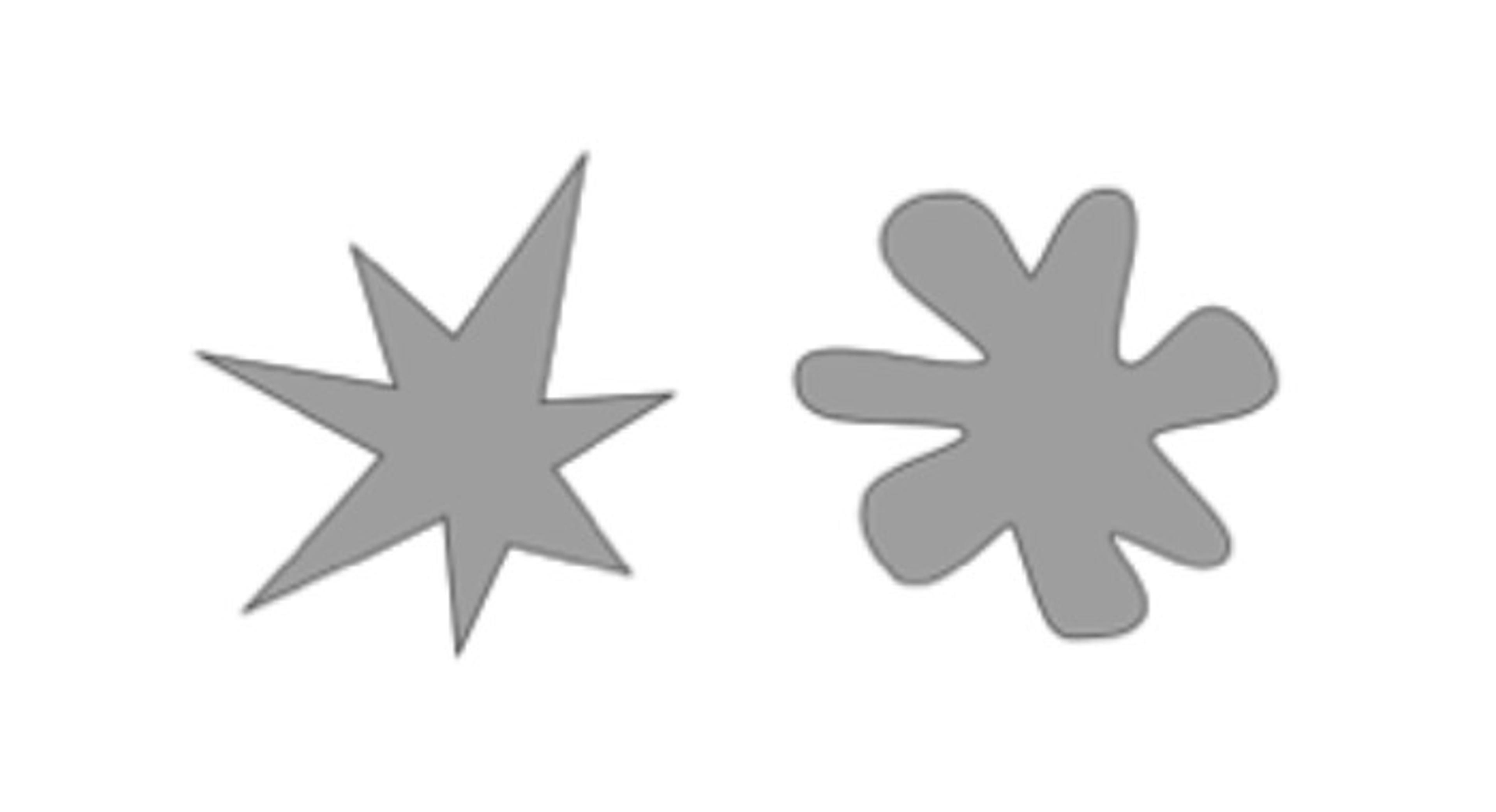

Importantly, psychological studies suggest that these general sound-symbolic connections are universally understood throughout the world. Consider the following experiment. Given two words, kiki and bouba – which term is most appropriate to describe the following shapes?

An early version of this experiment can be found in the writing of the German psychologist Wolfgang Köhler in the late 1920s, who tested participants in Tenerife. But the result has now been repeated many times, in many different cultures. In almost all cases, bouba is chosen to represent the shape on the right by the vast majority of participants, while kiki naturally seems to fit a spiky, pointed object.

The evocative power of ideophones might therefore reflect an inherent sound symbolism understood by all humans. Although we don’t know the exact origin of these universal connections between sounds and meanings, one attractively parsimonious answer comes from human biology and the bodily experience of speech. According to this theory, subtle feedback from our mouth and throat primes us to associate certain phonemes with certain concepts. The mouth tends to form a rounder shape when we form an [o] sound, compared with an [i] sound – which might help to explain the kiki/bouba phenomenon. Voiced consonants such as ‘b’ also last for a marginally longer time than voiceless consonants such as ‘t’ – which might explain why they are associated with slower speed.

Although nuanced, these ‘vocal gestures’ – in which our articulatory system subtly mimics the concept we wish to convey – might just be enough to prime us to intuitively feel that a word is more or less suited to a particular concept.

Sound symbolism might also emerge from the sensations associated with how we make noises. The marked resonance in the front of our face might explain why nasal sounds are more often found in many words associated with the nose, such as ‘snout’, ‘sniff’ or ‘sneeze’ in English.

However, that doesn’t really explain how sounds could convey a concept such as the brightness of glitter. Another theory chalks this up to more general cross-talk between brain regions, where our neural wiring means that activation in one sensory region triggers a response in another. We know this happens in people with synaesthesia – such that a particular musical note can evoke a colour, say – which is thought to be caused by an overabundance of neural links, as if the brain were a too-dense forest whose tree roots have become entangled. Some scientists believe that a similar, though less pronounced, phenomenon could lie behind sound-symbolic connections. Interestingly, synaesthetes tend to be more sensitive to sound symbolism than average members of the population, offering some circumstantial evidence for the idea.

Whatever its origins, analyses of thousands of languages reveal that most cultures use sound symbolism to some degree. Unsurprisingly, languages with many ideophones make more systematic use of sound symbolism to evoke meaning. In one experiment, for instance, Dingemanse and his colleague Gwilym Lockwood gave a group of Dutch participants a list of Japanese ideophones, their translations and their antonyms. (Lockwood also provided the quiz at the start of this article.) They found that the participants could guess the right answer with around 72 per cent accuracy. They then tried the same experiment with regular Japanese adjectives. The participants still scored above chance – they were right about 63 per cent of the time – but finding the meanings of the adjectives from the sounds alone was harder, compared with the ideophones.

As part of the same study, Dingemanse also asked Dutch participants to memorise various lists of word pairs. In one, the ideophones were paired with the correct translation that matched their sound symbolism (kibikibi was paired with its true meaning, ‘energetic’, for instance). In another, the Japanese ideophones were paired with words of the opposite meaning (kibikibi was paired with the Dutch word for ‘tired’).

Over all the experiments, the participants found it far easier to learn the ideophones paired with their correct meaning, remembering about 86 per cent of the ‘congruent’ word pairs, compared with 71 per cent of the word pairs in which they had been given the wrong translation of the ideophone. Once again, this was not true in another experiment that measured how well they learnt Japanese adjectives – supporting the idea that there is something special about ideophones, and the way they are formed, that makes them particularly vivid.

Perhaps because of historic ‘Eurocentrism’ in linguistics, and its concentration on more formal, written sources, such research has not always received the attention it deserved. (One linguist in the 1990s even went as far as to describe ideophones as the ‘lunatic fringe’ of language.) Dingemanse points out that even Westermann’s detailed writings had been largely ignored – a ‘forgotten treasure’ – until he recently unearthed them. But in the past decade, more and more linguists and cognitive scientists are coming to recognise them. ‘The topic has seen rapid adoption, and many people are now working on it, and those that aren’t at least feel they should acknowledge it,’ Dingemanse told me in an email.

This burgeoning understanding is of great philosophical importance, suggesting that we should embrace some long-abandoned theories of language. Millennia before Saussure proposed the ‘arbitrariness of the linguistic sign’, philosophers had debated whether some words are inherently better at expressing an idea. Plato, for instance, records the philosopher Cratylus arguing that there should be a natural connection between a word’s form and its meaning. Linguists refer to this phenomenon as iconicity, the opposite of arbitrariness.

The sheer number of documented ideophones suggests that these ideas are worth revisiting. ‘Languages are on a spectrum; each language is on a balancing point between the forces of arbitrariness and iconicity,’ says Marcus Perlman, a linguist and cognitive scientist at the University of Birmingham in the UK. Dingemanse agrees, noting that while ‘there are still lots of degrees of freedom in language’, the general principle of absolute arbitrariness needs to be modified to acknowledge that both arbitrary and iconic features play a role in transmitting meaning.

Recognising the importance of iconicity could solve some lingering scientific mysteries, including the process of language acquisition during childhood. To understand why this is a conundrum, put yourself in the mind of a baby or toddler, hearing the swell of conversation around her. Imagine, for instance, that she is watching a rabbit hop across the lawn – while her mother or father says: ‘Look at the rabbit! Look at it hop!’ How is the baby meant to know which word refers to her own actions, which word refers to the rabbit itself, and which refers to its movement? More importantly, how does she know to generalise what she has learnt – so that the same word applies to all rabbits of different colours and sizes? Or that the word ‘hop’ can apply to any creature – even a human – moving in a stop-start, jumpy fashion?

Gestures such as pointing, and the parent’s gaze, could obviously assist in distinguishing some elements of the scene. But Mutsumi Imai at Keio University in Japan and Sotaro Kita at the University of Warwick in the UK argue that sound symbolism can also give subtle cues that help a child to identify what a word is referring to – an idea they call the ‘sound symbolism bootstrapping hypothesis’.

As evidence, Imai and Kita have shown that one- and two-year-olds, when given a sound-symbolic word, were more likely to direct their attention at the appropriate object or movement. The children also found it easier to remember and generalise the sound-symbolic words afterwards – such that, if they learn a new verb, they know it can be applied to other scenarios, suggesting the sound symbolism helps them grasp that the word refers to motion.

It’s telling that Japanese mothers and fathers consistently use ideophones far more frequently during the first few years of a child’s life. This raises another big question: how do children learn languages such as English, without such a widespread, systematic use of sound symbolism? There is now some evidence that parents in these cultures invent their own, or select existing words with slightly more sound-symbolic forms (such as ‘teeny-weeny’ to mean small). To achieve the same ends, they might also use prosodic cues, such as intonation, stress and rhythm, as well as more dramatic pronunciation – another feature of ideophones.

Even more profoundly, ideophones might offer us a glimpse of the early origins of language at least 40,000 years ago. The emergence of speech is a longstanding mystery for evolutionary theorists. In general, the evolution of a complex trait such as language should happen gradually. But if the arbitrariness principle holds, and speech is meaningful only by convention, our amazingly open-ended communicative abilities would have required a huge evolutionary leap. Without a recognised form of language already in place, how could humans’ first vocalisations convey anything useful to their peers?

For this reason, many theorists have preferred a ‘gesture first’ theory – the idea that language arose first with pantomimed hand gestures that slowly evolved into more conventional signs. But this hypothesis only shifts the problem, because it doesn’t fully explain how or why most humans now communicate primarily through speech rather than with signed languages.

According to some scholars, including Imai and Kita, the research on sound symbolism – and ideophones in particular – can help us to solve that puzzle: the iconic associations between sound and meaning could have offered a starting point for the group to develop a shared lexicon.

Some preliminary evidence in favour of this hypothesis comes from Perlman. In one of his studies, a group of participants played charades, but instead of using gestures, they were allowed to use only completely novel vocalisations for concepts such as ‘man’, ‘rock’, ‘knife’, ‘hunt’, ‘cook’ and ‘sharp’ – while others guessed what they meant. Contrary to expectations, their accuracy – around 50 per cent – was far higher than chance would have allowed (in this experiment, around 10 per cent). ‘For the majority of these concepts, people had really consistent intuitions about what sounds map onto what kinds of meanings,’ Perlman told me.

Admittedly, many of the utterances took the form of onomatopoeia – the vocalisation for ‘knife’ involved the ‘whooshing’ sound of a blade, for instance. But combined with the research on sound symbolism and ideophones, it supports the notion that iconic vocalisations, where the word’s form somehow ‘resembles’ its meaning, might have been a starting point for communication between speakers with no previous language.

Kita hypothesises that, like ideophones, the first words must have conveyed whole scenes rather than discrete objects, evolving only later to be more like conventional words. And like ideophones, these utterances might have been dramatic in nature – using a tone of voice, facial expression and gesture – with the sound symbolism to help other group members connect the utterances to their meaning, such as an object’s appearance or movement. The sound-symbolic patterns that we still find today are like linguistic ‘fossils’, Kita says, that can help us to guess what those early words might have sounded like.

There is a danger, however, that these evolutionary theories entrench the idea that today’s ideophones are somehow primitive – a prejudice that has lingered since those first detailed studies of African ideophones. But Dingemanse says that this view could not be further from the truth. Ideophones move us a little closer to understanding how, through sounds alone, two individuals can share sensual experiences across time and space. They should remind us that language is deeply rooted in the body; that each word is, in some small way, a performance-piece that deploys many of our senses. ‘Poetry helps you see things in a new light, helps you savour words, is evocative of sensory scenes,’ Dingemanse told me. ‘That is exactly what ideophones do in many of the world’s languages.’