What makes computation possible? Seeking answers to that question, a hardware engineer from another planet travels to Earth in the 21st century. After descending through our atmosphere, this extraterrestrial explorer heads to one of our planet’s largest data centres, the China Telecom-Inner Mongolia Information Park, 470 kilometres west of Beijing. But computation is not easily discovered in this sprawling mini-city of server farms. Scanning the almost-uncountable transistors inside the Information Park, the visiting engineer might be excused for thinking that the answer to their question lies in the primary materials driving computational processes: silicon and metal oxides. After all, since the 1960s, most computational devices have relied on transistors and semiconductors made from these materials.

If the off-world engineer had visited Earth several decades earlier, before the arrival of metal-oxide transistors and silicon semiconductors, they might have found entirely different answers to their question. In the 1940s, before silicon semiconductors, computation might appear as a property of thermionic valves made from tungsten, molybdenum, quartz and silica – the most important materials used in vacuum tube computers.

And visiting a century earlier, long before the age of modern computing, an alien observer might come to even stranger conclusions. If they had arrived in 1804, the year the Jacquard loom was patented, they might have concluded that early forms of computation emerged from the plant matter and insect excreta used to make the wooden frames, punch cards and silk threads involved in fabric-weaving looms, the analogue precursors to modern programmable machines.

But if the visiting engineer did come to these conclusions, they would be wrong. Computation does not emerge from silicon, tungsten, insect excreta or other materials. It emerges from procedures of reason or logic.

This speculative tale is not only about the struggles of an off-world engineer. It is also an analogy for humanity’s attempts to answer one of our most difficult problems: life. For, just as an alien engineer would struggle to understand computation through materials, so it is with humans studying our distant origins.

Today, doubts about conventional explanations of life are growing and a wave of new general theories has emerged to better define our origins. These suggest that life doesn’t only depend on amino acids, DNA, proteins and other forms of matter: it might be digitally simulated, biologically synthesised or made from entirely different materials to those that allowed our evolutionary ancestors to flourish. These and other possibilities are inviting researchers to ask more fundamental questions: if the materials for life can radically change – like the materials for computation – what stays the same? Are there deeper laws or principles that make life possible?

Our planet appears to be exceptionally rare. Of the thousands that have been identified by astronomers, only one has shown any evidence of life. Earth is, in the words of Carl Sagan, a ‘lonely speck in the great enveloping cosmic dark’. This apparent loneliness is an ongoing puzzle faced by scientists studying the origin and evolution of life: how is it possible that only one planet has shown incontrovertible evidence of life, even though the laws of physics are shared by all known planets, and the elements in the periodic table can be found across the Universe?

The answer, for many, is to accept that Earth really is as unique as it appears: the absence of life elsewhere in the Universe can be explained by accepting that our planet is physically and chemically unlike the many other planets we have formally identified. Only Earth, so the argument goes, produced the special material conditions conducive to our rare chemistry, and it did so around 4 billion years ago, when life first emerged.

Stanley Miller in his laboratory in 1970. Courtesy and © SIO Photographic Laboratory Collection, SAC 44, UC San Diego

In 1952, Stanley Miller and his supervisor Harold Urey provided the first experimental evidence for this idea through a series of experiments at the University of Chicago. The Miller-Urey experiment, as it became known, sought to recreate the atmospheric conditions of early Earth through laboratory equipment, and to test whether organic compounds (amino acids) could be created in a reconstructed inorganic environment. When their experiment succeeded, the emergence of life became bound to the specific material conditions and chemistry on our planet, billions of years ago.

However, more recent research suggests there are likely countless other possibilities for how life might emerge through potential chemical combinations. As the British chemist Lee Cronin, the American theoretical physicist Sara Walker and others have recently argued, seeking near-miraculous coincidences of chemistry can narrow our ability to find other processes meaningful to life. In fact, most chemical reactions, whether they take place on Earth or elsewhere in the Universe, are not connected to life. Chemistry alone is not enough to identify whether something is alive, which is why researchers seeking the origin of life must use other methods to make accurate judgments.

Today, ‘adaptive function’ is the primary criterion for identifying the right kinds of biotic chemistry that give rise to life, as the theoretical biologist Michael Lachmann (our colleague at the Santa Fe Institute) likes to point out. In the sciences, adaptive function refers to an organism’s capacity to biologically change, evolve or, put another way, solve problems. ‘Problem-solving’ may seem more closely related to the domains of society, culture and technology than to the domain of biology. We might think of the problem of migrating to new islands, which was solved when humans learned to navigate ocean currents, or the problem of plotting trajectories, which our species solved by learning to calculate angles, or even the problem of shelter, which we solved by building homes. But genetic evolution also involves problem-solving. Insect wings solve the ‘problem’ of flight. Optical lenses that focus light solve the ‘problem’ of vision. And the kidneys solve the ‘problem’ of filtering blood. This kind of biological problem-solving – an outcome of natural selection and genetic drift – is conventionally called ‘adaptation’. Though it is crucial to the evolution of life, new research suggests it may also be crucial to the origins of life.

This problem-solving perspective is radically altering our knowledge of the Universe. Life is starting to look a lot less like an outcome of chemistry and physics, and more like a computational process.

The idea of life as a kind of computational process has roots that go back to the 4th century BCE, when Aristotle introduced his philosophy of hylomorphism in which functions take precedence over forms. For Aristotle, abilities such as vision were less about the biological shape and matter of eyes and more about the function of sight. It took around 2,000 years for his idea of hylomorphic functions to evolve into the idea of adaptive traits through the work of Charles Darwin and others. In the 19th century, these naturalists stopped defining organisms by their material components and chemistry, and instead began defining traits by focusing on how organisms adapted and evolved – in other words, how they processed and solved problems. It would then take a further century for the idea of hylomorphic functions to shift into the abstract concept of computation through the work of Alan Turing and the earlier ideas of Charles Babbage.

In the 1930s, Turing became the first to connect the classical Greek idea of function to the modern idea of computation, but his ideas were impossible without the work of Babbage, a century before. Important for Turing was the way Babbage had marked the difference between calculating devices that follow fixed laws of operation, which Babbage called ‘Difference Engines’, and computing devices that follow programmable laws of operation, which he called ‘Analytical Engines’.

Using Babbage’s distinction, Turing developed the most general model of computation: the universal Turing Machine. In 1936, he imagined this machine much like a tape recorder, comprising a reading and erasing head fed with an infinitely long tape. As this tape passes through the machine, single bits of information (momentarily stored in the machine) are read or written onto it. Both machine and tape jointly determine which bit will be read or written next.
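To make the tape-and-head picture concrete, here is a minimal sketch in Python. It is an illustration written for this passage, not a reconstruction of Turing's own notation: the function name `run_turing_machine`, the layout of the rule table and the example machine `INCREMENT` (which adds one to a binary number) are all assumptions of the sketch, and the "infinite" tape is approximated by a dictionary that grows on demand.

```python
from collections import defaultdict


def run_turing_machine(rules, tape, state="start", blank="_", max_steps=10_000):
    """Run a one-tape machine until it reaches the state "halt".

    `rules` maps (state, symbol_read) to (symbol_to_write, move, next_state),
    where move is -1 (one cell left) or +1 (one cell right).
    """
    # A dictionary that returns the blank symbol for unvisited cells stands in
    # for an unbounded tape.
    cells = defaultdict(lambda: blank, enumerate(tape))
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        # The machine's state and the symbol under the head jointly decide
        # what is written, where the head moves and which state comes next.
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += move
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells[i] for i in range(lo, hi + 1)).strip(blank)


# Hypothetical example machine: add one to a binary number.
INCREMENT = {
    ("start", "0"): ("0", +1, "start"),  # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),  # step back onto the last digit
    ("carry", "1"): ("0", -1, "carry"),  # 1 plus carry gives 0, carry continues
    ("carry", "0"): ("1", -1, "halt"),   # 0 plus carry gives 1, finished
    ("carry", "_"): ("1", -1, "halt"),   # overflow: write a new leading 1
}
```

With these rules, `run_turing_machine(INCREMENT, "1011")` returns `"1100"`. Nothing in the rule table refers to what the tape or the head is made of, which is the point of the abstraction.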

Turing did not describe any of the materials out of which such a machine would be built. He had little interest in chemistry beyond the physical requirement that a computer store, read and write bits reliably. That is why, amazingly, this simple (albeit infinite) programmable machine is an abstract model of how our powerful modern computers work. But the theory of computation Turing developed can also be understood as a theory of life. Both computation and life involve a minimal set of algorithms that support adaptive function. These ‘algorithms’ help materials process information, from the rare chemicals that build cells to the silicon semiconductors of modern computers. And so, as some research suggests, a search for life and a search for computation may not be so different. In both cases, we can be side-tracked if we focus on materials, on chemistry, physical environments and conditions.

In response to these concerns, a set of diverse ideas has emerged to explain life anew, through principles and processes shared with computation, rather than the rare chemistry and early Earth environments simulated in the Miller-Urey experiment. What drives these ideas, developed over the past 60 years by researchers working in disparate disciplines – including physics, computer science, astrobiology, synthetic biology, evolutionary science, neuroscience and philosophy – is a search for the fundamental principles that drive problem-solving matter. Though these researchers have been working in disconnected fields and their ideas seem incommensurable, we believe there are broad patterns to their research on the origins of life. For outsiders, however, it can be difficult to see how the ideas are connected to each other or why they are significant. This is why we have set out to review and organise these new ways of thinking.

Their proposals can be grouped into three distinct categories, three hypotheses, which we have named Tron, Golem and Maupertuis. The Tron hypothesis suggests that life can be simulated in software, without relying on the material conditions that gave rise to Earth’s living things. The Golem hypothesis suggests that life can be synthesised using different materials to those that first set our evolutionary history moving. And, if these two ideas are correct and life is not bound to the rare chemistry of Earth, we then have the Maupertuis hypothesis, the most radical of the three, which explores the fundamental laws involved in the origins of complex computational systems.

These hypotheses suggest that deep principles govern the emergence of problem-solving matter, principles that push our understanding of modern physics and chemistry towards their limits. They mark a radical departure from life as we once knew it.

In 1982, the science-fiction film Tron was released in the United States. Directed by Steven Lisberger, it told the story of biological beings perfectly and functionally duplicated in a computer program. The hero, Tron, is a human-like algorithm subsisting on circuits, who captures the essential features of living without relying on biotic chemistry. What we have called the ‘Tron hypothesis’ is the idea that a fully realised simulation of life can be created in software, freed from the rare chemistry of Earth. It asks what the principles of life might be when no chemical traces can be relied upon for clues. Are the foundations of life primarily informational?

Five years after Tron was first released in cinemas, the American computer scientist Christopher Langton introduced the world to a concept he called ‘artificial life’ or ‘ALife’ at a workshop he organised on the simulation of living systems. For Langton, ALife was a way of focusing on the synthesis of life rather than analytical descriptions of evolved life. It offered him a means of moving beyond ‘life as we know it’ to what he called ‘life as it could be’. The goal, in his own words, was to ‘recreate biological phenomena in alternative media’, to create lifelike entities through computer software.

Langton’s use of computers as laboratory tools followed the work of two mathematicians: Stanisław Ulam and John von Neumann, who both worked on the Manhattan Project. In the late 1940s, Ulam and von Neumann began a series of experiments on early computers that involved simulating growth using simple rules. Through this work, they discovered the concept of cellular automata, a model of computation and biological life. Ulam was seeking a way of creating a simulated automaton that could reproduce itself, like a biological organism, and von Neumann later connected the concept of cellular automata to the search for the origins of life. Using this concept, von Neumann framed life’s origins as Turing had earlier done with computation, by looking for the abstract principles governing what he called ‘construction’: ie, biological evolution and development. Complicated forms of construction build patterns of the kind that we associate with organismal life, such as cell growth, or the growth of whole individuals. A much simpler form of construction can be achieved on a computer using a copy-and-paste operation. In the 20th century, von Neumann’s insights about a self-replicating cellular automaton, a ‘universal constructor’, were deemed too abstract to help our understanding of life’s chemical origins. They also seemed to have little to say about biological processes such as adaptation and natural selection.
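The phrase "simulating growth using simple rules" can be illustrated with a far smaller system than the one von Neumann designed. The sketch below, in Python, is a one-dimensional, two-state automaton of the kind studied much later by Stephen Wolfram; it is offered as a simplified stand-in, not as von Neumann's self-replicating automaton. The function names `step` and `run`, the wrap-around edges and the default choice of rule 110 are assumptions of the sketch.

```python
def step(cells, rule=110):
    """Advance a row of 0/1 cells by one generation.

    Each cell's next value depends only on itself and its two neighbours.
    `rule` is a number from 0 to 255 whose binary digits form the lookup
    table for the eight possible neighbourhoods. The row wraps at its edges.
    """
    n = len(cells)
    next_row = []
    for i in range(n):
        left, centre, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        neighbourhood = (left << 2) | (centre << 1) | right  # a value 0..7
        next_row.append((rule >> neighbourhood) & 1)
    return next_row


def run(cells, steps, rule=110):
    """Return the list of successive generations, starting with `cells`."""
    history = [list(cells)]
    for _ in range(steps):
        history.append(step(history[-1], rule))
    return history


if __name__ == "__main__":
    # Start from a single live cell and print the pattern that grows from it.
    row = [0] * 31 + [1]
    for generation in run(row, 15):
        print("".join("#" if c else "." for c in generation))
```

The whole "physics" of this toy world is the eight-entry lookup table, yet the printed pattern grows and differentiates from a single cell.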

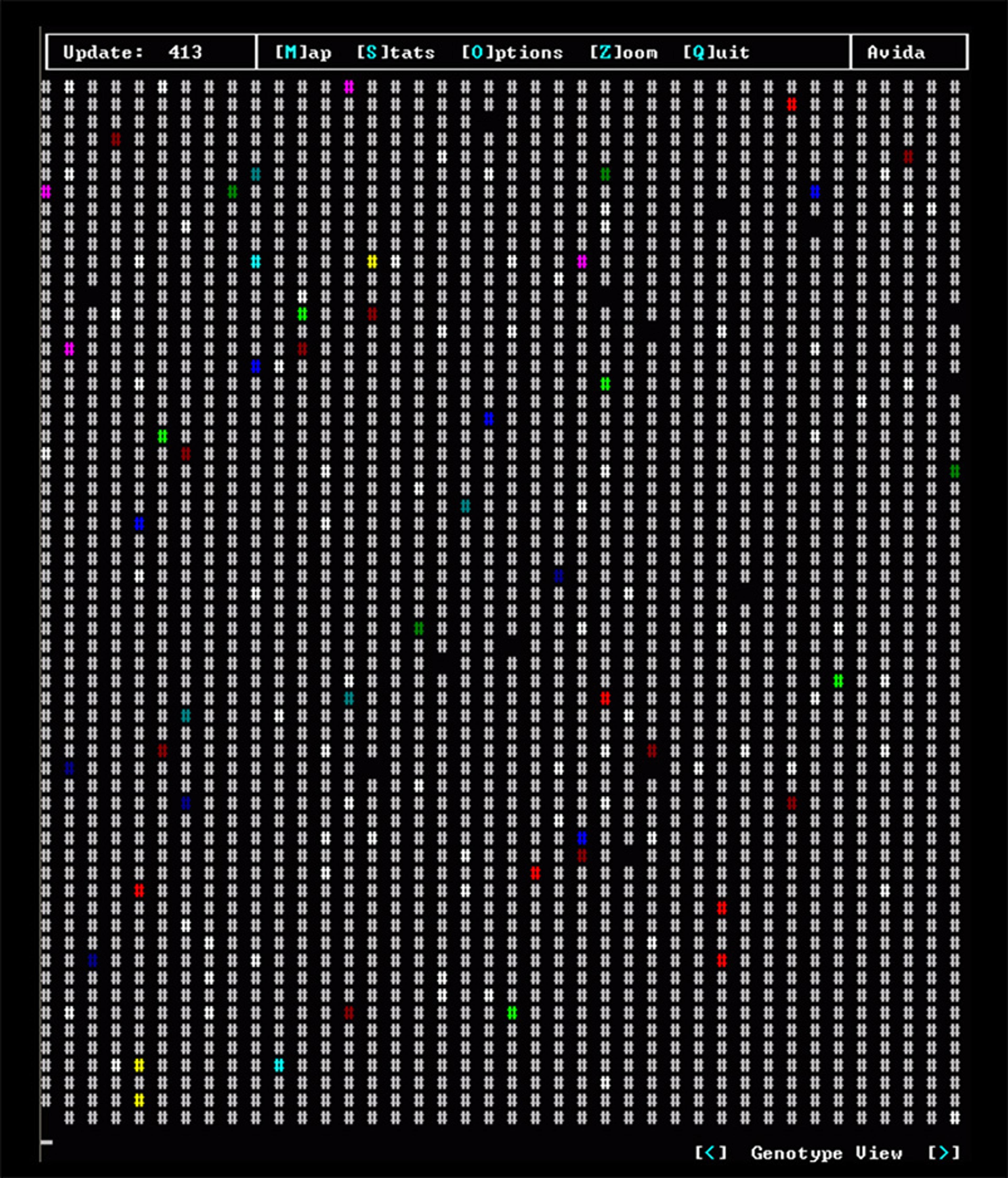

The ALife research that followed the work of Ulam, von Neumann and Langton generated a slew of fascinating formal and philosophical questions. But, like the work of von Neumann, these questions have had a limited and short-lived impact on researchers actively working on the origins of life. At the end of the 20th century, several pioneers in ALife, including the American philosopher Mark Bedau, lamented the lack of progress on these questions in an influential paper titled ‘Open Problems in Artificial Life’. The unanswered problems identified by Bedau and his eight co-authors included generating ‘a molecular proto-organism in vitro’, achieving ‘the transition to life in an artificial chemistry in silico’, demonstrating ‘the emergence of intelligence and mind in an artificial living system’ and, among other things, evaluating ‘the influence of machines on the next major evolutionary transition of life’.

These open problems went unanswered, and the paper coincided with the decline of the field. Following its publication, many of the authors embarked on different research careers, either jumping from artificial life into the adjacent field of evolutionary theory, or pursuing research projects involving chemistry rather than software and hardware.

Nevertheless, ALife produced some very sophisticated models and ideas. In the same year that Bedau and his colleagues identified problems, another group of researchers demonstrated the heights that artificial life had reached at the turn of the century. In their research paper ‘Evolution of Biological Complexity’ (2000), this group, led by the physics theorist Christoph Adami, wrote about a computer program called Avida that simulated evolutionary processes. ‘The Avida system,’ Adami and his co-authors wrote, ‘hosts populations of self-replicating computer programs in a complex and noisy environment, within a computer’s memory.’ They called these programs ‘digital organisms’, and described how they could evolve (and mutate) in seconds through programmed instructions. Each Avida organism was a single simulated genome composed of ‘a sequence of instructions that are processed as commands to the CPU of a virtual computer.’
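The general idea of genomes that are copied with errors and compete inside a computer's memory can be sketched in a few lines of Python. This is a deliberately crude toy and not Avida: real Avida organisms execute their own instructions in order to replicate, whereas here copying is done by a helper function and "fitness" is simply how well a genome matches a rewarded pattern. The instruction alphabet, the function names and every parameter value below are hypothetical.

```python
import random

INSTRUCTIONS = "abcdefgh"  # hypothetical instruction set, not Avida's


def fitness(genome, task):
    """Count the positions at which a genome matches the rewarded task."""
    return sum(g == t for g, t in zip(genome, task))


def replicate(genome, rng, mutation_rate):
    """Copy a genome, occasionally miscopying an instruction (the 'noise')."""
    return "".join(
        rng.choice(INSTRUCTIONS) if rng.random() < mutation_rate else g
        for g in genome
    )


def evolve(task, pop_size=50, generations=100, mutation_rate=0.05, seed=0):
    """Evolve a population of genomes and track the best fitness over time."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    population = [
        "".join(rng.choice(INSTRUCTIONS) for _ in task) for _ in range(pop_size)
    ]
    best_per_generation = []
    for _ in range(generations):
        population.sort(key=lambda g: fitness(g, task), reverse=True)
        best_per_generation.append(fitness(population[0], task))
        survivors = population[: pop_size // 2]
        # The fittest genome is carried over unchanged; the rest of the next
        # generation consists of noisy copies of randomly chosen survivors.
        offspring = [
            replicate(rng.choice(survivors), rng, mutation_rate)
            for _ in range(pop_size - 1)
        ]
        population = [population[0]] + offspring
    return population, best_per_generation
```

Because the fittest genome is always retained, the best score in `best_per_generation` can never fall from one generation to the next; whether it reaches a perfect match depends on the parameters chosen.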

A screenshot of the Avida software that simulates evolutionary processes. Courtesy Wikipedia

The Tron hypothesis seemed to hold promise. But, in the end, the work of Adami and others contributed more to population genetics and theoretical ecology than to research on the origins of life. This work helped bridge fundamental theorems in computation with abstract biological concepts, such as birth, competition and death, but has not broken prebiotic chemistry’s hold over dominant conceptions of life.

In recent years, things have begun to change as new concepts from physics are expanding the standard Tron hypothesis. In 2013, the physicist David Deutsch published a paper on what he called ‘constructor theory’. This theory proposed a new way of approaching physics in which computation was foundational to the Universe, at a deeper level than the laws of quantum physics or general relativity. Deutsch hoped the theory would renovate dominant ideas in conventional physics with a more general framework that eliminated many glitches, particularly in relation to quantum mechanics and statistical mechanics, while establishing a foundational status for computation. He also wanted to do all of this by providing a rigorous and consistent framework for possible and impossible transformations, which include phenomena like the movement of a body through space or the transition from a lifeless to a living planet. Constructor theory does not provide a quantitative model or offer predictions for how these transformations will happen. It is a qualitative framework for talking about possibility; it explains what can and can’t happen in the Universe in a way that goes beyond the laws of conventional physics. Deutsch’s theory is a provocative vision, and many questions remain about its utility.

Deutsch’s theory builds on von Neumann’s construction-replication model for life – the original Tron hypothesis – which in turn is built on Turing’s model of computation. Through Deutsch’s theory we begin moving away from principles of simulation as pursued through Avida organisms and silicon-based evolution, and head toward larger conceptual ideas about how life might form. Constructor theory, and other similar ideas, may be necessary for understanding the deeper origins of life, which conventional physics and chemistry have failed to adequately explain.

It is one thing to simulate life or identify the principles inherent in these simulations. It is another thing to synthesise life. In contrast to life simulated through software, the Golem hypothesis states that a synthetic life-form can be built from novel chemical constituents different to those that gave rise to the complex forms of life on Earth. This hypothesis is named after a mythical being from Jewish folklore that lives and breathes despite being wholly made from inanimate materials, typically mud. Brought to life by inscribing its forehead with a magical word, such as emét (‘truth’ in Hebrew), the golem is a form of engineered life constructed from a process different from evolution. If Tron emphasises information, then the Golem emphasises energy – it’s a way of binding information to metabolism.

In the novel The Golem (1913-14), Gustav Meyrink wrote: ‘There is nothing mysterious about it at all. It is only magic and sorcery – kishuf – that frighten men; life itches and burns like a hairshirt.’ For our purposes, the golem is an analogy for synthetic life. It is a living thing grounded in generative mud, and an abstract representation of what is possible with synthetic biology and protocells.

In the early 21st century, interest in such ‘mud’ grew as the limitations of ALife inspired a renewed focus on the role of materials and metabolisms different to those found on the prebiotic Earth. In 2005, the American chemists Steven A Benner and Michael Sismour described the two kinds of synthetic biologists who were working on problems of life: ‘One uses unnatural molecules to reproduce emergent behaviours from natural biology, with the goal of creating artificial life. The other seeks interchangeable parts from natural biology to assemble into systems that function unnaturally.’ If the latter are testing the Tron hypothesis, the former are testing the Golem hypothesis.

One of the best examples of life-like synthetic biology is the creation of genetic systems in which synthetic DNA alphabets are supported by an engineered expansion of the Watson-Crick double-stranded base-pairing mechanism. This does not involve the creation of an alternative biochemistry in a laboratory but simply the chemical synthesis of an augmented, evolvable system. In fact, all successful efforts to date in synthetic biology derive from augmentation, not creation.

The Golem hypothesis raises important questions: if life can be made from materials unlike those that gave rise to life as we know it, what are the shared principles that give rise to all living things? What are the universal properties of life-supporting chemistry?

The recent development of assembly theory offers us a way to begin answering these questions. Assembly theory helps us understand how all the objects of chemistry and biology are made. Each complex object in the Universe, from microscopic algae to towering skyscrapers, is built from unique parts, involving combinations of molecules. Assembly theory helps us understand how these parts and objects are combined, and how each generation of complexity relies on earlier combinations. Because this theory allows us to measure the ‘assembly index’ of an object – how ‘assembled’ it is; how complex its parts are – we can make determinations about evolution that are separate from those normally used to define life.

In this framework, one can identify objects that are the outcome of an evolutionary process through the number of assembly steps that have been taken, without having a prior model or knowing the details of the process. There are three requirements: first, that an object can be decomposed into building blocks; second, that a minimal rule-set exists for joining blocks together; and third, that sequences exist that describe the assembly of these building blocks into the object, where intermediate objects can be reused as new building blocks in the construction process. Very small assembly indices are characteristic of the pure physical and chemical dynamics that produce crystals or planets, but large indices in a large population of objects are taken as evidence for an evolutionary process – and a sign of life. In some ways, assembly theory is a version of the Golem hypothesis: through it we can potentially locate forms of life constructed from a process other than evolution. The idea is that a complex entity, such as a golem, requires a significant amount of time, energy and information to be assembled, and the assembly index is a measure of these requirements. This theory allows us to map certain computational concepts in such a way that we can find the shared signature of a problem-solving process.
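The three requirements can be illustrated on the simplest possible objects: strings of letters. In the Python sketch below, the building blocks are single characters, the only rule is "join two available pieces end to end", and any piece already built can be reused. The assembly index is then the smallest number of joins that produces the target. This is an assumption-laden toy: assembly theory as applied in chemistry works on molecular bonds rather than strings, and the brute-force search in the hypothetical function `assembly_index` is only practical for short inputs.

```python
from itertools import product


def assembly_index(target):
    """Smallest number of joins needed to build `target` from its characters.

    Building blocks: the distinct characters of `target`.
    Joining rule: concatenate any two available pieces.
    Reuse: every piece built so far stays available for later joins.
    """
    if len(target) <= 1:
        return 0
    basics = frozenset(target)

    def reachable(built, joins_left):
        available = basics | built
        if target in available:
            return True
        if joins_left == 0:
            return False
        for a, b in product(available, repeat=2):
            piece = a + b
            # Only contiguous fragments of the target can ever be useful.
            if piece in target and piece not in available:
                if reachable(built | {piece}, joins_left - 1):
                    return True
        return False

    # Try budgets of 1, 2, 3, ... joins until one is enough.
    joins = 1
    while not reachable(frozenset(), joins):
        joins += 1
    return joins
```

For example, `assembly_index("abcd")` is 3, because nothing can be reused, while `assembly_index("abab")` is 2: build `ab` once, then join it to itself. Reuse of earlier pieces is what keeps the index of a long, repetitive object low relative to its size.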

The golem shows us how varied living materials are likely to be in the Universe, and how the focus on a limited set of materials is likely to be overly narrow. Assembly theory shows us how any historical process will leave universal imprints on materials, no matter how diverse those materials are.

The Tron and Golem hypotheses are challenging and bold, but there are perhaps even more radical ideas about the origins of life. These ideas suggest that the emergence of complex computational systems (ie, life) in the Universe may be governed by deeper principles than we previously assumed. Organisms may have a more general objective than adaptation. What if life-forms arise not from a series of adaptive accidents, such as mutation and selection, but by attempting to solve a problem? We call this the Maupertuis hypothesis. It addresses how life might proliferate across the Universe even without the specific conditions found on Earth. So, what is this shared problem? The Maupertuis hypothesis suggests that, building on the second law of thermodynamics, life might be the Universe’s way of reaching thermodynamic equilibrium more quickly. It might be how the Universe ‘solves’ the problem of processing energy more effectively.

Pierre-Louis Moreau de Maupertuis was an 18th-century French mathematician and philosopher who formulated the ‘principle of least action’, which explains the simple trajectories of light and physical objects in space and time. In both cases, nature reveals an economy of means: light follows the fastest path between two points; physical objects move in a way that requires the least energy. And so, according to what we are calling the Maupertuis hypothesis, life can also be understood in a similar way, as the minimisation or maximisation of certain quantities. Research into the origins of life can be thought of as a search for these quantities.

For example, evolution by natural selection is a process in which repeated rounds of survival cause dominant genotypes to encode more and more information about their environment. This creates organisms that seem to be maximising adaptive information while conserving metabolic energy. And, in the process, these organisms hasten the production of entropy in the Universe. It is possible to abstract this dynamic in terms of Bayesian statistics. From this perspective, a population of evolving organisms behaves like a sampling process, with each generation selecting from the possible range of genetic variants. Over many generations, the population can update its collective ‘knowledge’ of the world through repeated rounds of differential survival (or ‘natural selection’).
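The correspondence with Bayesian statistics can be shown in a few lines of Python. In this sketch, which rests on a simplified textbook model rather than any specific study, the current frequencies of genetic variants play the role of a prior, the relative survival of each variant plays the role of a likelihood, and one round of selection is Bayes' rule: multiply, then renormalise. The function name `select` and the numbers in the usage example are hypothetical, and mutation and drift are ignored.

```python
def select(frequencies, survival):
    """One round of differential survival, written as a Bayesian update.

    frequencies: variant -> share of the population (the 'prior')
    survival:    variant -> relative chance of surviving (the 'likelihood')
    Returns the variant shares after selection (the 'posterior').
    """
    weighted = {v: frequencies[v] * survival[v] for v in frequencies}
    total = sum(weighted.values())  # mean fitness, the normalising constant
    return {v: w / total for v, w in weighted.items()}


def select_repeatedly(frequencies, survival, rounds):
    """Apply selection for several generations in a row."""
    for _ in range(rounds):
        frequencies = select(frequencies, survival)
    return frequencies
```

Starting from two equally common variants, `select({"A": 0.5, "B": 0.5}, {"A": 0.9, "B": 0.3})` gives variant A a share of about 0.75, and repeated rounds push that share towards 1. In this sense the population's composition comes to "encode" which variant suits the environment.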

This Bayesian thinking led to the free-energy principle, proposed by the neuroscientist Karl Friston in 2005. His principle has become foundational to what we are calling the Maupertuis hypothesis. Like constructor theory, the free-energy principle seeks to provide a unifying framework for all living systems. Friston’s principle extends ideas from Bayesian statistics (estimating parameters) and statistical mechanics (minimising cost functions) to describe any process of learning or adaptation, whether in humans, organisms or other living systems.

His framework seeks to explain how these living systems are driven to minimise uncertainty about their environment by learning to make better predictions. For Friston, ‘free energy’ is a kind of measurement of uncertainty: the difference between a prediction and an outcome. The larger the difference, the higher the free energy. In Friston’s framework, a living system is simply any dynamical system that can be shown to minimise free energy, to minimise uncertainty. A rock rolling down a hill is minimising potential energy but certainly not Fristonian free energy – rocks do not learn to make better predictions about their environments. However, a bacterium swimming along a nutrient gradient is minimising free energy as it extracts information from its environment to record the position of its food. A bacterium is like a rock that infers.
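One way to make this concrete is the variational free energy used in Bayesian statistics, which is assumed here to be the quantity behind the informal description above. The Python sketch below treats the bacterium as holding a belief about where its food lies, and scores that belief against a single sensory reading. The function names, the two hidden states and all the probabilities are hypothetical choices for illustration.

```python
import math


def posterior(prior, likelihood):
    """Bayes' rule: return the exact posterior and the evidence p(o)."""
    joint = {s: prior[s] * likelihood[s] for s in prior}
    evidence = sum(joint.values())
    return {s: j / evidence for s, j in joint.items()}, evidence


def free_energy(belief, prior, likelihood):
    """Variational free energy of a belief q over hidden states s.

    F = sum over s of q(s) * (log q(s) - log p(o, s)),
    where p(o, s) = prior(s) * likelihood(s) for the observation o received.
    """
    return sum(
        q * (math.log(q) - math.log(prior[s] * likelihood[s]))
        for s, q in belief.items()
        if q > 0
    )


# Hypothetical numbers: food is equally likely to lie to the left or right,
# and the sensed nutrient level is four times more probable if it is left.
PRIOR = {"left": 0.5, "right": 0.5}
LIKELIHOOD = {"left": 0.8, "right": 0.2}
```

Under these assumptions, a bacterium that ignores the reading and keeps its prior belief has a free energy of about 0.92, while one that updates to the exact posterior (0.8 left, 0.2 right) reaches about 0.69, which equals the negative log of the evidence and is the lowest value any belief can achieve. A rock has no belief to update, so there is nothing for it to minimise in this sense.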

If one is willing to accept the idea that modelling the world – by extracting information and making inferences about the environment – is constitutive of life, then life should arise everywhere and rather effortlessly. Like the principle of least action, which underpins all theories in physics, Friston’s idea suggests that minimising free energy is the action supporting every candidate form of life. And this includes biological organisms, societies and technologies. From this perspective, even machine learning models such as ChatGPT are candidate life-forms because they can take action in the world (fill it with their texts), perceive these changes during training, and learn new internal states to minimise free energy.

According to the Maupertuis hypothesis, living things are not limited to biological entities, but are, in a more general sense, machines capable of transmitting adaptive solutions to successive generations through the minimisation of free energy. Put another way, living things are capable of transmitting information from their past to their future. If that is true, then how do we define the boundaries of living things? What counts as an individual?

The information theory of individuality, developed by David Krakauer and colleagues from the Santa Fe Institute in New Mexico and collaborators from the Max Planck Institute in Leipzig in 2020, addresses this question. Responding to ideas such as Friston’s free-energy principle, we proposed that there are more fundamental ‘individuals’ than the seemingly discrete forms of life around us. These individuals are defined by their ability to transmit adaptive information through time. We call them ‘Maupertuis particles’ for the way they play a role comparable to particles moving within fields in a physical theory – like a mass moving in a gravitational field. These individuals do not need to be biological. All they need to do is transmit adaptive solutions to successive generations.

Life relies on making copies, which progressively adjust to their environment with each new generation. In traditional approaches to the origin of life, mechanisms of replication are particularly important, such as the copying of a gene within a cell. However, replication can take many other forms. The copying of a gene within a cell is just chemistry’s way of approximating the broader informational function of Maupertuis particles. Even within biology, there are many kinds of individuals: viruses that outsource most of their replication machinery to host genomes, microbial mats in which horizontal gene-transfer erodes the informatic boundary of the cell, and eusocial insects where sterile workers support a fertile queen who produces future descendants. According to the information theory of individuality, individuals can be built from different chemical foundations. What matters is that life is defined by adaptive information. The Maupertuis hypothesis allows new possibilities for what counts as a living thing: new forms and degrees of individuality.

So how do we find these individuals? According to the information theory of individuality, individuals are dynamical processes that encode adaptive information. To understand how these might be discovered, consider how different objects in our Universe are detected at different wavelengths of light. Many features of life, such as the heat signatures of metabolic activity, become visible only at longer wavelengths. Others, such as carbon flux, are visible at shorter wavelengths. In the same way, individuals are detected by different ‘informational frequencies’. Each life-form possesses a different frequency-spectrum, with each type forming increasingly strong correlations in space (larger and larger adaptations) and time (longer and longer heredity). Even within the same chemical processes, multiple different individuals can be found depending on the choice of informational filter used. Consider a multicellular organism – a human being. Viewed at a distance (using a kind of coarse-grain filter), it is a single coordinated entity. However, viewed up close (using a fine-grain filter), this single entity is teeming with somewhat independent tissues, cells and proteins. There are multiple scales of individuality.

So, what is the shared goal of these proliferating individuals? As they each expend metabolic energy to ensure reliable information-propagation, they accelerate the production of environmental entropy. In this way, by sharing adaptive information, each individual indirectly hastens the heat death of the Universe. By solving small problems locally, life creates big problems globally.

Is life problem-solving matter? When thinking about our biotic origins, it is important to remember that most chemical reactions are not connected to life, whether they take place here or elsewhere in the Universe. Chemistry alone is not enough to identify life. Instead, researchers use adaptive function – a capacity for solving problems – as the primary evidence and filter for identifying the right kinds of biotic chemistry. If life is problem-solving matter, our origins were not a miraculous or rare event governed by chemical constraints but, instead, the outcome of far more universal principles of information and computation. And if life is understood through these principles, then perhaps it has come into existence more often than we previously thought, driven by problems as big as the bang that started our abiotic universe moving 13.8 billion years ago.

The physical account of the origin and evolution of the Universe is a purely mechanical affair, explained through events such as the Big Bang, the formation of light elements, the condensation of stars and galaxies, and the formation of heavy elements. This account doesn’t involve objectives, purposes, or problems. But the physics and chemistry that gave rise to life appear to have been doing more than simply obeying the fundamental laws. At some point in the Universe’s history, matter became purposeful. It became organised in a way that allowed it to adapt to its immediate environment. It evolved from a Babbage-like Difference Engine into a Turing-like Analytical Engine. This is the threshold for the origin of life.

In the abiotic universe, physical laws, such as the law of gravitation, are like ‘calculations’ that can be performed everywhere in space and time through the same basic input-output operations. For living organisms, however, the rules of life can be modified or ‘programmed’ to solve unique biological problems – these organisms can adapt themselves and their environments. That’s why, if the abiotic universe is a Difference Engine, life is an Analytical Engine. This shift from one to the other marks the moment when matter became defined by computation and problem-solving. Certainly, specialised chemistry was required for this transition, but the fundamental revolution was not in matter but in logic.

In that moment, there emerged for the first time in the history of the Universe a big problem to give the Big Bang a run for its money. To discover this big problem – to understand how matter has been able to adapt to a seemingly endless range of environments – many new theories and abstractions for measuring, discovering, defining and synthesising life have emerged in the past century. Some researchers have synthesised life in silico. Others have experimented with new forms of matter. And others have discovered new laws that may make life as inescapable as physics.

It remains to be seen which will allow us to transcend the history of our planet.

Published in association with the Santa Fe Institute, an Aeon Strategic Partner.

For more information about the ideas in this essay, see Chris Kempes and David Krakauer’s research paper ‘The Multiple Paths to Multiple Life’ (2021), and Sara Imari Walker’s book Life as No One Knows It: The Physics of Life’s Emergence (2024).