In an age of anxiety, the words sound so reassuring: predictive policing. The first half promises an awareness of events that have not yet occurred. The second half clarifies that the future in question will be one of safety and security. Together, they perfectly match the current obsession with big data and the mathematical prediction of human actions. They also address the current obsession with crime in the Western world – especially in the United States, where this year’s presidential campaign has whipsawed between calls for law and order and cries that black lives matter. A system that effectively anticipated future crime could allow an elusive reconciliation, protecting the innocents while making sure that only the truly guilty are targeted.

It is no surprise, then, that versions of predictive policing have been adopted (or soon will be) in Atlanta, New York, Philadelphia, Seattle and dozens of other US cities. These programs are finally putting the enticing promises to a real-world test. Based on statistical analysis of crime data and mathematical modelling of criminal activity, predictive policing is intended to forecast where and when crimes will happen. The seemingly unassailable goal is to use resources to fight crime and serve communities most effectively. Police departments and city administrations have welcomed this approach, believing it can substantially cut crime. William Bratton, who in September stepped down as commissioner of New York City’s police department – the nation’s biggest – calls it the future of policing.

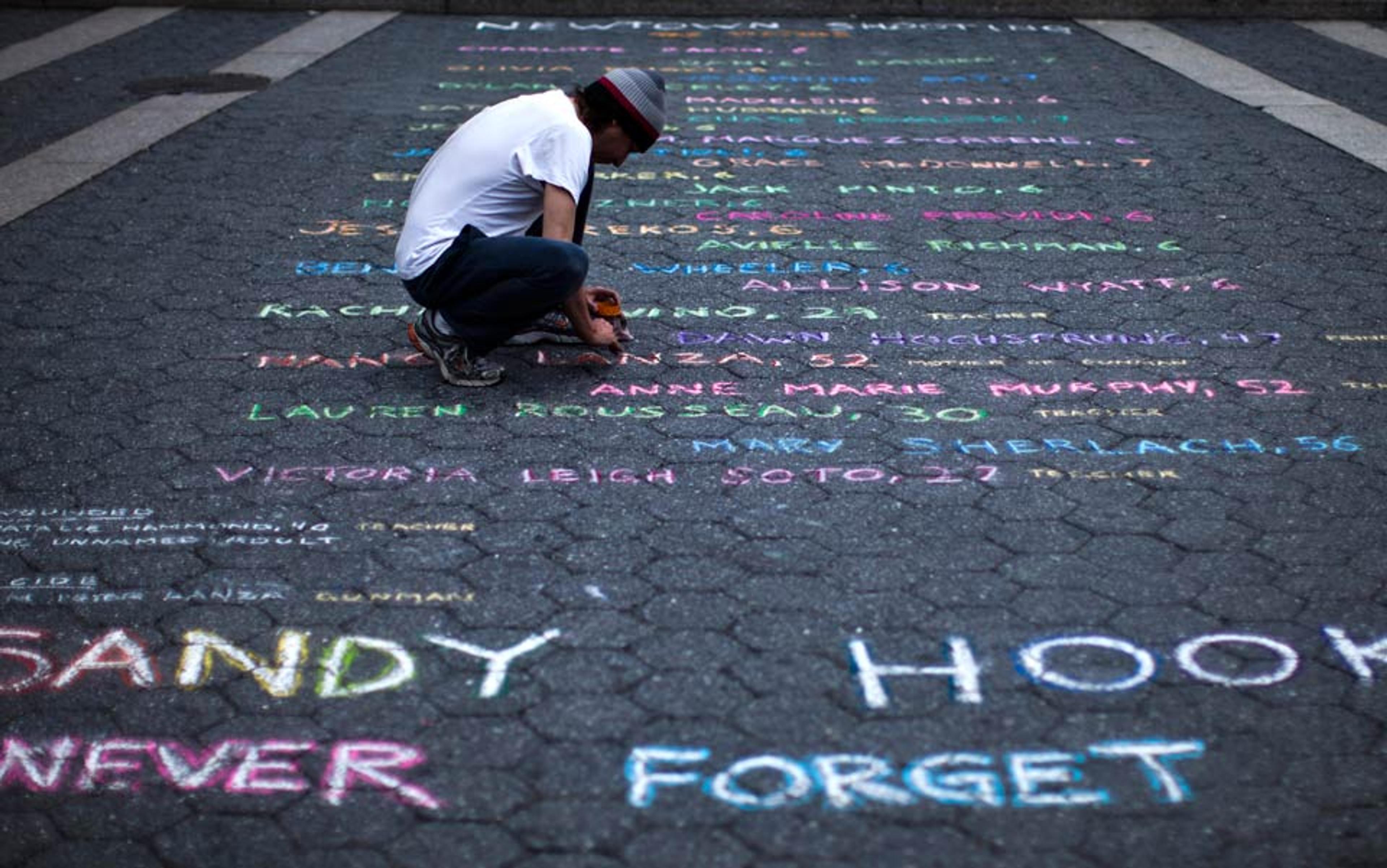

But even if predictive policing cuts crime as claimed, which is open to question, it raises grave concerns about its impact on civil rights and minorities – especially after the fatal police shooting in 2014 of Michael Brown, an unarmed 18-year-old black man, in Ferguson, a suburb of St Louis in Missouri. Subsequent fatal interactions between police and minorities, including the deaths of several unarmed black citizens through police actions and a brutal ambush of police in Dallas, spotlight the ongoing troubled relationship between the two. So does a recent Department of Justice report that found widespread racism in the operations of the Baltimore police department. Predictive policing promises to ease these problems by offering police new ways to seek and scrutinise criminal suspects without unfairly singling out minority communities. Rather than allaying public concerns, however, it might end up increasing tensions between police and minority communities.

The American Civil Liberties Union (ACLU) has issued multiple warnings that predictive policing could encourage racial profiling, and could finger individuals or groups selected by the authorities as crime-prone, or even criminal, without any crime having been committed. Equally troubling, the approach is motivated by the reductive dream of cleanly solving social problems with computers. Like any technology, predictive policing is subject to the ‘technological imperative’, the drive to carry a technology to its ultimate extent without considering its human costs. Our society is supposed to be based on fair and equal justice for all but, to many critics, predictive policing relies on a contrary vision of targeted justice, meted out according to where and how citizens happen to live as determined by computer algorithm.

The first step toward sorting out these issues is understanding the predictive process. One example is PredPol, the most widely used and publicised commercial predictive software, now operating in some 50 police departments around the US (including major cities such as Los Angeles and Atlanta) and in Kent in the UK. It works by combing through droves of old and new police records about type, place and time of crimes to analyse trends and to project upcoming criminal activity including property crime, drug activity and more. These results are used to highlight ‘hot spots’ 500 feet by 500 feet square, an area of several city blocks, that are likely to be the sites of certain crimes in the near future. Officers can then go out on daily patrol armed with these locations, instructed to give them extra attention with an eye for criminal activity.
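To make the idea of a hot-spot grid concrete, here is a minimal sketch in Python. It is not PredPol's method, which is proprietary; it simply counts past incidents per 500-foot cell and ranks the cells, and every name and coordinate in it is invented for illustration.

```python
from collections import Counter

CELL_FEET = 500  # side length of one grid cell, matching the 500 ft boxes in the text


def cell_of(x_feet, y_feet, cell=CELL_FEET):
    """Map a location (in feet from an arbitrary origin) to its grid cell index."""
    return (int(x_feet // cell), int(y_feet // cell))


def top_hot_spots(incidents, k=2):
    """Return the k cells with the most past incidents, as (cell, count) pairs."""
    counts = Counter(cell_of(x, y) for x, y in incidents)
    # Sort by count (descending), then by cell index so ties resolve deterministically.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))[:k]


# Hypothetical incident coordinates, invented purely for illustration.
incidents = [(120, 80), (450, 300), (510, 20), (700, 90), (980, 470), (1600, 1200)]
print(top_hot_spots(incidents))  # [((1, 0), 3), ((0, 0), 2)]
```

A raw count like this only looks backwards. The commercial systems described here go further by weighting recent events and projecting forward, but the output has the same shape: a short ranked list of small map cells handed to officers on patrol.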

Statistical modelling of criminal behaviour might seem far-fetched, but we encounter statistical distributions of human characteristics all the time; think of the ‘bell curve’ that describes things such as height or test scores. Criminal actions betray their own statistical patterns. Consider burglaries: we don’t know when or where a specific burglary will happen, but statistical analysis has shown that, once it does, other burglaries tend to cluster around it.

Statistics-based policing leads to the understandable fear that it will turn into automated ‘policing by algorithm’, though police departments have been using statistics for a long time, largely due to Bratton. In 1994, during his first tour as NYPD commissioner, he introduced CompStat, a tool to track crime statistics. The experiment was widely considered a success, as crime rates fell substantially (though they dropped in many other large cities too). By 2008, as the Los Angeles chief of police, he was suggesting that compiled data could be used to predict crime, and worked within LAPD and with federal agencies to develop this approach. The project received considerable media coverage that soon converged on the PredPol software, whose roots in Los Angeles gave it a strong early connection to LAPD.

These roots have academic origins that go back to the anthropologist Jeffrey Brantingham at the University of California, Los Angeles, who studies how people make choices within complex environments. In the field, this might mean examining how tribal hunter-gatherers find their next meal. Applying his knowledge to criminal behaviour, Brantingham concluded that urban criminals use similar processes when they choose homes to burgle or cars to steal. To turn this insight into a predictive model embodying ‘good social science and good math’, as Brantingham puts it, he recruited several UCLA mathematicians to work on the problem in 2010 and 2011.

One of them, George Mohler (now at Santa Clara University in California), found a promising approach. As he explains it, human behaviour often shows ‘a well-defined underlying statistical distribution’. Knowing the distribution and drawing on past records, a data scientist can develop algorithms that give ‘fairly accurate estimates of the probability of various behaviours, from clicking on an ad to committing, or being the victim of, a crime’. Mohler’s big finding was that ‘self-exciting point processes’, the statistical models that describe the aftershocks that follow earthquakes, also describe the temporal and geographic distributions of burglaries and other crimes. This result, published in the Journal of the American Statistical Association in 2011, is the basis of the ETAS algorithm (epidemic type aftershock sequence) at the heart of the PredPol software. In 2012, Brantingham and Mohler founded the PredPol company in Santa Cruz, California, and they remain involved with it. In 2015, it was projected to raise revenue in excess of $5 million.
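For readers who want to see what ‘self-exciting’ means, a generic textbook form of such a process in time alone can be written as below. The symbols are standard notation chosen here for illustration, not taken from the PredPol software or quoted from Mohler's paper, and the exponential decay is one common assumption among several.

```latex
\lambda(t) \;=\; \mu \;+\; \sum_{i \,:\, t_i < t} g(t - t_i),
\qquad
g(u) \;=\; \theta\,\omega\,e^{-\omega u}
```

Here \lambda(t) is the expected rate of events at time t, \mu is a constant background rate, the t_i are the times of past events, \theta is the average number of follow-on events each event triggers, and \omega sets how quickly that extra risk fades. Each burglary, like each earthquake, briefly raises the rate of further events before the process settles back toward \mu. A space-time version adds a similar decay with distance from each past event.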

PredPol is not alone. Police departments can choose from among several competing products including HunchLab, whose development by the Philadelphia-based Azavea Corporation began in 2008. HunchLab is used in Philadelphia and in Miami, was recently installed by the St Louis County Police Department after the Ferguson shooting, and is under test by NYPD. This is emblematic of the rapid spread of predictive policing. According to the criminologist Craig Uchida of Justice and Security Strategies Incorporated: ‘Every police department in cities of 100,000 people and up will be using some form of predictive policing in the next few years.’

HunchLab combines several criminological models and data sets. Like PredPol, it seeks repetitive patterns; but according to HunchLab’s product manager Jeremy Heffner, crimes such as homicide need more data to build a reliable model, since the ETAS algorithm applies mostly to property crimes. HunchLab adds risk terrain modelling, which correlates where crimes happen with specific types of locations such as bars and bus stops; temporal and weather data, such as season of the year and temperature; and what HunchLab describes, without particulars, as ‘socioeconomic indicators’ and ‘historic crime levels’. As noted by Andrew Ferguson, a legal scholar at the University of the District of Columbia, this extended model crosses an important line, escalating from the property crime that PredPol emphasises to violent crime.

HunchLab’s analysis yields lists of types of crimes, from theft to homicide; their level of risk for different areas in a city; and recommendations about deploying police resources to counter these criminal activities. This information can be given to officers starting on patrol or, in what is a step beyond the PredPol approach, sent via mobile devices to officers in the field to manage them in real time based on their current locations.

The looming question is, do PredPol and HunchLab really reduce crime? The answer so far is incomplete at best. PredPol publicises crime-reduction numbers that are, it says, the result of adopting its software but Azavea has not done so. Heffner says that the company focuses instead on learning how police departments use HunchLab to change officer behaviour or otherwise impact crime levels. Meanwhile, much of what we perceive about the value of predictive policing rests on PredPol’s claims.

These seemingly show great success. The company reports that the LAPD Foothill Division saw a 13 per cent reduction in general crime in the four months after PredPol was installed, compared with a 0.4 per cent increase in areas without it. In Atlanta, crime fell by 8 per cent and 9 per cent in two police zones using the software but remained flat or increased in four zones without it; and an ‘aggregate’ 19 per cent decrease in crime across the city was attributed mostly to PredPol. The company also cites double-digit percentage decreases in crime after the software entered service in smaller cities.

Such numbers have impressed elected leaders in city halls across the US. Eager to find answers to both long-standing and current issues in policing, they have quickly adopted the predictive approach. In 2013, Seattle’s mayor Mike McGinn announced that an earlier trial of PredPol would be expanded citywide. In 2014, Atlanta’s mayor Kasim Reed praised predictive policing in The Wall Street Journal, writing that Atlanta’s use of PredPol resulted in crime ‘falling below the 40-year lows we have already seen’. He added: ‘In the future, police will perfect the use of predictive analytics to thwart crimes before they occur’ – a welcome prediction today when belief in the ‘unbiased’ nature of computer algorithms would seem to smooth out sharp political differences about crime levels in the US.

PredPol is hardly an unbiased source, however, and the limited external analyses so far have not shown similar successes. In 2013, Chicago police used data to identify and put on a ‘hot list’ some 400 people considered likely to be involved in fatal shootings as shooters or victims. The number has since been raised to 1,400, many of whom have received ‘custom notifications’ – home visits by police to warn them they are known to the department. However, a just-published RAND Corporation study of the original 2013 project shows that it did not reduce homicides. The report states that individuals on the hot list ‘are not more or less likely to become a victim of a homicide or shooting than the comparison group’, but they have a higher probability of being arrested for a shooting. Nevertheless, a similar effort is now under way in Kansas City, Missouri. A separate RAND assessment of a predictive program developed and used by the police department in Shreveport, Louisiana concluded: ‘The program did not generate a statistically significant reduction in property crime.’

The biggest dataset so far comes from a long-term study of PredPol as used by LAPD and the Kent police, published in 2015 in the Journal of the American Statistical Association. This is not an independent evaluation like the third-party RAND reports, as would be the gold standard; five of its seven authors (including Brantingham and Mohler) have or had connections to PredPol. Still, its peer review, design and statistical analysis make it worth consideration.

From 2011 to 2013, the authors carried out a ‘double blind’ experiment. Officers going on patrol in both police departments were guided at random toward areas predicted either by the ETAS algorithm or by human crime-analysts. The type of information being used was unknown to each officer, and known to only a few administrators. On average, ETAS predicted nearly twice as much crime as the human analysts did. As officers increased patrol time on the ETAS hot spots, that correlated with an average 7 per cent decrease in crime. In contrast, patrol time spent on hot spots predicted by the analysts did not correlate with a statistically significant reduction in crime.

These results carry caveats. If ETAS outdoes human analysts in predicting crime, that might be because it better integrates old and new data. But with only four human analysts of unknown effectiveness included in the study, the comparison is not wholly convincing. Also, the crime reduction should be considered in the context of the statistical truism ‘correlation is not causation’. More patrol time on ETAS hot spots could indeed be reducing crime; then again, on days when there is little crime for whatever reason, officers could have more time to visit suspect areas.

The 7 per cent correlation suggests that ETAS is doing something right, but it is premature to assert that PredPol unquestionably reduces crime, and certainly not at the double-digit levels that PredPol reports. Nevertheless, PredPol is driven by data and should be judged by data. The results from this study in the field credibly show that the ETAS algorithm has some value, and that predictive policing is worth testing more fully to confirm its effectiveness in reducing crime.

Even if that effectiveness were to become firmly established, questions remain about how the technology affects policing. PredPol sees positive results for police-community interactions, claiming that the software helps officers ‘build relationships with residents to engage them in community crime-watch efforts’. After a US Department of Justice report pointed to distrust of police as a factor in the shooting of Brown and the resulting riots in Ferguson, police in St Louis similarly hope that HunchLab will improve community relations. Perhaps the Department of Justice report about the Baltimore police will inspire a similar reaction.

Many activists, defenders of civil rights and legal experts see the opposite, that predictive technology stokes community resentment by unfairly targeting innocent people, minorities and the vulnerable, and threatens Fourth Amendment safeguards against unreasonable search and seizure. Earlier this year, Matthew Harwood and Jay Stanley of the ACLU wrote: ‘Civil libertarians and civil rights activists… tend to view [predictive policing technology] as a set of potential new ways for the police to continue a long history of profiling and pre-convicting poor and minority youth.’ These authors condemned the technology for its potential to send ‘a flood of officers into the very same neighbourhoods they’ve always over-policed’ and for its lack of transparency.

Transparency is essential because much of the potential for abuse depends on the integrity of the data used by the predictive software. Skewed data would distort predictions and judgments about their value; but since police culture resists revealing its methods, crime data is generally closed to scrutiny. It is hard to determine if the incidence of crime has been underreported, as NYPD and LAPD have recently been caught doing, or if racial factors taint the data. PredPol and HunchLab state that they use no racial or ethnic information, but the data they do use might already embed racial bias that would carry forward in new crime predictions to further entrench the bias. Also unknown is how PredPol and HunchLab manipulate the data because their algorithms are proprietary, as are those used by other brands of predictive software.

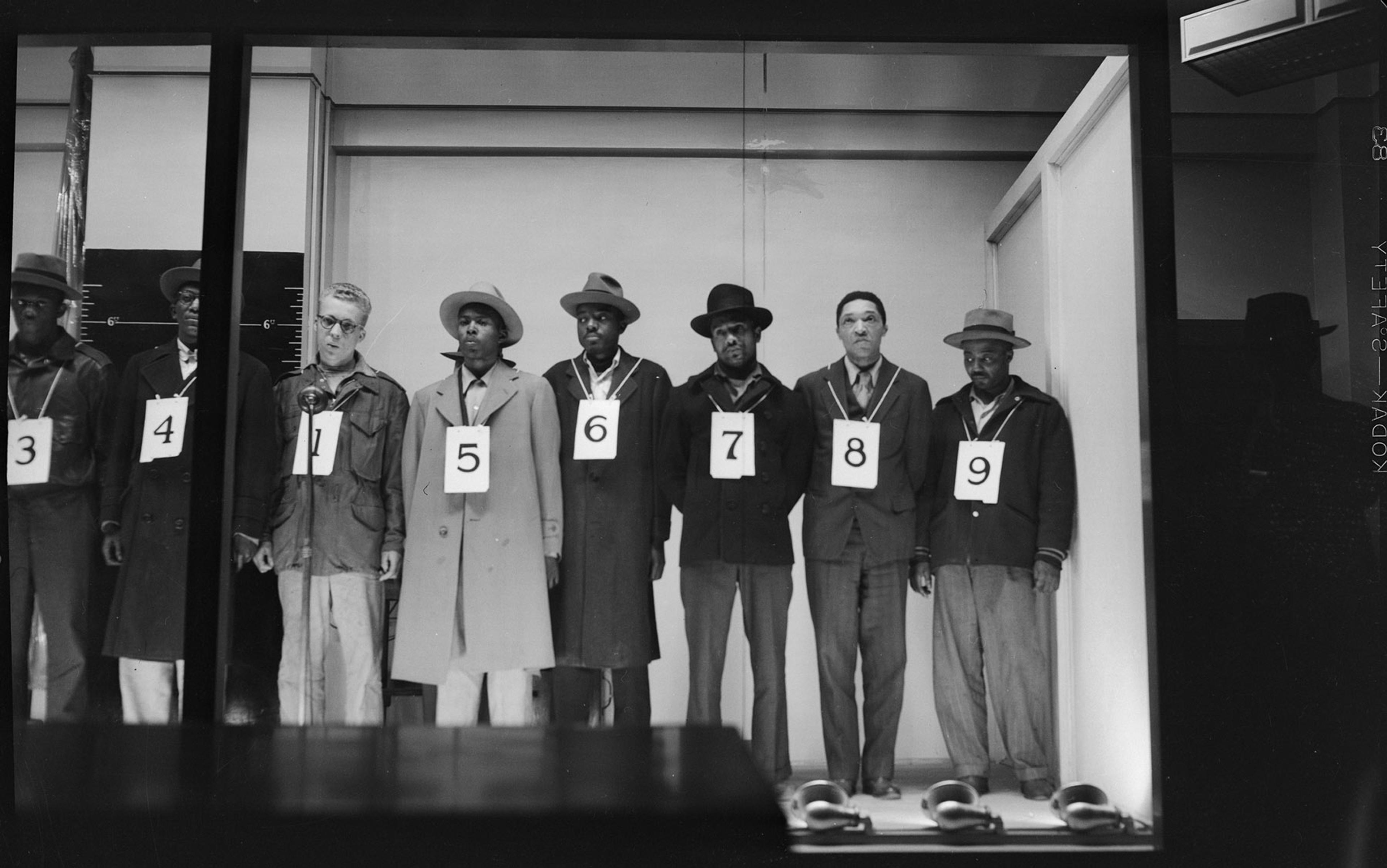

Issues with predictive methods are amplified in stop-and-frisk policing, where a police officer may stop and search anyone who shows ‘reasonable’ signs of criminal activity. Though upheld by the US Supreme Court in 1968, this practice is highly controversial today. A majority of those stopped but mostly not arrested are black or Latino. In a recent New York Times forum, experts in policing and the law disagreed about whether stop-and-frisk truly reduces crime in New York City and elsewhere, or rather is ineffective and merely worsens the already poor relations between police and minority communities so painfully seen in Dallas, St Louis and elsewhere.

Predictive policing enters into the debate because stop-and-frisk occurs mostly in high-crime areas (HCAs in law-enforcement lingo), that is, the hot spots at the centre of predictive analysis. As noted by Alexander Kipperman, a Philadelphia lawyer who has studied the impacts of predictive policing, a court decision in 2000 suggested that ‘[police] officers might reasonably view otherwise innocent behaviour as suspicious when within a HCA’. Kipperman, along with Ferguson, points out that officers might therefore feel justified in stopping people without ‘reasonable suspicion’ let alone ‘probable cause’, merely because of their presence in areas defined by predictive algorithms, thus weakening Fourth Amendment rights, especially among minorities. Even without a full stop-and-frisk approach, predictive policing means that more people, whether innocent or guilty, are scrutinised in hot zones.

Extending predictive policing to violent crime, as HunchLab does, greatly raises the stakes for trusting its recommendations. Raising even more concerns, Azavea is developing a HunchLab module that tracks ex-offenders to ‘predict the likelihood of each offender committing another crime and [prioritise] suspects for investigation in connection with new crime events’. That is the same approach used by the Chicago police to create their targeted ‘hot list’.

Many of the problems with predictive policing seen so far arise from giving algorithms too much weight compared with human judgment; blindly following a computer program that directs more officers to a certain location at a certain time does nothing to guarantee fair and effective use of that force. Yet algorithms can augment human abilities rather than replace them. For officers on the street, we might find that combining personal experience with guidance from predictive software enables them to deal better with what they encounter daily.

Therefore, besides conducting further testing to determine the true effectiveness of predictive policing, we need to train police officers in how best to blend big-data recommendations with their own street knowledge. In that regard, the challenges of computer-guided policing are not fundamentally different from those of earlier statistical approaches, with one caveat: the actions of officers in the street responding to computer output in real time are harder to control than thoughtful long-term analysis of statistical trends.

As Kipperman and Ferguson point out, independent review and broad protections are an essential part of rising to those challenges. Ferguson notes that ‘the criminal justice system has eagerly embraced a data-driven future without significant political oversight or public discussion’. He proposes measures such as an outside organisation to audit crime data, correct errors and bias, and acknowledge them when they are found; further studies of the social science and criminology behind predictive policing; accountability at the internal police level and the external community level; and an understanding that a focus on hot spots and statistics diverts attention from examining the root causes of crime.

Kipperman proposes that predictive policing be separated from police departments altogether and carried out instead by ‘independent and neutral agencies’, to reduce external pressures and maintain Fourth Amendment rights. Sensible and just as these recommendations are, however, it is difficult to see how the necessary new structures would be built and administered. The gathering and use of reliable national crime statistics, for instance, has itself long been an issue in the law enforcement and criminal justice communities.

Like other new technologies in the digital age, such as the rapid development of self-driving cars, the predictive approach follows its own imperatives. ‘Predictive policing has far outpaced any legal and political accountability,’ Ferguson writes. He worries that the seductive appeal of a data-driven approach might have ‘overwhelmed considerations of utility or effectiveness and ignored considerations of fairness or justice’. Surely, if any technology requires careful scrutiny, it is one that directly affects people’s lives and futures under our justice system. With the will to put proper oversight in place – and with appropriate efforts from the police, the courts, civil rights advocates and an over-arching national agency such as the Department of Justice – maybe we can ensure that the benefits of predictive policing exceed its costs.