In the movie Sliding Doors (1998), a woman named Helen, played by Gwyneth Paltrow, rushes to catch a train on the London Underground, but just misses it, watching helplessly from the platform as the doors slide shut. The film explores two alternative universes, comparing the missed-train universe to a parallel reality in which she caught the train just in time. It wasn’t a cinematic masterpiece – the critics aggregated at Rotten Tomatoes give it only a 63 per cent ‘fresh’ rating – but it vividly confronts a question that many of us have asked at one time or another: if events had unfolded slightly differently, what would the world be like?

This question, applied to the history of life on our planet, has long beguiled thinkers of all stripes. Was the appearance of intelligent life an evolutionary fluke, or was it inevitable? This was one of the central themes in Stephen Jay Gould’s book, Wonderful Life: The Burgess Shale and the Nature of History (1989). If we re-played the tape of evolution, so to speak, would Homo sapiens – or something like it – arise once again, or was humanity’s emergence contingent on a highly improbable set of circumstances?

At first glance, everything that’s happened during the 3.8 billion-year history of life on our planet seems to have depended quite critically on all that came before. And Homo sapiens arrived on the scene only 200,000 years ago. The world got along just fine without us for billions of years. Gould didn’t mention chaos theory in his book, but he described it perfectly: ‘Little quirks at the outset, occurring for no particular reason, unleash cascades of consequences that make a particular future seem inevitable in retrospect,’ he wrote. ‘But the slightest early nudge contacts a different groove, and history veers into another plausible channel, diverging continually from its original pathway.’

One of the first lucky breaks in our story occurred at the dawn of biological complexity, when unicellular life evolved into multicellular. Single-cell organisms appeared relatively early in Earth’s history, around a billion years after the planet itself formed, but multicellular life is a much more recent development, requiring a further 2.5 billion years. Perhaps this step was inevitable, especially if biological complexity increases over time – but does it? Evolution, we’re told, does not have a ‘direction’, and biologists balk at any mention of ‘progress’. (The most despised image of all is the ubiquitous monkey-to-man diagram found in older textbooks – and in newer ones too, if only because the authors feel the need to denounce it.) And yet, when we look at the fossil record, we do, in fact, see, on average, a gradual increase in complexity.

But a closer look takes some of the mystery out of this progression. As Gould pointed out, life had to begin simply – which means that ‘up’ was the only direction for it to go. And indeed, a recent experiment suggests that the transition from unicellular to multicellular life was, perhaps, less of a hurdle than previously imagined. In a lab at the University of Minnesota, the evolutionary microbiologist William Ratcliff and his colleagues watched a single-celled yeast (Saccharomyces cerevisiae) evolve into many-cell clusters in fewer than 60 days. The clusters even displayed some complex behaviours – including a kind of division of labour, with some cells dying so that others could grow and reproduce.

But even if evolution has a direction, happenstance can still intervene. Most disruptive are the mass extinctions that plague Earth’s ecosystems with alarming regularity. The most catastrophic of these, the Permian-Triassic extinction, occurred about 250 million years ago, and wiped out 96 per cent of marine species, along with 70 per cent of land-dwellers. Gould examined the winners and losers of a more ancient mass extinction, the Cambrian-Ordovician extinction, which happened 488 million years ago, and found the poster child for biological luck – an eel-like creature known as Pikaia gracilens, which might be the precursor of all vertebrates. Had it not survived, the world could well be spineless.

At every stage, there are winners and losers. The Permian-Triassic extinction, devastating as it might have been, was good news for the dinosaurs, which appeared shortly afterward and flourished for some 165 million years. But then, the dinosaurs endured their own slings and arrows – including the mother of all projectiles, in the form of an asteroid that slammed into the Gulf of Mexico 66 million years ago. Debris kicked up by the impact blocked sunlight and might have triggered catastrophic global cooling. (The impact theory, although favoured, has some competition; some researchers believe volcanic eruptions to be the culprit. And just this spring, a new theory, with cosmological leanings, was put forward: as our solar system moves through the galaxy, it’s suggested, the Earth could encounter regions unusually dense in ‘dark matter’, possibly triggering a catastrophe.) Whatever the cause, it was a disaster for the dinosaurs – but good news for the little furry creatures that became, eventually, us.

It would be an exaggeration to say that Simon Conway Morris played Moriarty to Gould’s Sherlock Holmes – but the two men certainly found themselves on opposite sides of a deep intellectual divide. To Gould, the late Harvard paleontologist, evolution was deeply contingent, an endless series of fluke events, the biological equivalent of just-caught and just-missed subway trains. By contrast, Conway Morris, a professor of paleobiology at the University of Cambridge, focused on convergence: evolution, he argued, is not random, but strongly constrained; where environmental niches exist, evolution finds a way to fill them, often with similar creatures.

Everywhere Conway Morris looked, he saw convergence. He points, for example, to the appearance of tiger-like animals in both North and South America – animals that arose along separate evolutionary paths (the North American versions were placental mammals, the ancestors of today’s wild cats; in South America, they were marsupials). And then there are the tenrecs of Madagascar – which look remarkably similar to the hedgehogs found in Europe, and yet evolved separately. One might also look to the air: evolution has found at least four distinct ways for life to take wing, first with insects and later with pterosaurs, birds and bats.

Perhaps the most studied case of convergence is the evolution of the eye. The English evolutionary biologist Richard Dawkins points out that the eye has evolved at least 40 times (and perhaps as many as 60 times) over history. (Eyes evolve so easily, in fact, that a fish known as Bathylychnops exilis, also dubbed the javelin spookfish, evolved a second pair of eyes – putting it one eye ahead of the fish that haunts Mr Burns in the TV show The Simpsons.)

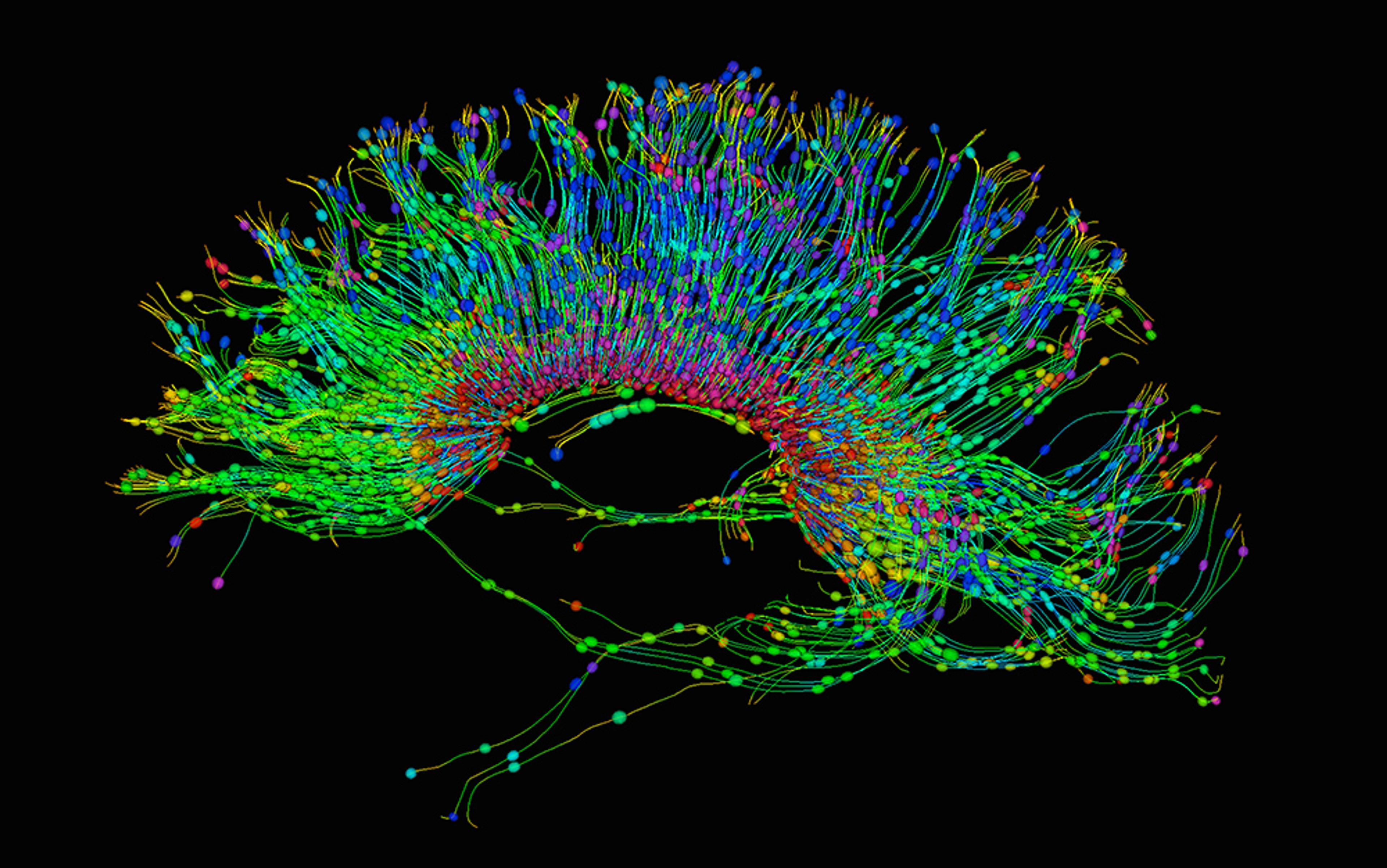

Could the contingency/convergence debate be settled in the laboratory? For nearly 30 years, the microbial ecologist Richard Lenski at Michigan State University has been cultivating 12 separate populations of E coli. To date, they’ve evolved through more than 60,000 generations. For the most part, the lineages show little difference from each other – but there have been a few notable exceptions. In one flask, the bacteria have split off into two size-groups, one much larger than the other; the divergence began a few years into the experiment. Some years later, another lineage learned how to feed off a different chemical in its flask, consuming citrate (while the other lineages consumed only glucose); the lineage grew rapidly in total population. Lenski’s data is provocative, but ultimately inconclusive in terms of settling the contingency/convergence question. Both are clearly relevant, but difficult to quantify. Even the cleverest experiments could prove unsatisfying.

Unfortunately, none of this seems to have very much to do with us. Back when that monkey-to-man diagram prevailed, it was easy to imagine a sort of evolutionary ‘ladder’, with Homo sapiens (naturally) occupying the top rung. However, the hominin lineage is now seen to be quite ‘bushy’, with multiple, parallel evolutionary paths, going back to the time when our ancestors diverged from the ancestors of today’s chimpanzees, around 7 million years ago, according to some researchers.

During most of the hominin saga, there is little ‘progress’ to be seen. There was tool use, beginning around 3.3 million years ago – but innovation was sporadic. New types of implements, observes Ian Tattersall, emeritus curator with the American Museum of Natural History in New York, ‘were typically introduced only at intervals of hundreds of thousands or even millions of years, with minimal refinement in between’. Indeed, the ‘normal’ state of affairs was ‘technological stability’ that could easily last ‘tens or even hundreds of thousands of years’, according to the paleoanthropologist Daniel Adler of the University of Connecticut. Today, technology spreads in the blink of an eye, but in prehistoric times there was no guarantee an idea would spread at all, mainly due to low populations.

When populations are low, extinction looms. According to one theory, the eruption of a ‘supervolcano’ known as Toba, in Indonesia, some 70,000 years ago, triggered a period of global cooling that lasted decades, perhaps centuries. Human populations shrank to between 3,000 and 10,000 individuals. We made it through this ‘genetic bottleneck’ – but it’s worth remembering that every other hominin species has vanished. Some, such as Homo erectus, lived for perhaps 1.6 million years; others, such as Homo floresiensis, winked in and out of existence in less than 100,000 years.

Throughout human prehistory, biological change and technological change ran in parallel. Brains were increasing in size – but this was not unique to our ancestors, and can be seen across multiple hominin species. Something very complicated was going on – a kind of arms race, Tattersall suggests, in which cognitive capacity and technology reinforced each other. At the same time, each branch of the human evolutionary tree was forced to adapt to an ever-changing climate. At the time of the dinosaurs, the Earth was some 10 degrees warmer than today – but by 30 million years ago, a cooling trend had begun. The ice ages of the Pleistocene were still to come, but already the forested woodlands where the first primates evolved were receding. Tropical forests were giving way to open grasslands. An animal adapted to life in the trees faced great perils on the open savannah. But if that same animal was capable of co‑operation and tool use, that would have obvious advantages. In the end, only one hominin was left standing.

Tattersall and many others have suggested that symbolic thought and complex language gave Homo sapiens the edge, but there were physiological requirements that had to be met before the gabfest could begin. The larynx of most primates sits higher up in the throat. Only when it moved lower in the throat could our larynx produce the wide range of sounds associated with human language. Speaking also requires intricate co‑ordination of vocal cords, lips, tongue and mouth. That co‑ordination is likely facilitated by two specific regions of the left cerebral cortex, which probably took their modern form only within the last 2 million years.

Language isn’t only a matter of physiology. It likely evolved alongside increasingly complex social behaviour, made possible through enhanced cognitive powers, including the development of what psychologists call ‘theory of mind’ – the ability to discern that others have thoughts and intentions of their own. Equally crucial was the ability to think about objects and events that are not immediately before our eyes. We could remember the past. We could envision the future – and plan for it. We had created a brand-new world within the mind, but the pay‑off was in the real, physical world, with big game animals succumbing not to our muscle power – which was puny – but to our brainpower.

Language almost certainly evolved hand in hand with improving technology. If our ancestors could imagine tracking and killing a mammoth, they could also picture the kinds of stone tools that would make the job easier – both the initial slaughter and the later dismembering. And, crucially, once a tool was invented, that knowledge could be passed on, not only by showing but also by telling. ‘Here’s how you sharpen an axe…’

By now, of course, we’re discussing not only biological evolution, but also cultural evolution. In cultural matters, the p-word is even more despised than in biology – but it is harder to ignore. Cultural innovation seems to show directionality. Once you invent a spear, or an arrow, or a plough, you don’t un-invent it.

One afternoon this winter, I sat with the paleoarcheologist Michael Chazan in his laboratory at the University of Toronto, drinking tea and discussing the subtle interplay between culture and biology. Chazan is an expert on the early use of stone tools and fire. He’s done extensive fieldwork in Europe, the Middle East and Africa. He’s spent many years investigating artifacts from the Wonderwerk Cave in South Africa, which has seen 2 million years of continuous human activity; it might be the site where our ancestors first learned to control fire. The tables in Chazan’s lab are strewn with stone axes and arrowheads of all shapes and sizes, and his shelves are stacked high with white cardboard boxes containing still more Stone Age artifacts.

‘In technology, you really can see trends through time,’ Chazan says. ‘Things change in a directional fashion, and they do so because of the internal logic of how technology works.’ But there’s also a social context in which technology develops – and that’s a crucial part of the equation. Consider how our ancestors learned to control fire. Learning how to keep a fire burning is great – but until we learned to start a fire at will, a semi-permanent base camp would be needed in order for the technique to be passed down. For many historians, agriculture stands out as the last great innovation on the path to modern civilisation, in part because it provided a permanent ‘base camp’ for larger human communities. With the planting and harvesting of wheat and barley, and the domestication of livestock, it can seem like a short and perhaps inevitable step to the walls of Jericho and the pyramids of Giza. This doesn’t remove contingency from the equation. ‘There is nothing inevitable about the origins of agriculture,’ Chazan says. ‘It didn’t have to happen – but once it happened, it’s irreversible.’

And what about all of our other cultural developments, from music and mathematics to humour and religion? There was another, very similar hominin species living alongside us, until 35,000 years ago: Homo neanderthalensis, the Neanderthals. Once thought to be brutish and dim-witted, Neanderthals have come to seem nearly human: whether (and how well) they spoke is not known, but they had big brains, used sophisticated tools, and buried their dead (although in graves less elaborate than those of early Homo sapiens). In a human-free world, would Neanderthals have filled the role of planet-transforming biped?

‘There’s no anatomical reason to say they were any less clever than modern humans,’ says Chazan. ‘There’s no archeological context where we have Neanderthals and modern humans doing the same thing, where you can say: “Oh, modern humans were so much smarter at this thing than Neanderthals.”’ Recent findings suggest that Neanderthals adorned themselves with jewellery and feathers, and painted their bodies with black and red pigments. It’s even been suggested that simple line-drawings found in a cave near Gibraltar are a Neanderthal ‘map’, or at least that they served some symbolic function. But that interpretation has been the subject of much debate, and in fact all of these claims are controversial.

I ask Chazan if the Neanderthals could have come to dominate the planet, had they not been forced to compete with our own species. ‘I don’t see any reason why not,’ he says. ‘Would we end up with cities of Neanderthals? Who knows. I can’t think of any reason to say: “No, that couldn’t happen.”’ Suddenly I’m imagining Neanderthals, rather than humans, riding the Piccadilly Line.

But perhaps the evolution of intelligent primates was itself a fluke. Intelligence doesn’t seem to be a ‘good idea’ that evolution produces over and over again, at least not the way the eye is. The late evolutionary biologist Ernst Mayr once pointed out that of the (approximately) 30 known phyla of animals, intelligence evolved only once (in the chordates) – and within the thousands of subdivisions of chordates, high intelligence is found only in primates, ‘and even there only in one small subdivision’, as Mayr put it. Mayr was willing to grant the possibility of a lesser degree of intelligence to cephalopods, and today some researchers would add dolphins and corvids to the list – but he was right to cast our linguistic, symbol-using species as an oddity.

These questions might seem rather remote, given that we can’t actually rewind the tape of life, but there might be another way of investigating these alternative histories.

Since ancient times, humans have wondered whether there is intelligent life elsewhere in the cosmos. The fact that we haven’t heard so much as a peep from ET suggests that intelligent aliens are not ubiquitous. The rest of the galaxy could be lifeless, or perhaps there’s primitive life of some kind, but some evolutionary hurdle – one of the many that we’ve been looking at, or perhaps something quite different – is staggeringly difficult to surmount. Or perhaps we are merely alone for now.

The evolution of intelligence could be such an unlikely (and lengthy) process that, while many intelligent civilisations might arise, most will do so in the far future. The same argument can be applied to our own planet. Complex life is still in its youth, relative to the age of the Universe; perhaps our planet’s future will contain intelligences superior to our own, possibly – but not necessarily – our descendants. Maybe intelligent life is inevitable, and we’re merely the first to the party.