The standard theory of cosmology is called the Lambda cold dark matter (ΛCDM) model. As that name suggests, the theory postulates the existence of dark matter – a mysterious substance that (according to the theorists) comprises the bulk of the matter in the Universe. It is widely embraced. Every cosmologist working today was educated in the Standard Model tradition, and virtually all of them take the existence of dark matter for granted. In the words of the Nobel Prize winner P J E Peebles: ‘The evidence for the dark matter of the hot Big Bang cosmology is about as good as it gets in natural science.’

There is one problem, however. For four decades and counting, scientists have failed to detect the dark matter particles in terrestrial laboratories. You might think this would have generated some doubts about the standard cosmological model, but all indications are to the contrary. According to the 2014 edition of the prestigious Review of Particle Physics: ‘The concordance model [of cosmology] is now well established, and there seems little room left for any dramatic revision of this paradigm.’ Still, shouldn’t the lack of experimental confirmation at least give us pause?

In fact, there are competing cosmological theories, and not all of them contain dark matter. The most successful competitor is called modified Newtonian dynamics (MOND). Observations that are explained under the Standard Model by invoking dark matter are explained under MOND by postulating a modification to the theory of gravity. If scientists had confirmed the existence of the dark particles, there would be little motivation to explore such theories as MOND. But given the absence of any detections, the existence of a viable alternative theory that lacks dark matter invites us to ask: does dark matter really exist?

Philosophers of science are fascinated by such situations, and it is easy to see why. The traditional way of assessing the truth or falsity of a theory is by testing its predictions. If a prediction is confirmed, we tend to believe the theory; if it is refuted, we tend not to believe it. And so, if two theories are equally capable of explaining the observations, there would seem to be no way to decide between them.

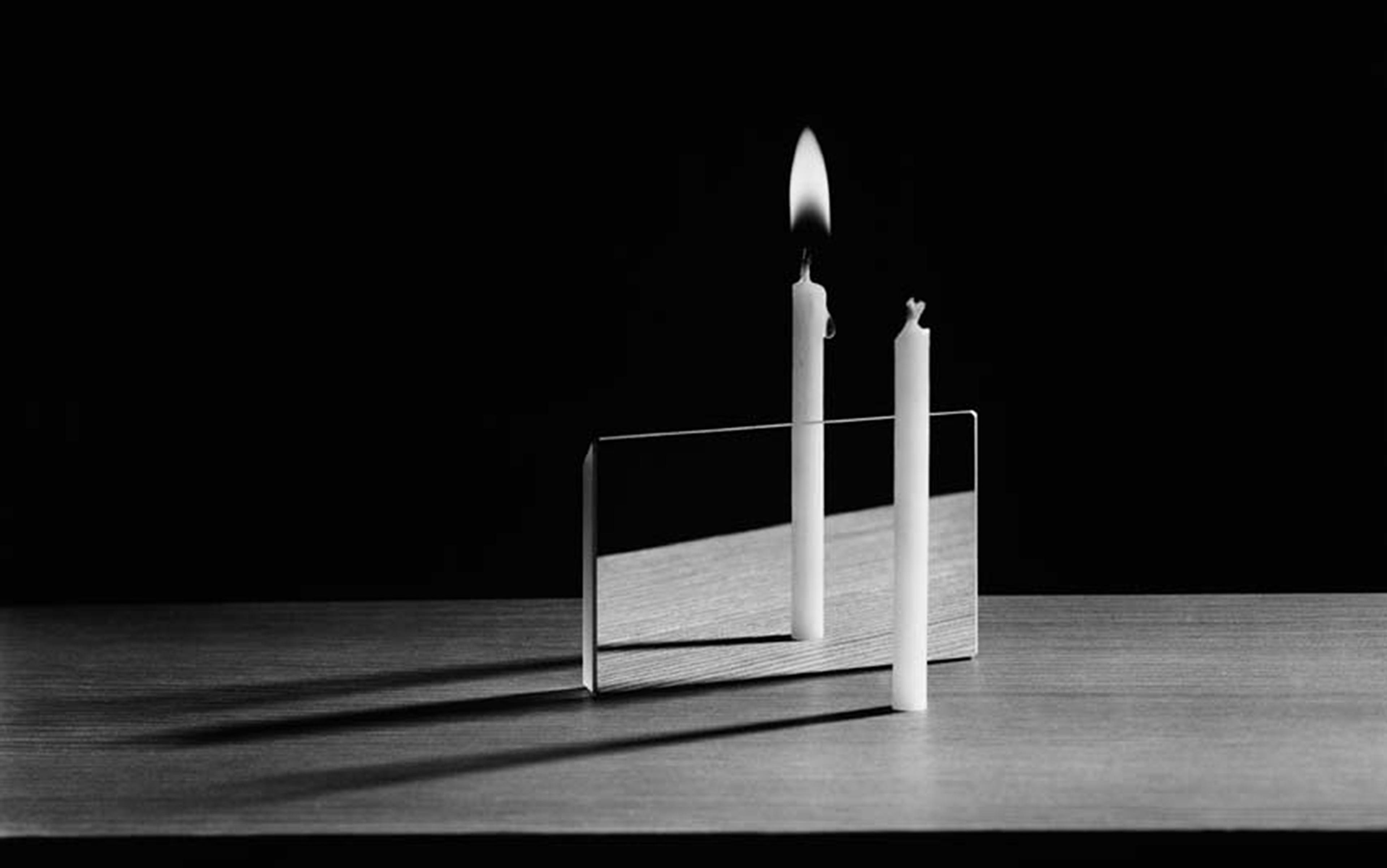

What is a poor scientist to do? How is she to decide? It turns out that the philosophers have some suggestions. They point out that scientific theories can achieve correspondence with the facts in two very different ways. The ‘bad’ way is via post-hoc accommodation: the theory is adjusted, or augmented, to bring it in line with each new piece of data as it becomes available. The ‘good’ way is via prior prediction: the theory correctly predicts facts in advance of their discovery, without – and this is crucial – any adjustments to the original theory.

It is probably safe to say that no theory gets everything exactly right on the first try. But philosophers are nearly unanimous in arguing that successful, prior prediction of a fact assigns a greater warrant for belief in the predicting theory than post-hoc accommodation of that fact. For instance, the philosopher of science Peter Lipton wrote:

‘When data need to be accommodated … the scientist knows the answer she must get, and she does whatever it takes to get it … In the case of prediction, by contrast, there is no motive for fudging, since the scientist does not know the right answer in advance … As a result, if the prediction turns out to have been correct, it provides stronger reason to believe the theory that generated it.’

Some philosophers go so far as to argue that the only data that can lend any support to a theory are data that were predicted in advance of experimental confirmation; in the words of the philosopher Imre Lakatos, ‘the only relevant evidence is the evidence anticipated by a theory’. Since only one (at most) of these two cosmological theories can be correct, you might expect that only one of them (at most) manages to achieve correspondence with the facts in the preferred way. That expectation turns out to be exactly correct. And (spoiler alert!) it is not the Standard Model that is the favoured theory according to the philosophers’ criterion. It’s MOND.

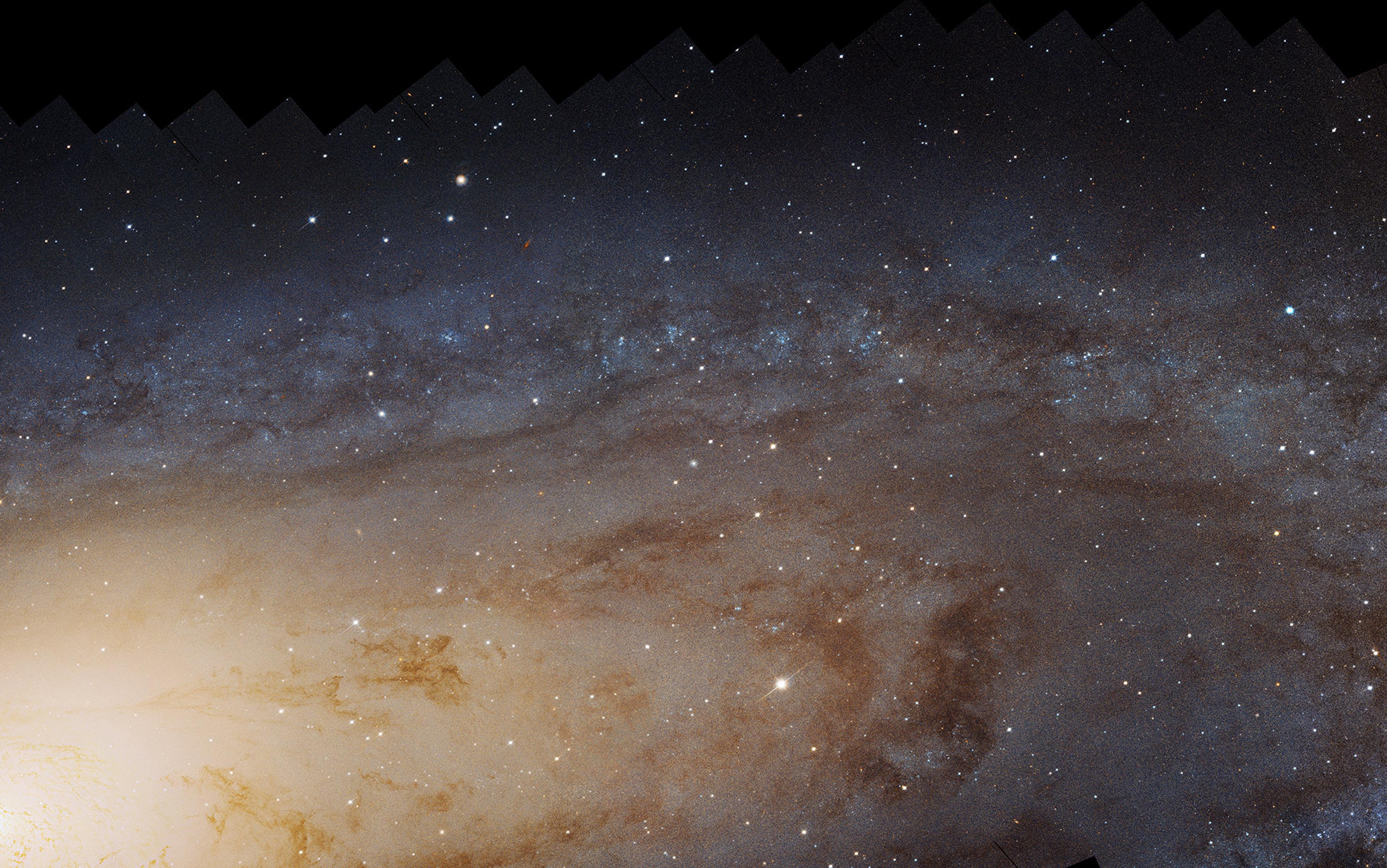

Dark matter was a response to an anomaly that arose, in the late 1970s, from observations of spiral galaxies such as our Milky Way. The speed at which stars and gas clouds orbit about the centre of a galaxy should be predictable given the observed distribution of matter in the galaxy. The assumption here is that the gravitational force from the observed matter (stars, gas) is responsible for maintaining the stars in their circular orbits, just as the Sun’s gravity maintains the planets in their orbits. But this prediction was decisively contradicted by the observations. It was found that, sufficiently far from the centre of every spiral galaxy, orbital speeds are always higher than predicted. This anomaly needed to be accounted for.
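To make the Newtonian expectation concrete, here is a minimal sketch of that prediction. It assumes, purely for illustration, that all of a galaxy's visible matter can be treated as a single point mass at the centre; the galaxy mass and the radii are hypothetical values, and the function name is my own.

```python
import math

G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30  # solar mass, kg
KPC = 3.086e19    # one kiloparsec, m


def newtonian_speed(mass_kg, radius_m):
    """Circular orbital speed around a central point mass under Newtonian gravity."""
    return math.sqrt(G * mass_kg / radius_m)


# Hypothetical galaxy: 5e10 solar masses of stars and gas, idealised as a point mass.
mass = 5e10 * M_SUN
for r_kpc in (10, 20, 40, 80):
    v_kms = newtonian_speed(mass, r_kpc * KPC) / 1e3
    print(f"r = {r_kpc:3d} kpc  ->  v = {v_kms:6.1f} km/s")
```

Under these assumptions the predicted speed falls as the inverse square root of the radius, so it halves each time the distance from the centre quadruples. That steady decline is what the observations of spiral galaxies failed to show.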

Cosmologists had a solution. They postulated that every galaxy is embedded in a ‘dark matter halo’, a roughly spherical cloud composed of some substance that generates just the right amount of extra gravity needed to explain the high orbital speeds. Since we do not observe this matter directly, it must consist of some kind of elementary particle that does not interact with electromagnetic radiation (that includes light, but also radio waves, gamma rays etc). No particle was known at the time to have the requisite properties, nor have particle physicists yet found evidence in their laboratory experiments for such a particle, in spite of looking very hard since the early 1980s. The cosmologists had their solution to the rotation-curve anomaly, but they lacked the hard data to back it up.

In 1983, an alternative explanation for the rotation-curve anomaly was proposed by Mordehai Milgrom, a physicist at the Weizmann Institute of Science in Israel. Milgrom noticed that the anomalous data had two striking regularities that were not accounted for by the dark matter hypothesis. First: orbital speeds are not simply larger than predicted. In every galaxy, the orbital speed rises as one moves away from the centre and then remains at a high value as far out as observations permit. Astronomers call this property ‘asymptotic flatness of the rotation curve’. Second: the anomalously high orbital speeds invariably appear in regions of space where accelerations due to gravity drop below a certain characteristic, and very small, value. That is: one can predict, in any galaxy, exactly where the motions will begin to deviate from Newtonian dynamics.

This characteristic acceleration value, which Milgrom dubbed a₀, is much lower than the acceleration due to the Sun’s gravity anywhere in our solar system. So, by measuring orbital speeds in the outskirts of spiral galaxies, astronomers were testing gravitational theory in a way that had never been done before. Milgrom knew that there were many instances in the history of science where the need for a new theory became apparent only when an existing theory was tested in a new way. And so he took seriously the possibility that the theory of gravity might simply be wrong.

In three papers published in 1983, Milgrom proposed a simple modification to Isaac Newton’s laws that relate gravitational force to acceleration. (Albert Einstein’s theory reduces to Newton’s simpler theory in the regime of galaxies.) He showed that his modification correctly predicts the asymptotic flatness of orbital rotation curves.
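A rough numerical sketch can show how a modification of this kind flattens the rotation curve. Several assumptions here are mine rather than the essay's: the galaxy is idealised as a point mass, the characteristic acceleration is set to the commonly quoted value of about 1.2e-10 m/s², and the transition between the Newtonian and Milgromian regimes uses one convenient interpolating function, mu(x) = x / (1 + x), which is only one of several forms found in the MOND literature.

```python
import math

G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30  # solar mass, kg
KPC = 3.086e19    # one kiloparsec, m
A0 = 1.2e-10      # assumed value of Milgrom's characteristic acceleration, m/s^2


def newtonian_accel(mass_kg, radius_m):
    """Newtonian gravitational acceleration at a given distance from a point mass."""
    return G * mass_kg / radius_m**2


def mond_accel(mass_kg, radius_m):
    """Solve a * mu(a / A0) = a_N for a, with the assumed mu(x) = x / (1 + x)."""
    a_n = newtonian_accel(mass_kg, radius_m)
    return 0.5 * a_n + math.sqrt(0.25 * a_n**2 + a_n * A0)


def circular_speed(accel, radius_m):
    """Speed of a circular orbit with the given centripetal acceleration."""
    return math.sqrt(accel * radius_m)


# Hypothetical galaxy: 5e10 solar masses of normal matter, idealised as a point mass.
mass = 5e10 * M_SUN
for r_kpc in (5, 20, 80, 320):
    r = r_kpc * KPC
    v_newton = circular_speed(newtonian_accel(mass, r), r) / 1e3
    v_mond = circular_speed(mond_accel(mass, r), r) / 1e3
    print(f"r = {r_kpc:3d} kpc  Newton: {v_newton:6.1f} km/s  MOND: {v_mond:6.1f} km/s")
```

In this sketch the two curves nearly coincide close to the centre, where accelerations are well above the characteristic value. Far out, the Newtonian speed keeps falling while the modified speed levels off at the fourth root of G times the mass times the characteristic acceleration.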

Milgrom was careful to acknowledge that he had designed his hypothesis in order to produce that known result. But his theory also predicted that the effective gravitational force was computable given the observed distribution of normal matter alone – not just in the case of ultra-low accelerations, but everywhere. And when astronomers tested this bold prediction, they found that it was correct. Milgrom’s hypothesis correctly predicts the rotation curve of every galaxy that has been tested in this way. And it does so without postulating the presence of dark matter.

Note the stark difference between the way in which the two theories explain the anomalous rotation-curve data. The standard cosmological model executes an ad-hoc manoeuvre: it simply postulates the existence of whatever amount and distribution of dark matter are required to reconcile the observed stellar motions with Newton’s laws. Whereas Milgrom’s hypothesis correctly predicts orbital speeds given the observed distribution of normal matter alone. No Standard Model theorist has ever come up with an algorithm that is capable of doing anything as impressive as that.

Many philosophers would argue that this predictive success of Milgrom’s theory gives us a warrant for believing that his theory – as opposed to the Standard Model – is correct. But the story does not end there. Milgrom’s theory makes a number of other novel predictions that have been confirmed by observational astronomers. Doing justice to all of these would take a book (and, in fact, I’ve recently written such a book), but I will mention one example here. Milgrom’s theory predicts that a galaxy’s total mass in normal (non-dark) matter, which astrophysicists like to call the ‘baryonic mass’, is proportional to the fourth power of the rotation speed measured far from the galaxy’s centre. This novel prediction also turned out to be correct. (For obscure historical reasons, Milgrom’s predicted relation is nowadays called the ‘baryonic Tully-Fisher relation’, or BTFR.)
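The fourth-power relation can be written down in a few lines. The sketch below uses the standard low-acceleration form of the prediction, in which the baryonic mass equals the fourth power of the asymptotic speed divided by the product of Newton's constant and the characteristic acceleration. The numerical value of that acceleration (about 1.2e-10 m/s²) and the sample speeds are assumptions chosen for illustration, and the function name is hypothetical.

```python
G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
A0 = 1.2e-10      # assumed value of Milgrom's characteristic acceleration, m/s^2
M_SUN = 1.989e30  # solar mass, kg


def baryonic_mass(v_flat_ms):
    """Baryonic mass (kg) implied by an asymptotic rotation speed (m/s)."""
    return v_flat_ms**4 / (G * A0)


# Illustrative asymptotic speeds, from a dwarf galaxy to a large spiral.
for v_kms in (50, 100, 200):
    mass_solar = baryonic_mass(v_kms * 1e3) / M_SUN
    print(f"v_flat = {v_kms:3d} km/s  ->  baryonic mass ~ {mass_solar:.2e} solar masses")
```

Because the speed enters as a fourth power, doubling the asymptotic rotation speed implies sixteen times the baryonic mass, with no free parameter to tune from one galaxy to the next.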

Astrophysics is rife with correlations between observed quantities, but exact relations such as the BTFR are unheard of: they are the sort of thing one associates with a high-level theory (think: the ideal gas law of statistical thermodynamics), not with a messy discipline like astrophysics.

What would a Standard Model cosmologist predict for a relation such as the BTFR? The simple answer is: nothing. Their theory contains no prescription for relating a galaxy’s baryonic mass to its asymptotic rotation speed. But astrophysicists are diligent and clever, and they have come up with a way to try to accommodate relations like the BTFR under the ΛCDM model. Their scheme is to carry out large-scale computer simulations of the formation and evolution of galaxies, starting from uniform initial conditions in the early Universe. The simulated galaxies can then be ‘observed’ and their properties tabulated. The earliest attempts of this sort yielded nothing very similar to Milgrom’s predicted relation. But in the decades since then, theorists have come up with more-or-less plausible mechanisms for linking the normal and dark matter in their simulated galaxies, in such a way that they can obtain something approximating the BTFR. The currently favoured mechanism, called ‘feedback’, is based on the (reasonable) idea that some of the gas that would otherwise form into stars is pushed out from the dark halo by the stars themselves, via stellar winds or supernova explosions. If the ‘feedback prescription’ is chosen carefully enough, just the right amount of gas can be ejected, from dark halos of each size, to yield the correct relation.

Standard Model theorists have not yet succeeded in reproducing the BTFR via their simulations. But let’s suppose that, one day, they do succeed. Would that success lend support to their cosmological theory, in the same way that the successful ab initio prediction of the relation by Milgrom lends support to his hypothesis?

Philosophers of science have an answer: a resounding ‘no’. John Worrall, for instance, writes that ‘when one theory has accounted for a set of facts by parameter-adjustment, while a rival accounts for the same facts directly and without contrivance, then the rival does, but the first does not, derive support from those facts.’ On this view, it doesn’t matter whether the parameters being adjusted are meant to represent actual physical processes (such as feedback) or not. The fact that Milgrom’s hypothesis correctly predicts the relation ‘without contrivance’ means that it ‘wins’: it is the sole hypothesis that derives support from those data.

Now, the preference on the part of philosophers for scientific theories that predict in advance previously unknown laws or relations is quite in line with the preference that scientists themselves have expressed, over and over again, going back centuries. For instance, Gottfried Wilhelm Leibniz wrote in 1678: ‘Those hypotheses deserve the highest praise … by whose aid predictions can be made, even about phenomena or observations which have not been tested before.’ And so the question naturally arises: why have most cosmologists been so dismissive of MOND, given that MOND exhibits the very quality that scientists prize so highly?

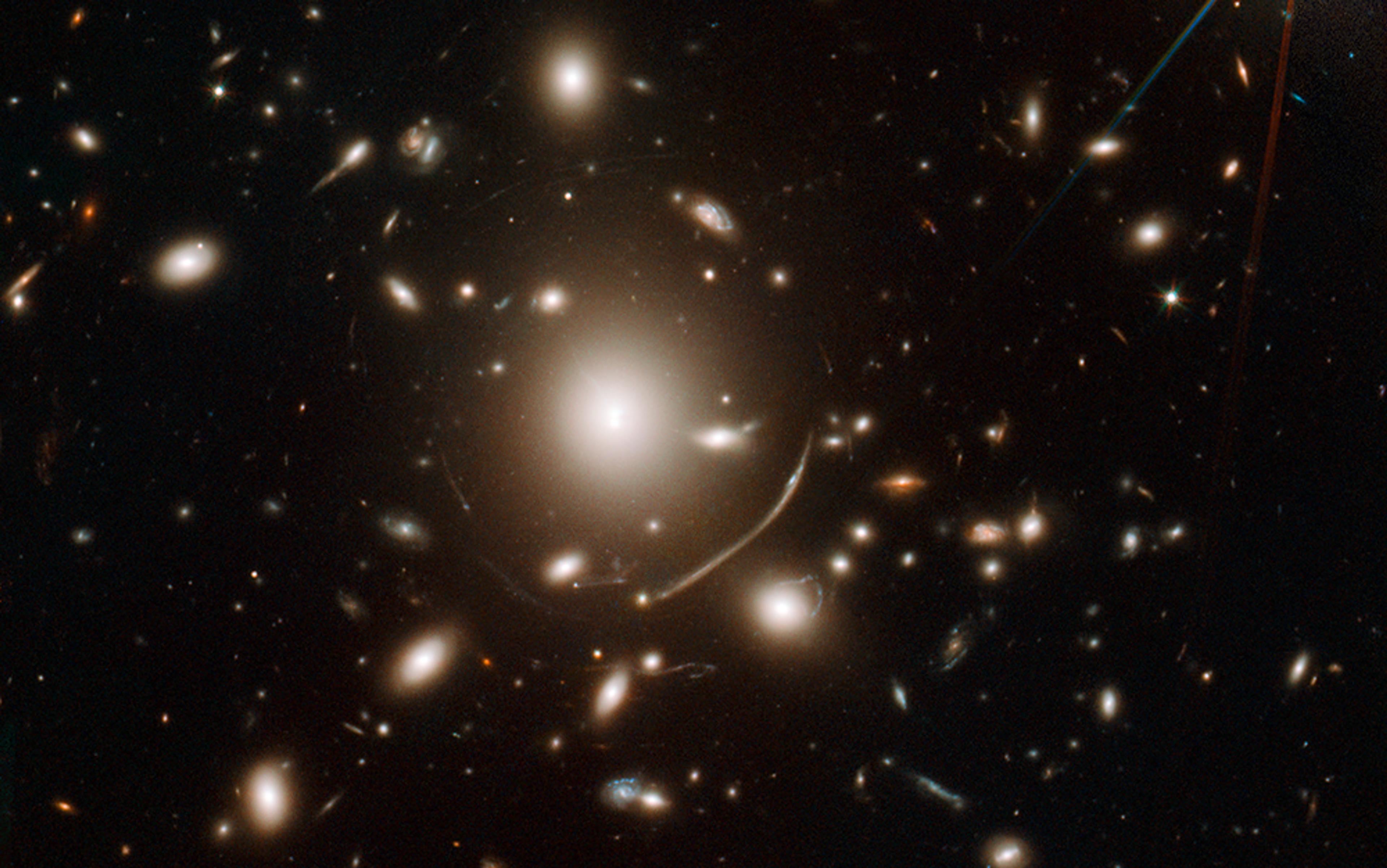

Up until a few years ago, this disdainful attitude was defensible. One of the most touted successes of the standard cosmological model is its ability to reproduce (‘accommodate’ would be a better word) the so-called cosmic microwave background (CMB) spectrum – the statistical properties of temperature fluctuations in the CMB, the Universe-filling radiation that was produced soon after the Big Bang. Milgrom’s theory did not originally do this, at least not as well as the Standard Model. But that situation has now changed. Just last year, two theorists in the Czech Republic, Constantinos Skordis and Tom Złośnik, showed that there exist fully relativistic versions of Milgrom’s hypothesis that are perfectly capable of reproducing the CMB data without dark matter. This relativistic version of MOND, which they call RMOND, incorporates an additional field that mimics the behaviour of particle dark matter on the largest cosmological scales, and yields Milgromian dynamics on the scale of galaxies.

Prior to this success, a number of Standard Model cosmologists had gone on record to say that fitting the CMB data was the single thing that MOND needed to do in order to be taken seriously. For instance, the cosmologist Ruth Durrer told The Atlantic: ‘A theory must do really well to agree with [the CMB] data. This is the bottleneck.’ Now that this bottleneck no longer exists, has the Standard Model community embraced the RMOND theory as a bona fide competitor to theirs?

Not so much. An argument that is making the rounds nowadays goes something like this: ‘Yes, [R]MOND works, but it is so much more complicated than our theory, which just invokes one thing – dark matter – to explain the observations.’ This criticism misses the mark. The issue is not that RMOND is too complicated; it is that the dark matter of the Standard Model is too simple. Milgromian theorists have understood for a long time that there is just no way that a formless entity such as dark matter can spontaneously rearrange itself – and keep rearranging itself – so as to produce the striking regularities that we observe in the kinematics of nearby galaxies. Skordis and Złośnik achieve this by postulating an almost minimal (it seems to me) modification to Einstein’s theory. I can hardly imagine that any truly successful theory could be much simpler than theirs.

Like almost all scientific theories, both MOND and the Standard Model are faced with anomalies: data that seem difficult to explain. In the case of MOND, I am aware of just one important anomaly: it has to do with the observed dynamics of galaxy clusters. The ΛCDM model is in a seemingly worse state. That theory fails to adequately explain any of MOND’s successful new predictions, and, in addition, Standard Model theorists have identified at least half a dozen puzzles that are (in my opinion) at least as problematic for their theory as the galaxy cluster anomaly is for MOND. My point here is not that one should judge the two theories by cataloguing their failures. Rather, the novel predictive successes of MOND give us a warrant for believing (at least provisionally) that this theory is correct, and therefore that it is worth the effort to try to solve the existing puzzles.

The argument from predictive success is a good reason to favour MOND over the standard cosmological model. But one can hope for more: for what Karl Popper called a ‘crucial experiment’, an experimental or observational result that decisively favours one theory over the other. For instance, Einstein’s theory implies something called the strong equivalence principle (SEP): that the internal dynamics of a system (such as a galaxy) that is freely moving in an external gravitational field should not depend on the external field strength. Milgrom’s theory violates the SEP, and in fact it has recently been claimed, based on observations of galaxies, that the SEP is violated. That result, if confirmed, would rule out Einstein’s theory of gravity while at the same time confirming a prediction of Milgrom’s theory.

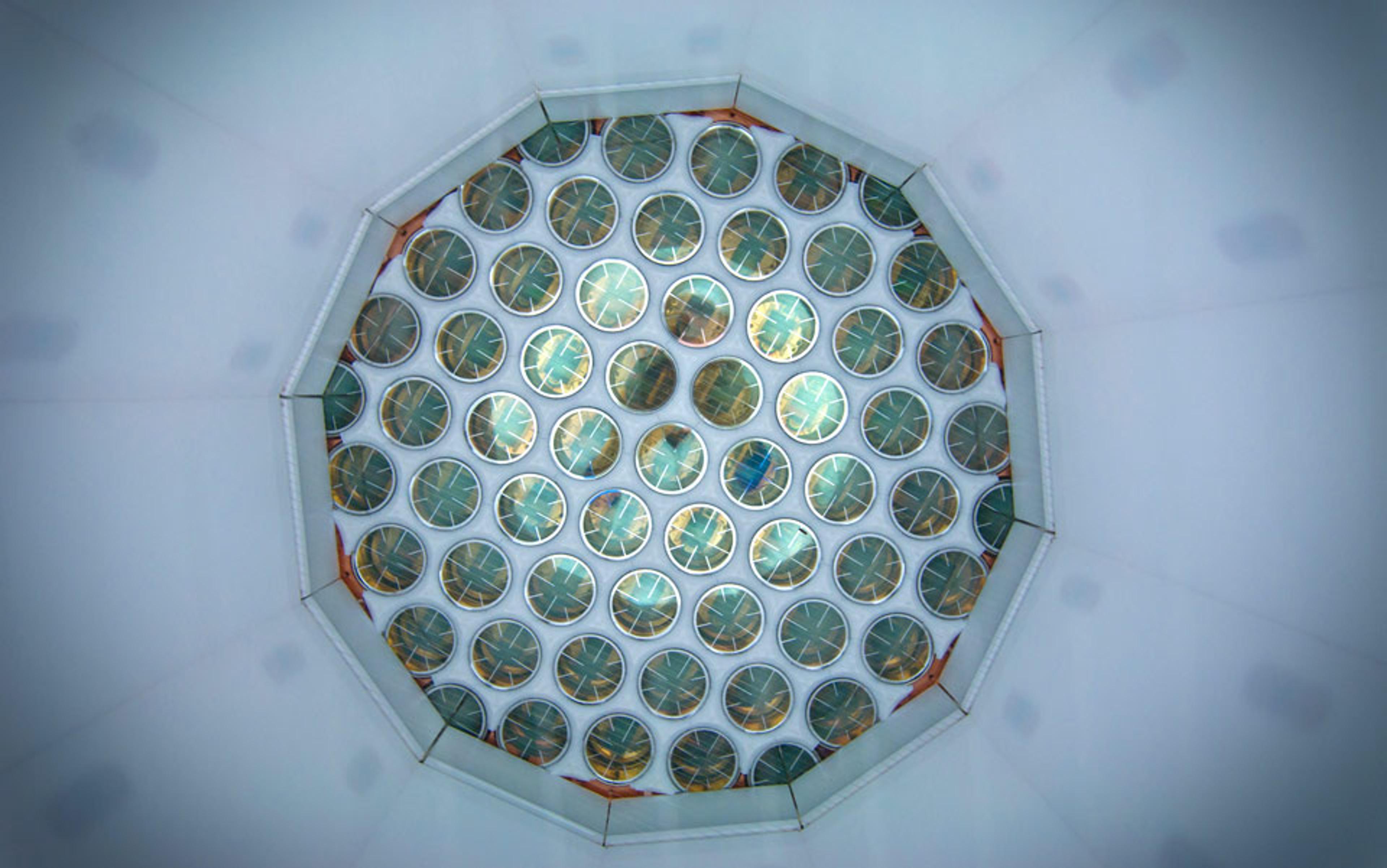

A decisive result in favour of the ΛCDM model would be a laboratory detection of dark matter particles. Standard Model cosmologists are aware of this, and since the early 1980s a number of sophisticated (and very expensive) detectors have been in operation that were specially designed to record the presence of the particles. About half a dozen such experiments are currently active; the sensitivity of state-of-the-art detectors is about 10 million times that of the earliest experiments. But no event has yet been observed that can reasonably be interpreted as the track of a dark matter particle.

Of course, the failure to detect the dark matter particles is expected under MOND. Does this negative experimental result constitute evidence in support of MOND? Most scientists would probably say ‘no’; as Carl Sagan said (about something completely different): ‘Absence of evidence is not evidence of absence.’ And, in fact, Standard Model cosmologists routinely argue that the persistent failure to detect the particles can be accounted for by assuming that the particles, even if present, undergo negligibly weak interactions with the normal matter in their detectors.

I think the philosophers might disagree. Paul Feyerabend argued that an ambiguous experimental result could sometimes be interpreted as an effective refutation of the theory being tested, even if scientists were clever enough to ‘explain away’ the apparent failure. The necessary condition, he said, was the existence of an alternative theory that naturally explained the result:

‘The reason why a refutation through alternatives is stronger is easily seen. The direct case is “open”, in the sense that a different explanation of the apparent failure of the theory … might seem to be possible. The presence of an alternative [theory] makes this attitude much more difficult, if not impossible, for we possess now not only the appearance of failure … but also an explanation, on the basis of a successful theory, of why failure actually occurred.’

By successful alternative theory, Feyerabend meant a theory that both explained the negative experimental result, and that also generated new, testable predictions. The confirmation of those new predictions, he argued, constituted an effective refutation of the original theory. MOND amply meets Feyerabend’s criteria for the alternative theory, since the same postulates that remove the need for dark matter lead (as we have seen) to a number of novel predictions that have been observationally confirmed.

Feyerabend was arguing here, as he often did, for a methodological rule that is nowadays called the ‘principle of proliferation’: the thesis that judgments about the performance of a theory are much sounder if there exist alternative theories with which a comparison can be made. If no one had ever hit upon a theory, such as MOND, that explains cosmological data without dark matter, the failure of experimental physicists to detect the dark matter particles could reasonably be ascribed to some combination of poorly understood phenomena (‘Dreckeffekte’ – garbage effects – was Feyerabend’s sarcastic term in German). But the existence of a theory such as MOND forces scientists to take seriously the possibility that their experimental failure constitutes a falsification of their theory in favour of MOND.

If Feyerabend were alive today, I am certain that he would be delighted at the fact that there are two viable contenders for the correct theory of cosmology. I think he would be intrigued to learn that one, and only one, of these two theories has repeatedly been found to ‘anticipate the data’ – to make surprising predictions that turned out to be correct. And I think he would urge cosmologists to put their effort into identifying crucial experiments that could decisively favour one theory over the other.

At the same time, I am equally certain that Feyerabend would be critical of the manner in which Milgrom’s theory has been treated by the larger scientific community. Textbooks and review articles on cosmology rarely mention MOND at all, and, when they do, it is almost always in dismissive terms. And while I cannot quote statistics, it is pretty clear that winning a research grant, or publishing a scientific paper, or getting tenure, is harder (all else being equal) for Milgromian researchers than for Standard Model scientists.

I honestly don’t know whether this troubling state of affairs reflects a general ignorance about MOND, or whether some darker psychological mechanism is at work. But I hope that scientists and educators can begin creating an environment in which the next generation of cosmologists will feel comfortable exploring alternative theories of cosmology.

This Essay was made possible through the support of a grant to Aeon from the John Templeton Foundation. The opinions expressed in this publication are those of the author and do not necessarily reflect the views of the Foundation. Funders to Aeon Magazine are not involved in editorial decision-making.