Some things occur just by chance. Mark Twain was born on the day that Halley’s comet appeared in 1835 and died on the day it reappeared in 1910. There is a temptation to linger on a story like that, to wonder if there might be a deeper order behind a life so poetically bracketed. For most of us, the temptation doesn’t last long. We are content to remind ourselves that the vast majority of lives are not so celestially attuned, and go about our business in the world. But some coincidences are more troubling, especially if they implicate larger swathes of phenomena, or the entirety of the known universe. During the past several decades, physics has uncovered basic features of the cosmos that seem, upon first glance, like lucky accidents. Theories now suggest that the most general structural elements of the universe — the stars and planets, and the galaxies that contain them — are the products of finely calibrated laws and conditions that seem too good to be true. What if our most fundamental questions, our late-at-night-wonderings about why we are here, have no more satisfying answer than an exasperated shrug and a meekly muttered ‘Things just seem to have turned out that way’?

It can be unsettling to contemplate the unlikely nature of your own existence, to work backward causally and discover the chain of blind luck that landed you in front of your computer screen, or your mobile, or wherever it is that you are reading these words. For you to exist at all, your parents had to meet, and that alone involved quite a lot of chance and coincidence. If your mother hadn’t decided to take that calculus class, or if her parents had decided to live in another town, then perhaps your parents never would have encountered one another. But that is only the tiniest tip of the iceberg. Even if your parents made a deliberate decision to have a child, the odds of your particular sperm finding your particular egg are one in several billion. The same goes for both your parents, who had to exist in order for you to exist, and so already, after just two generations, we are up to one chance in 10²⁷. Carrying on in this way, your chance of existing, given the general state of the universe even a few centuries ago, was almost infinitesimally small. You and I and every other human being are the products of chance, and came into existence against very long odds.

And just as your own existence seems, from a physical point of view, to have been wildly unlikely, the existence of the entire human species appears to have been a matter of blind luck. Stephen Jay Gould argued in 1994 that the detailed course of evolution is as chancey as the path of a single sperm cell to an egg. Evolutionary processes do not innately tend toward Homo sapiens, or even mammals. Rerun the course of history with only a slight variation and the biological outcome might have been radically different. For instance, if the asteroid hadn’t struck the Yucatán 66 million years ago, dinosaurs might still have the run of this planet, and humans might never have evolved.

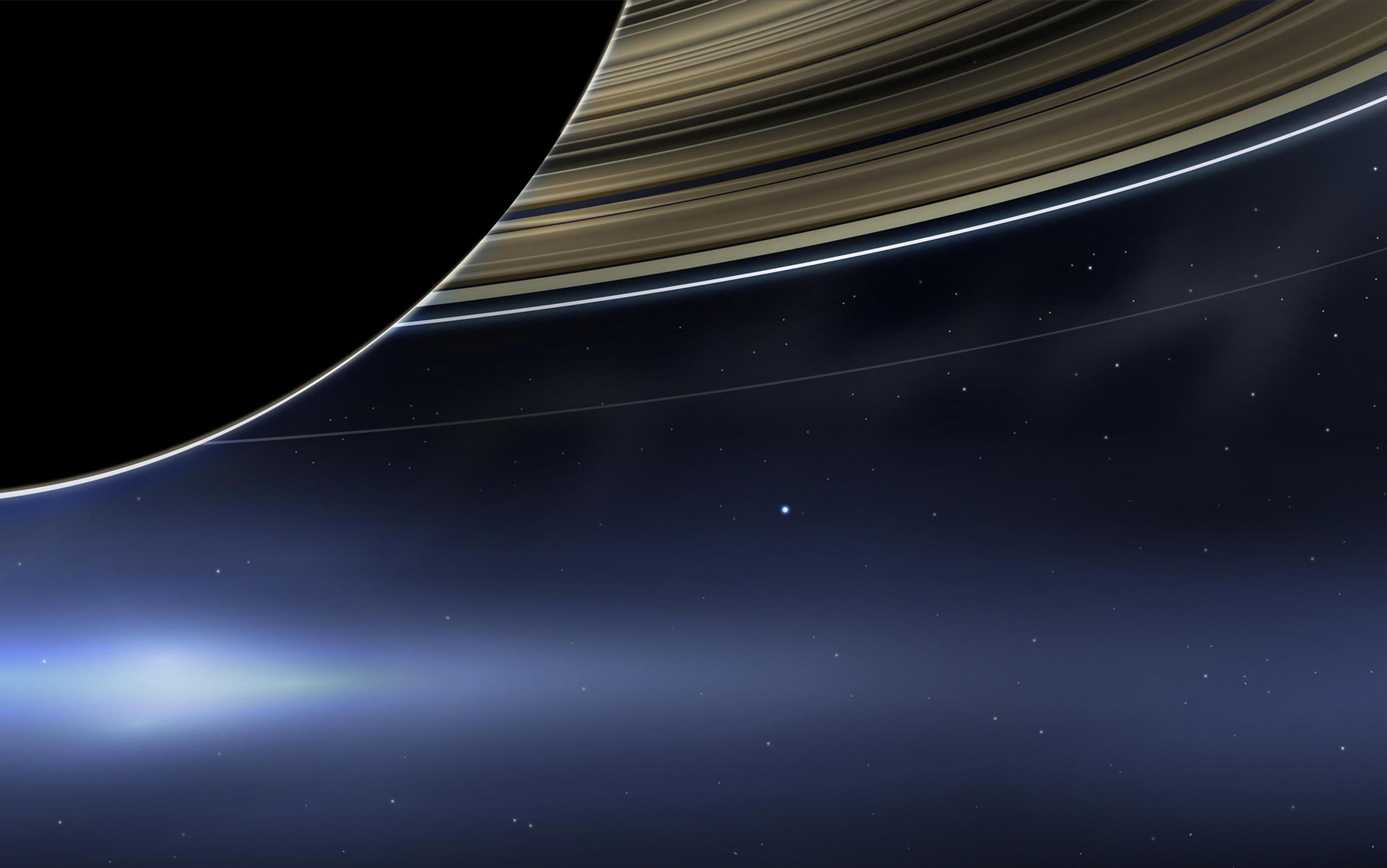

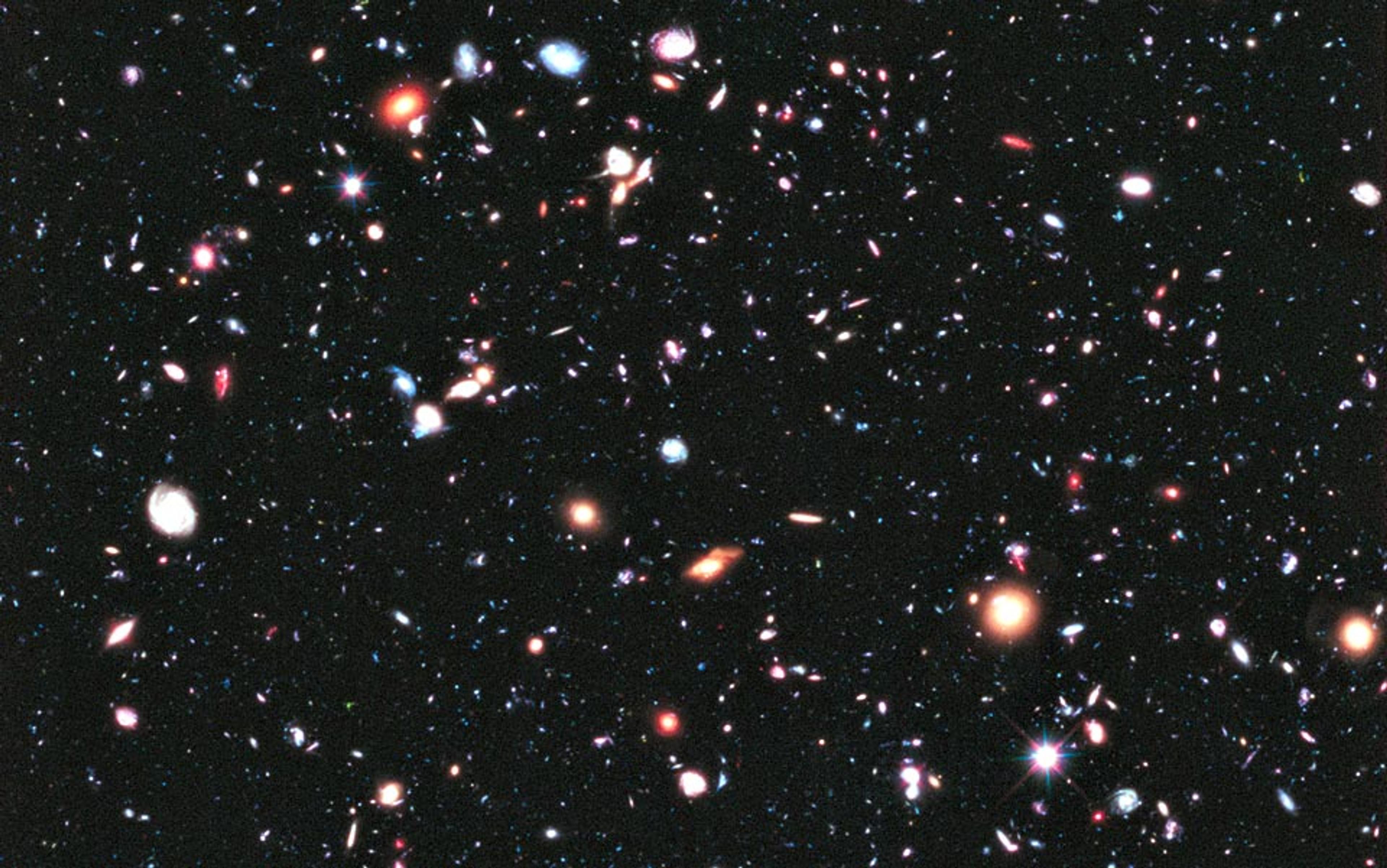

It can be emotionally difficult to absorb the radical contingency of humanity, especially if you have been culturally conditioned by the biblical creation story, which makes humans out to be the raison d’être of the entire physical universe, designated lords of a single, central, designed, habitable region. Nicolaus Copernicus upended this picture in the 16th century by relocating the Earth to a slightly off-centre position, and every subsequent advance in our knowledge of cosmic geography has bolstered this view: that the Earth holds no special position in the grand scheme of things. The idea that the billions of visible galaxies, to say nothing of the expanses we can’t see, exist for our sake alone is patently absurd. Scientific cosmology has consigned that notion to the dustbin of history.

So far, so good, right? As tough as it is to swallow, you can feel secure in the knowledge that you are an accident and that humanity is, too. But what about the universe itself? Can it be mere chance that there are galaxies at all, or that the nuclear reactions inside stars eventually produce the chemical building blocks of life from hydrogen and helium? According to some theories, the processes behind these phenomena depend on finely calibrated initial conditions or unlikely coincidences involving the constants of nature. One could always write them off as fortuitous accidents, but many cosmologists have found that unsatisfying, and have tried to find physical mechanisms that could produce life under a wide range of circumstances.

Ever since the 1920s when Edwin Hubble discovered that all visible galaxies are receding from one another, cosmologists have embraced a general theory of the history of the visible universe. In this view, the visible universe originated from an unimaginably compact and hot state. Prior to 1980, the standard Big Bang models had the universe expanding in size and cooling at a steady pace from the beginning of time until now. These models were adjusted to fit observed data by selecting initial conditions, but some began to worry about how precise and special those initial conditions had to be.

For example, Big Bang models attribute an energy density (the amount of energy per cubic centimetre) to the initial state of the cosmos, as well as an initial rate of expansion of space itself. The subsequent evolution of the universe depends sensitively on the relation between this energy density and the rate of expansion. Pack the energy too densely and the universe will eventually recontract into a big crunch; spread it out too thin and the universe will expand forever, with the matter diluting so rapidly that stars and galaxies cannot form. Between these two extremes lies a highly specialised history in which the universe never recontracts and the rate of expansion eventually slows to zero. In the argot of cosmology, this special situation is called Ω = 1. Cosmological observation reveals that the value of Ω for the visible universe at present is quite near to 1. This is, by itself, a surprising finding, but what’s more, the original Big Bang models tell us that Ω = 1 is an unstable equilibrium point, like a marble perfectly balanced on an overturned bowl. If the marble happens to be exactly at the top it will stay there, but if it is displaced even slightly from the very top it will rapidly roll faster and faster away from that special state.
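
The marble analogy can be made concrete with a toy calculation. The sketch below assumes a universe containing only ordinary matter, in which case the Friedmann equation implies that the quantity 1/Ω − 1 grows in direct proportion to the size of the universe (Ω being the standard symbol for the density parameter described above). The function name and the sample numbers are illustrative choices, not drawn from any particular cosmological model or code.

```python
def omega_after_expansion(omega_initial: float, growth: float) -> float:
    """Density parameter after the universe grows by the factor `growth`.

    Toy model, assuming a matter-only universe: (1/Omega - 1) scales
    linearly with the scale factor.
    """
    deviation = 1.0 / omega_initial - 1.0  # zero only when Omega is exactly 1
    denominator = 1.0 + deviation * growth
    if denominator <= 0.0:
        # Over-dense case: the expansion halts and reverses before the
        # universe ever reaches this size, so Omega has already diverged.
        return float("inf")
    return 1.0 / denominator


if __name__ == "__main__":
    growth = 1e6  # hypothetical millionfold increase in size
    for omega_initial in (0.999, 1.0, 1.001):
        omega_later = omega_after_expansion(omega_initial, growth)
        print(f"start at {omega_initial}: later value {omega_later}")
```

Under these assumptions, a universe that starts one part in a thousand below the critical value ends up almost empty after growing a millionfold, one that starts the same distance above it recollapses before getting that large, and only the universe that starts exactly on the critical value stays there.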

This is an example of cosmological fine-tuning. In order for the standard Big Bang model to yield a universe even vaguely like ours now, this particular initial condition had to be just right at the beginning. Some cosmologists balked at this idea. It might have been just luck that the Solar system formed and life evolved on Earth, but it seemed unacceptable for it to be just luck that the whole observable universe should have started so near the critical energy density required for there to be cosmic structure at all.

And that’s not the only fine-tuned initial condition implied by the original Big Bang model. If you train a radio-telescope at any region of the sky, you observe a cosmic background radiation, the so-called ‘afterglow of the Big Bang’. The strange thing about this radiation is that it is quite uniform in temperature, no matter where you measure it. One might suspect that this uniformity is due to a common history, and that the different regions must have arisen from the same source. But according to the standard Big Bang models they don’t. The radiation traces back to completely disconnected parts of the initial state of the universe. The uniformity of temperature would therefore already have had to exist in the initial state of the Big Bang and, while this initial condition was certainly possible, many cosmologists feel this would be highly implausible.

In 1980, the American cosmologist Alan Guth proposed a different scenario for the early universe, one that ameliorated the need for special initial conditions in accounting for the uniformity of background radiation and the energy density of the universe we see around us today. Guth dubbed the theory ‘inflation’ because it postulates a brief period of hyper-exponential expansion of the universe, occurring shortly after the Big Bang. This tremendous growth in size would both tend to ‘flatten’ the universe, driving Ω very close to 1 irrespective of what it had been before, and would imply that the regions from which all visible background radiation originated did, in fact, share a common history.

At first glance, the inflationary scenario seems to solve the fine-tuning problem: by altering our story about how the universe evolved, we can make the present state less sensitive to precise initial conditions. But there are still reasons to worry, because, after all, inflation can’t just be wished into existence; we have to postulate a physical mechanism that drives it. Early attempts to devise such a mechanism were inspired by the realisation that certain sorts of field — in particular, the hypothesised Higgs field — would naturally produce inflation. But more exact calculations showed that the sort of inflation that would arise from this Higgs field would not produce the universe we see around us today. So cosmologists cut the Gordian knot: instead of seeking the source of the inflation in a field already postulated for some other reason, they simply assume a new field — the ‘inflaton’ field — with just the characteristics needed to produce the phenomena.

Unfortunately, the phenomena to be explained, which include not just the present energy density and background radiation but also the formation and clustering of galaxies and stars, require that the inflation take a rather particular form. This ‘slow-roll’ inflation in turn puts very strict constraints on the form of the inflaton field. The constraints are so severe that some cosmologists fear one form of fine-tuning (exact initial conditions in the original Big Bang theory) has just been traded for another form (the precise details of the inflaton field). But the inflationary scenario fits so well with the precise temperature fluctuations of the background radiation that an inflationary epoch is now an accepted feature of the Big Bang theory. Inflation itself seems here to stay, even while the precise mechanism for inflation remains obscure, and worryingly fine-tuned.

Here we reach the edge of our understanding, and a deep, correlative uncertainty about whether there is a problem with our current explanations of the universe. If the origin of the inflaton field is unknown, how can one judge whether its form is somehow ‘unusual’ and ‘fine-tuned’ rather than ‘completely unsurprising’? As we have seen, the phenomena themselves do not wear such a designation on their sleeves. What is merely due to coincidence under one physical theory becomes the typical case under another and, where the physics itself is unclear, judgments about how ‘likely’ or ‘unlikely’ a phenomenon is become unclear as well. This problem gets even worse when you consider certain ‘constants of nature’.

Just as the overall history and shape of the visible universe depends upon special initial conditions in the original Big Bang model, many of the most general features of the visible universe depend quite sensitively on the precise values of various ‘constants of nature’. These include the masses of the fundamental particles (quarks, electrons, neutrinos, etc) as well as physical parameters such as the fine-structure constant that reflect the relative strength of different forces. Some physicists have argued that, had the values of these ‘constants’ been even slightly different, the structure of the universe would have been altered in important ways. For example, the proton is slightly lighter than the neutron because the down quark is slightly heavier than the up, and since the proton is lighter than the neutron, a proton cannot decay into a neutron and a positron. Indeed, despite intensive experimental efforts, proton decay has never been observed at all. But if the proton were sufficiently heavier than the neutron, protons would be unstable, and all of chemistry as we know it would be radically changed.

Similarly, it has been argued that if the fine-structure constant, which characterises the strength of the electromagnetic interaction, differed by only 4 per cent, then carbon would not be produced by stellar fusion. Without a sufficient abundance of carbon, carbon-based life forms could not exist. This is yet another way that life as we know it could appear to be radically contingent. Had the constants of nature taken slightly different values, we would not be here.

The details of these sorts of calculations should be taken with a grain of salt. It might seem like a straightforward mathematical question to work out what the consequences of twiddling a ‘constant’ of nature would be, but think of the tremendous intellectual effort that has had to go into figuring out the physical consequences of the actual values of these constants. No one could sit down and rigorously work out an entirely new physics in a weekend. And even granting the main conclusion, that many of the most widespread structures of the universe and many of the more detailed physical structures that support living things depend sensitively on the values of these constants — what follows?

Some physicists simply feel that the existence of stars and planets and life ought not to require so much ‘luck’. They would prefer a physical theory that yields the emergence of these structures as typical and robust phenomena, not hostage to a fortunate throw of the cosmic dice that set the values of the constants. Of course, the metaphor of a throw of the cosmic dice is unfortunate: if a ‘constant of nature’ really is a fixed value, then it was not the product of any chancey process. It is not at all clear what it means to say, in this context, that the particular values that obtain were ‘improbable’ or ‘unlikely’.

If, however, we think that the existence of abundant carbon in the universe ought not to require a special and further unexplained set of values for the constants of nature, what options for explanation do we have? We have seen how changing the basic dynamics of the Big Bang can make some phenomena much less sensitive to the initial conditions, and so appear typical rather than exceptional. Could any sort of physics provide a similar explanation for the values of the ‘constants of nature’ themselves?

One way to counter the charge that an outcome is improbable is to increase the number of chances it has to occur. The chance that any particular sperm will find an egg is small, but the large number of sperm overcomes this low individual chance so that offspring are regularly produced. The chance of a monkey writing Hamlet by randomly hitting keys on a typewriter is tiny, but given enough monkeys and typewriters, the hypothetical probability of producing a copy of the play approaches 100 per cent. Similarly, even if the ‘constants of nature’ have to fall in a narrow range of values for carbon to be produced, make enough random choices of values and at least one choice might yield the special values. But how could there be many different ‘choices’ of the constants of nature, given that they are said to be constant?
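
The arithmetic behind this strategy is simple. If each independent trial succeeds with probability p, the chance of at least one success in n trials is 1 − (1 − p)ⁿ. The following sketch, with a hypothetical function name and made-up numbers, shows how quickly that figure climbs towards 1 once n is much larger than 1/p.

```python
import math


def chance_of_at_least_one(p: float, n: float) -> float:
    """Probability of one or more successes in n independent trials.

    Each trial succeeds with probability p, where 0 <= p < 1.
    """
    # Mathematically this is 1 - (1 - p)**n; log1p and expm1 keep the
    # result accurate when p is tiny and n is huge.
    return -math.expm1(n * math.log1p(-p))


if __name__ == "__main__":
    p = 1e-9  # illustrative one-in-a-billion chance per trial
    for n in (1, 1e6, 1e9, 1e12):
        print(f"{n:>8.0e} trials -> {chance_of_at_least_one(p, n):.6f}")
```

Note what the formula does not say: it gives no guarantee for any finite number of trials, and it assumes the trials really are independent draws, which is exactly what a physical mechanism for varying the ‘constants’ would have to supply.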

String theory provides a possibility. According to it, space-time has more dimensions than are immediately apparent, and these extra dimensions beyond four are ‘compactified’ or curled up at a microscopic scale, forming a Calabi-Yau manifold. The ‘constants of nature’ are then shown to be dependent on the exact form of the compactification. There are hundreds of thousands, and possibly infinitely many, distinct possible Calabi-Yau manifolds, and so correspondingly many ways for the ‘constants of nature’ to come out. If there is a mechanism for all of these possibilities to be realised, it will be likely that at least one will correspond to the values we observe.

One theory of inflation, called eternal inflation, provides a mechanism that would lead to all possible manifolds. In this theory, originally put forth by the cosmologists Andrei Linde at Stanford and Alexander Vilenkin at Tufts, the universe is much, much larger and more exotic than the visible universe of which we are aware. Most of this universe is in a constant state of hyper-exponential inflation, similar to that of the inflationary phase of the new Big Bang models. Within this expanding region, ‘bubbles’ of slowly expanding space-time are formed at random, and each bubble is associated with a different Calabi-Yau compactification, and hence with different ‘constants of nature’. As with the monkeys and the typewriters, just the right combination is certain to arise given enough tries, and so, it’s no wonder that life-friendly universes such as ours exist, and it’s also no wonder that living creatures such as ourselves would be living in one.

There is one other conceptual possibility for overcoming fine-tuning that is worth our consideration, even if there is no explicit physics to back it up yet. In this scenario, the universe’s dynamics do not ‘aim’ at any particular outcome, nor does the universe randomly try out all possibilities, and yet it still tends to produce worlds in which physical quantities might appear to have been adjusted to one another. The name for this sort of physical process is homeostasis.

Here is a simple example. When a large object starts falling through the atmosphere, it initially accelerates downward due to the force of gravity. As it falls faster, air resistance increases, and that opposes the gravitational force. Eventually, the object reaches a terminal velocity where the drag exactly equals the force of gravity, the acceleration stops, and the object falls at a constant speed.

Suppose intelligent creatures evolved on such a falling object after it had reached the terminal velocity. They develop a theory of gravity, on the basis of which they can calculate the net gravitational force on their falling home. This calculation would require determining the exact composition of the object through its whole volume in order to determine its mass. They also develop a theory of drag. The amount of drag produced by part of the surface of the object would be a function of its precise shape: the smoother the surface, the less drag. Since the object is falling at a constant speed, the physics of these creatures would include a ‘constant of nature’ relating shapes of the surface to forces. In order to calculate the total drag on the object, the creatures would have to carefully map the entire shape of the surface and use their ‘constant of nature’.

Having completed these difficult tasks, our creatures would discover an amazing ‘coincidence’: the total gravitational force, which is a function of the volume and composition of the object, almost exactly matches the total drag, which is a function of the shape of the surface! It would appear to be an instance of incredible fine-tuning: the data that go into one calculation would have nothing to do with the data that go into the other, yet the results match. Change the composition without changing the surface shape, or change the surface shape without changing the composition, and the two values would no longer be (nearly) equal.

But this ‘miraculous coincidence’ would be no coincidence at all. The problem is that our creatures would be treating the velocity of the falling object as a ‘constant of nature’ — after all, it has been a constant as long as they have existed — even though it is really a variable quantity. When the object began to fall, the force of gravity did not balance the drag. The object therefore accelerated, increasing the velocity and hence increasing the drag, until the two forces balanced. Similarly, we can imagine discovering that some of the quantities we regard as constants are not just variable between bubbles but variable within bubbles. Given the right set of opposing forces, these variables could naturally evolve to stasis, and hence appear later as constants of nature. And stasis would be a condition in which various independent quantities have become ‘fine-tuned’ to one another.
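
The falling-object story can be checked numerically. The sketch below assumes a simple quadratic drag law, with drag force k·v², and follows three hypothetical objects whose masses and drag coefficients have nothing to do with one another. The parameter values, the drag law and the function name are all assumptions made for illustration.

```python
G = 9.81  # gravitational acceleration in m/s^2, treated as fixed


def final_speed(mass: float, drag_coeff: float,
                dt: float = 0.01, t_end: float = 300.0) -> float:
    """Speed after falling from rest for t_end seconds.

    Uses simple Euler steps on dv/dt = G - (drag_coeff / mass) * v**2,
    i.e. an assumed quadratic drag law.
    """
    v = 0.0
    for _ in range(int(t_end / dt)):
        v += (G - drag_coeff / mass * v * v) * dt
    return v


if __name__ == "__main__":
    # (mass in kg, drag coefficient in kg/m): unrelated, made-up values
    objects = [(1.0, 0.5), (80.0, 0.25), (1000.0, 0.1)]
    for mass, drag_coeff in objects:
        v = final_speed(mass, drag_coeff)
        weight = mass * G          # depends only on composition
        drag = drag_coeff * v * v  # depends on surface shape and speed
        print(f"mass={mass}, k={drag_coeff}: drag/weight = {drag / weight:.6f}")
```

In each case the ratio of drag to weight settles near 1, not because the inputs were tuned to one another but because the speed kept changing until the two forces balanced.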

The problem of cosmological fine-tuning is never straightforward. It is not clear, in the first place, when it is legitimate to complain that a physical theory treats some phenomenon as a highly contingent ‘product of chance’. Where the complaint is legitimate, the cosmologist has several different means of recourse. The inflationary Big Bang illustrates how a change in dynamics can convert delicate dependence on initial conditions to a robust independence from the initial state. The bubble universe scenario demonstrates how low individual probabilities can be overcome by multiplying the number of chances. And homeostasis provides a mechanism for variable quantities to naturally evolve to special unchanging values that could easily be mistaken for constants of nature.

But our modern understanding of cosmology does demote many facts of central importance to humans — in particular the very existence of our species — to mere cosmic accident, and none of the methods for overcoming fine-tuning hold out any prospect for reversing that realisation. In the end, we might just have to accommodate ourselves to being yet another accident in an accidental universe.