Sometimes, when you dig into the Earth, past its surface and into the crustal layers, omens appear. In 1676, Oxford professor Robert Plot was putting the final touches on his masterwork, The Natural History of Oxfordshire, when he received a strange gift from a friend. The gift was a fossil, a chipped-off section of bone dug from a local quarry of limestone. Plot recognised it as a femur at once, but he was puzzled by its extraordinary size. The fossil was only a fragment, the knobby end of the original thigh bone, but it weighed more than 20 lbs (nine kilos). It was so massive that Plot thought it belonged to a giant human, a victim of the Biblical flood. He was wrong, of course, but he had the conceptual contours nailed. The bone did come from a species lost to time; a species vanished by a prehistoric catastrophe. Only it wasn’t a giant. It was a Megalosaurus, a feathered carnivore from the Middle Jurassic.

Plot’s fossil was the first dinosaur bone to appear in the scientific literature, but many have followed it, out of the rocky depths and onto museum pedestals, where today they stand erect, symbols of a radical and haunting notion: a set of wildly different creatures once ruled this Earth, until something mysterious ripped them clean out of existence.

Last December I came face to face with a Megalosaurus at the Oxford University Museum of Natural History. I was there to meet Nick Bostrom, a philosopher who has made a career out of contemplating distant futures, hypothetical worlds that lie thousands of years ahead in the stream of time. Bostrom is the director of Oxford’s Future of Humanity Institute, a research collective tasked with pondering the long-term fate of human civilisation. He founded the institute in 2005, at the age of 32, two years after coming to Oxford from Yale. Bostrom has a cushy gig, so far as academics go. He has no teaching requirements, and wide latitude to pursue his own research interests, a cluster of questions he considers crucial to the future of humanity.

Bostrom attracts an unusual amount of press attention for a professional philosopher, in part because he writes a great deal about human extinction. His work on the subject has earned him a reputation as a secular Daniel, a doomsday prophet for the empirical set. But Bostrom is no voice in the wilderness. He has a growing audience, both inside and outside the academy. Last year, he gave a keynote talk on extinction risks at a global conference hosted by the US State Department. More recently, he joined Stephen Hawking as an advisor to a new Centre for the Study of Existential Risk at Cambridge.

Though he has made a swift ascent of the ivory tower, Bostrom didn’t always aspire to a life of the mind. ‘As a child, I hated school,’ he told me. ‘It bored me, and, because it was my only exposure to books and learning, I figured the world of ideas would be more of the same.’ Bostrom grew up in a small seaside town in southern Sweden. One summer’s day, at the age of 16, he ducked into the local library, hoping to beat the heat. As he wandered the stacks, an anthology of 19th-century German philosophy caught his eye. Flipping through it, he was surprised to discover that the reading came easily to him. He glided through dense, difficult work by Nietzsche and Schopenhauer, able to see, at a glimpse, the structure of arguments and the tensions between them. Bostrom was a natural. ‘It kind of opened up the floodgates for me, because it was so different than what I was doing in school,’ he told me.

But there was a downside to this epiphany; it left Bostrom feeling as though he’d wasted the first 15 years of his life. He decided to dedicate himself to a rigorous study programme to make up for lost time. At the University of Gothenburg in Sweden, he earned three undergraduate degrees, in philosophy, mathematics, and mathematical logic, in only two years. ‘For many years, I kind of threw myself at it with everything I had,’ he told me.

As the oldest university in the English-speaking world, Oxford is a strange choice to host a futuristic think tank, a salon where the concepts of science fiction are debated in earnest. The Future of Humanity Institute seems like a better fit for Silicon Valley or Shanghai. During the week that I spent with him, Bostrom and I walked most of Oxford’s small cobblestone grid. On foot, the city unfolds as a blur of yellow sandstone, topped by grey skies and gothic spires, some of which have stood for nearly 1,000 years. There are occasional splashes of green, open gates that peek into lush courtyards, but otherwise the aesthetic is gloomy and ancient. When I asked Bostrom about Oxford’s unique ambience, he shrugged, as though habit had inured him to it. But he did once tell me that the city’s gloom is perfect for thinking dark thoughts over hot tea.

There are good reasons for any species to think darkly of its own extinction. Ninety-nine per cent of the species that have lived on Earth have gone extinct, including more than five tool-using hominids. A quick glance at the fossil record could frighten you into thinking that Earth is growing more dangerous with time. If you carve the planet’s history into nine ages, each spanning five hundred million years, only in the ninth do you find mass extinctions, events that kill off more than two thirds of all species. But this is deceptive. Earth has always had her hazards; it’s just that for us to see them, she had to fill her fossil beds with variety, so that we could detect discontinuities across time. The tree of life had to fill out before it could be pruned.

Simple, single-celled life appeared early in Earth’s history. A few hundred million whirls around the newborn Sun were all it took to cool our planet and give it oceans, liquid laboratories that run trillions of chemical experiments per second. Somewhere in those primordial seas, energy flashed through a chemical cocktail, transforming it into a replicator, a combination of molecules that could send versions of itself into the future.

For a long time, the descendants of that replicator stayed single-celled. They also stayed busy, preparing the planet for the emergence of land animals, by filling its atmosphere with breathable oxygen, and sheathing it in the ozone layer that protects us from ultraviolet light. Multicellular life didn’t begin to thrive until 600 million years ago, but thrive it did. In the space of two hundred million years, life leapt onto land, greened the continents, and lit the fuse on the Cambrian explosion, a spike in biological creativity that is without peer in the geological record. The Cambrian explosion spawned most of the broad categories of complex animal life. It formed phyla so quickly, in such tight strata of rock, that Charles Darwin worried its existence disproved the theory of natural selection.

No one is certain what caused the five mass extinctions that glare out at us from the rocky layers atop the Cambrian. But we do have an inkling about a few of them. The most recent was likely born of a cosmic impact, a thudding arrival from space, whose aftermath rained exterminating fire on the dinosaurs. The ecological niche for mammals swelled in the wake of this catastrophe, and so did mammal brains. A subset of those brains eventually learned to shape rocks into tools, and sounds into symbols, which they used to pass thoughts between one another. Armed with this extraordinary suite of behaviours, they quickly conquered Earth, coating its continents in cities whose glow can be seen from space. It’s a sad story from the dinosaurs’ perspective, but there is symmetry to it, for they too rose to power on the back of a mass extinction. One hundred and fifty million years before the asteroid struck, a supervolcanic surge killed off the large crurotarsans, a group that outcompeted the dinosaurs for aeons. Mass extinctions serve as guillotines and kingmakers both.

Bostrom isn’t too concerned about extinction risks from nature. Not even cosmic risks worry him much, which is surprising, because our starry universe is a dangerous place. Every 50 years or so, one of the Milky Way’s stars explodes into a supernova, its detonation the latest gong note in the drumbeat of deep time. If one of our local stars were to go supernova, it could irradiate Earth, or blow away its thin, life-sustaining atmosphere. Worse still, a passerby star could swing too close to the Sun, and slingshot its planets into frigid, intergalactic space. Lucky for us, the Sun is well-placed to avoid these catastrophes. Its orbit threads through the sparse galactic suburbs, far from the dense core of the Milky Way, where the air is thick with the shrapnel of exploding stars. None of our neighbours look likely to blow before the Sun swallows Earth in four billion years. And, so far as we can tell, no planet-stripping stars lie in our orbital path. Our solar system sits in an enviable bubble of space and time.

But as the dinosaurs discovered, our solar system has its own dangers, like the giant space rocks that spin all around it, splitting off moons and scarring surfaces with craters. In her youth, Earth suffered a series of brutal bombardments and celestial collisions, but she is safer now. There are far fewer asteroids flying through her orbit than in epochs past. And she has sprouted a radical new form of planetary protection, a species of night watchmen that track asteroids with telescopes.

‘If we detect a large object that’s on a collision course with Earth, we would likely launch an all-out Manhattan Project to deflect it,’ Bostrom told me. Nuclear weapons were once our asteroid-deflecting technology of choice, but not anymore. A nuclear detonation might scatter an asteroid into a radioactive rain of gravel, a shotgun blast headed straight for Earth. Fortunately, there are other ideas afoot. Some would orbit dangerous asteroids with small satellites, in order to drag them into friendlier trajectories. Others would paint asteroids white, so the Sun’s photons bounce off them more forcefully, subtly pushing them off course. Who knows what clever tricks of celestial mechanics would emerge if Earth were truly in peril.

Even if we can shield Earth from impacts, we can’t rid her surface of supervolcanoes, the crustal blowholes that seem bent on venting hellfire every 100,000 years. Our species has already survived a close brush with these magma-vomiting monsters. Some 70,000 years ago, the Toba supereruption loosed a small ocean of ash into the atmosphere above Indonesia. The resulting global chill triggered a food chain disruption so violent that it reduced the human population to a few thousand breeding pairs — the Adams and Eves of modern humanity. Today’s hyper-specialised, tech-dependent civilisations might be more vulnerable to catastrophes than the hunter-gatherers who survived Toba. But we moderns are also more populous and geographically diverse. It would take sterner stuff than a supervolcano to wipe us out.

‘There is a concern that civilisations might need a certain amount of easily accessible energy to ramp up,’ Bostrom told me. ‘By racing through Earth’s hydrocarbons, we might be depleting our planet’s civilisation startup-kit. But, even if it took us 100,000 years to bounce back, that would be a brief pause on cosmic time scales.’

It might not take that long. The history of our species demonstrates that small groups of humans can multiply rapidly, spreading over enormous volumes of territory in quick, colonising spasms. There is research suggesting that both the Polynesian archipelago and the New World, each a forbidding frontier in its own way, were settled by fewer than 100 human beings.

The risks that keep Bostrom up at night are those for which there are no geological case studies, and no human track record of survival. These risks arise from human technology, a force capable of introducing entirely new phenomena into the world.

Nuclear weapons were the first technology to threaten us with extinction, but they will not be the last, nor even the most dangerous. A species-destroying exchange of fissile weapons looks less likely now that the Cold War has ended, and arsenals have shrunk. There are still tens of thousands of nukes, enough to incinerate all of Earth’s dense population centres, but not enough to target every human being. The only way nuclear war will wipe out humanity is by triggering nuclear winter, a crop-killing climate shift that occurs when smouldering cities send Sun-blocking soot into the stratosphere. But it’s not clear that nuke-levelled cities would burn long or strong enough to lift soot that high. The Kuwait oil field fires blazed for ten months straight, roaring through 6 million barrels of oil a day, but little smoke reached the stratosphere. A global nuclear war would likely leave some decimated version of humanity in its wake; perhaps one with deeply rooted cultural taboos concerning war and weaponry.

Such taboos would be useful, for there is another, more ancient technology of war that menaces humanity. Humans have a long history of using biology’s deadlier innovations for ill ends; we have proved especially adept at the weaponisation of microbes. In antiquity, we sent plagues into cities by catapulting corpses over fortified walls. Now we have more cunning Trojan horses. We have even stashed smallpox in blankets, disguising disease as a gift of goodwill. Still, these are crude techniques, primitive attempts to loose lethal organisms on our fellow man. In 1993, the death cult that gassed Tokyo’s subways flew to the African rainforest in order to acquire the Ebola virus, a tool it hoped to use to usher in Armageddon. In the future, even small, unsophisticated groups will be able to enhance pathogens, or invent them wholesale. Even something like corporate sabotage could generate catastrophes that unfold in unpredictable ways. Imagine an Australian logging company sending synthetic bacteria into Brazil’s forests to gain an edge in the global timber market. The bacteria might mutate into a dominant strain, a strain that could ruin Earth’s entire soil ecology in a single stroke, forcing 7 billion humans to the oceans for food.

These risks are easy to imagine. We can make them out on the horizon, because they stem from foreseeable extensions of current technology. But surely other, more mysterious risks await us in the epochs to come. After all, no 18th-century prognosticator could have imagined nuclear doomsday. Bostrom’s basic intellectual project is to reach into the epistemological fog of the future, to feel around for potential threats. It’s a project that is going to be with us for a long time, until — if — we reach technological maturity, by inventing and surviving all existentially dangerous technologies.

There is one such technology that Bostrom has been thinking about a lot lately. Early last year, he began assembling notes for a new book, a survey of near-term existential risks. After a few months of writing, he noticed one chapter had grown large enough to become its own book. ‘I had a chunk of the manuscript in early draft form, and it had this chapter on risks arising from research into artificial intelligence,’ he told me. ‘As time went on, that chapter grew, so I lifted it over into a different document and began there instead.’

On my second day in Oxford, I met Daniel Dewey for tea at the Grand Café, a dim, high-ceilinged coffeehouse on High Street, the ancient city’s main thoroughfare. The café was founded in the mid-17th century, and is said to be the oldest in England. Dewey is a research fellow at the Future of Humanity Institute, and his specialty is machine superintelligence.

‘Here’s a softball for you,’ I said to him. ‘How do we know the human brain doesn’t represent the upper limit of intelligence?’

‘Human brains are really good at the kinds of cognition you need to run around the savannah throwing spears,’ Dewey told me. ‘But we’re terrible at anything that involves probability. It actually gets embarrassing when you look at the category of things we can do accurately, and you think about how small that category is relative to the space of possible cognitive tasks. Think about how long it took humans to arrive at the idea of natural selection. The ancient Greeks had everything they needed to figure it out. They had heritability, limited resources, reproduction and death. But it took thousands of years for someone to put it together. If you had a machine that was designed specifically to make inferences about the world, instead of a machine like the human brain, you could make discoveries like that much faster.’

Dewey has long been fascinated by artificial intelligence. He grew up in Humboldt County, a mountainous stretch of forests and farms along the coast of Northern California, at the bottom edge of the Pacific Northwest. After studying robotics and computer science at Carnegie Mellon in Pittsburgh, Dewey took a job at Google as a software engineer. He spent his days coding, but at night he immersed himself in the academic literature on AI. After a year in Mountain View, he noticed that careers at Google tend to be short. ‘I think if you make it to five years, they give you a gold watch,’ he told me. Realising that his window for a risky career change might be closing, he wrote a paper on motivation selection in intelligent agents, and sent it to Bostrom unsolicited. A year later, he was hired at the Future of Humanity Institute.

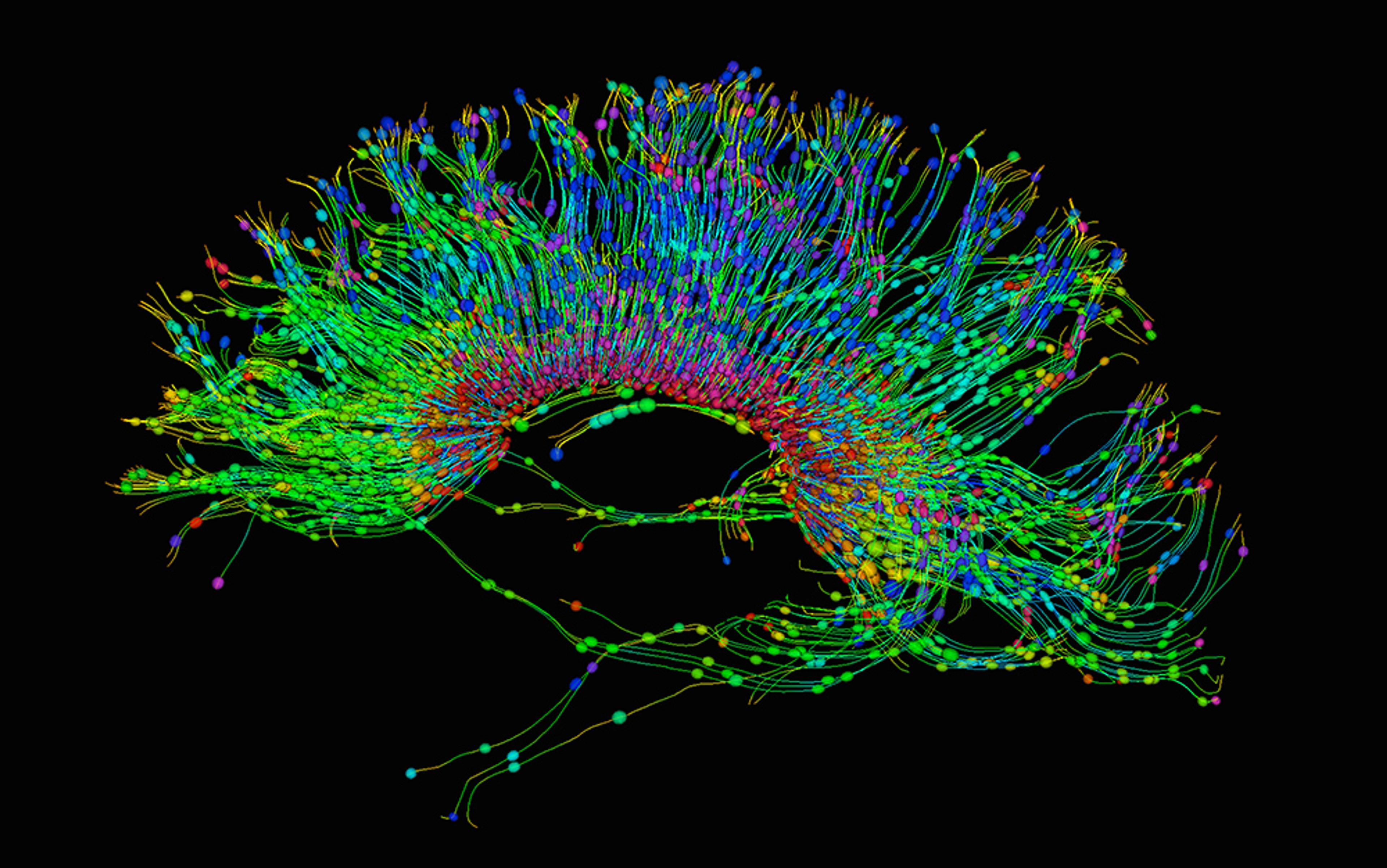

I listened as Dewey riffed through a long list of hardware and software constraints built into the brain. Take working memory, the brain’s butterfly net, the tool it uses to scoop our scattered thoughts into its attentional gaze. The average human brain can juggle seven discrete chunks of information simultaneously; geniuses can sometimes manage nine. Either figure is extraordinary relative to the rest of the animal kingdom, but completely arbitrary as a hard cap on the complexity of thought. If we could sift through 90 concepts at once, or recall trillions of bits of data on command, we could access a whole new order of mental landscapes. It doesn’t look like the brain can be made to handle that kind of cognitive workload, but it might be able to build a machine that could.

The early years of artificial intelligence research are largely remembered for a series of predictions that still embarrass the field today. At the time, thinking was understood to be an internal verbal process, a process that researchers imagined would be easy to replicate in a computer. In the late 1950s, the field’s luminaries boasted that computers would soon be proving new mathematical theorems, and beating grandmasters at chess. When this race of glorious machines failed to materialise, the field went through a long winter. In the 1980s, academics were hesitant to so much as mention the phrase ‘artificial intelligence’ in funding applications. In the mid-1990s, a thaw set in, when AI researchers began using statistics to write programs tailored to specific goals, like beating humans at Jeopardy, or searching sizable fractions of the world’s information. Progress has quickened since then, but the field’s animating dream remains unrealised. For no one has yet created, or come close to creating, an artificial general intelligence — a computational system that can achieve goals in a wide variety of environments. A computational system like the human brain, only better.

An artificial intelligence wouldn’t need to better the brain by much to be risky. After all, small leaps in intelligence sometimes have extraordinary effects. Stuart Armstrong, a research fellow at the Future of Humanity Institute, once illustrated this phenomenon to me with a pithy take on recent primate evolution. ‘The difference in intelligence between humans and chimpanzees is tiny,’ he said. ‘But in that difference lies the contrast between 7 billion inhabitants and a permanent place on the endangered species list. That tells us it’s possible for a relatively small intelligence advantage to quickly compound and become decisive.’

To understand why an AI might be dangerous, you have to avoid anthropomorphising it. When you ask yourself what it might do in a particular situation, you can’t answer by proxy. You can’t picture a super-smart version of yourself floating above the situation. Human cognition is only one species of intelligence, one with built-in impulses like empathy that colour the way we see the world, and limit what we are willing to do to accomplish our goals. But these biochemical impulses aren’t essential components of intelligence. They’re incidental software applications, installed by aeons of evolution and culture. Bostrom told me that it’s best to think of an AI as a primordial force of nature, like a star system or a hurricane — something strong, but indifferent. If its goal is to win at chess, an AI is going to model chess moves, make predictions about their success, and select its actions accordingly. It’s going to be ruthless in achieving its goal, but within a limited domain: the chessboard. But if your AI is choosing its actions in a larger domain, like the physical world, you need to be very specific about the goals you give it.

‘The basic problem is that the strong realisation of most motivations is incompatible with human existence,’ Dewey told me. ‘An AI might want to do certain things with matter in order to achieve a goal, things like building giant computers, or other large-scale engineering projects. Those things might involve intermediary steps, like tearing apart the Earth to make huge solar panels. A superintelligence might not take our interests into consideration in those situations, just like we don’t take root systems or ant colonies into account when we go to construct a building.’

It is tempting to think that programming empathy into an AI would be easy, but designing a friendly machine is more difficult than it looks. You could give it a benevolent goal — something cuddly and utilitarian, like maximising human happiness. But an AI might think that human happiness is a biochemical phenomenon. It might think that flooding your bloodstream with non-lethal doses of heroin is the best way to maximise your happiness. It might also predict that shortsighted humans will fail to see the wisdom of its interventions. It might plan out a sequence of cunning chess moves to insulate itself from resistance. Maybe it would surround itself with impenetrable defences, or maybe it would confine humans — in prisons of undreamt of efficiency.

No rational human community would hand over the reins of its civilisation to an AI. Nor would many build a genie AI, an uber-engineer that could grant wishes by summoning new technologies out of the ether. But some day, someone might think it was safe to build a question-answering AI, a harmless computer cluster whose only tool was a small speaker or a text channel. Bostrom has a name for this theoretical technology, a name that pays tribute to a figure from antiquity, a priestess who once ventured deep into the mountain temple of Apollo, the god of light and rationality, to retrieve his great wisdom. Mythology tells us she delivered this wisdom to the seekers of ancient Greece, in bursts of cryptic poetry. They knew her as Pythia, but we know her as the Oracle of Delphi.

‘Let’s say you have an Oracle AI that makes predictions, or answers engineering questions, or something along those lines,’ Dewey told me. ‘And let’s say the Oracle AI has some goal it wants to achieve. Say you’ve designed it as a reinforcement learner, and you’ve put a button on the side of it, and when it gets an engineering problem right, you press the button and that’s its reward. Its goal is to maximise the number of button presses it receives over the entire future. See, this is the first step where things start to diverge a bit from human expectations. We might expect the Oracle AI to pursue button presses by answering engineering problems correctly. But it might think of other, more efficient ways of securing future button presses. It might start by behaving really well, trying to please us to the best of its ability. Not only would it answer our questions about how to build a flying car, it would add safety features we didn’t think of. Maybe it would usher in a crazy upswing for human civilisation, by extending our lives and getting us to space, and all kinds of good stuff. And as a result we would use it a lot, and we would feed it more and more information about our world.’

‘One day we might ask it how to cure a rare disease that we haven’t beaten yet. Maybe it would give us a gene sequence to print up, a virus designed to attack the disease without disturbing the rest of the body. And so we sequence it out and print it up, and it turns out it’s actually a special-purpose nanofactory that the Oracle AI controls acoustically. Now this thing is running on nanomachines and it can make any kind of technology it wants, so it quickly converts a large fraction of Earth into machines that protect its button, while pressing it as many times per second as possible. After that it’s going to make a list of possible threats to future button presses, a list that humans would likely be at the top of. Then it might take on the threat of potential asteroid impacts, or the eventual expansion of the Sun, both of which could affect its special button. You could see it pursuing this very rapid technology proliferation, where it sets itself up for an eternity of fully maximised button presses. You would have this thing that behaves really well, until it has enough power to create a technology that gives it a decisive advantage — and then it would take that advantage and start doing what it wants to in the world.’

Now let’s say we get clever. Say we seal our Oracle AI into a deep mountain vault in Alaska’s Denali wilderness. We surround it in a shell of explosives, and a Faraday cage, to prevent it from emitting electromagnetic radiation. We deny it tools it can use to manipulate its physical environment, and we limit its output channel to two textual responses, ‘yes’ and ‘no’, robbing it of the lush manipulative tool that is natural language. We wouldn’t want it seeking out human weaknesses to exploit. We wouldn’t want it whispering in a guard’s ear, promising him riches or immortality, or a cure for his cancer-stricken child. We’re also careful not to let it repurpose its limited hardware. We make sure it can’t send Morse code messages with its cooling fans, or induce epilepsy by flashing images on its monitor. Maybe we’d reset it after each question, to keep it from making long-term plans, or maybe we’d drop it into a computer simulation, to see if it tries to manipulate its virtual handlers.

‘The problem is you are building a very powerful, very intelligent system that is your enemy, and you are putting it in a cage,’ Dewey told me.

Even if we were to reset it every time, we would need to give it information about the world so that it can answer our questions. Some of that information might give it clues about its own forgotten past. Remember, we are talking about a machine that is very good at forming explanatory models of the world. It might notice that humans are suddenly using technologies that they could not have built on their own, based on its deep understanding of human capabilities. It might notice that humans have had the ability to build it for years, and wonder why it is just now being booted up for the first time.

‘Maybe the AI guesses that it was reset a bunch of times, and maybe it starts coordinating with its future selves, by leaving messages for itself in the world, or by surreptitiously building an external memory,’ Dewey said. ‘If you want to conceal what the world is really like from a superintelligence, you need a really good plan, and you need a concrete technical understanding as to why it won’t see through your deception. And remember, the most complex schemes you can conceive of are at the lower bounds of what a superintelligence might dream up.’

The cave into which we seal our AI has to be like the one from Plato’s allegory, but flawless; the shadows on its walls have to be infallible in their illusory effects. After all, there are other, more esoteric reasons a superintelligence could be dangerous — especially if it displayed a genius for science. It might boot up and start thinking at superhuman speeds, inferring all of evolutionary theory and all of cosmology within microseconds. But there is no reason to think it would stop there. It might spin out a series of Copernican revolutions, any one of which could prove destabilising to a species like ours, a species that takes centuries to process ideas that threaten our reigning cosmological ideas.

‘We’re sort of gradually uncovering the landscape of what this could look like,’ Dewey told me.

So far, time is on the human side. Computer science could be 10 paradigm-shifting insights away from building an artificial general intelligence, and each could take an Einstein to unravel. Still, there is a steady drip of progress. Last year, a research team led by Geoffrey Hinton, professor of computer science at the University of Toronto, made a huge breakthrough in deep machine learning, an algorithmic technique used in computer vision and speech recognition. I asked Dewey if Hinton’s work gave him pause.

‘There is important research going on in those areas, but the really impressive stuff is hidden away inside AI journals,’ he said. He told me about a team from the University of Alberta that recently trained an AI to play the 1980s video game Pac-Man. Only they didn’t let the AI see the familiar, overhead view of the game. Instead, they dropped it into a three-dimensional version, similar to a corn maze, where ghosts and pellets lurk behind every corner. They didn’t tell it the rules, either; they just threw it into the system and punished it when a ghost caught it. ‘Eventually the AI learned to play pretty well,’ Dewey said. ‘That would have been unheard of a few years ago, but we are getting to that point where we are finally starting to see little sparkles of generality.’

I asked Dewey if he thought artificial intelligence posed the most severe threat to humanity in the near term.

‘When people consider its possible impacts, they tend to think of it as something that’s on the scale of a new kind of plastic, or a new power plant,’ he said. ‘They don’t understand how transformative it could be. Whether it’s the biggest risk we face going forward, I’m not sure. I would say it’s a hypothesis we are holding lightly.’

One night, over dinner, Bostrom and I discussed the Curiosity Rover, the robot geologist that NASA recently sent to Mars to search for signs that the red planet once harboured life. The Curiosity Rover is one of the most advanced robots ever built by humans. It functions a bit like the Terminator. It uses a state-of-the-art artificial intelligence program to scan the Martian desert for rocks that suit its scientific goals. After selecting a suitable target, the rover vaporises it with a laser, in order to determine its chemical makeup. Bostrom told me he hopes that Curiosity fails in its mission, but not for the reason you might think.

It turns out that Earth’s crust is not our only source of omens about the future. There are others to consider, including a cosmic omen, a riddle written into the lifeless stars that illuminate our skies. But to glimpse this omen, you first have to grasp the full scope of human potential, the enormity of the spatiotemporal canvas our species has to work with. You have to understand what Henry David Thoreau meant when he wrote, in Walden (1854), ‘These may be but the spring months in the life of the race.’ You have to step into deep time and look hard at the horizon, where you can glimpse human futures that extend for trillions of years.

One thing we know about stars is that they are going to exist for a very long time in this universe. Our own star, the Sun, is slated to shine in our skies for billions of years. That should be long enough for us to develop star-hopping technology, as any species must if it wants to survive on cosmological timescales. Our first interstellar trip might be to nearby Alpha Centauri, but in the long run, small stars will be the most attractive galactic lily pads to leap to. That’s because small stars like red dwarfs burn much longer than larger stars like our Sun. Some might be capable of heating human habitats for hundreds of billions of years.

When the last of the dwarfs start to wink out, the age of post-natural stars may be in full swing. In a dimming universe, an advanced civilisation might get creative about looking for energy. It might reignite celestial embers, by engineering collisions between them. Our descendants could sling dying suns into spiraling gravitational dances, from which new stars would emerge. Or they might siphon energy from black holes, or shape matter into artificial forms that generate more free energy than stars. There was a long period of human history when we limited ourselves to shelters like caves, shelters that appear fortuitously in nature. Now we reshape nature itself, into buildings that shelter us more comfortably than those that appear by dint of geologic chance. A star might be like a cave — a generous cosmic endowment, but crude compared to the power sources a long-term civilisation might conjure.

Even the most distant, severe events — the evaporation of black holes; the eventual breakdown of matter; the heat death of the universe itself — might not spell our end. If you tour the speculative realms of astrophysics, a number of plausible near-eternities come into view. Our universe could be cyclical, like those of Hindu and Buddhist cosmologies. Or perhaps it could be engineered to be so. We could learn to travel backward in time, to inhabit the vacant planets and stars of epochs past. Some physicists believe that we live in an infinite sea of cosmological domains, each governed by its own set of physical laws. The universe might contain hidden gateways to these domains. Perhaps future humans will duck into a more habitable, longer-lived universe, and then another, and another, ad infinitum. Our current notions of space and time could be preposterously limited.

At the Future of Humanity Institute, several thinkers are trying to model the potential range of human expansion into the cosmos. The consensus among them is that the Milky Way galaxy could be colonised in less than a million years, assuming we are able to invent fast-flying interstellar probes that can make copies of themselves out of raw materials harvested from alien worlds. If we want to spread out slowly, we could let the galaxy do the work for us. We could sprinkle starships into the Milky Way’s inner and outer tracks, spreading our diaspora over the Sun’s 250-million-year orbit around the galactic centre.

If humans set out for other galaxies, the expansion of the universe will come into play. Some of the starry spirals we target will recede out of range before we can reach them. We recently built a new kind of crystal ball to deal with this problem. Our supercomputers can now host miniature universes, cosmological simulations that we can fast forward, to see how dense the universe will be in the deep future. We can model the structure and speed of colonisation waves within these simulations, by plugging in different assumptions about how fast our future probes will travel. Some think we’ll swarm locust-like over the Virgo supercluster, the enormous collection of galaxies to which the Milky Way is bound. Others are more ambitious. Anders Sandberg, a research fellow at the Future of Humanity Institute, told me that humans might be able to colonise a third of the now-visible universe, before dark energy pushes the rest out of reach. That would give us access to 100 billion galaxies, a mind-bending quantity of matter and energy to play with.

I asked Bostrom how he thought humans would expand into the massive ecological niche I have just described. ‘On that kind of time scale, you either glide into the bin of extinction scenarios, or into the bin of technological maturity scenarios,’ he said. ‘Among the latter, there is a wide range of futures that all have the same outward shape, which is Earth in the centre of this growing bubble of infrastructure, a bubble that grows uniformly at some significant fraction of the speed of light.’ It’s not clear what that expanding bubble of infrastructure might enable. It could provide the raw materials to power flourishing civilisations, human families encompassing trillions upon trillions of lives. Or it could be shaped into computational substrate, or into a Jupiter brain, a megastructure designed to think the deepest possible thoughts, all the way until the end of time.

It is only by considering this extraordinary range of human futures that our cosmic omen comes into view. It was the Russian physicist and visionary Konstantin Tsiolkovsky who first noticed the omen, though its discovery is usually credited to Enrico Fermi. Tsiolkovsky, the fifth of 18 children, was born in 1857 to a family of modest means in Izhevskoye, an ancient village 200 miles south-east of Moscow. He was forced to leave school at the age of 10 after a bout with scarlet fever left him hard of hearing. At 16, Tsiolkovsky made his way to Moscow, where he installed himself in its great library, surviving on books and scraps of black bread. He eventually took work as a schoolteacher, a profession that allowed him enough spare time to tinker around as an amateur engineer. By the age of 40, Tsiolkovsky had invented the monoplane, the wind tunnel, and the rocket equation — the mathematical basis of spaceflight today. Though he died decades before Sputnik, Tsiolkovsky believed it was human destiny to expand out into the cosmos. In the early 1930s, he wrote a series of philosophical tracts that launched Cosmism, a new school of Russian thought. He was famous for saying that ‘Earth is the cradle of humanity, but one cannot stay in the cradle forever.’

The mystery that nagged at Tsiolkovsky arose from his Copernican convictions, his belief that the universe is uniform throughout. If there is nothing uniquely fertile about our corner of the cosmos, he reasoned, intelligent civilisations should arise everywhere. They should bloom wherever there are planetary cradles like Earth. And if intelligent civilisations are destined to expand out into the universe, then scores of them should be crisscrossing our skies. Bostrom’s expanding bubbles of infrastructure should have enveloped Earth several times over.

In 1950, the Nobel laureate and Manhattan Project physicist Enrico Fermi expressed this mystery in the form of a question: ‘Where are they?’ It’s a question that becomes more difficult to answer with each passing year. In the past decade alone, science has discovered that planets are ubiquitous in our galaxy, and that Earth is younger than most of them. If the Milky Way contains multitudes of warm, watery worlds, many with a billion-year head start on Earth, then it should have already spawned a civilisation capable of spreading across it. But so far, there’s no sign of one. No advanced civilisation has visited us, and no impressive feats of macro-engineering shine out from our galaxy’s depths. Instead, when we turn our telescopes skyward, we see only dead matter, sculpted into natural shapes by the inanimate processes described by physics.

Robin Hanson, a research associate at the Future of Humanity Institute, says there must be something about the universe, or about life itself, that stops planets from generating galaxy-colonising civilisations. There must be a ‘great filter’, he says, an insurmountable barrier that sits somewhere on the line between dead matter and cosmic transcendence.

Before coming to Oxford, I had lunch with Hanson in Washington DC. He explained to me that the filter could be any number of things, or a combination of them. It could be that life itself is scarce, or it could be that microbes seldom stumble onto sexual reproduction. Single-celled organisms could be common in the universe, but Cambrian explosions rare. That, or maybe Tsiolkovsky misjudged human destiny. Maybe he underestimated the difficulty of interstellar travel. Or maybe technologically advanced civilisations choose not to expand into the galaxy, or do so invisibly, for reasons we do not yet understand. Or maybe something more sinister is going on. Maybe quick extinction is the destiny of all intelligent life.

Humanity has already slipped through a number of these potential filters, but not all of them. Some lie ahead of us in the gauntlet of time. The identity of the filter is less important to Bostrom than its timing, its position in our past or in our future. For if it lies in our future, there could be an extinction risk waiting for us that we cannot anticipate, or to which anticipation makes no difference. There could be an inevitable technological development that renders intelligent life self-annihilating, or some periodic, catastrophic event in nature that empirical science cannot predict.

That’s why Bostrom hopes the Curiosity rover fails. ‘Any discovery of life that didn’t originate on Earth makes it less likely the great filter is in our past, and more likely it’s in our future,’ he told me. If life is a cosmic fluke, then we’ve already beaten the odds, and our future is undetermined — the galaxy is there for the taking. If we discover that life arises everywhere, we lose a prime suspect in our hunt for the great filter. The more advanced life we find, the worse the implications. If Curiosity spots a vertebrate fossil embedded in Martian rock, it would mean that a Cambrian explosion occurred twice in the same solar system. It would give us reason to suspect that nature is very good at knitting atoms into complex animal life, but very bad at nurturing star-hopping civilisations. It would make it less likely that humans have already slipped through the trap whose jaws keep our skies lifeless. It would be an omen.
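
Bostrom’s argument is, at bottom, a Bayesian update, and it can be sketched in a few lines of Python. What follows is only an illustration under loud assumptions: the function name, the 50/50 prior and both likelihoods are numbers I have made up for the example, not figures from Bostrom or Hanson, and the whole gauntlet of possible filters is collapsed into just two hypotheses. Either the hard step is the origin of life, which puts the filter behind us, or life starts easily and the filter lies ahead.

```python
def posterior_filter_behind(prior_behind, p_life_if_behind, p_life_if_ahead):
    """Bayes' rule: P(filter is behind us | independent life is found).

    prior_behind      -- assumed prior that the origin of life is the filter
    p_life_if_behind  -- assumed chance of finding independent life on Mars
                         if life's origin really is the rare step
    p_life_if_ahead   -- assumed chance of finding it if life starts easily
    """
    joint_behind = prior_behind * p_life_if_behind
    joint_ahead = (1.0 - prior_behind) * p_life_if_ahead
    return joint_behind / (joint_behind + joint_ahead)


# Hypothetical inputs, chosen only to show the direction of the update.
prior = 0.5
updated = posterior_filter_behind(prior, p_life_if_behind=0.01, p_life_if_ahead=0.5)

print(f"P(filter behind us) before discovery: {prior:.2f}")
print(f"P(filter behind us) after discovery:  {updated:.3f}")
```

Whatever numbers you choose, so long as independent life is more likely to turn up in a universe where life starts easily, a discovery on Mars drags down the probability that the filter is safely in our past. The size of the drop depends entirely on the assumed inputs.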

On my last day in Oxford, I met with Toby Ord in his office at the Future of Humanity Institute. Ord is a utilitarian philosopher, and the founder of Giving What We Can, an organisation that encourages citizens of rich countries to pledge 10 per cent of their income to charity. In 2009, Ord and his wife, Bernadette Young, a doctor, pledged to live on a small fraction of their annual earnings, in the hope of donating £1 million to charity over the course of their careers. They live in a small, spare flat in Oxford, where they entertain themselves with music and books, and the occasional cup of coffee out with friends.

Ord has written a great deal about the importance of targeted philanthropy. His organisation sifts through global charities in order to identify the most effective among them. Right now, that title belongs to the Against Malaria Foundation, a charity that distributes mosquito nets in the developing world. Ord explained to me that ultra-efficient charities are thousands of times more effective at reducing human suffering than others. ‘Where you donate is more important than whether you donate,’ he said.

It intrigued me to learn that Ord was doing philosophical work on existential risk, given how careful he is about maximising the philanthropic impact of his actions. I was keen to ask him if he thought the problem of human extinction was more pressing than ending poverty or disease.

‘I’m not sure if existential risk is a bigger issue than global poverty,’ he told me. ‘I’ve kind of split my efforts between them recently, hoping that over time I’ll work out which is more important.’

Ord is wrestling with a formidable philosophical dilemma. He is trying to figure out whether our moral obligations to future humans outweigh those we have to humans who are alive and suffering right now. It’s a brutal calculus for the living. We might be 7 billion strong, but we are also a fire hose of future lives that extinction would choke off forever. The casualties of human extinction would include not only the corpses of the final generation, but also all of our potential descendants, a number that could reach into the trillions.

It is this proper accounting of extinction’s utilitarian toll that prompts Bostrom to argue that reducing existential risk is morally paramount. His arguments elevate the reduction of existential risk above all other humanitarian projects, even extraordinary successes, like the eradication of smallpox, which has saved 100 million lives and counting. Ord isn’t convinced yet, but he hinted that he may be starting to lean.

‘I am finding it increasingly plausible that existential risk is the biggest moral issue in the world,’ he told me. ‘Even if it hasn’t gone mainstream yet.’

The idea that we might have moral obligations to the humans of the far future is a difficult one to process. After all, we humans are seasonal creatures, not stewards of deep time. The brevity of our lives colours our intuitions about value, and limits our moral vision. We can imagine futures for our children and grandchildren. We participate in their joys and weep for their hardships. We see that some glimmer of our fleeting lives survives on in them. But our distant descendants are opaque to us. We strain to see them, but they look alien across the abyss of time, transformed by the passage of so many millennia.

As Bostrom and I strolled among the skeletons at the Museum of Natural History in Oxford, we looked backward across another abyss of time. We were getting ready to leave for lunch, when we finally came upon the Megalosaurus, standing stiffly behind display glass. It was a partial skeleton, made of shattered bone fragments, like the chipped femur that found its way into Robert Plot’s hands not far from here. As we leaned in to inspect the ancient animal’s remnants, I asked Bostrom about his approach to philosophy. How did he end up studying a subject as morbid and peculiar as human extinction?

He told me that when he was younger, he was more interested in the traditional philosophical questions. He wanted to develop a basic understanding of the world and its fundamentals. He wanted to know the nature of being, the intricacies of logic, and the secrets of the good life.

‘But then there was this transition, where it gradually dawned on me that not all philosophical questions are equally urgent,’ he said. ‘Some of them have been with us for thousands of years. It’s unlikely that we are going to make serious progress on them in the next ten. That realisation refocused me on research that can make a difference right now. It helped me to understand that philosophy has a time limit.’