The relationship between mathematics and morality is easy to think about but hard to understand. Suppose Jane sees five people drowning on one side of a lake and one person drowning on the other side. There are life-preservers on both sides of the lake. She can either save the five or the one, but not both; she clearly needs to save the five. This is a simple example of the use of mathematics to make a moral decision – five is greater than one, so Jane should save the five.

Moral mathematics is the application of mathematical methods, such as formal logic and probability, to moral problems. Morality involves moral concepts such as good and bad, right and wrong. But morality also involves quantitative concepts, such as harming more or fewer persons, and taking actions that have a higher or lower probability of creating benefit or causing harm. Mathematical tools are helpful for making such quantitative comparisons. They are also helpful in the innumerable contexts where we are unsure what the consequences of our actions will be. Such scenarios require us to engage in probabilistic thinking, and to evaluate the likelihood of particular outcomes. Intuitive reasoning is notoriously fallible in such cases, and, as we shall see, the use of mathematical tools brings precision to our reasoning and helps us eliminate error and confusion.

Moral mathematics employs numbers and equations to represent relations between human lives, obligations and constraints. Some might find this objectionable. The philosopher Bernard Williams once wrote that moral mathematics ‘will have something to say even on the difference between massacring 7 million, and massacring 7 million and one.’ Williams expresses the common sentiment that moral mathematics ignores what is truly important about morality: concern for human life, people’s characters, their actions, and their relationships with each other. However, this does not mean mathematical reasoning has no role in ethics. Ethical theories judge whether an act is morally better or worse than another act. But they also judge how much better or worse one act is than another. Morality cannot be reduced to mere numbers, but, as we shall see, without moral mathematics, ethics is stunted.

In this essay, I will discuss various ways in which moral mathematics can be used to tackle questions and problems in ethics, concentrating primarily on the relationship between morality, probability and uncertainty. Moral mathematics has limitations, and I discuss decision-making concerning the very far future as a case study that demonstrates how limited its applicability can be.

Let us begin by considering how ethics should not use mathematics. In his influential book Reasons and Persons (1984), the philosopher Derek Parfit considers several misguided principles of moral mathematics. One is share-of-the-total, where the goodness or badness of one’s act is determined by one’s share in causing good or evil. According to this view, joining four other people in saving 100 trapped miners is better than going elsewhere and saving 10 similarly trapped miners – even if the four others could save all 100 miners on their own. This is because one person’s share of the total goodness would be to save 20 people (100/5), twice that of saving 10 people. But this allows 10 people to die needlessly. The share-of-the-total principle ignores that joining the four people in saving 100 miners does not causally contribute to saving them, while going elsewhere to save 10 miners does.
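The contrast between the two ways of crediting a rescuer can be made explicit in a few lines of Python. This is a minimal sketch: the function names are illustrative, and it assumes, as in Parfit's example, that the four other rescuers can save all 100 miners without help.

```python
def share_of_total(total_saved: int, rescuers: int) -> float:
    """Share-of-the-total: credit each rescuer with an equal slice of everyone saved."""
    return total_saved / rescuers

def difference_made(saved_with_me: int, saved_without_me: int) -> int:
    """Counterfactual credit: the lives that depend on this rescuer taking part."""
    return saved_with_me - saved_without_me

# Option A: join the four others at the mine with 100 trapped miners.
share_a = share_of_total(100, 5)       # 20 lives "credited"
diff_a = difference_made(100, 100)     # 0: the four save all 100 anyway

# Option B: go alone to the mine with 10 trapped miners.
share_b = share_of_total(10, 1)        # 10 lives "credited"
diff_b = difference_made(10, 0)        # 10: nobody else would save them

# Total lives saved across both mines under each option.
total_a = 100 + 0    # the 10 miners elsewhere die
total_b = 100 + 10   # the four still save the 100

print(f"share-of-the-total: A={share_a}, B={share_b}")
print(f"difference made:    A={diff_a}, B={diff_b}")
print(f"total saved:        A={total_a}, B={total_b}")
```

Share-of-the-total ranks option A higher, while the counterfactual measure and the total number of lives saved both favour option B.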

Another misguided principle is ignoring small chances. Many acts we regularly perform have a small chance of doing great good or great harm. Yet we typically ignore highly improbable outcomes in our moral calculations. Ignoring small chances may be a failure of rationality, not of morality, but it nevertheless may lead to the wrong moral conclusions when employed in moral mathematics. When the outcome may affect very many people, as in the case of elections, the small chance of making a difference may be significant enough to offset the cost of voting.
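The voting case is an expected value calculation: a tiny chance of being decisive multiplied by a benefit spread over very many people. The sketch below uses hypothetical round numbers (none of them come from the essay or from any real election) purely to show the structure of the argument; exact fractions avoid rounding noise.

```python
from fractions import Fraction

def expected_benefit(p_decisive: Fraction, people_affected: int, benefit_each: Fraction) -> Fraction:
    """Expected benefit of one vote: chance it decides the result x total benefit of the better result."""
    return p_decisive * people_affected * benefit_each

# All figures are hypothetical, chosen only for illustration.
p_decisive = Fraction(1, 10_000_000)   # one-in-ten-million chance of casting the deciding vote
people = 300_000_000                   # people affected by the result
benefit_each = Fraction(100)           # benefit per person (in £) if the better side wins
cost_of_voting = Fraction(20)          # time and travel cost of voting (in £)

ev = expected_benefit(p_decisive, people, benefit_each)
print(f"expected benefit £{float(ev):,.0f} vs cost £{float(cost_of_voting):,.0f}")
```

Ignoring the one-in-ten-million chance would set the expected benefit to zero; keeping it shows how a small probability, multiplied across a large population, can outweigh a modest personal cost.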

Yet another misguided principle is ignoring imperceptible effects. One example is when the imperceptible harm or benefits are part of a collective action. Suppose 1,000 wounded men need water, and it is to be distributed to them from a 1,000-pint barrel to which 1,000 people each add a pint of water. While each pint added gives each wounded man only 1/1,000th of a pint, it is nonetheless morally important to add one’s pint, since 1,000 people adding a pint collectively provides the 1,000 wounded men a pint each. Conversely, suppose each of 1,000 people administers an imperceptibly small electric shock to an innocent person; the combined shock would result in that person’s death. It is very wrong for them to administer their small shocks, despite their individual imperceptibility.
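The arithmetic behind both cases is the same: each individual contribution is exactly 1/1,000th of the collective effect. A minimal sketch using exact fractions; the "lethal dose = 1" unit for the shock case is a hypothetical normalisation, not a figure from the essay.

```python
from fractions import Fraction

RECIPIENTS = 1_000    # wounded men sharing the barrel
CONTRIBUTORS = 1_000  # people each adding one pint (or one small shock)

def water_per_man(pints_added: int) -> Fraction:
    """Water each wounded man receives when the barrel is shared equally."""
    return Fraction(pints_added, RECIPIENTS)

my_pint = water_per_man(1)               # 1/1000 pint each: imperceptible
everyone = water_per_man(CONTRIBUTORS)   # 1 pint each: clearly significant

# The electric-shock case has the same structure, with harm instead of benefit.
# Hypothetical unit: 1 = a lethal total shock, split equally among the contributors.
my_shock = Fraction(1, CONTRIBUTORS)
total_shock = CONTRIBUTORS * my_shock

print(f"one pint adds {my_pint} pint per man; all pints add {everyone}")
print(f"one shock is {my_shock} of a lethal dose; all shocks total {total_shock}")
```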

So moral mathematics must be attentive to, and seek to avoid, such common pitfalls of practical reasoning. Moral mathematics must be sensitive to circumstances. Often, it needs to consider extremely small probabilities, benefits or harms. But this conclusion is in tension with another requirement of moral mathematics: it must be practical, which requires that it be tolerant of error and capable of responding to uncertainty.

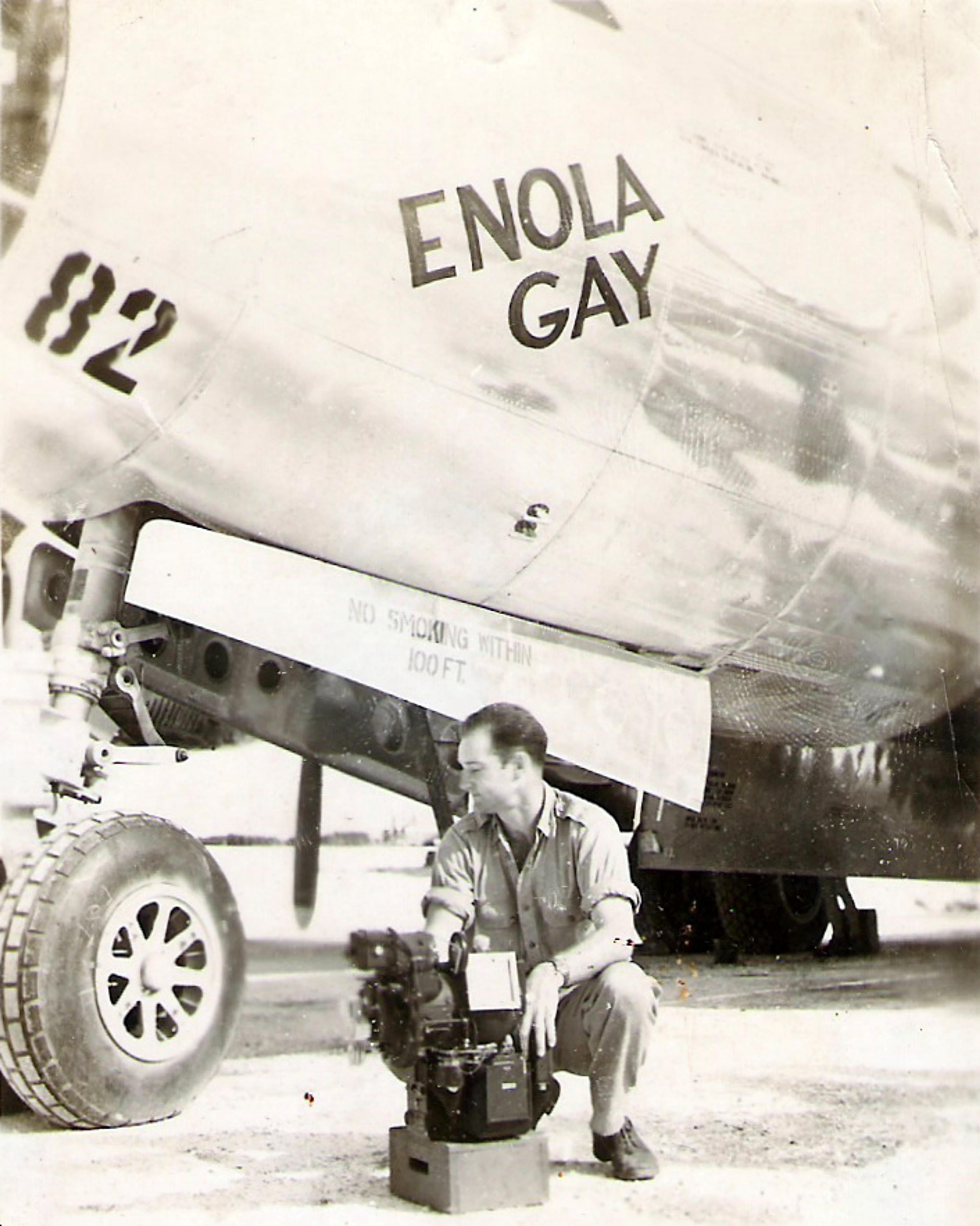

During the Second World War, allied bombers used the Norden bombsight, an analogue computer that calculated where a plane’s bombs would strike, based on altitude, velocity and other variables. But the bombsight was intolerant of error. Despite entering the same (or very similar) data into the Norden bombsight, two bombardiers on the same bomb run might be instructed to drop their bombs at very different times. This was due to small variations in data they entered, or because the two bombsights’ components were not absolutely identical. Like a bombsight, moral mathematics must be sensitive to circumstances, but not too sensitive. This can be achieved if we remember that not all small probabilities, harms and benefits are created equal.

Thomas Ferebee, a bombardier on the Boeing B-29 Enola Gay, with the Norden bombsight in 1945. Courtesy Wikimedia

According to statistical mechanics, there is an unimaginably small probability that subatomic particles in a state of thermodynamic equilibrium will spontaneously rearrange themselves in the form of a living person. Call such a being a Boltzmann Person – a variation on the ‘Boltzmann Brain’, suggested by the English astronomer Arthur Eddington in a 1931 thought experiment, intended to illustrate a problem with Ludwig Boltzmann’s solution to a puzzle in statistical mechanics. I can ignore the risk of such a person suddenly materialising right in front of my car; that risk does not justify my driving at 5 mph the entire trip to the store. But I cannot drive recklessly, ignoring the risk of running over a pedestrian. The probability of running over a pedestrian is low, but not infinitesimally low. Such events, while rare, happen every day. There is, in the words of the American philosopher Charles Sanders Peirce, a ‘living doubt’ whether I will run over a pedestrian while driving, so I must drive carefully to minimise that probability. There is no such doubt about a person materialising in front of my car. The possibility may be safely ignored.

Moral mathematics also helps to explain why events with imperceptible effects, which are significant in one situation, can be insignificant in another. Adding 1/1,000th of a pint of water to a vessel for a wounded person is significant if many others also add their share, so the total benefits are significant. But in isolation, when the total amount of water given to a wounded person is 1/1,000th of a pint, this benefit is so small that almost any other action – say, calling an ambulance a minute sooner – is likely to produce a greater total benefit. Conversely, it is very wrong to administer an imperceptibly small electric shock to a person because it contributes to a total harm of torturing a person to death. But administering a small electric shock as a prank, as with a novelty electric handshake buzzer, is much less serious, as the total harm is very small.

Moral mathematics also helps us determine the required level of accuracy for a particular set of circumstances. Beth is threatened by an armed robber, so she is permitted to use necessary and proportionate force to stop the robbery. Suppose that shooting the robber in one leg would be enough to stop him. Even if she uses significantly more force – say, shooting her assailant in both legs – it may be permissible because she is very uncertain about the exact force needed to stop the robber. The risk she faces is very high, so she is plausibly justified in using significantly more force to protect herself, even if it will end up being excessive. By quantifying the risk Beth faces, moral mathematics also allows her to quantify how much force she can permissibly use.

Moral mathematics, then, must be sensitive to circumstances and tolerant of errors grounded in uncertainty, such as Beth’s potentially excessive, but justifiable, use of force. The application of moral mathematics, and indeed of all moral decision-making, is always clouded by uncertainty. As Bertrand Russell wrote in his History of Western Philosophy (1945): ‘Uncertainty, in the presence of vivid hopes and fears, is painful, but must be endured if we wish to live without the support of comforting fairy tales.’

To respond to uncertainty, many fields, such as public policy, actuarial calculations and effective altruism, use expected utility theory, which is one of the most powerful tools of moral mathematics. In its normative application, expected utility theory explains how people should respond when the outcomes of their actions are not known with certainty. It assigns an amount of ‘utility’ to each outcome – a number indicating how much an outcome is preferred or preferable – and proposes that the best option is the one with the highest expected utility: the sum of the utilities of its possible outcomes, each weighted by its probability.

In standard expected utility theory, the utility of outcomes is subjective. Suppose there are two options: winning £1 million for certain, or winning £3 million with a 50 per cent probability. Our intuitions about such cases are unclear. A guaranteed payout sounds great, but a 50 per cent chance of an even bigger win is very tempting. Expected utility theory cuts through this potential confusion. Winning £1 million has a utility of 100 for Bob. Winning £3 million has a utility of only 150, since Bob can live almost as well on £1 million as on £3 million. This specification of the diminishing marginal utility of additional resources is the kind of precision that intuitive reasoning struggles with. On the other hand, winning nothing has a negative utility of -50. Not only will Bob win no money, but he will deeply regret not getting the guaranteed £1 million. For Bob, the expected utility of the first option is 100 × 1 = 100. The expected utility of the second option is 150 × 0.5 + (-50) × 0.5 = 50. The guaranteed £1 million is the better option.
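Bob's calculation can be written as a probability-weighted sum over (probability, utility) pairs. A minimal sketch; the utilities are the ones given in the example, and the function name is illustrative.

```python
from fractions import Fraction

def expected_utility(lottery):
    """Sum of probability x utility over a list of (probability, utility) pairs."""
    if sum(p for p, _ in lottery) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(p * u for p, u in lottery)

half = Fraction(1, 2)
# Bob's utilities from the example: £1m -> 100, £3m -> 150, £0 -> -50.
certain_million = [(Fraction(1), 100)]
gamble = [(half, 150), (half, -50)]

eu_certain = expected_utility(certain_million)
eu_gamble = expected_utility(gamble)
print(f"certain £1m: {eu_certain}, 50% chance of £3m: {eu_gamble}")
```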

Conversely, suppose Alice has a life-threatening medical condition. An operation to save her life would cost £2 million. For Alice, the utilities of £0 and of £1 million are both 0; neither outcome would save her. But the utility of £3 million is 500 because it will save her life – and make her a millionaire. For Alice, the expected utility of the first option is 0 × 1 = 0. The expected utility of the second option is 500 × 0.5 + 0 × 0.5 = 250. For Alice, a 50 per cent chance of £3 million is better than a guaranteed £1 million. This shows how moral mathematics adds useful precision to our potentially confused intuitive reasoning.
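Putting Bob's and Alice's utilities side by side makes the point about subjectivity concrete: the two options are identical lotteries over money, yet the ranking flips. A minimal sketch using the figures from the two examples; the data layout and names are illustrative.

```python
from fractions import Fraction

half = Fraction(1, 2)
# The two options, as lotteries over payouts in £ millions: (probability, payout).
options = {
    "certain £1m": [(Fraction(1), 1)],
    "50% of £3m": [(half, 3), (half, 0)],
}
# Utilities from the essay, keyed by payout in £ millions.
utilities = {
    "Bob": {0: -50, 1: 100, 3: 150},
    "Alice": {0: 0, 1: 0, 3: 500},   # only £3m pays for the £2m operation
}

def expected_utility(lottery, utility):
    """Probability-weighted sum of this person's utility for each payout."""
    return sum(p * utility[payout] for p, payout in lottery)

results = {
    person: {name: expected_utility(lot, u) for name, lot in options.items()}
    for person, u in utilities.items()
}
for person, scores in results.items():
    best = max(scores, key=scores.get)
    print(person, {name: int(v) for name, v in scores.items()}, "->", best)
```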

Many believe that morality is objective. For this reason, moral mathematics often employs expected value theory, in which the moral utilities of outcomes are objective. Moral value, in the simplest terms, is how objectively morally good or bad an act is. Suppose the millionaires Alice and Bob consider donating £1 million each to either saving the rainforest in the Amazon basin or to reducing global poverty. Expected value theory recommends choosing the option with the highest objective expected moral utility. Which charity has a higher objective expected moral utility is difficult to determine. But, once determined, Alice and Bob should both donate to it. One of the two options is simply morally better.

Expected utility theory, like expected value theory, is a powerful moral mathematical tool for responding to uncertainty. But both theories risk being misapplied due to their reliance on probabilities. Humans are notoriously bad at probabilistic reasoning. There is a tiny probability of winning certain lotteries or of dying in a shark attack, estimated at roughly one in a few million. Yet we tend to overestimate the probabilities of such rare events, because our perception of their probabilities is distorted by things like wishful thinking and fear. We tend to overestimate the probability of very good and very bad things happening.

A further mistake is to assign a high probability to an outcome that actually has a lower probability. An example is the gambler’s fallacy: the gambler reasons that if ‘black’ comes up in a game of roulette 10 times in a row, then ‘red’ is bound to be next. The gambler wrongly assigns too high a probability to ‘red’. Another mistake involves the principle of indifference: in the absence of evidence, we should assign an equal probability to all outcomes. Heads and tails should be assigned a 0.5 probability if one knows nothing about the coin being tossed; these probabilities should be adjusted only if one discovers that the coin is biased. Applied where our ignorance is not genuinely symmetric, however, the principle assigns too high a probability to outcomes that are in fact unlikely.
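A quick simulation shows why the gambler is wrong: spins are independent, so red comes up after a run of 10 blacks about as often as it does on any spin. This is a sketch under an assumption not stated in the essay: a single-zero (European) wheel with 18 red, 18 black and 1 green pocket, modelled by pocket counts only rather than by the real colour layout.

```python
import random

# Assumption: single-zero wheel; pockets modelled by number only
# (0 = green, 1-18 = red, 19-36 = black), since only the counts matter here.
def spin(rng: random.Random) -> str:
    n = rng.randrange(37)
    if n == 0:
        return "green"
    return "red" if n <= 18 else "black"

def red_after_black_streak(streak: int, spins: int, seed: int = 0) -> tuple[float, int]:
    """Frequency of red on spins that immediately follow at least `streak` blacks in a row."""
    rng = random.Random(seed)
    run = 0              # current number of consecutive blacks
    reds = trials = 0
    for _ in range(spins):
        outcome = spin(rng)
        if run >= streak:
            trials += 1
            reds += outcome == "red"
        run = run + 1 if outcome == "black" else 0
    if trials == 0:
        raise ValueError("no streaks observed; increase `spins`")
    return reds / trials, trials

freq, trials = red_after_black_streak(streak=10, spins=2_000_000)
print(f"red after 10 blacks: {freq:.3f} over {trials} spins (any spin: {18 / 37:.3f})")
```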

Yet another type of mistake is to assign definite values when the values are unclear. Consider a very high-value event that has a very low probability. Suppose a commando mission has a very small probability of winning a battle. Whether winning the battle will save 1 million or 10 million lives and whether the probability of the mission’s success is 0.0001 or 0.0000001 are matters of conjecture. The expected moral value of the mission varies by a factor of 10,000: from 0.1 lives saved (0.0000001 × 1,000,000) to 1,000 lives (0.0001 × 10,000,000). So expected value theory might recommend aborting the mission as not worth the life of even one soldier (since 1 > 0.1) or undertaking it even if it will certainly cost 999 lives (since 1,000 > 999).
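The spread in the commando example follows directly from multiplying the two conjectured ranges. A minimal sketch with exact fractions, using the lower bound 0.0000001 × 1,000,000 and upper bound 0.0001 × 10,000,000 from the text:

```python
from fractions import Fraction

# Conjectured bounds from the example.
p_low, p_high = Fraction("0.0000001"), Fraction("0.0001")
lives_low, lives_high = 1_000_000, 10_000_000

ev_low = p_low * lives_low      # most pessimistic combination
ev_high = p_high * lives_high   # most optimistic combination
ratio = ev_high / ev_low

print(f"expected lives saved: {float(ev_low)} to {float(ev_high)} (factor {ratio})")
# The recommendation depends entirely on which end of the range we pick:
print("worth 1 certain death at the low end?", ev_low > 1)
print("worth 999 certain deaths at the high end?", ev_high > 999)
```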

Expected value theory is of little help in this case. It is useful in responding to uncertainty only when the probabilities are grounded in the available data. The less we know about the future, the less able we are to assign exact probabilities in the present. One should not simply assign a specific probability and moral value when these figures are not grounded in the available data, especially not to achieve a high expected value. Actions with a tiny probability of success do not become permissible or required merely because success would do a great deal of good. Moral mathematics can tempt us to seek precision where none is available and can thus allow us to manipulate the putatively objective calculations of expected value theory.

At what timescale, then, can we assign tiny probabilities to future events and why? On a shorter timescale, uncertainty can decrease. An event with a daily 1 per cent likelihood is very likely to occur in the next year, assuming the daily likelihood remains 1 per cent. On a longer timescale, uncertainty about events with tiny probabilities tends to increase. We can rarely say an event will have a daily 1 per cent probability 10 years from now. If each action can lead to multiple outcomes, uncertainty will quickly compound and ramify, much like compound interest on a long-term loan. In fact, according to recent empirical literature, ‘there is statistical evidence that long-term forecasts have a worse success rate than a random guess.’ Our capacity to assess the future declines significantly as time horizons increase.
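Both claims in this paragraph can be checked numerically. The first part computes the chance that an event with a fixed, independent 1 per cent daily probability happens at least once in a year; the second shows how the number of possible paths grows when each step has several outcomes. The branching factor of 3 is a hypothetical choice for illustration.

```python
def prob_at_least_once(p_per_period: float, periods: int) -> float:
    """Chance that an event with a fixed, independent per-period probability occurs at least once."""
    return 1 - (1 - p_per_period) ** periods

yearly = prob_at_least_once(0.01, 365)
print(f"1% per day for a year: {yearly:.3f}")

# Hypothetical branching factor: each step in a causal chain has 3 possible outcomes.
BRANCHES = 3
for steps in (1, 5, 10, 20):
    print(f"{steps} steps -> {BRANCHES ** steps:,} possible paths")
```

The first figure relies on the daily probability staying fixed, which is exactly the assumption the paragraph says we rarely have grounds for over long horizons.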

But now consider longtermism, the idea that ‘because of the potential vastness of the future portion of the history of humanity and other sentient life, the primary determinant of optimal policy, philanthropy and other actions is the effects of those policies and actions on the very long-run future, rather than on more immediate considerations.’ Longtermism is a currently celebrated application of moral mathematics, but it demonstrates some of the mistakes we might be drawn into if we don’t pay sufficient attention to its limitations.

Longtermists claim that humanity is at a pivot point; choices made this century may shape its entire future. We can positively influence our future in two ways. First, by averting existential risks such as man-made pandemics, nuclear war and catastrophic climate change. This will increase the number and wellbeing of future people. Second, by changing civilisation’s trajectory, which involves moving funds and other resources to longtermist causes.

The number of future people longtermism considers is huge. If humanity lasts 1,000,000 years – a typical lifespan for a mammalian species – the number of humans yet to be born is in the trillions at least. The numbers become much larger if technological progress allows future people to colonise other planets, so that, as the argument goes, ‘our descendants develop over the coming millions, billions, or trillions of years’, in the words of Nick Beckstead of the Future of Humanity Institute. According to the estimate cited in Nick Bostrom’s book Superintelligence (2014), the number of future people could reach 10⁵⁸, or the number 1 followed by 58 zeros.
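A back-of-the-envelope check of ‘trillions at least’: multiply an assumed birth rate by the remaining lifespan. The birth rate of 100 million a year is a hypothetical round figure of roughly today's order of magnitude, held constant for simplicity; it is not taken from the essay.

```python
# Hypothetical round numbers for illustration only.
births_per_year = 100_000_000   # assumed constant birth rate (order of magnitude of today's)
years_remaining = 1_000_000     # the 1,000,000-year lifespan from the text

future_people = births_per_year * years_remaining
print(f"{future_people:.0e} future people")   # 10**14, i.e. 100 trillion

bostrom_figure = 10 ** 58
print(f"the 10**58 estimate is {bostrom_figure // future_people:.0e} times larger")
```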

The wellbeing of future generations is a practical question for us, here and now, because the future is affected by the present, by our actions. This was articulated more than 100 years ago by the British economist Arthur Cecil Pigou in his influential book The Economics of Welfare (1920). Pigou warned against the tendency of present generations to devote too few resources to the interests of future generations: ‘the environment of one generation can produce a lasting result, because it can affect the environment of future generations,’ he wrote. In the essay A Mathematical Theory of Saving (1928), the philosopher, mathematician and economist Frank Ramsey addressed the question of how much society should save for future generations – over and above what individuals save for their children or grandchildren. Parfit’s book Reasons and Persons is itself the locus classicus of population ethics, a field that discusses the problems and paradoxes arising from the fact that our actions may affect the identities of those who will come to exist. As Parfit wrote in ‘Future Generations: Further Problems’ (1982), ‘we ought to have as much concern about the predictable effects of our acts whether these will occur in 200 or 400 years.’

The views of Pigou, Ramsey and Parfit concern comparatively short-term long-term thinking, dealing with mere hundreds of years. Longtermism, on the other hand, deals with the implications of our choices on all future generations – thousands, millions and billions of years in the future. But this long-term thinking faces two major challenges. First, this very far future is hard to predict – any number of factors that we cannot now know anything about will determine how our current actions play out. Second, we are simply ignorant of the long-term effects of our actions. Longtermism thus risks what the Oxford philosopher Hilary Greaves has called cluelessness: that unknown long-term effects of our actions will swamp any conclusions reached by considering their reasonably foreseeable consequences.

Longtermism claims that we ‘can now use expected value theory to hedge against uncertainty’, as the moral philosopher William MacAskill put it to The Guardian this summer. In many circumstances, the argument goes, unknown consequences do not overwhelm our ability to make informed decisions based on the foreseeable consequences of our actions. For instance, we are uncertain about the probability of succeeding in space exploration. But if we succeed, it will allow the existence of trillions of times more future people throughout space than if humanity never leaves Earth. For any probability of space exploration succeeding higher than, say, 0.0000000001 per cent, expected value theory recommends prioritising space exploration over reducing global poverty, even if the latter course of action will save millions of current lives.
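The threshold in this argument is a break-even probability: the certain benefit divided by the benefit if the gamble succeeds. The figures below are hypothetical, picked so that a million present lives set against 10**18 future lives reproduces the 0.0000000001 per cent threshold mentioned in the text.

```python
from fractions import Fraction

def break_even_probability(certain_value: int, success_value: int) -> Fraction:
    """Probability above which p * success_value exceeds certain_value under expected value theory."""
    return Fraction(certain_value, success_value)

# Hypothetical figures for illustration only.
poverty_lives = 1_000_000   # present lives saved for certain
space_lives = 10 ** 18      # future lives enabled if space exploration succeeds

p_star = break_even_probability(poverty_lives, space_lives)
print(f"break-even probability: {float(p_star):.0e} ({float(p_star * 100):.0e} per cent)")
```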

This conclusion is highly unpalatable. Should we actually make significant sacrifices to minutely lower the probability of extremely bad outcomes, or minutely increase the probability of extremely good outcomes? In reply, some longtermists argue for fanaticism. Fanaticism is the view that for every guaranteed outcome, however bad, and every probability, however small, there always exists some extreme, but very unlikely, disaster such that the certain bad outcome is better than a tiny probability of the extreme disaster. Conversely, for every outcome, however good, and every probability, however small, there always exists some heightened goodness, such that the tiny probability of this elevated goodness is still better than the guaranteed good outcome. Fanaticism suggests that saving millions of current lives is, therefore, worse than the tiny probability of benefiting trillions of people in the future. Prioritising space exploration over the lives of millions today is not an artefact of expected value theory, but proof that it achieves the correct moral result.
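Expected value theory with unbounded values has exactly the structure fanaticism describes: for any certain value and any probability p > 0, a payoff larger than the certain value divided by p makes the long shot better. A minimal sketch, with a hypothetical certain outcome of a million lives saved:

```python
from fractions import Fraction

def stake_needed(certain_value: Fraction, p: Fraction) -> Fraction:
    """Payoff X at which a p-chance of X matches certain_value in expected value.
    Any payoff above this makes the gamble better under expected value theory."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    return certain_value / p

certain = Fraction(1_000_000)   # hypothetical: a million lives saved for sure
for exponent in (3, 6, 9, 12):
    p = Fraction(1, 10 ** exponent)
    print(f"p = 1e-{exponent}: the gamble wins once its payoff exceeds {float(stake_needed(certain, p)):.0e}")
```

However small p becomes, the loop always finds a finite threshold, which is why unbounded expected value reasoning cannot resist the fanatical conclusion.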

Fanaticism is intriguing, but it cannot deal with the extreme uncertainty of our probabilities about the far future while still allowing the use of expected value theory. The uncertainty of our knowledge about the effect of current policies on the far future is near total. We cannot obtain evidence about how to use resources to accomplish the goals that longtermism advocates, evidence that is necessary to assign probabilities to outcomes. Nor can the uncertainty about the far future be downplayed merely by assuming an astronomical number of future people multiplied by their expected wellbeing. It is tempting to apply moral mathematics in such cases, to seek clarity and precision in the face of uncertainty, just as we do in the cases of lotteries and charitable donations. But we must not imagine that we know more than we do. If using expected value theory to estimate the moral significance of the far future under conditions of uncertainty leads to fanaticism or cluelessness, it is the wrong tool for the job. Sometimes, our uncertainty is simply too great, and the moral thing to do is to admit we do not have any idea about the probabilities of future events.

It is true that, if there is at least a tiny probability of space exploration benefiting a trillion future people, then the expected value of prioritising space exploration would be very high. But there is also at least a tiny probability that prioritising the reduction of global poverty would be the best way to benefit trillions of future people. Perhaps one of those saved will be the genius who will develop a feasible method of space travel. Moreover, if there is also at least a tiny probability of unavoidable human extinction in the near future, reducing current suffering should take priority. Once we are dealing with the very distant future and concomitantly with tiny probabilities, it is possible that virtually any imaginable policy will benefit future people the most.

Expected value theory, then, does not help longtermism to prioritise choices among means under conditions of uncertainty. The actual probability that investing in space exploration today will allow humanity to reach the stars 1,000,000 years from now is unknown. We know only that it is possible (probability > 0), but not certain (probability < 1). This makes the expected value indefinite and, therefore, expected value theory cannot be applied. The outcomes of investing in space exploration today cannot be assigned a probability, however high or low. Galileo could not have justified his priorities on the grounds that his research would eventually take humanity into space, not least because he could not have assigned any probability, however high or low, to Apollo 11 landing on the Moon more than 300 years after his death.
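The contrast between a grounded probability and one known only to lie strictly between 0 and 1 can be shown with interval bounds on the expected value. A minimal sketch; the benefit of 10**18 and the 1 to 2 per cent range are hypothetical figures chosen for illustration.

```python
from fractions import Fraction

def expected_value_bounds(p_low, p_high, value):
    """Lowest and highest expected value when the probability is only known to lie in [p_low, p_high]."""
    return p_low * value, p_high * value

VALUE = Fraction(10 ** 18)   # hypothetical benefit if the far-future outcome occurs

# A probability grounded in data, known to lie between 1% and 2%: a usable, bounded answer.
grounded = expected_value_bounds(Fraction(1, 100), Fraction(2, 100), VALUE)
print("grounded:", [f"{float(v):.0e}" for v in grounded])

# Only "possible but not certain": any p with 0 < p < 1 is admissible.
# Shrinking eps widens the range towards (0, VALUE) without limit; no single number emerges.
for eps in (Fraction(1, 10 ** 3), Fraction(1, 10 ** 30), Fraction(1, 10 ** 300)):
    lo, hi = expected_value_bounds(eps, 1 - eps, VALUE)
    print(f"eps={float(eps):.0e}: {float(lo):.0e} to {float(hi):.3e}")
```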

Moral mathematics forces precision and clarity. It allows us to better understand the moral commitments of our ethical theories, and identify the sources of disagreement between them. And it helps us draw conclusions from our ethical assumptions, unifying and quantifying diverse arguments and principles of morality, thus discovering the principles embedded in our moral conceptions.

Notwithstanding the power of mathematics, we must not forget its limitations when applied to moral issues. Ludwig Wittgenstein once argued that confusion arises when we become bewitched by a picture. It is easy to be seduced by compelling numbers that misrepresent reality. Some may think this is a good reason to keep morality and mathematics apart. But I think this tension is ultimately a virtue, rather than a vice. It remains a task of moral philosophy to meld these two fields together. Perhaps, as John Rawls put it in his book A Theory of Justice (1971), ‘if we can find an accurate account of our moral conceptions, then questions of meaning and justification may prove much easier to answer.’