Here is a dilemma you may find familiar. On the one hand, a life well lived requires security, safety and regularity. That might mean a family, a partner, a steady job. On the other hand, a life well lived requires new experiences, risk and authentic independence, in ways incompatible with a family or partner or job. Day to day, it can seem not just challenging to balance these demands, but outright impossible. That’s because, we sense, the demands of a good life are not merely difficult; sometimes, the demands of a good life actually contradict. ‘Human experience,’ wrote the novelist George Eliot in 1876, ‘is usually paradoxical.’

One aim of philosophy is to help us make sense of our lives, and one way philosophy has tried to help in this regard is through logic. Formal logic is a perhaps overly literal approach, where ‘making sense’ is cashed out in austere mathematical symbolism. But sometimes our lives don’t make sense, not even when we think very hard and carefully about them. Where is logic then? What if, sometimes, the world truly is senseless? What if there are problems that simply cannot be resolved consistently?

Formal logic as we know it today grew out of a project during the 17th-century Enlightenment: the rationalist plan to make sense of the world in mathematical terms. The foundational assumption of this plan is that the world does make sense, and can be made sense of: there are intelligible reasons for things, and our capacity to reason will reveal these to us. In his book La Géométrie (1637), René Descartes assumed that the world could be covered by a fine-mesh grid so precise as to reduce geometry to analysis; in his Ethics (1677), Baruch Spinoza proposed a view of Nature and our place in it so precise as to be rendered in proofs; and in a series of essays written around 1679, G W Leibniz envisioned a formal language capable of expressing every possible thought in structure-preserving, crystalline symbols – a characteristica universalis – that obeys precise algebraic rules, allowing us to use it to find answers – a calculus ratiocinator.

Rationalism dreams big. But dreams are cheap. The startling thing about this episode is that, by the turn of the 20th century, Leibniz’s aspirations seemed close to coming true due to galvanic advances across the sciences, so much so that the influential mathematician David Hilbert was proposing something plausible when in 1930 he made the rationalist assumption a credo: ‘We must know, we will know.’

Hilbert’s credo was based in part on the spectacular successes of logicians in the late 19th century carving down to the bones of pure mathematics (geometry, set theory, arithmetic, real analysis) to find the absolute certainty of deductive validity. If logic itself can be understood in exacting terms, then the project of devising a complete and consistent theory of the world (or at least, the mathematical basis thereof) appeared to be in reach – a way to answer every question, as Hilbert put it, ‘for the honour of human understanding itself’.

But even as Hilbert was issuing his credo and elaborating his plans for solving the Entscheidungsproblem – of building what we would now call a computer that can mechanically decide the truth or falsity of any sentence – all was not well. Indeed, all had not been well for some time.

Already in 1902, on the verge of completing his life’s work, the logician Gottlob Frege received an ominous letter from Bertrand Russell. Frege had been working to provide a foundation of pure logic for mathematics – to reduce complex questions about arithmetic and real analysis to the basic question of formal, logical validity. If this programme, known as logicism, were successful, then the apparent certainty of logical deduction, the inescapable truth of the conclusions of sound derivations, would percolate up, so to speak, into all mathematics (and any other area reducible to mathematics). In 1879, Frege had devised an original ‘concept notation’ for quantified logic exactly for this goal, and had used it for his Basic Laws of Arithmetic (Grundgesetze der Arithmetik; two volumes of imposing symbolism, published in 1893 and 1903). Russell shared this logicist goal, and in his letter to Frege, Russell said, in essence, that he had liked Frege’s recent book very much, but had just noticed one little oddity: that one of the basic axioms upon which Frege had based all his efforts seemed to entail a contradiction.

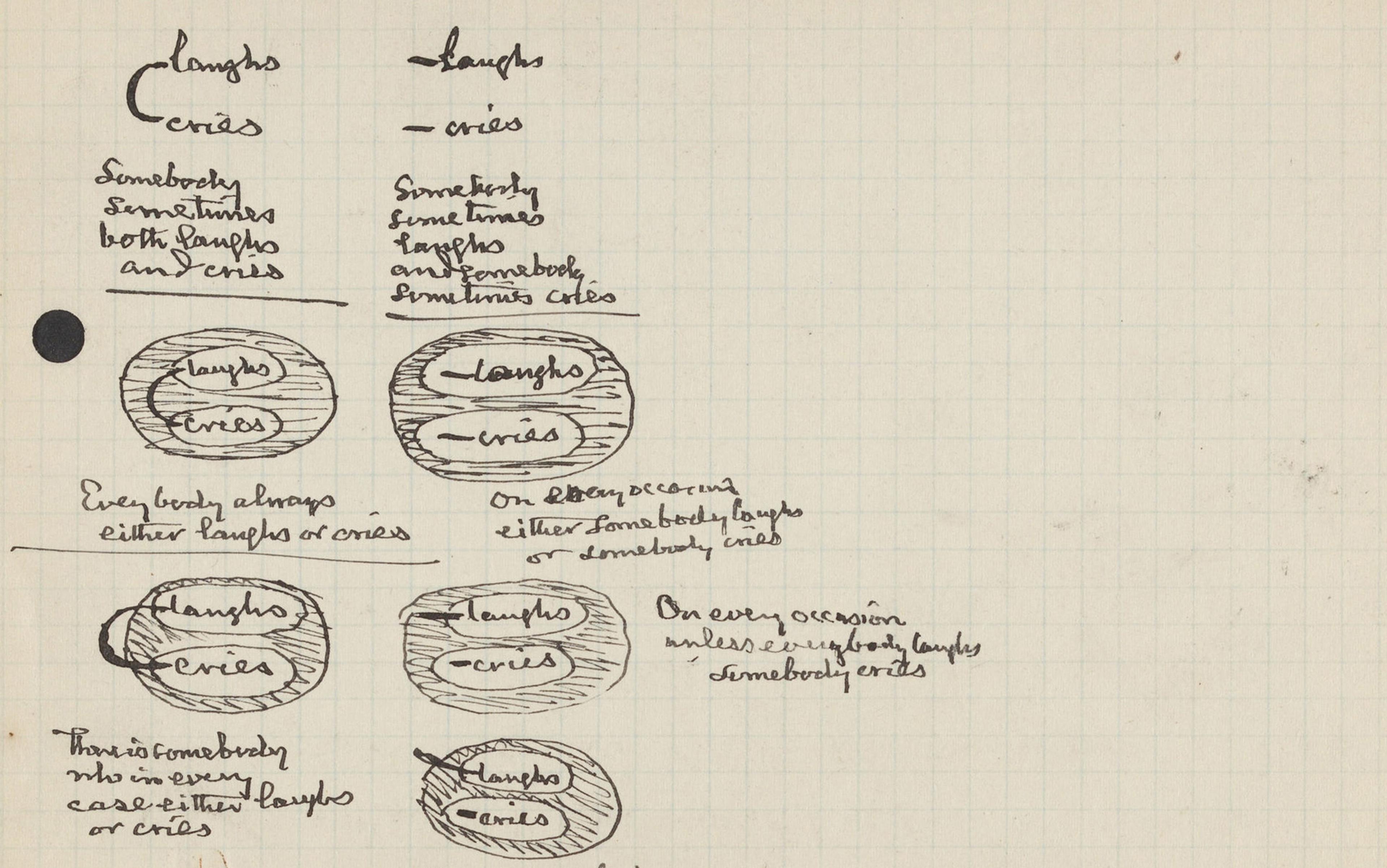

Frege had assumed what he called ‘Basic Law V’ which says, in effect: Sets are collections of things that share a property. For example, the set of all triangles consists of all and only the triangles. This seemed obvious enough for Frege to assume as a self-evident logical truth. But from Basic Law V, Russell showed that Frege’s system could prove a statement of the form P and not-P as a theorem. It is called Russell’s Paradox:

Let R be the collection of all things with the property of ‘not being a self-member’. (For example, the set of triangles is not itself a triangle, so it is an R.) What about R itself? If R is in R, then it is not, by definition of R; if R is not in R, then it is, again by definition. It must be one or the other – so it is both: R is in R and R is not in R, self-membered and not, a contradiction.
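For readers who prefer symbols, the same argument can be sketched in three lines. The notation is an assumption of this sketch rather than Frege’s or Russell’s own: ∈ stands for ‘is a member of’, and the display uses the amsmath package.

```latex
\begin{gather*}
R = \{\, x : x \notin x \,\} \\
\forall x\, (x \in R \leftrightarrow x \notin x) \\
R \in R \leftrightarrow R \notin R \quad \text{(instantiating } x := R\text{)}
\end{gather*}
```

The last line says that each of ‘R is in R’ and ‘R is not in R’ implies the other. Since one of them must hold, both do.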

The whole system was in fact inconsistent, and thus – in Frege and Russell’s view – absurd. Nonsense. In a few short lines, Frege’s life work had been shown to be a failure.

He would continue to work for another two decades, but his grand project was destroyed. Russell would also spend the next decades trying to come to terms with his own simple discovery, first writing the monumental but flawed Principia Mathematica (three volumes, 1910-13) with Alfred North Whitehead, then eventually pivoting away from logic without ever really solving the problem. Years would pass, with some of the best minds in the world trying mightily to overcome the contradiction Russell had found, without finding a fully satisfactory solution.

By 1931, a young logician named Kurt Gödel had leveraged a similar paradox out of Russell’s own system. Gödel found a statement that, if it could be proved or refuted – that is, if it were decidable – would make the system inconsistent. Gödel’s incompleteness theorems show that there cannot be a complete, consistent and computable theory of the world – or even just of numbers! Any complete and computable theory will be inconsistent. And so, the Enlightenment rationalist project, from Leibniz to Hilbert’s programme, has been shown impossible.

Or so goes the standard story. But the lesson that we must give up on a full understanding of the world in which we live is an enormous pill to swallow. It has been roughly a century since these events, a century filled with new advances in logic, and some philosophers and logicians think it is time for a reappraisal.

If the world were a perfect place, we would not need logic. Logic tells us what follows from things we already believe, things we are already committed to. Logic helps us work around our fallible and finite limitations. In a perfect world, the infinite consequences of our beliefs would lie transparently before us. ‘God has no need of any arguments, even good ones,’ said the logician Robert Meyer in 1976: all the truths are apparent before God, and He does not need to deduce one from another. But we are not gods and our world is not perfect. We need logic because we can go wrong, because things do go wrong, and we need guidance. Logic is most important for making sense of the world when the world appears to be senseless.

The story just told ends in failure in part because the logic that Frege, Russell and Hilbert were using was classical logic. Frege assumed something obvious and got a contradiction, but classical logic makes no allowance for contradiction. Because of the classical rule of ex contradictione quodlibet (‘from a contradiction everything follows’), any single contradiction renders the entire system useless. But logic is a theory of validity: an attempt to account for what conclusions really do follow from given premises. As contemporary ‘anti-exceptionalists about logic’ have noted, theories of logic are like everything else in science and philosophy. They are developed and debated by people, and all along there have been disagreements about what the correct theory of logic is. Through that ongoing debate, many have suggested that a single contradiction leading to arbitrary nonsense seems incorrect. Perhaps, then, the rule of ex contradictione itself is wrong, and should not be part of our theory of logic. If so, then perhaps Frege didn’t fail after all.
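To see why classical logic is so unforgiving, it helps to look at the standard textbook derivation of an arbitrary claim Q from a contradiction. The derivation is not given in the text above; it is often credited to C I Lewis, and the step labels are the usual ones. The display assumes the amsmath package.

```latex
\[
\begin{array}{lll}
1. & P \land \neg P & \text{premise} \\
2. & P              & \text{from 1, conjunction elimination} \\
3. & P \lor Q       & \text{from 2, disjunction introduction} \\
4. & \neg P         & \text{from 1, conjunction elimination} \\
5. & Q              & \text{from 3 and 4, disjunctive syllogism}
\end{array}
\]
```

Nothing about Q was used along the way, so Q can be anything at all. Anyone who wants to reject ex contradictione has to give up at least one of these steps, and many paraconsistent logicians single out the last.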

Over the past decades, logicians have developed mathematically rigorous systems that can handle inconsistency not by eradicating or ‘solving’ it, but by accepting it. Paraconsistent logics create a new opportunity for theories that, on the one hand, seem almost inalienably true (like Frege’s Basic Law V) but, on the other, are known to contain some inconsistencies, such as blunt statements of the form P and not-P. In classical logic, there is a hard choice: give up any inconsistent theory as irrational, or else devolve into apparent mysticism. With these new advances in formal logic, there may be a middle way, whereby sometimes an inconsistency can be retained, not as some mysterious riddle, but rather as a stone-cold rational view of our contradictory world.

Paraconsistent logics have been most famously promoted by Newton da Costa since the 1960s, and Graham Priest since the 1970s. Though viewed initially (and still) with some scepticism, ‘paraconsistent logics’ now have an official mathematics classification code (03B53, according to the American Mathematical Society) and there have been five World Congress of Paraconsistency meetings since 1997. These logics are now studied by researchers across the globe, and hold out the prospect of accomplishing the impossible: recasting the very laws of logic themselves to make sense of our sometimes seemingly senseless situation. If it works, it could ground a new sort of Enlightenment project, a rationalism that rationally accommodates some apparent irrationality. On this sort of approach, truth is beholden to rationality; but rationality is also ultimately beholden to truth.

That might sound a little perplexing, so let’s start with a very ordinary example. Suppose you are waiting for a friend. They said they would meet you around 5pm. Now it is 5:07. Your friend is late. But then again, it is still only a few minutes after 5pm, so really, your friend is not late yet. Should you call them? It is a little too soon, but maybe it isn’t too soon … because your friend is both late and not late. (What they’re not is neither late nor not late, because you are clearly standing there and they clearly haven’t arrived.) Whatever you think of this, paraconsistent logic simply counsels that, at this point, you should not conclude, however provisionally, that the Moon is made of green cheese, or 2+2=5, or that maybe aliens did build the pyramids after all. That would be just bad reasoning.
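One way to make the waiting-for-a-friend case concrete is a three-valued semantics of the kind used in Priest’s ‘Logic of Paradox’ (LP). That choice is an assumption of this sketch, since the text does not single out any one paraconsistent system, and the variable names are hypothetical. A sentence may be true only, false only, or both, and a claim is acceptable when it is at least true.

```python
# Truth values ordered F < B < T. B means "both true and false".
# Reading "late and not late" as B is an illustrative assumption.
F, B, T = 0, 1, 2
DESIGNATED = {B, T}  # the values that count as "at least true"


def neg(a):
    """Negation swaps T and F and leaves B where it is."""
    return 2 - a


def conj(a, b):
    """Conjunction takes the lower of the two values."""
    return min(a, b)


def holds(value):
    """A claim is acceptable when its value is designated."""
    return value in DESIGNATED


late = B         # hypothetical: the friend is both late and not late
moon_cheese = F  # the Moon is made of green cheese: plainly false

contradiction = conj(late, neg(late))
print(holds(contradiction))  # True: "late and not late" is accepted
print(holds(moon_cheese))    # False: the absurd conclusion is not
```

The contradiction is accepted while the green-cheese claim is not, so on this semantics the step from one to the other fails to preserve truth.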

Now, such situations are so commonplace that perhaps it seems implausible that some fancy system of non-classical logic is needed to explain what is going on. But maybe we are so enmeshed in contradictions in our day-to-day lives, so constantly pulled in multiple conflicting directions at once, that we don’t even notice, except when the inconsistency becomes so insistent that it can’t be ignored.

Paraconsistent logics help us find structure in the noise. Most strikingly, a subfield within paraconsistent logic has emerged that focuses on its applications to mathematics. One idea here would be to go back to Frege’s grand system in his Grundgesetze and recast it in a paraconsistent logic. Classical approaches mandate finding a way so that Russell’s paradox is no longer derivable (as has been attempted by many). A paraconsistent approach, on the other hand, will allow the paradox to go through, just in a way that does not do (too much) damage. Richard Sylvan, an early visionary in inconsistent mathematics, proposed in the late 1970s an axiomatic set theory that ‘meets the paradoxes head on’. In recent years, there have been several good, though inconclusive, steps in this direction. The idea is that, in this way, the foundations of mathematics can be set back on the path to finding unshakable (if paradoxical) certainty at its bottom.

An immediate concern about a paraconsistent approach is that it looks like a kind of cheating. It seems to sidestep the hard work of philosophical theorising or scientific theory-building. The worry, articulated recently by the philosopher of science Alan Musgrave in ‘Against Paraconsistentism’ (2020), is that:

‘It can plausibly be maintained that the growth of human knowledge has been and is driven by contradictions. More precisely, that it has been and is driven by the desire to remove contradictions in various systems of belief.’

If paraconsistent logics allow us to rest easy with an inconsistent theory, then there would be no impetus to improve. Another way to put the objection is that paraconsistency seems to offer an easy way out of difficult problems, a way to shrug off any objection or counter-evidence, to maintain flawed or failed theories long after they’ve been discredited. Does archaeological evidence contradict ancient-alien theory? No worries! This is just a contradiction, no threat to the theory. Rational debate seems stymied, if not destroyed.

This methodological objection points back to some of the assumptions that operate below the surface in our scientific and philosophical theories, from the Enlightenment and earlier. Often, there are two or more competing theories to explain some given data. How do we decide which to adopt? A standard account from Thomas Kuhn, in 1977, is that we weigh up various theoretical virtues: consistency, yes, but also explanatory depth, accord with evidence, elegance, simplicity, and so forth. Ideally, we might have all of these, but a criterion such as simplicity will be set aside if it is outweighed by, say, predictive power. And so too for consistency, say paraconsistent logicians such as Priest and Sylvan.

Any of the theoretical virtues are virtuous only to the extent that they match the world. For example, all else being equal, a simpler theory is better than a more complicated one. But ‘all else’ is rarely equal, and as people from Aristotle to David Hume point out, the simpler theory is only better to the extent that the world itself is simple. If not, then not. So too with consistency. The virtue of any given theory then will be a matter of its match with the world. But if the world itself is inconsistent, then consistency is no virtue at all. If the world is inconsistent – if there is a contradiction at the bottom of logic, or at the bottom of a bowl of cereal – a consistent theory is guaranteed to leave something out.

What does prompt progress, then, in cases where we might decide that an inconsistent theory is allowable? There are many ways one theory may be better than another. In many cases, consistency will still win out (eg, your friend is not arriving at both 5:12 and 5:20), and your best waiting-for-a-friend-theory should not say that they are. But that theory choice is more to do with facts about people and time than logical consistency. Using inconsistency as a catch-all, as classical logic does, looks – to a paraconsistent logician – like a too-blunt avoidance of the real hard work: of thinking things through on an individual basis. ‘How then are we to determine whether a given contradiction in a given context is rationally acceptable?’ Priest and Sylvan asked in 1983. There is a simple solution: ‘A preliminary answer is that, at this stage, we need to consider each sort of case on its merits.’

A more on-the-ground answer to Musgrave’s methodological worries – and a warning to anyone tempted by paraconsistency as some kind of free pass – is that working within paraconsistent logics makes things more difficult, not less. The paraconsistent idea is that classical logic makes too many arguments valid, too many proofs go through when they shouldn’t, and so these validities and proofs are removed from the logical machinery. That makes drawing conclusions in a paraconsistent framework much harder because there are fewer inferential paths available. Someone who attempts a paraconsistent ancient-aliens theory may find that constructing valid arguments in their new ‘more permissive’ system is too challenging to be worth the effort.
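The loss of inferential paths can be checked by brute force. The sketch below compares classical two-valued logic with one illustrative paraconsistent logic, Priest’s three-valued LP; the choice of LP, the function names and the four sample inferences are all assumptions of this example rather than anything fixed by the text. An inference counts as valid when no assignment of values makes every premise acceptable and the conclusion unacceptable.

```python
from itertools import product

F, B, T = 0, 1, 2  # B = "both true and false"; classical logic uses only F and T


def neg(a):
    return 2 - a


def conj(a, b):
    return min(a, b)


def disj(a, b):
    return max(a, b)


def valid(premises, conclusion, values, designated):
    """True if no valuation of (p, q) designates all premises but not the conclusion."""
    for p, q in product(values, repeat=2):
        premises_hold = all(f(p, q) in designated for f in premises)
        if premises_hold and conclusion(p, q) not in designated:
            return False
    return True


CLASSICAL = ((F, T), {T})
LP = ((F, B, T), {B, T})

# Each entry: (list of premises, conclusion), as functions of the values of p and q.
inferences = {
    "explosion": ([lambda p, q: conj(p, neg(p))], lambda p, q: q),
    "disjunctive syllogism": (
        [lambda p, q: disj(p, q), lambda p, q: neg(p)],
        lambda p, q: q,
    ),
    "conjunction elimination": ([lambda p, q: conj(p, q)], lambda p, q: p),
    "addition": ([lambda p, q: p], lambda p, q: disj(p, q)),
}

results = {
    name: (valid(prem, concl, *CLASSICAL), valid(prem, concl, *LP))
    for name, (prem, concl) in inferences.items()
}

for name, (classical_ok, lp_ok) in results.items():
    print(f"{name}: classical={classical_ok}, LP={lp_ok}")
```

Under these assumptions, all four inferences are classically valid, but only conjunction elimination and addition survive in LP. Explosion is gone, as intended, and so is disjunctive syllogism, an everyday pattern of reasoning that a paraconsistent theorist has to learn to do without.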

And that points to some serious practical problems for paraconsistency that have emerged since it was proposed. Perhaps Frege need no longer worry that Russell’s contradiction will lead to 0=1 in his theory. But Frege also wants his theory to prove that 1+1=2 and support other elementary arithmetic. A paraconsistent Frege may start to worry, with good reason, that these true results are no longer derivable either. Depending on your views about the role of logic in mathematics, this issue, sometimes called ‘classical recapture’, is a serious problem. Sylvan put forward the idea of ‘rehabilitating’ mathematics using paraconsistency – trying to regrow established truths rather in the way one might rehabilitate a damaged ecosystem. As of today, much of Sylvan’s project remains undone. Work on this problem has been one of the most active and challenging areas of paraconsistent research.

What would a rehabilitated rationalist project look like? Gödel proved there cannot be a theory that answers every question in a consistent and computable way. The prevailing wisdom is that we will need to make do with theories that are either incomplete, or uncomputable (which comes to much the same thing as incompleteness, since, even if there is an answer, we have no effective way to get at it). A paraconsistent way forward would be to look for a system with precise and effective rules, that does answer every question after all – a complete description of the world (or at least the mathematical bit) – but where the system sometimes ‘over’-answers the question, saying both YES and NO. Because sometimes, maybe, the answer is both YES and NO.

The deep unease about paraconsistency, beyond methodological issues about scientific progress or practical problems about devising proofs, is what it would philosophically mean to accept a worldview that includes some falsity (where ‘false’ means having a true negation). How can a false theory be acceptable? And if it is or could be, then once consistency is no longer inviolable, is there any hard ground any more? If falsity is possible, then maybe everything is possible, and not in a good way. Perhaps this is what Musgrave is gesturing at when he says ‘an inconsistent theory provides no good explanation of anything’. If this is correct, any restored paraconsistent Enlightenment project will be a Pyrrhic victory, or worse.

The explanations an inconsistent theory provides, it must be admitted, may not look like what traditional philosophers have been expecting. But the expectations of traditional philosophers have not come to pass; indeed, Gödel gave us a mathematical proof that they will never come to pass. In the meantime, there are other kinds of valuable explanation right in front of us. As Schrödinger put it: ‘The task is not so much to see what no one has yet seen, but to think what no one has yet thought, about that which everybody sees.’

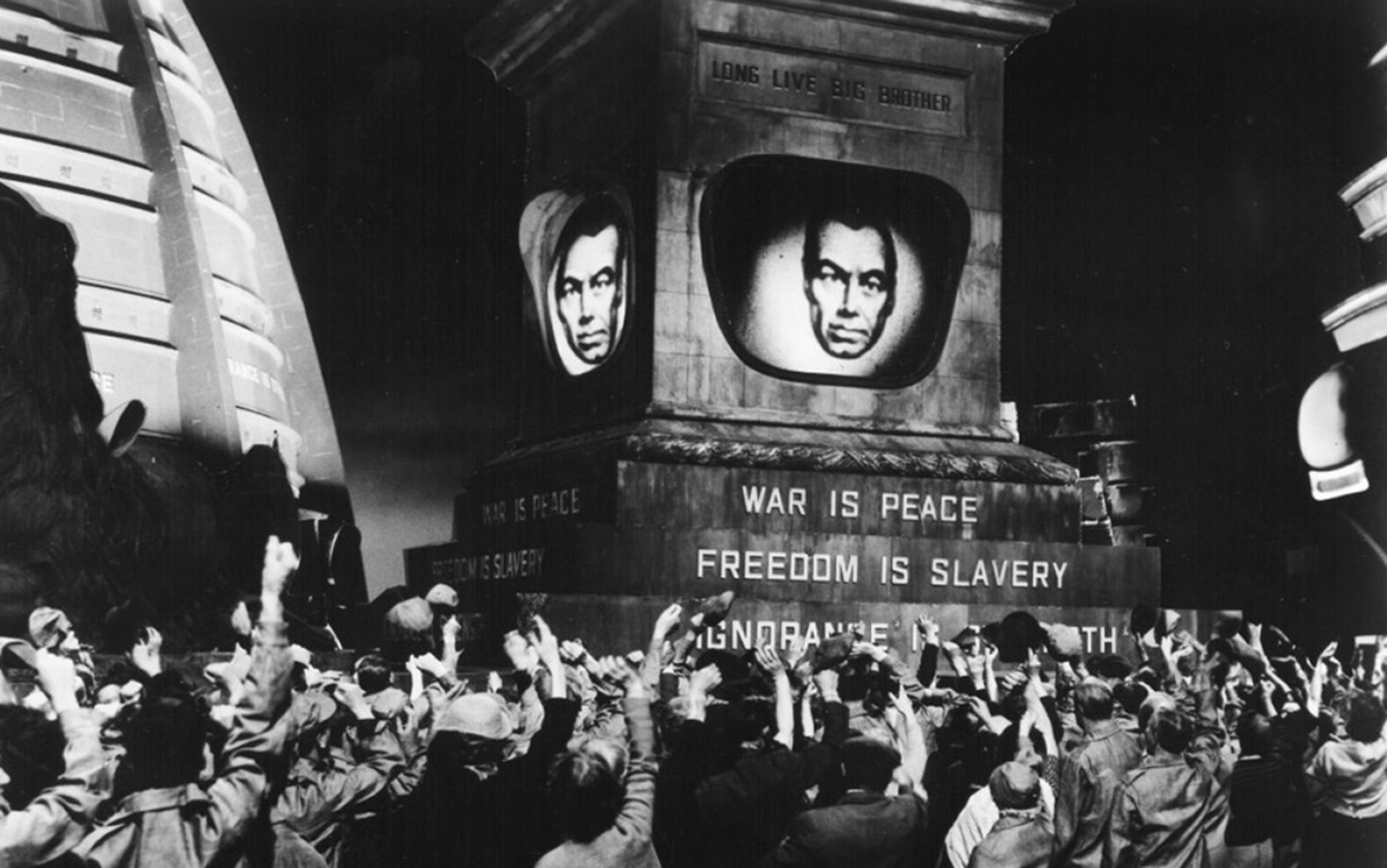

In 1921, a young Ludwig Wittgenstein’s Tractatus Logico-Philosophicus was published, after Russell’s qualified achievement with Principia but in advance of Gödel’s limiting theorems. Wittgenstein announces in the book’s preface that he has solved all the problems of philosophy. He marches to this conclusion through a relentless sequence of numbered propositions, appearing to lay out the nature of logic, what it can accomplish and, most crucially, what it cannot. By the end, his march arrives at the coastline, as it were, where we can look out at the vastness of the ocean, though logic will take us no further. According to Wittgenstein, the limits of logic mean that what is truly important in life can be shown but not said. He writes: ‘The solution of the riddle of life in space and time lies outside space and time.’ But then, he adds: ‘The riddle does not exist.’

And so, Wittgenstein is forced to conclude that all of his talk about showing and saying and riddles has itself been illegitimate. Yes, ‘there are, indeed, things that cannot be put into words … They are what is mystical’ – but the mystical is itself logically impossible, a senseless contradiction. Wittgenstein must admit that his whole beautiful book has been, by his own lights, nonsense, and the problems of philosophy are not so much solved as passed over in silence.

Wittgenstein was looking, like many, for an Archimedean point, a place ‘outside’ the world. This would be the view sub specie aeternitatis (borrowing a phrase from Spinoza). Only from there, he thought, could the world be explained. In seeking even to articulate that, to draw the limits of what we can understand, Wittgenstein contradicts himself, and inevitably so. He finds a contradiction, just as Frege and Russell and Gödel did when they attempted a complete theory. Wittgenstein took this as a kind of failure. But what if he had found what he was looking for and just didn’t recognise it? Perhaps Wittgenstein, like many others, felt pushed to make a false choice between a mysticism that provides some all-encompassing but inarticulate sense of the world, and a rational theory that is rigorous and precise but must be forever incomplete, inadequate.

This is a false choice if there can be a theory of the world that does both. Paraconsistency today does not have such a theory ready, but it holds out the (im)possibility of one, someday. It has recently been applied to religious worldviews (to Buddhism by Priest, to Christianity by Jc Beall). Maybe the lesson to take is that the philosophical Archimedean point at the end of the Enlightenment project will need to be both in, and not in, the world. ‘The proposition that contradicts itself,’ wrote the later Wittgenstein, ‘would stand like a monument (with a Janus head) over the propositions of logic.’

If we are living in an inconsistent world, in a world with contradictions from the foundations of mathematics to the triviality of dinner appointments, then its logic will leave room for falsity, doubts and disagreements. That is something many philosophers outside analytic and logical traditions have been urging for some time. As Simone de Beauvoir wrote: ‘Let us try to assume our fundamental ambiguity … One does not offer an ethics to a god.’ God would have no need of a logic, not even a paraconsistent one. But maybe we do.