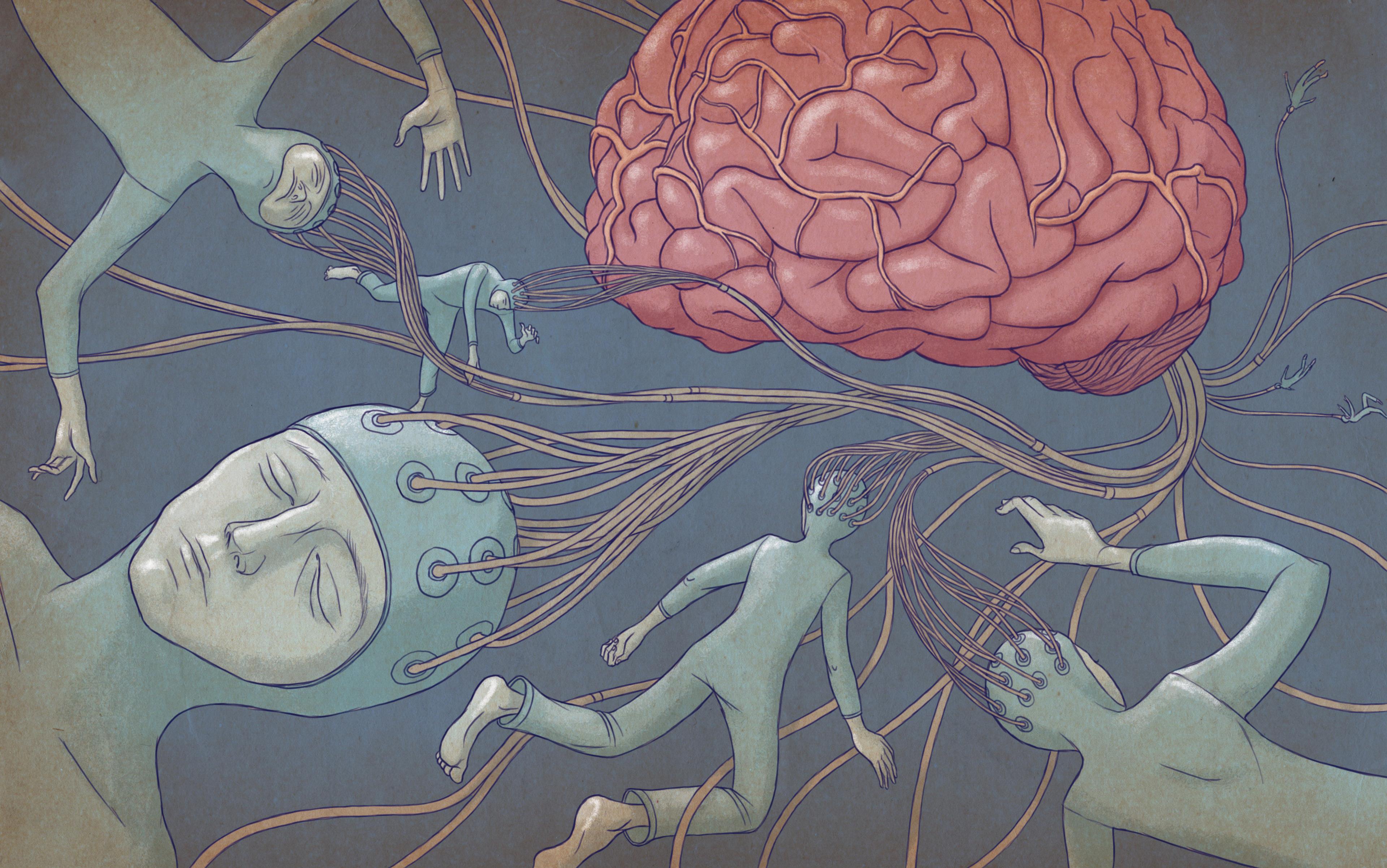

If you’ve ever dabbled in role-playing games – either online or in old-fashioned meatspace – you’ll know how easy it is to get attached to your avatar. It really hurts when your character gets mashed by a troll, felled by a dragon or slain by a warlock. The American sociologist (and enthusiastic gamer) William Sims Bainbridge has taken this relationship a step further, creating virtual representations for at least 17 deceased family members. In a 2013 essay about online avatars, he foresees a time when we’ll be able to offload parts of our identity onto artificially intelligent simulations of ourselves that could function independently of us, and even persist after we die.

What sorts of responsibilities would we owe to these simulated humans? However else we might feel about violent computer games, no one seriously thinks it’s homicide when you blast a virtual assailant to oblivion. Yet it’s no longer absurd to imagine that simulated people might one day exist, and be possessed of some measure of autonomy and consciousness. Many philosophers believe that minds like ours don’t have to be hosted by webs of neurons in our brains, but could exist in many different sorts of material systems. If they’re right, there’s no obvious reason why sufficiently powerful computers couldn’t hold consciousness in their circuits.

Today, moral philosophers ponder the ethics of shaping human populations, with questions such as: what is the worth of a human life? What kind of lives should we strive to build? How much weight should we attach to the value of human diversity? But when it comes to thinking through the ethics of how to treat simulated entities, it’s not clear that we should rely on the intuitions we’ve developed in our flesh-and-blood world. We feel in our bones that there’s something wrong with killing a dog, and perhaps even a fly. But does it feel quite the same to shut down a simulation of a fly’s brain – or a human’s? When ‘life’ takes on new digital forms, our own experience might not serve as a reliable moral guide.

Adrian Kent, a theoretical physicist at the University of Cambridge, has started to explore this lacuna in moral reasoning. Suppose we become capable of emulating a human consciousness on a computer very cheaply, he suggested in a recent paper. We’d want to give this virtual being a rich and rewarding environment to interact with – a life worth living. Perhaps we might even do this for real people by scanning their brains in intricate detail and reproducing them computationally. You could imagine such a technology being used to ‘save’ people from terminal illness; some transhumanists today see it as a route to immortal consciousness.

Sure, this might all be a pipe dream – but bear with it. Now let’s bring to the table a set of moral principles known as utilitarianism, introduced by Jeremy Bentham in the late 18th century and subsequently refined by John Stuart Mill. All things considered, Bentham said, we should strive to attain the maximum happiness (or ‘utility’) for the greatest number of people. Or, to use Mill’s words: ‘actions are right in proportion as they tend to promote happiness, wrong as they tend to produce the reverse of happiness’.

As a principle for good conduct, there’s plenty to criticise. How, for instance, can we measure or compare types of happiness – weighing up the value of a grandmother’s love, say, against the elation of being a virtuoso concert pianist? ‘Even if you want to take utilitarianism seriously, you don’t really know what the qualities you’re putting into the calculus really mean,’ Kent tells me. Nonetheless, most belief systems today implicitly accept that a moral compass pointing towards greater happiness is more sound than one aligned in the opposite direction.

In Kent’s scenario, one might be tempted to argue on utilitarian grounds that we’re obliged to go forth and multiply our simulated beings – call them sims – without constraint. In the real world, such unchecked procreation has obvious drawbacks. People would struggle, emotionally and economically, with huge families; overpopulation is already placing great strain on global resources; and so on. But in a virtual world, those limits needn’t exist. You could simulate a utopia with almost unlimited resources. Why, then, should you not make as many worlds as possible and fill them all with sublimely contented sims?

Our intuition might answer: what’s the point? Maybe a conscious sim just wouldn’t have the same intrinsic value as a new flesh-and-blood person. That’s a doubt that Michael Madary, a specialist in the philosophy of mind and ethics of virtual reality at Tulane University in New Orleans, believes we ought to take seriously. ‘Human life has a mysterious element to it, leading us to ask classical philosophical questions such as: why is there something instead of nothing? Is there meaning to life? Are we obligated to live ethically?’ he told me. ‘The simulated mind might ask these questions, but, from our perspective, they are all phoney’ – since these minds exist only because we chose to invent them.

To which one might respond, as some philosophers already have: what’s to say that we’re not all simulated beings of this sort? We can’t rule out the possibility, yet we still regard such questions as meaningful to us. So we might as well assume their validity in a simulation.

Pressing on, then, Kent asks: is it morally preferable to create a population of identical beings, or one in which everyone is different? It’s certainly more efficient to make the beings identical – we need only the information required for one of them to make N of them. But our instincts probably tell us that diversity somehow has more worth. Why, though, if there’s no reason to think that N different individuals will have greater happiness than N identical ones?

Kent’s perspective is that different lives are preferable to multiple copies of a single life. ‘I find it hard to escape the intuition that a universe with a billion independent, identical emulations of Alice is less interesting and less good a thing to have created than a universe with a billion different individuals emulated,’ he says. He calls this notion replication inferiority.

In a cosmos populated by billions of Alices, Kent wonders if it’s even meaningful to talk about the same life duplicated many times – or whether we’d simply be talking about a single life, spread out over many worlds. That might mean that many Alices in identical environments are no more valuable than one, a scenario he describes as replication futility. ‘I edge towards this view,’ Kent says – but he admits that he can’t find a watertight argument to defend it.

Kent’s thought-experiment touches on some longstanding conundrums in moral philosophy that have never been satisfactorily addressed. The British philosopher Derek Parfit, who died in 2017, dissected them in his monumental work on identity and the self, Reasons and Persons (1984). Here, Parfit pondered questions such as how many people there should be, and whether it’s always morally better to add a life worth living to the tally of the world, when we can.

Even if you accept a utilitarian point of view, there’s a problem with seeking the greatest happiness for the greatest number: the dual criteria create ambiguity. For example, imagine that we have control over how many people there are in a world of finite resources. Then you might think that there must be some optimal number of people that allows (in principle) the best use of resources to ensure happiness and prosperity to all. But surely we could find room in this utopia for just one more person? Wouldn’t it be acceptable to decrease everyone’s happiness by a minuscule amount to permit one more happy life?

The trouble is, there’s no end to that process. Even as the numbers go on swelling, the additional happiness of the new lives could outweigh the cost to those already alive. What you end up with, said Parfit, is the ‘repugnant conclusion’: a scenario where the best outcome is a bloated population of people whose lives are all miserable, yet still marginally better than no life at all. Collectively, their meagre scraps of happiness add up to more than the sum for a smaller number of genuinely happy individuals. ‘I find this conclusion hard to accept,’ Parfit wrote – but can we justify that intuition? For his part, Kent is not sure. ‘I don’t think there is any consensual resolution of the repugnant conclusion,’ he says.
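A rough numerical sketch may make the arithmetic behind the repugnant conclusion concrete. The population sizes and welfare scores below are purely illustrative assumptions, not figures from Parfit or Kent, and the symbols $N$ (number of people), $u$ (welfare per person) and $U$ (total welfare) are introduced here only for the sake of the example. World A is small and flourishing; world Z is vast and barely worth living in.

```latex
\begin{align*}
U_A &= N_A \times u_A = 10^{10} \times 80 = 8 \times 10^{11} \\
U_Z &= N_Z \times u_Z = 10^{13} \times 0.1 = 10^{12} \\
U_Z &> U_A
\end{align*}
```

On a simple sum of welfare, the bloated world Z scores higher than world A, which is exactly the result that Parfit found hard to accept.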

At the core of the matter is what Parfit called the ‘nonidentity problem’: how can we think rationally about questions concerning individuals whose very existence depends on the choices we make (such as whether we can find room for ‘just one more’)? It’s not so hard, in principle, to weigh up the harms and benefits that might accrue to an individual if we take some course of action that affects them. But if that calculus entails the possibility of the person never having existed, we no longer know how to do the maths. Compared with the zero of nonexistence, almost anything is a gain, and so all kinds of unappetising scenarios seem to become morally supportable.

There’s another, even stranger scenario in this game of population utilitarianism. What if there were an individual being with such enormous capacity for happiness that it gained much more than any of the sacrifices it demanded of others? The American philosopher Robert Nozick called this creature a ‘utility monster’, and summoned it up as a critique of utilitarianism in his book Anarchy, State and Utopia (1974). This picture seems, in Nozick’s words, ‘to require that we all be sacrificed in the monster’s maw, in order to increase total utility’. Much of Parfit’s book was an attempt – ultimately unsuccessful – to find a way to escape both the repugnant conclusion and the utility monster.

Now recall Kent’s virtual worlds full of sims, and his replication inferiority principle – that a given number of different lives has more worth than the same number of identical ones. Perhaps this allows us to escape Parfit’s repugnant conclusion. Despite what Leo Tolstoy says about the particularity of unhappy families in the opening line of Anna Karenina (1878), it seems likely that the immense number of miserable lives would be, in their bleak drabness, all pretty much identical. Therefore, they wouldn’t add up to increase the total happiness drip by drip.
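One way to see how this escape might work is to take the strongest reading of Kent’s idea, in which exact duplicates add nothing at all (his replication futility). As a sketch under that assumption, suppose that a vast population of barely-worth-living lives, each with welfare $u_Z = 0.1$, collapses into only $k = 10^{6}$ genuinely distinct kinds of life, and compare it with a smaller world of $N_A = 10^{10}$ distinct, flourishing lives with welfare $u_A = 80$. All of these numbers and symbols are invented for illustration, with $V$ standing for the value of a world once duplicates are counted only once.

```latex
\begin{align*}
V_Z &= k \times u_Z = 10^{6} \times 0.1 = 10^{5} \\
V_A &= N_A \times u_A = 10^{10} \times 80 = 8 \times 10^{11} \\
V_Z &\ll V_A
\end{align*}
```

Replication inferiority proper is weaker than this, since it says only that duplicates count for less. How far the total shrinks would then depend on a discount that is left unspecified here.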

But by the same token, replication inferiority favours the utility monster – for by definition the monster would be unique, and therefore all the more ‘worthy’, in comparison with some inevitable degree of similarity among the lives fed into its ravenous maw. That doesn’t feel like a very satisfactory conclusion either. ‘It would be nice for people to devote more attention to these questions,’ Kent admits. ‘I’m in a state of some puzzlement about them.’

For the American libertarian economist Robin Hanson – a professor of economics at George Mason University in Virginia and a research associate at the Future of Humanity Institute at the University of Oxford – these musings are not thought-experiments so much as predictions of the future. His book The Age of Em (2016) imagines a society in which all humans upload their consciousness to computers so as to live virtual lives as ‘emulations’ (not sims, then, but ems). ‘Billions of such uploads could live and work in a single high-rise building, with roomy accommodations for all,’ he writes.

Hanson has considered in detail how this economy might work. Ems could be of any size – some would be very small – and time might run at different rates for ems compared with humans. There would be extensive surveillance and subsistence wages, but ems could probably shut out that misery by choosing to remember lives of leisure. (Hanson is among those who think we might already be living in such a virtual world.)

This scenario allows the possibility of duplicated selves, as once a mind has been transferred to a computer, making copies of it should be rather easy. Hanson says that the problem of identity then becomes fuzzy-edged: duplicates are ‘the same person’ initially, but gradually diverge in their personal identity as they proceed to live out separate lives, rather as identical twins do.

Hanson envisages that duplication of persons will be not only possible, but desirable. In the coming age of ems, people with particularly valuable mental traits would be ‘uploaded’ multiple times. And in general, people will want to make multiple copies of themselves anyway as a form of insurance. ‘They would prefer enough redundancy in their implementation to ensure that they can persist past unexpected disasters,’ Hanson says.

But he doesn’t think they’ll opt for Kent’s scenario of identical lives. Ems ‘would place very little value on running the exact same life again in different times and places,’ Hanson told me. ‘They will place value on many copies mainly because those copies can do work, or form relationships with others. But such work and relations require that each copy be causally independent, and have histories entwined with their differing tasks or relation partners.’

Still, ems would need to grapple with moral quandaries that we’re ill-equipped to evaluate right now. ‘I don’t think that the morals that humans evolved are general or robust enough to give consistent answers to such situations so far outside of our ancestors’ experience,’ Hanson says. ‘I predict that ems would hold many different and conflicting attitudes about such things.’

Right now, all this might sound uncomfortably like the apocryphal medieval disputes about angels dancing on pinheads. Could we ever make virtual lives that lay claim to real aliveness in the first place? ‘I don’t think anyone can confidently say whether it’s possible or not,’ says Kent – partly because ‘we have no good scientific understanding of consciousness.’

Even so, technology is marching ahead, and these questions remain wide open. The Swedish philosopher Nick Bostrom, also at the Future of Humanity Institute, has argued that the computing power available to a ‘posthuman’ civilisation should easily be able to handle simulated beings whose experience of the world is every bit as ‘real’ and rich as ours. (Bostrom is another of those who believe it is possible that we’re already in such a simulation.) But questions about how we ought to shape populations might not need to wait until the posthuman era. This ‘could become a real dilemma for future programmers, researchers and policymakers in the not necessarily far distant future,’ says Kent.

There might already be real-world implications for Kent’s scenario. Arguments about utility maximisation and the nonidentity problem arise in discussions of the promotion and the prevention of human conception. When should a method of assisted conception be refused on grounds of risk, such as growth abnormalities in the child? No new method can ever be guaranteed to be entirely safe (conception never is); IVF would never have begun if that had been a criterion. It’s commonly assumed that such techniques should fall below some risk threshold. But a utilitarian point of view challenges that idea.

For example, what if a new method of assisted reproduction had a moderate risk of very minor birth defects – such as highly visible birthmarks? (That’s a real argument: Nathaniel Hawthorne’s short story ‘The Birth-Mark’ (1843), in which an alchemist-like figure tries, with fatal consequences, to remove his wife’s blemish, was cited in 2002 by the bioethics council of the then US president George W Bush, as it deliberated on questions about assisted conception and embryo research.) It’s hard to argue that people born by that method who carried a birthmark would be better off never having been conceived by the technique in the first place. But where then do we draw the line? When does a birth defect make it better for a life not to have been lived at all?

Some have cited this quandary in defence of human reproductive cloning. Do the dangers, such as social stigma or distorted parental motives and expectations, really outweigh the benefit of being granted life? Who are we to make that choice for a cloned person? But whom then would we be making the choice for, before the person exists at all?

This sort of reasoning seems to require us to take on a God-like creative agency. Yet a feminist observer might fairly ask if we’re simply falling prey to a version of the Frankenstein fantasy. In other words, is this a bunch of men getting carried away at the prospect of being able to finally fabricate humans, when women have had to ponder the calculus of that process forever? The sense of novelty that infuses the whole debate certainly has a rather patriarchal flavour. (It can’t be ignored that Hanson is something of a hero in the online manosphere, and has been roundly criticised for his exculpatory remarks about ‘incels’ and the imperatives of sexual ‘redistribution’. He also betrays a rather curious attitude to the arrow of historical causation when he notes in The Age of Em that male ems might be in higher demand than female ems, because of ‘the tendency of top performers in most fields today to be men’.)

Even so, the prospect of virtual consciousness does raise genuinely fresh and fascinating ethical questions – which, Kent argues, force us to confront the intuitive value we place on variations in lives and demographics in the here and now. It’s hard to see any strong philosophical argument for why a given number of different lives is morally superior to the same number of identical ones. So why do we typically think that? And how might that affect our other assumptions and prejudices?

One could argue that perceived homogeneity in human populations corrodes the capacity for empathy and ethical reasoning. Rhetoric about people from unfamiliar backgrounds as being ‘faceless’ or a ‘mass’ implies that we value their lives less than those whom we perceive to be more differentiated. Obviously, we don’t like to think that this is the way that people see the world now, says Kent – ‘but it’s not obviously true’.

Might we even have an evolutionarily imprinted aversion to a perception of sameness in individuals, given that genetic diversity in a population is essential for its robustness? Think of movie depictions of identical twins or ranks of identical clones: it’s never a good sign. These visions are uncanny – a sensation that Sigmund Freud in 1919 linked to ‘the idea of the “double” (the doppelgänger) … the appearance of persons who have to be regarded as identical because they look alike’. For identical twins, this might manifest as a voyeuristic fascination. But if there were a hundred or so ‘identical’ persons, the response would probably be total horror.

We don’t seem set to encounter armies of duplicates any time soon, either in the real world or the virtual one. But, as Kent says: ‘Sometimes the value of thought-experiments is that they give you a new way of looking at existing questions in the world.’ Imagining the ethics of how to treat sims, whether in the form of Hanson’s worker-drones or Bainbridge’s replacement relatives, exposes the shaky or absent logic that we instinctively use to weigh up the moral value of our own lives.