In 1793, a yellow fever epidemic hit Philadelphia, where the founding father and physician Benjamin Rush advocated aggressive bleeding – the removal of large quantities of blood from either an artery or vein, often with leeches. At the time, bloodletting was a common medical practice: it was thought to balance the body’s humours (blood, phlegm, black bile, yellow bile). As medicine developed a stronger basis in experimental evidence, the practice became subject to intense controversy. Rush’s propensity to bleed, and bleed a lot, was condemned by some of his contemporaries, although other doctors also purged their patients. By the end of the 19th century, bloodletting was used only for a few rare conditions.

Now, in the midst of a devastating pandemic, and as scientists work feverishly to find COVID-19 cures and a viable vaccine, we look back at Rush’s treatments as archaic, useless and likely harmful. Indeed, bleeding seems barbaric today (although leeches have been approved by the United States’ Food and Drug Administration for certain purposes). Yet, while medical therapeutics have advanced considerably, many current treatments are also aggressive. We voluntarily undergo them because they are based on a stronger understanding of the body and mechanisms of disease. That is, we think that they really save lives, unlike bleeding, which occasionally killed patients.

Not so fast: some contemporary critics claim that modern medicine is still risky, if not more so. Consider the expansion of disease categories to include personality quirks and body types, side-effects that demand further medications, drug interactions that are deadly, and medical supervision of things better left alone. If 18th-century medicine lacked a scientific basis, our problem might be too many therapies for our own good.

The expansion of treatment has led to a critical response – ‘medicalisation’, which describes a skeptical approach to mainstream medicine’s social role in defining health. It also directly criticises the enlarging pharmacopoeia that is now an expected part of life in modern times. Indeed, medicalisation suggests that we could be subjecting ourselves to treatments that involve so much overkill that they are killing us – the same way we look at bloodletting today.

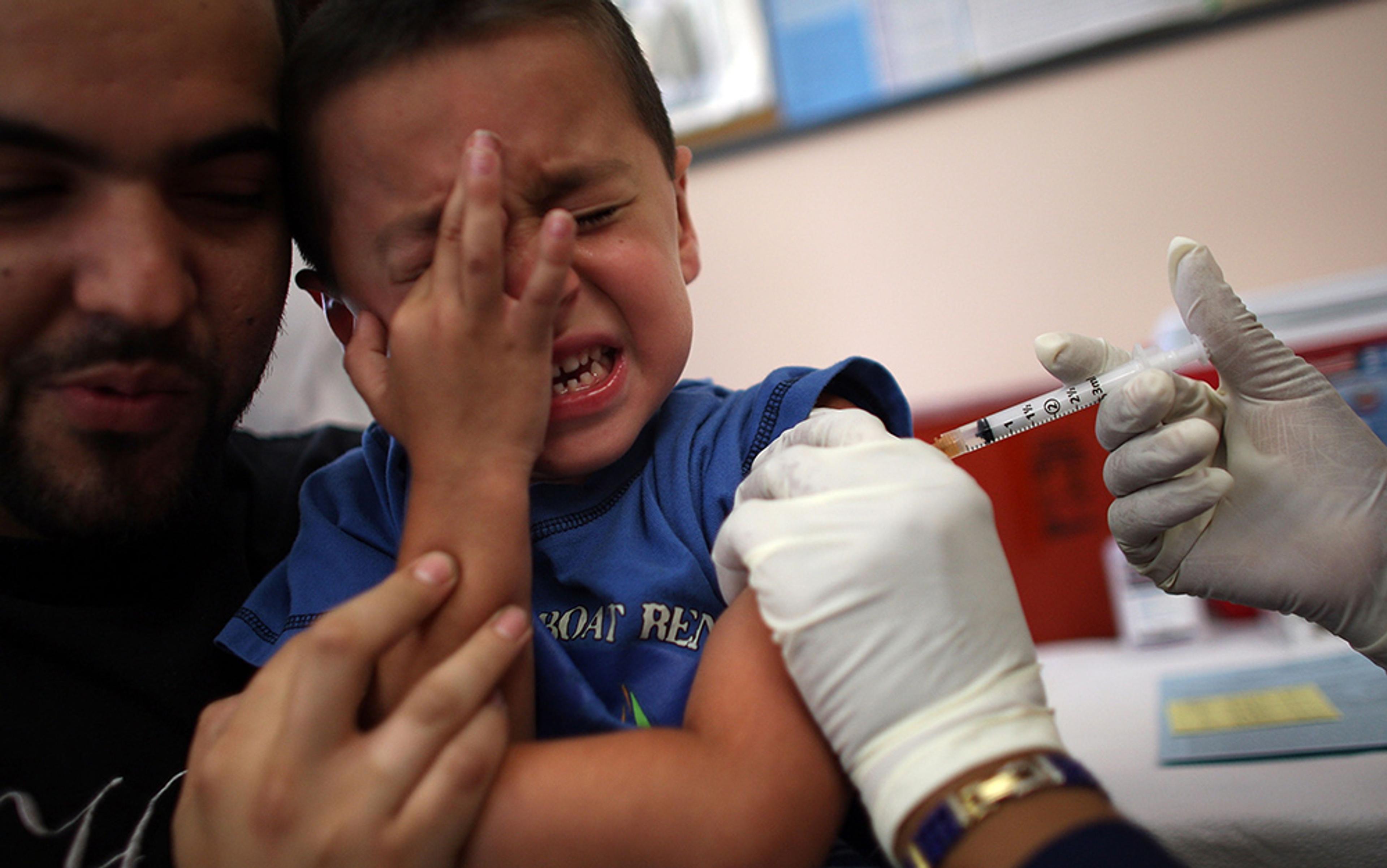

The medicalisation critique underpins popular interest in alternative medicine, mind-body techniques for wellbeing and, most pointedly, vaccine skepticism. The mainstream usually attributes vaccine skepticism to vaccine misinformation online, science denial and scientific illiteracy. Parents want the best for their children, the story goes, but are primed to act irrationally because they don’t understand population health risks and benefits, or they mistakenly correlate prevalent chronic illnesses to vaccinations.

But the concept of medicalisation provides another compelling frame to understand why some people refuse what others consider to be life-saving preventive medicine. It’s not that these parents distrust science in totality – it’s that they, among others, disagree that medicine should have such an authoritative role in determining how to live a healthy life. In this, they echo distinctly modern anxieties about how advancements in science and medicine might not always be good for us.

To understand the modern origins of medicalisation, look to the 1950s, when medicine’s therapeutic advancements were beginning to be shadowed by skepticism about its increasing social authority – the shift from deviance to illness in understanding adolescent rebellion, for example, or the increasing diagnoses of hyperactivity in children, or even the consensus that alcoholism is a disease. Given mainstream medicine’s forward march in the middle of the century – the development of antibiotics in the 1940s, the invention of a successful polio vaccine in the 1950s, and new drug treatments for psychiatric illnesses (among many other advancements) – there was much to celebrate but also much to worry about.

There were scares about drugs such as thalidomide. Originally marketed in Europe in the late 1950s to treat morning sickness, it was discovered to cause severe birth deformities, most dramatically evident in babies’ severely foreshortened or absent limbs. The thalidomide episode demonstrated that, without proper regulation, pharmaceutical companies could take drugs to market that caused harm. Even with proper regulation, companies could make mistakes that harmed people. In 1955, shortly after federal licensure of Jonas Salk’s first polio vaccine, 200,000 children were injected with inadequately killed poliovirus vaccine. The result was 40,000 people sick with polio, of whom 200 became paralysed, and 10 died. While the Cutter Incident, as this event came to be known, didn’t diminish public confidence in vaccination at the time, it represents a moment in US history that has been repeated again and again, with later generations less tolerant of industrial mistakes (or misconduct) than earlier ones.

The 1950s were also a time of emerging concerns about modern character development. Sociologists identified adherence to social norms and external approval as dangerous personality structures; they criticised the ‘other-directed personality’ (too concerned with external approval) and the ‘authoritarian personality’ (set on controlling others). Paul Goodman’s Growing Up Absurd (1960) focused on the problem of modern societies lacking adequate meaningful work for young adults. Resistance to social norms in the post-Second World War period, evident in popular movies such as Rebel Without a Cause (1955), led to the flowering of the ‘opt out’ movements of the 1960s – hippies, druggies and back-to-nature enthusiasts.

Concerns about medicine merged with questions about the government’s overall commitment to the wellbeing of its citizens. The rise of the civil rights movement, the Cold War and potential nuclear devastation, developing evidence about environmental contaminants and, eventually, the Vietnam War, all stoked the fires of mistrust. Indeed, emerging social movements encouraged collectivist outlooks and political action to improve health, while medicine increasingly focused on the individual lifestyle choices that led to disease states. A shining example is the Framingham Heart Study, which began in Massachusetts in 1948, and has been nationally funded ever since. That work launched the medical term ‘risk factor’ by targeting diet, exercise and smoking as the key contributors to cardiovascular disease.

In this context, antipsychiatry was born. The antipsychiatry movement attacked mainstream psychiatric treatment of those who didn’t conform to social expectations. It responded directly to increasingly popular midcentury practices such as electroconvulsive therapy (ECT), lobotomy, and the institutionalisation of people with socially aberrant behaviours. ECT and lobotomy were aggressive treatments that are historical symbols of psychiatry’s abusive control of patients, although they were also widely heralded at the time as modern, effective treatments. António Egas Moniz shared the 1949 Nobel Prize in Physiology or Medicine for his invention of lobotomy. Yet, by the 1950s, lobotomy was waning as a treatment. A growing appetite for individuality and personal freedom clashed with psychiatric practices that seemed to treat those very qualities as threats to mental health and to snuff them out.

Fiction and memoir recounting this time focused on the horrors of institutionalisation and the abuses of power that characterised the patient experience. Novels such as One Flew Over the Cuckoo’s Nest by Ken Kesey (1962) raised public awareness of these concerns, especially by portraying institutionalisation and lobotomy as sadistic tools of psychiatric authority. Susanna Kaysen’s memoir Girl, Interrupted (1993) detailed her institutionalisation in a mental facility in the late 1960s for what she describes as being a slightly depressed, confused teenager. The Bell Jar (1963), a semi-autobiographical novel by Sylvia Plath, recounted the poet’s own mental breakdown and treatment in the 1950s. Although the novel does not condemn the ECT it depicts, its overall descriptions of treatment for depression and institutionalisation are far from flattering.

In addition to its focus on psychiatric treatments, antipsychiatry also targeted society. The psychiatrist R D Laing argued that mental illness could be a sane response to a sick society, and Thomas Szasz claimed that mental illness was a myth. If society was alienating and oppressive, why was medicine engaging with such force to make people fit in?

The rise of psychotropic medications, including the increasing ease of administering drugs to anyone whose personality or behaviours went against social norms, accelerated these concerns. Those who felt themselves to be different both sought out therapeutic cures and resisted normalisation, especially as the 1960s countercultural movements flourished, offering them alternative ways to understand their differences and feelings of alienation and despair. Themes of individuality and freedom traded between the emerging social movements of the 1960s and antipsychiatry critics, allowing both to capitalise on resistance to organised medicine and its championing of social norms.

Some antipsychiatry critics simply wanted to ‘reframe mental illness’, primarily through existential or sociological explanations of mental suffering. Szasz, however, questioned the reality of mental illness as disease. His book The Myth of Mental Illness (1960) concludes that psychiatrists, instead of treating mental illness, really ‘deal with personal, social, and ethical problems in living’. Thus, the assault on psychiatry was, in part, definitional – what counted as a mental illness? Was psychiatric practice truly scientific? How did psychiatrists distinguish socially aberrant behaviours from those that indicated illness and mental disease? In the 1960s, acid trips and other alternative experiences of reality were heralded as visionary and socially liberating. Antipsychiatry opened a space to question whether psychiatric diagnoses were arbitrary or reliable, as well as how they operated as agents of social control.

The American psychologist David Rosenhan’s groundbreaking article ‘On Being Sane in Insane Places’ (1973) argued that sane people could feign mental illness and be institutionalised without actually being mentally ill. Published in Science, Rosenhan’s paper offered damning evidence for the claims that antipsychiatry critics had been making for more than a decade – that psychiatric categories were neither reliable nor based in evidence, and that psychiatric treatments were abusive to patients by controlling their behaviours in alignment with social norms.

Rosenhan’s article is one of the most reprinted and cited in the field. The psychiatric profession went on to rewrite its diagnostic manual, and psychiatric hospitals were closed in line with his recommendations. Psychiatry eventually became more oriented toward biological explanations. Yet even those approaches brought a certain amount of criticism because they were seen to overreach the discipline’s authority by pathologising adaptive behaviours or the vicissitudes of ordinary personal development. Antipsychiatry activists still decry the power of pharmaceutical companies to define mental illnesses through the development of treatments for them.

By the 1970s, the suspicion directed at psychiatry had spread to much of the rest of medicine over the same issue – social control – and led to the flourishing of the medicalisation critique. Like antipsychiatry, concerns about medicalisation channelled robust themes in US history – concerns about individual freedom in a society increasingly adherent to social norms; distrust of professionals and big business; and longstanding interest in natural, healthy living. Medicalisation also attracted feminists resisting male medical authority over women’s health. In 1970, the Boston Women’s Health Book Collective published the first version of Our Bodies, Ourselves (called Women and Their Bodies), which focused on reproductive health and abortion.

And medicalisation found a prophet in the intellectual iconoclast Ivan Illich, a Croatian-Austrian Catholic priest who wrote wide-ranging criticisms of modern institutions, demonstrating how they inhibited human creativity, productivity and flourishing. In Deschooling Society (1971), he encouraged the radical homeschool movement that is increasingly popular in the 21st century, arguing that mass schooling had a deadening effect on children’s engagement with the world.

In Medical Nemesis (1975), Illich made a starkly prescient argument against medicine as a dangerous example of what some call ‘the managed life’, where every aspect of normal living requires input from an institutionalised medical system. It was Illich who popularised the term ‘iatrogenesis’, from the Greek, meaning doctor-caused illness. There were three levels of physician-caused illness, as far as he was concerned: clinical, social and cultural.

Clinical iatrogenesis comprises treatment side-effects that sicken people. Chemotherapy for cancer is a case in point – it saves lives, but introduces new threats by compromising patients’ immune systems and damaging noncancerous tissues.

Social iatrogenesis refers to the way medicine turns patients into individual consumers of treatment, self-interested agents rather than politically active citizens who could work for broader social transformations to improve the health of all.

Cultural iatrogenesis is for Illich the deepest level of medicine-caused illness – and his deepest critique of medicine. In it, people’s innate capacities to confront and experience suffering, illness, disappointment, pain, vulnerability and death are displaced by medicine. One example focuses on childbirth as it moved from home and the company of women to the hospital and the supervision of mostly male obstetricians. In ‘Medicalisation and Primary Care’ (1982), Illich wrote:

No doubt … neonatal survival and, later, maternal survival increased – but at a cost of medicalisation. What the doctor delivers is, tendentiously, a life-long patient, who perhaps after long education will be able to sell care to others – but hardly a person free for neighbourly love.

Here, medicine takes a technical approach to ordinary life events, hollowing out the rich interpersonal relations of caring that defined being human for millennia.

Illich described medicine as a ‘radical monopoly’ – an institution that ‘disable[s] people from doing or making things on their own’. Radical monopolies are things, practices or institutions that become important in themselves, rather than for the services they render. Social forces consolidate to sustain their existence, further disabling and alienating people from their own capacities. A classic example of a nonmedical radical monopoly is the automobile, which comes to actively displace other forms of transportation, whereupon local and national infrastructures are created to serve it. As a result, people depend on automobiles and no longer on themselves (their bodies and strength) for transportation, and the built environment is made for cars, not people, to get around.

As a radical monopoly, medicine becomes an end in and of itself, rather than a tool for healing. Doctors are technicians who work for impersonal institutions. Medicine, which used to promote natural healing by working with the body, is now a practice located in a faceless bureaucracy without commitment to individuals or their personhood, relying on technical expertise rather than rapport and humanism. Medications and treatments lead to more medications and treatments, rather than true health.

In addition, the critique goes, modern medicine reduces autonomy all around – doctors lose their historical stature and authority, and patients no longer control their bodies, their environments or their will. Cultural, social and personal human experiences are turned into technical matters that are managed bureaucratically: patient records are handled through the electronic medical record; intermediaries such as insurance companies determine what kinds of treatments are covered and which are not. People are encouraged to plan around these contingencies rather than live their lives fully and experience the surprises and tragedies that accompany normal living, that is, to manage life rather than live it.

Make no mistake: for Illich – and the public – much about medical advancement was good. Sanitation, vector control, inoculation, and general access to dental and primary medical care were hallmarks of ‘a truly modern culture that fostered self-care and autonomy’. The problem arose when bureaucratic managers and the entire emerging structure of medicine limited the freedom of individuals to choose their own care or undergo bodily healing on their own.

Illich’s medicalisation critique helped to fuel natural healing and patient autonomy movements, demonstrating widespread cultural hesitation about the medical profession at the same time that people clamoured for new treatments and technologies to fight debilitating diseases such as cancer. This push-pull dynamic of medical advancement (on the one hand) and simultaneous concerns about medicine’s impact on the meaning of life (on the other hand) reveals a characteristically modern angst about how the very things that make us modern might be hurting us. Some phenomena, such as the rise of antibiotic-resistant bacteria, demonstrate that these concerns are not ill-founded.

There were other influential voices. The American physician Robert Mendelsohn, a self-described ‘medical heretic’, popularised antimedicine arguments through his books and a syndicated newspaper column (later a newsletter) called The People’s Doctor. Mendelsohn accused medicine of becoming a new religion, and agreed with Illich that it had become less about healing than about the maintenance of medicine itself, especially doctors’ livelihoods. Procedures that Mendelsohn felt had little evidence of benefit (chest X-rays, circumcision) were part of routine practice. He argued that the only thing supporting them was faith, making doctors more like priests than healthcare providers. The dependence on medicine – inculcated in children through well-child visits that included vaccinations – created ill health: ‘We’re not getting healthier as the bill gets higher, we’re getting sicker,’ he wrote in Confessions of a Medical Heretic (1979).

Long a member of the medical advisory board of La Leche League, a breastfeeding support organisation, he promoted home birth and breastfeeding. In obstetrics, where nature was rarely allowed to take its course, he argued, routine practice was organised for physicians, not mothers, and children were damaged as a result. Mendelsohn became a leader in the shift toward natural remedies and practices that flowered in the 1970s and ’80s, even though his reasons were rooted more in conservatism than counterculture. In his book How to Raise a Healthy Child in Spite of Your Doctor (1984), he called paediatricians dangerous because they indoctrinated children to see medicine as the answer to all illness, and turned them into medicine-seeking adults.

After that, through the 1980s and ’90s, sociologists named a new concern – ‘biomedicalisation’, which can be seen in the development of electronic medical records; the rise of genetic testing; the increasing use of screening tests to prevent disease; and the intense calibration of nutrition, exercise and medications for wellness. While medicalisation emphasises increasing medical control over bodily processes (such as sleep), biomedicalisation focuses on prevention through the evaluation of risk – it describes not just normalisation, but the forward-thinking optimisation of bodies. Such optimisation might include choosing viable embryos for in vitro fertilisation, deciding to undergo mastectomy after a positive result for a breast-cancer gene mutation, taking vitamins after a genetic test suggests susceptibility to macular degeneration, or forgoing coffee during pregnancy on the chance that caffeine negatively affects developing foetuses.

By engaging in these increasingly technical health-related practices, critics argue, we are changing not only what it means to be healthy but also what it means to be ‘normal’. For instance, people have always taken drugs to manage the stresses of ordinary life (think cigarettes, alcohol, opium, morphine, cocaine, Valium, Miltown), but the development of new antidepressant drugs (such as Prozac and Paxil) in the 1980s changed previous approaches to mood disorders and other mental illnesses. In conjunction with changing diagnostic criteria, these new drugs enabled people to regulate or enhance their personalities and psychic experience, in part because side-effects were felt to be minor compared with older psychoactive drugs.

Now millions of people not only identify as depressed or socially anxious (a change attributable to medicalisation), but also take these medications in order to be more like themselves. Today, not taking medications to alleviate lesser mood disorders, sadness or transient depression is often seen as strange. This is not to say that such medications don’t help people. Yet, regulating behaviours and bodily experiences that previously might have been chalked up to ‘nerves’ or an inherited susceptibility is seen as a social obligation and, therefore, less a treatment choice than adherence to emerging social norms.

In recent years, pushback against medicine has been heightened by exposés of faulty research, purposeful deception and ethical conflicts in scientific peer review. Primary themes are overdiagnosis and overtreatment, as well as questionable biomedical research practices that range from worrisome to corrupt.

The American physician H Gilbert Welch, who co-authored Overdiagnosed (2011) with his colleagues Lisa Schwartz and Steven Woloshin, provides a persuasive argument that overtreatment causes unnecessary medicalisation and ill health among people who aren’t ill. Welch says this is made possible by changing diagnostic features of diseases and so-called ‘prediseases’, by routine use of screening tests to find problems that don’t produce symptoms or ill health, and by the rigid dictum that early detection saves lives.

Notably, overtreated people are the most enthusiastic advocates for screening and early diagnosis, to which they attribute their good health. Apparently, testing is self-reinforcing. In daily life, it’s almost impossible to counteract these effects – indeed, there has been uproar when screening recommendations have been rescinded or reduced, even when the reduced recommendations were supported by evidence.

How did this happen? In his book Prescribing by Numbers (2007), Jeremy Greene paints the picture: pharmaceutical companies influence medicine to treat numbers and abstractions rather than bodily symptoms, primarily through clinical trials, which ostensibly test the safety and efficacy of newly developed drugs. Clinical trials do more than just establish drug safety and efficacy, however: they also make sense of the disease state and create processes for treating risks, not just symptoms. As a result, treating people for asymptomatic conditions has become normal: for example, by treating the cholesterol count rather than the physical symptoms of heart disease. Those treated for high cholesterol who never get heart disease or never have a heart attack credit the medication for saving their lives, even if they never would have become ill in the first place.

There is also evidence of outright corruption in the production of medical ‘facts’. In Deadly Medicines and Organised Crime (2013), the Danish physician and scientist Peter Gøtzsche, a founding member of the Nordic Cochrane Center, details corrupt relations between physicians and governmental regulatory agencies, and collusion between medical journals and the pharmaceutical industry that perpetuates false research outcomes favouring industry results. (We have for years watched this phenomenon play out in the tragedy of the opioid crisis.) In his book Drugs for Life (2012), the American cultural anthropologist Joseph Dumit points out that, as a normal outcome of its research practices, pharma produces medical facts that favour the use of newer, more expensive drugs, even though many patients would do better on older, less expensive formulations. Or they would do better with no treatment at all.

In this web of anxiety, vaccines play a starring role. In the 1960s, their development for the so-called mild diseases of childhood – rubella (German measles), measles and mumps – caused the medical community to change its perspective on these illnesses. Once they became vaccine-preventable diseases (VPDs), their complications were investigated more fully, and public health professionals presented them as more threatening than before. The ‘well-child visit’ was established to coincide with the vaccine schedule and, as Mendelsohn and others charged, conditioned children to become medicalised adults seeking treatment for the normal vicissitudes of life. Mendelsohn’s concerns that multiple vaccinations at one time might be ill-advised, that individual children might not fare well in mass vaccination campaigns and that vaccines could induce autoimmune conditions are all echoed in contemporary vaccine dissent.

Since the mid-1980s, vaccines have been front and centre in processes of biomedicalisation. Advances in vaccinology led to an explosion in vaccine development, as well as the inclusion of more vaccines into the recommended schedule in the 1990s and 2000s. Federal vaccine recommendations emerge from complex processes that use data from human subject trials and mathematical modelling, in addition to policy considerations concerning both the personal and societal costs of illness. Chickenpox (varicella) is a good example. Prior to the introduction of the varicella vaccine in the mid-1990s in the US, chickenpox was thought to cause slightly more than 100 deaths annually, mostly in individuals with compromised immune systems. Millions of children got the chickenpox and spent a week or two at home in a misery of fever, itching and scratching. Parents tried to get all their kids infected so that everyone had it at the same time.

The Centers for Disease Control and Prevention (CDC) recommendation for universal childhood chickenpox vaccination in the US highlighted the benefit of avoiding indirect costs, such as parental lost wages for those weeks spent at home with itchy, feverish kids. After initial federal recommendation in 1995, almost all US states mandated the vaccine for school entry. The vast majority of US children are today vaccinated against the disease, and incidence of chickenpox has dropped accordingly: the CDC reports that each year, more than 3.5 million cases, 9,000 hospitalisations, and 100 deaths in the US are prevented by the vaccine. Other dangerous infections that can accompany chickenpox, especially methicillin-resistant Staphylococcus aureus (MRSA), are avoided. Interestingly, as a result of vaccination success, there is very little wild varicella virus in circulation. Those of us who had the chickenpox as children are now more susceptible to shingles as adults: being around kids with chickenpox functioned as a normal ‘booster’ for our antibodies against the virus. Hence the need for effective shingles vaccines for older adults, which were developed and first licensed in the 2000s.

In the chickenpox example, we see vaccine development, licensure and recommendation, in connection with the school-entry mandates, as biomedicalisation across several domains. The vaccine is a biotechnical replacement for a previous embodied ritual of childhood, one that entails the control of infectious disease, the optimisation of family sick leave, the minimisation of expense to society, and the necessity to record another milestone of childhood in an electronic medical record and a school immunisation file.

For vaccine accepters and public health authorities, varicella vaccine is a victory. But for vaccine skeptics, it serves as a cautionary tale about a widespread but unserious illness that doesn’t merit routine vaccination recommendations or compulsion. It’s worth noting that not all countries mandate varicella vaccination or recommend its routine use as a childhood vaccine. The United Kingdom is a good example. Variations in national recommendations suggest different perspectives on disease seriousness and vaccine acceptance, and give the lie to common arguments that science supports broad recommendations in all cases. Vaccination recommendations are policy decisions that use various forms of evidence in specific social contexts. Many Americans who support vaccines in general wonder why vaccines against chickenpox are mandated for school entry in most states. A process that doesn’t seem to distinguish between serious and less serious illnesses thus motivates suspicion against the entire system.

Contemporary vaccine dissent echoes medicalisation and biomedicalisation critiques of the past. Some vaccine skeptics resist mainstream medicine’s focus on drug treatment altogether, preferring alternative approaches they perceive as more natural. Some worry that vaccines are causing chronic illnesses in susceptible people; others believe that the government doesn’t have the right to dictate healthcare practices to citizens. Some just want more family authority over healthcare decisions. Most, however, are joined in their concern that government regulatory agencies and Big Pharma are just too cosy with one another to trust the safety and efficacy data that can license and recommend vaccines for public use.

Vaccine dissent resonates with both emerging and longstanding distrust in medicine. Concerns that medicine as a profession has too much social authority, is too intent on maintaining itself, and recommends treatments for profit rather than for health are all animating factors in vaccine skepticism. If the evidence and the actors are suspect, what and whom does one trust? Are the processes by which we know things trustworthy? Are we confident that medical treatments work as designed – that they don’t lead to negative side-effects worse than the cures? How does the enlarging field of medical therapeutics change our lives – that is, transform humanity – and are there downsides to these changes that we are not aware of?

Modernity is characterised both by the technological advancements that have made our current standards of living possible and by the worry that these same advancements will lead to our downfall as a species. Vaccines are uniquely subject to these kinds of concerns. They emerged directly from the germ theory of disease, and demonstrate the wondrous opportunity to prevent illnesses that have devastated humankind since our beginnings. As such, they reflect modernity, defining its promise and its perils. But because they are medical treatments practised on healthy people, they take on an outsized symbolic significance – are they saviours of humankind or a demonstration of human hubris, attempts to control natural forces that either define us as humans or cannot be controlled?

Placing vaccine dissent within medicalisation shows it to be fully consonant with this strong trend of American skepticism. Pundits, reporters and physicians – who routinely rail against the perceived irrationality of parents resistant to vaccination – would do well to recognise this fact. The current strength of vaccine dissent – a small but vocal voice in the public sphere – draws on this lengthy history of concern about medical expansion and social authority. It is not a passing fad amenable to re-education. And its warnings about undiscovered side-effects and potential and actual dangers are unsettling, even to those who faithfully vaccinate on schedule. By exploiting science’s Achilles’ heel – its inability to demonstrate that X never causes Y, only that it has not been shown to – vaccine dissent draws to it the anxieties of our age and magnifies them.

In our current pandemic crisis, disagreements over stay-at-home measures and other restraints on individual liberty are fought over this terrain. While some vaccine skeptics might change their views as a result of the immediate threat of COVID-19, others have found their way to vocal protests about governmental actions to mitigate the spread of the virus. It is tempting to join the majority in identifying these protesters as simply ‘anti-science’, but it is more illuminating to see them in light of the long history of concerns about the social role of medicine and the state’s enforcement authority to prevent infectious disease. We can anticipate that a coronavirus vaccine will only animate this tension, which, at its most basic level, is about what it means to be a modern human, with tools that might save us or might be our destruction.

Lessons from history are always ambivalent. Benjamin Rush was a medical visionary. He initiated more humane care for the mentally ill, and believed in eating more vegetables (a radical view in the 1790s). He improved medical services during the Revolutionary War. His propensity for bloodletting is not his only, nor his most significant, medical legacy, yet it tends to be the thing most known about him.

As we seek out cutting-edge treatments yet worry that the medicines might endanger our health, the same type of paradox prevails today. Vaccination critics, along with the many Americans who go to naturopaths, who are attracted to personalised medicine, or who resist drug ads for the next new pill, are part of this tension. In it, trust in institutions and belief in technological miracles are set against fears that institutional forms such as professional medicine can’t recognise individual singularity and specific human vulnerabilities, and might indeed be doing more harm than good.

Anti/Vax (2019) by Bernice L Hausman is published via Cornell University Press.