What has intelligence? Slime moulds, ants, fifth-graders, shrimp, neurons, ChatGPT, fish shoals, border collies, crowds, birds, you and me? All of the above? Some? Or, at the risk of sounding transgressive: maybe none? The question is a perennial one, often dusted off in the face of a previously unknown animal behaviour, or new computing devices that are trained to do human things and then do those things well. We might intuitively feel our way forwards – choosing, for example, to accept border collies and children, deny shrimp and slime moulds, and argue endlessly about different birds – but really it’s impossible to answer this question until we’ve dealt with the underlying issue. What, exactly, is intelligence?

Instead of a measurable, quantifiable thing that exists independently out in the world, we suggest that intelligence is a label, pinned by humanity onto a bag stuffed with a jumble of independent traits that helped our ancestors thrive. Though people treat intelligence as a coherent whole, it remains ill-defined because it’s really a shifting array masquerading as one thing. We propose that it’s hard to empirically quantify intelligence because it exists only relative to our expectations – expectations that are human and, moreover, individual to particular humans. Because of this, much like Monty Python’s Spanish Inquisition, intelligence often turns up in the places we least expect it.

Intelligence is not central to the success of most life on Earth. Consider the grasses: they’ve flourished across incredibly diverse global environments, without planning or debating a single step. Planarian worms regrow any part of their body and are functionally immortal, a trick we can manage only in science fiction. And a microscopic virus effectively shut down global human movement in 2020, without having any notion of what humans even are.

As archaeologists, however, when we track the success of our species over millennia, the temptation is to tie it all to some single, objective trait, a bright guiding star. That is where the concept of intelligence comes in. Our evolutionary success seems to map directly onto our smarts, through the invention of increasingly elaborate tools by our increasingly clever great-great-great-etc-grandparents. In this pervasive – albeit stylised and narrow – version of the human story, stone hand-axes and symbolic beads led inevitably to agriculture, writing and mechanised landscapes, setting the stage for more recent triumphs, including winning wars and Nobel Prizes, accumulating wealth, and reaching the Moon (first, preferably).

Despite its nebulous nature, intelligence is important to us, and so we seek it in others – in romantic partners, pets, leaders, friends and coworkers. We sometimes imbue ornery or helpful objects of daily use with intelligence, for example, when we are helped by a new smartphone app or foiled by a Machiavellian padlock. We wonder about it in nonhuman animals (hereafter, animals), from wild elephants and dolphins to caged monkeys and cats, and debate endlessly whether they have it. Massive effort is currently directed towards trying to understand intelligence, and build vastly more of it, under the umbrella of artificial intelligence (AI) programs. It is even a fundamental part of what we hope to find in alien life, made explicit in the long-running search for extraterrestrial intelligence (SETI).

But despite facilitating the global reach of our species, intelligence remains notoriously slippery to define. When pressed, scholars often point to more tractable mental skills such as abstraction, problem-solving, efficiency, learning, planning, social cognition and adaptability – even numeracy or the ability to recognise oneself in a mirror – although they quibble over which ones most demonstrate intelligent behaviour.

This plurality is precisely what we should anticipate: intelligence is not and never has been a single entity. Instead, it is a hominin-shaped heuristic, a way for us to easily perceive valued characteristics in other people. Like beauty, it lies in the eye of the beholder. And just as we cannot expect to automate the personal, shifting lens through which each of us sees beauty, a search for anything like artificial general intelligence (AGI) misses the point: nothing in intelligence makes sense except in the light of humanity, and our own evolved perceptions.

The natural world overflows with animals that see, hear, smell and feel in very different ways than we do, along with living in conditions that would crush, freeze, dissolve or cook us alive. There are also a multitude of smaller and single-celled organisms thriving in ways that don’t easily fit into our scale of reality, not to mention the kingdoms of plants and fungi out there. Every species alive today can be considered our equal in the success game, by the simple virtue of continued existence. Physically speaking, humans are a middling mammal with an odd hair pattern, a badly evolved back, and a mouth that no longer fits all our adult teeth. All of which is why we really like brains.

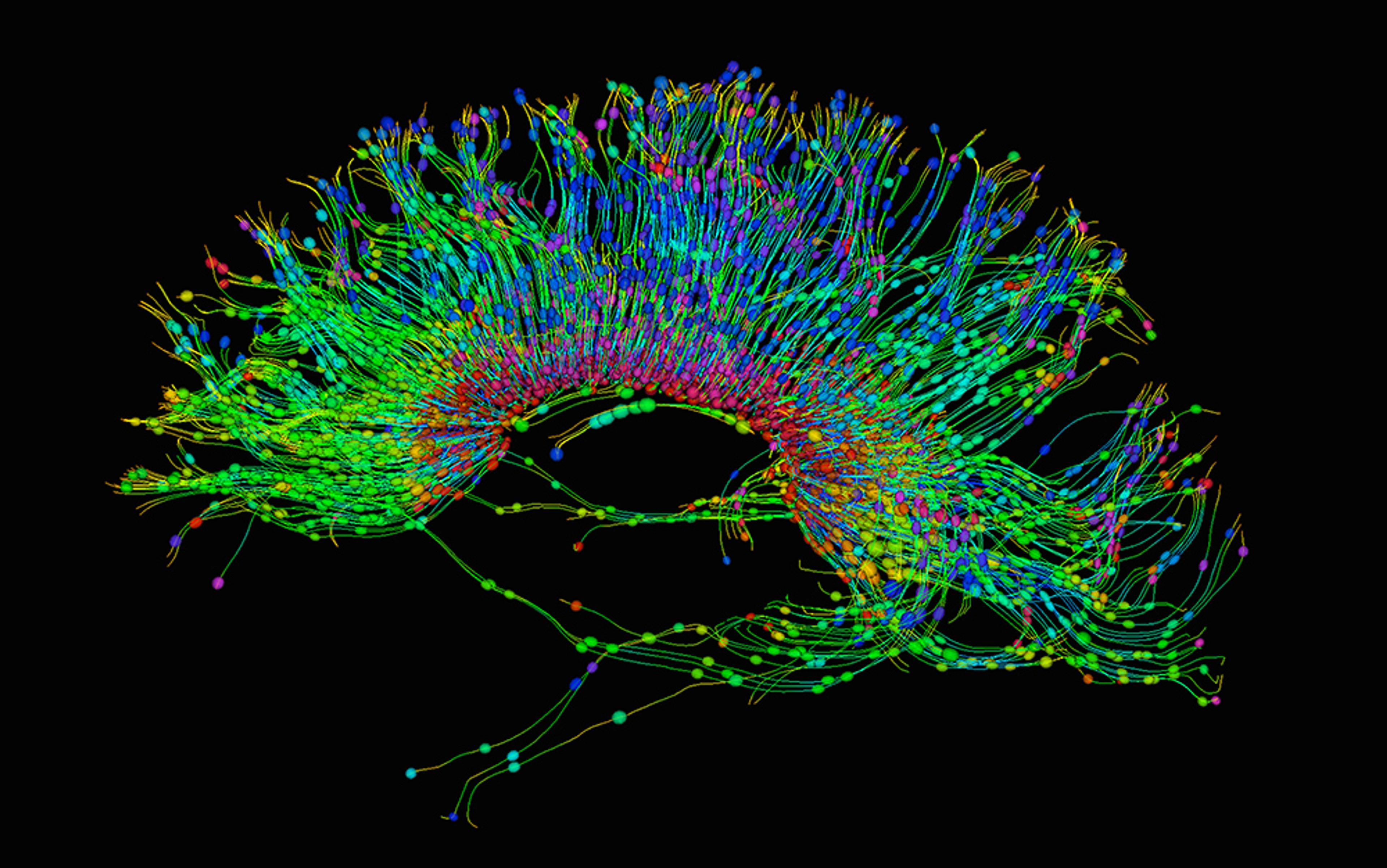

Absolute brain size, relative brain size, brain organisation, and neuronal density have all been used to predict where intelligence will emerge. Among living animals, Homo sapiens has the highest encephalisation quotient, meaning that our brains are much bigger than expected for our body size. This plays to our vanity, but some of the smartest creatures out there have brains quite unlike ours – cuttlefish, for example, rely on neurons in their arms for complex problem-solving. African grey parrots have the smarts of a human child, but much smaller brains than might be expected. Shrews, on the other hand, have some of the highest neuronal densities among mammals but, ironically, they aren’t terribly shrewd. Tiny-brained digger wasps use tools, and monarch butterflies perform continent-spanning annual migrations. Large brains are important for human intelligence, but life finds other ways to succeed.

Adding to the mire, intelligent behaviour in people is not always the result of conscious choice or rational strategy, but can arise from autonomic processes. The cognitive bubbling up of hunches, intuitions and gut feelings can often be credited to ‘lower-order’ systems such as the sympathetic nervous system or the amygdala, or manifest as subliminal or subconscious conditioned responses to environmental cues. In some contexts, the brain itself has been suggested as a poor candidate for the locus of intelligence. Supporters of swarm or collective intelligence tell us that the problem of problem-solving can be shared among a host of similar entities, as in a shoal of fish or a surge of grasshoppers. Ants build boats, bridges and metropolises with populations in the millions, and yet their individual cerebral horsepower doesn’t amount to much. The boundaries of an interacting group – the nest, the shoal, the rational mind, the nation-state – all can be argued as the scale at which true intelligence arises. Paradoxically, we value intelligence as a marker of individual success, yet it emerges both from a collective of our own neurons and from the aggregate behaviour of groups. To paraphrase Inigo Montoya, we keep using this word, but perhaps it does not mean what we think it means.

If we are going to continue talking about intelligence, we need to at least make sure we’re talking about the same thing. Our starting point is (we hope) uncontroversial: intelligence is a label that humans use to help dissect the world. The label’s existence does not automatically mean that there is a single, true thing to which it corresponds; just as, a few centuries ago, having the word phlogiston did not guarantee the existence of a special substance contained in burnable things. That may seem obvious, but it emphasises that ultimately it is people who choose and name what matters. To answer the question of what intelligence is, we first need to recognise that it’s us – people – asking that question.

Unlike most other organisms, we don’t usually solve our problems with parts of our bodies. We needn’t have the warmest down, sharpest teeth, most toxic skin secretions, or larynxes best optimised for echolocation. Instead, we think about stuff, and then we modify our environments to our advantage; we create tools, employ strategies, construct complex habitats, and move symbols around. This is how humans work. Intelligence does not refer to a single measurable trait or quality, but rather it indexes behaviours and capacities that have arisen at different times throughout our species’ evolutionary history. Rather than a package that popped into being fully formed at a single point in time, this patchwork of selective advantages accrued over many millennia. No surprise, then, that the traits recognisable to us as intelligence co-occur almost exclusively in modern humans.

Intelligence evolved and is evolving. Some 7 million years ago, our last common ancestors with chimpanzees were already capable of cultural behaviours and tool use, and probably had advanced understandings of physical cause and effect. Around 3.4 million years ago, our clever 100,000-times-great-grandmother (someone like Lucy the australopith) made, then used, sharp stone tools to strategically scavenge meat. Access to meat gave her descendants the extra energy they needed to fuel their costly brain tissues, with which they then formulated ever more complicated tools and strategies.

Since that time, our lineage has been doubling down on intelligence as an investment strategy. Our Homo erectus ancestors, from 1.8 million years ago, endowed us with the ability to hunt, maybe to cook, and to make elaborate tools like hand-axes, watercraft and baby slings. The increasing need to transfer knowledge and strategically coordinate with one another gave a selective advantage to those who were good communicators. Some kind of speech probably burbled up between 2 million and 500,000 years ago, between Homo erectus and our last common ancestor with Neanderthals and Denisovans. The ability to encode information externally – in symbolic media such as beads, tally sticks, tattoos or cave paintings – also harks back to one of our Middle Pleistocene ancestors. What is clear is that our own species took this ball and ran with it, inventing writing, concrete, iPhones, chambers of commerce, and quantum computers all in the past 10,000 years.

Looking back, it makes sense that human intelligence is hard to pin down. Intelligence is not a single empirical, positivist quality that exists in nature – it’s a way we identify co-occurring traits that, in our species, are likely to mean ‘success’. Intelligence is real, because it’s real to us, but it’s not one thing. As an analogy, think of a rainbow. Rainbows exist, sure, but only to someone watching water droplets with the sun behind them and less than 42 degrees above the horizon. A rainbow is a unified concept that indexes a known thing, and yet a rainbow is inherently a matter of perspective. Assuming these preconditions for rainbow-viewing are met, what we’re really talking about is an aggregate of culturally derived (is turquoise blue or green?), discrete yet overlapping (red or orangey red or orange?), arbitrary yet real (blue isn’t yellow, but grades into it) divisions in the spectrum of visible light. Moreover, a rainbow makes sense to us as a concept only because we have an evolved sensory apparatus that can perceive it, as primates who typically have three types of cone cell in our eyes. Intelligence has much the same properties: think of it as the ever-shifting rainbow our ancestors used to get things done. It indexes an aggregate of evolutionarily adaptive components, with discrete yet overlapping (numeracy, tool use, symbolic thinking) and arbitrarily divided yet real (chess grandmaster intelligence vs diplomat intelligence vs rocket scientist intelligence vs customer service intelligence) capacities that kept our ancestors alive.

So why do we keep insisting that all these things go together as a unified whole? What is the point of discerning intelligence in one another, and why does it matter so much to us that we’ve spent billions trying to find and create it in machines?

Throughout our history, assessing the capacities of other humans – the default actors in our evolutionary social world – has been a matter of life and death. Not merely having intelligence but recognising it has paid great evolutionary dividends. It is a priceless cipher, alerting us to skills in communication, coordination, technology, strategy, planning, pattern recognition, and using the environment to our advantage. We can view human life as a set of reliably occurring problems, literally everyday problems, that revolve around survival, comfort, and finding meaning in maintaining our existence. Most of those problems are common enough across our friends, family and neighbours that any solutions they come up with will work for us too, alongside any social benefits that come from just fitting in with the group. Likewise, their mistakes – especially the fatal ones – offer valuable lessons for our own actions that should not or could not be learned independently. Picking up on those cues is a key part of surviving in the human world.

The capacity to enact, recognise and transmit novel, adaptive, ‘intelligent’ behaviours kept our ancestors alive, but not through feats of strength or physical prowess. Consider again one of our australopith ancestors, who – using just their body – didn’t stand a chance against the business end of a leopard. Every one of Lucy’s neighbours who tried to go it alone against a big cat risked a quick death, but those who paid attention to the ways that their fellow hominins survived leopard encounters were forewarned and literally forearmed. Leopards are a solvable problem, and those who solved it were displaying a quality that our species has decided to call intelligence.

It doesn’t matter if the solution was self-camouflage, fashioning a pointy stick, coordinating an australopith horde, constructing a covered pit, yelling instructions to your friend in a tree, gaining the high ground, or offering the cat a mouse (each requiring different levels of environmental, technological or social coordination and planning). Because intelligence relies on the judgment of observers, it is outcome-based. Obviously, group members who manifest this trait are those we should seek to align ourselves with – emulate, befriend, marry, have on our team, listen to, promote as leaders – or to watch out for. In a human evolutionary context, assessing intelligence served as a gloss for ways of doing things that gave our ancestors a unique competitive edge. While the outputs of intelligence may have changed over time, they continue to grab our attention because together they advertise fitness, and have been maintained across generations through adaptive social selection to ensure humans’ survival.

Finding intelligence triggers a mental alarm bell, then, in the same way that seeing beauty does. Its survival value means that we are predisposed to look for it. The human brain has been described as a prediction machine, one that builds a statistical model of the world from all the stuff that flows through our senses, and then tracks how well that model matches new information as it comes in. Having an accurate model makes processing reality more efficient – a benefit for expensive tissue like your brain – since you need to pay attention only to those rare bits of information that are out of alignment. The result is that most of the external world can be ignored much of the time, as you move through an environment largely populated by background caricatures of trees, clouds, buildings and even people. However, your mind also has a set of alerts that can drag you out of cruise control. Predators trigger those alerts, as do a sudden loud noise, an unexpected fall, a delicious smell from a nearby bakery or hotdog stand, or a particularly attractive person walking by. What these events have in common is not their intrinsic nature, but what they elicit in us: surprise.

Surprisal, as it is sometimes formally called, is what happens when the expected world and the reported one don’t match up. This technical version of surprise aligns neatly with everyday experiences that lead to laughter, shock, fear and so on, depending on whether the surprise is welcome or not. Crucially here, surprise is subjective and fluid over time. It is relational, existing only in comparison to our expectations. Surprise’s job is to alert us that there is something about the world that requires our attention, something we didn’t anticipate – favourable or not. Intelligence, then, invokes a particular flavour of surprise, when we see someone achieve an outcome that goes beyond our own model – built from our personal experiences to that point – of how the world’s problems can, or will, be solved.
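In information theory, the surprisal of an event that an observer assigned probability p is −log₂ p, measured in bits: the less likely the outcome seemed beforehand, the larger the number. The short sketch below is our own illustration rather than anything drawn from a study; the two observers and the probabilities they assign are hypothetical, chosen only to show that the same behaviour yields very different surprisal depending on who is watching.

```python
import math


def surprisal_bits(p: float) -> float:
    """Return the surprisal, in bits, of an event the observer assigned probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in the interval (0, 1]")
    return -math.log2(p)


# Hypothetical probabilities two observers might assign, before watching,
# to a ground squirrel rubbing chewed rattlesnake skin on its body.
observers = {
    "casual onlooker": 0.01,     # assumed: barely imagines this is possible
    "squirrel specialist": 0.9,  # assumed: has seen it many times
}

for who, p in observers.items():
    # Same event, different expectations, different surprise.
    print(f"{who}: {surprisal_bits(p):.2f} bits")
```

Run as written, it reports about 6.64 bits for the onlooker and 0.15 bits for the specialist. The squirrel’s behaviour is identical in both cases; only the observer’s model differs.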

Since we’re quick to assign intelligence to surprising solutions, we are also prone to false positives. Unexpected actors activate our cognitive tripwire. We might be surprised, for example, and see unexpected intelligence in decision-making slime moulds that navigate to solve a maze, or in an octopus named Otto solving the problem of nearby bright lights by shooting water jets to short out his aquarium’s electrical network. And it is hard not to be surprised when we learn of California ground squirrels that chew up discarded rattlesnake skin and rub it on their bodies, disguising their scent from the predator. But when the same squirrel freezes in oncoming traffic, we certainly don’t index that as intelligent behaviour. What we may fail to realise is that squirrels are hardwired to avoid detection by not triggering their natural predators’ sensitivity to movement. Behaving inflexibly – enacting a rigid, time-tested behavioural pattern as a response to certain stimuli – will, just like the ‘intelligent’ snakeskin-rubbing behaviour, usually ensure the animal’s survival. In each case, the core of intelligence lies not in what the slime mould, octopus or squirrel is doing, nor in the adaptive context for a particular behaviour, but within us. We hallucinate intelligence.

Animals are particularly well suited to ring our evolved alarm bells. Many of them move and interact with the world in ways that we can broadly understand, living at a speed and size that we can comfortably watch, and, like us, facing a daily search for food, shelter and mates. And the more like us an animal is – if it has two eyes and a jaw and four limbs and lives on land – the more readily we can map their solutions to their own problems onto our own expectations. But even things that don’t resemble us regularly catch us out. When an animal surprises us by achieving a goal, solving a problem, or enacting a successful strategy that we did not expect, we are primed to register the mismatch between the demonstrated behaviour and our expectations as intelligence.

This happens more than we might think, for example, when we mistakenly assume that something is too simple or small to perform a complex sequence of actions. In this way, bees or bacteria can appear more intelligent the more we get to know them. However, we have inbuilt limits to how long we can remain surprised. Continued enquiry may ultimately set a new baseline of expectations, to the extent that we lose our surprise and dial back how much of their behaviour we label as intelligence, until eventually we come to see it as explicable evolutionary programming. We recalibrate our expectations, just in time to stop short of ascribing ‘true’ intelligence to nonhuman entities. For example, we tell ourselves that humans do something clever or tactical because our brains have simulated that this course of action will produce favourable outcomes, but when we learn that ants do the same thing by enacting preprogrammed responses to pheromones, surely that doesn’t count. This cycle emphasises again that the watcher plays the central role, rather than any innate characteristic of – or favourable outcome for – the watched. Just as your ability to feel surprise is fluid, dependent on your age, your cultural background, and what you know and expect from a situation, so too is your assignment of intelligence relational and changeable. Take the example of salticid spiders, like those of the genus Portia, which can plan a complicated route from where they stand to potential prey, and then follow that path even if they can no longer see the prey during their journey. If, like us, you expected spiders not to be capable of essentially creating and using a mental map, that is a surprising discovery. But it doesn’t change what those spiders had been doing this whole time, under our very noses – it tells us about what we predicted they could do.

Moreover, when we describe other animals or things as having intelligence, we may inadvertently impute other human-like qualities to them. If a sea otter can use tools, we might unconsciously assume that it is like us in other ways; maybe it has counting skills, thinks abstractly, plans ahead, or knows its reflection in a mirror. If it’s intelligent, how could it not? But that is an unwarranted leap, emerging from the way we have built self-centred definitions of intelligence. In humans, skilful tool use is a highly accurate indicator of a certain level of development in theory of mind (the ability to attribute mental states to others), delayed gratification and impulse control, procedural strategy, and numeracy, because those traits co-develop as human children grow up. A person speaking to you using a complex, recursive language very likely can also plan what they will have for dinner and execute on that plan, not because language is the sign of intelligence, but because language is a sign of a human, and humans are also good at planning, compared with other known life forms. Like life and time, intelligence is a useful shorthand for a complex idea that helps us structure our lives, as people. It is primarily a synonym for humanness, and judging other animals by this metric does a disservice to their own unique sea otterness, worminess, or sharkfulness.

In our view, intelligence has inadvertently become a ‘human success’-shaped cookie cutter we squish onto other species. Switching from baking to sports metaphors, we could say that everyone else – animals, amoebas, AIs and aliens – has to play the game on a field that we have laid out, according to rules that we have established and proven ourselves extremely competent at following. We prize novelty and efficiency, so we are surprised when an animal, swarm or program does things more quickly than we expected, or takes unexpected shortcuts to solving a problem (as the AI AlphaGo did in game two, move 37 of its matches against the world Go champion Lee Sedol in 2016).

Humanity’s relationship to AI is characterised by similar cycles of underestimation and surprise, followed by exploration, understanding and explanation, and a subsequent downgrading of our belief that intelligence is currently at play. Current large language models (LLMs) such as ChatGPT converse in sentences that are almost indistinguishable from those of another person, and their rapid search ability, multiple layers of tweakable parameters, and training on massive bodies of human knowledge allow them to succeed at standard intelligence tests. However, the brittleness and uncertain mechanisms of these programs have led to doubt about whether this is ‘true’ artificial intelligence, which instead might be found only when machines can deal with abstract concepts, generalising from small numbers of examples to predict missing pieces – or the next piece in a series of puzzles, something we humans happen to be good at. Once again, human minds are the shibboleth in the shadows: if a computer exhibits one trait of human intelligence, but not the others, it slips in our estimation of true smarts.

This is sometimes called the ‘AI effect’, explained by the computer scientist Larry Tesler as our tendency to believe that ‘Intelligence is whatever machines haven’t done yet.’ Now that it is possible for machines to beat human chess grandmasters, the game is no longer widely seen as a marker of ‘true’ intelligence. In areas of medicine where AI diagnoses are more reliable than those of doctors, diagnosing those diseases will similarly come to be considered unintelligent, mere rote computing. What changes is not the theoretical ability of a machine to match or exceed a human, but our understanding of what a given system is capable of. Once we can reliably predict its success, it is no longer surprising, and the machine’s intelligence is dismissed as merely mechanistic. The goalposts move of their own accord.

Mobile intelligence goalposts are not unique to animals and AI, and we expect they have been around as long as there have been humans. Many of our recent and distant ancestors lived in tectonically active regions, prone to volcanoes and earthquakes. These notoriously unpredictable and occasionally catastrophic events are ripe for being seen as the handiwork of intelligent – if mercurial – gods or spirits. However, with greater knowledge has come greater understanding, and predictions and explanations of eruptions and earthquakes are increasingly (albeit not yet perfectly) accurate. A child might still be surprised by the sudden noise of a thunderstorm and attribute some form of punitive or malevolent intelligence to it. An educated adult knows better, and instead attributes human-like intelligence to a new chatbot – but only for now. These are normal reactions to an unpredictable world. We do best when we know whom to appease, and with whom our allegiances lie.

The things we call intelligence have transformed us from small, slow, physically weak apes into the solar system’s most lethal apex predators. However, when we ask whether other animals are intelligent, we’re not usually asking what capacities or kinds of bodies were advantageous in their evolutionary past. We’re really asking whether they do things the way we do. Sometimes, the Venn diagram of animals’ success strategies overlaps with ours (hello dolphins!). But in seeking intelligence, we’re really seeking ourselves – seeking success strategies that match those found in our own evolutionary story. If a machine trained on human speech passably reproduces human speech; if a squirrel enacts a stereotyped behaviour as a response to a stimulus; if a bear, or a daffodil for that matter, won’t learn to press a lever that allows it to open a box to get a treat – so what? A focus on behaviours that resemble ours often plasters over much more interesting questions. What might success look like to a tardigrade, or a pigeon, or a horseshoe crab? Would a peacock mantis shrimp, able to see an almost unfathomable array of colours (as well as polarised light) and strike with incredible force while generating ultrasonic cavitation bubbles, be moved by our ability to beat it at checkers?

Where does all this leave intelligence as a marker of human success? Actually, it’s nicely intact. We will continue to correlate intelligence with the adaptation, sophistication, learning, planning, strategy, abstraction and so on that we see in the people around us. We’ve evolved to do it, so we’ll keep doing it. If somebody’s capacity for those things elicits your attention, and then surprises you, intelligence is there. After all, intelligence is a unifying concept, but what it unifies is the human experience: it is the little drawing on our badge of success as a species.

Viewed this way, intelligence is unshackled from any one parochial definition. Parents will follow the changing intelligence of their growing children, animal lovers will be delighted by what they see as the intelligence of their pets, and AI researchers will authoritatively state that playing chess just isn’t intelligent behaviour (any more). Rather than seeking to quantifiably compare these things, we can instead realise that they don’t – and don’t need to – align at a deeper level.

Eventually, instead of talking about how machines, animal collectives, or individual birds and bugs exhibit intelligence, we should be better prepared to investigate how they evolved or iterated those actions in their own evolutionary spaces, unshackled from human-shaped standards. For those seeking a middle ground, it might be tempting to say that each species has its own intelligence, but that claim carries too much baggage at this point. A planet full of problem-solving life exists apart from humans, and none of it is obligated to fit neatly into our subjective, self-serving mindset. We need to avoid the real risk that we will miss animal or machine (or plant, fungal, bacterial, or even extraterrestrial) ways of succeeding just because they are fundamentally alien to our conceptual toolkit.

Like gazing through a stained-glass window at a vibrantly coloured, snow-covered landscape, intelligence isn’t just what we’re looking for, it’s what we are looking through. Humans value intelligence, and that is not about to change. What may change is our capacity to appreciate other kinds of life on their own terms, divorced from anthropocentric box-checking. What we hope our suggestion does is prevent any one limited metric from skewing or obscuring the diverse kinds of success that exist in our world, including those we have yet to discover. We won’t just see more clearly, we’ll see more than we did before. If intelligence is no longer a default metric for species’ worthiness, how might our value judgments shift? Would we be more inclined toward wonder, and might this wonder impel us to conserve the other wondrous creatures with whom we share this planet, and the environments in which they evolved their own flavours of success? We think that would be the smart thing to do.