In the autumn of 2007, the then prime minister of the UK, Gordon Brown, was riding on a wave of popularity. He had just taken the reins from Tony Blair, adroitly dealt with a series of national crises (terrorist plots, foot and mouth disease, floods) and was preparing to go to the country for a new mandate for his government. But then a decision to postpone the election tarred him with a reputation for dithering and his authority began to crumble. The lasting impression was not one of astute politicking — it was of a man plagued by indecision.

Whether it takes the form of lingering too long over a restaurant menu or of abrupt U-turns by politicians, flip-flopping does not have a good reputation. By contrast, quick, decisive responses are associated with competence: they command respect. Acting on gut feelings without agonising over alternative courses of action has been given scientific credibility by popular books such as Malcolm Gladwell’s Blink (2005), in which the author tries to convince us of ‘a simple fact: decisions made very quickly can be every bit as good as decisions made cautiously and deliberately’. But what if the allure of decisiveness were leading us astray? What if flip-flopping were adaptive and useful in certain scenarios, shepherding us away from decisions that the devotees of Blink might end up regretting? Might a little indecision actually be a useful thing?

Let’s begin by casting a critical eye over our need for decision-making speed. It has been known for many years that the subjective ease with which we process information, termed ‘fluency’ by psychologists, affects how much we like or value things. For example, people judge fluent statements as more truthful, and easy-to-see objects as more attractive. This effect has consequences that extend beyond the lab: the psychologists Adam Alter and Daniel Oppenheimer, of the Stern School of Business in New York and the Anderson School of Management in Los Angeles respectively, found that if a company name is easy to pronounce, the company tends to have a higher stock price, all else being equal. The interpretation is that fluent processing of information leads people implicitly to attach more value to the company.

Fluency not only affects our perceptions of value; it also changes how we feel about our decisions. For example, if stimuli are made brighter during a memory test, people feel more confident in their answers even though those answers are no more likely to be correct. In a study I conducted in 2009 with the psychologists Dorit Wenke and Patrick Haggard at University College London, we asked whether making actions more fluent also altered people’s sense of control over their decisions. We didn’t want to obviously signal that we were manipulating fluency, so we inserted a very briefly flashed arrow before the target appeared. In a separate experiment we confirmed that participants were not able to detect this arrow consciously. However, it still biased their decisions, slowing them down if the arrow was opposite in direction to their eventual choice, and speeding them up if it was in the same direction. We found that people felt more control over these faster, fluent decisions, even though they were unaware of the inserted arrow.

Fluent decisions, therefore, are associated with feelings of confidence, control, being in the zone. Mihaly Csikszentmihalyi, professor of psychology and management at Claremont Graduate University in California, has termed this the feeling of ‘flow’. For highly practised tasks, fluency and accuracy go hand in hand: a pianist might report feelings of flow when performing a piece that has been internalised after years of practice. But in novel situations, might our fondness for fluency actually hurt us?

To answer this question we need to digress a little. At the end of the Second World War, engineers were working to improve the sensitivity of radar detectors. The team drafted a working paper combining some new maths and statistics about the scattering of the target, the power of the pulse, and so on. They had no way of knowing at the time, but the theory they were sketching — signal detection theory, or SDT — would have a huge impact on modern psychology. By the 1960s, psychologists had become interested in applying the engineers’ theory to understand human detection — in effect, treating each person like a mini radar detector, and applying exactly the same equations to understand their performance.

Despite the grand name, SDT is surprisingly simple. When applied to psychology, it tells us that decisions are noisy. Take the task of choosing the brighter of two patches on a screen. If the task is made difficult enough, then sometimes you will say patch ‘A’ when in fact the correct answer is ‘B’. On each ‘trial’ of our experiment, the brightness of each patch leads to firing of neurons in your visual cortex, a region of the brain dedicated to seeing. Because the eye and the brain form a noisy system (the firing is not exactly the same for each repetition of the stimulus), the level of activity on any given trial is probabilistic. When a stimulus is brighter, the cortex tends to fire more than when it is dimmer. But on some trials a dim patch will give rise to a high firing rate, due to random noise in the system. The crucial point is this: you have access to the outside world only via the firing of your visual cortical neurons. If the signal in cortex is high, it will seem as though that stimulus is brighter, even if this decision turns out to be incorrect. Your brain has no way of knowing otherwise.
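The single-sample account above can be simulated in a few lines of Python. This is a minimal sketch under stated assumptions: each patch's cortical response is modelled as its brightness plus Gaussian noise with a fixed standard deviation, and the observer simply picks whichever response is larger. The function names, units and parameter values are hypothetical and are not drawn from any particular experiment.

```python
import math
import random


def prob_correct_single_sample(delta: float, sigma: float) -> float:
    """Analytic accuracy when each patch yields a single noisy response.

    Response = brightness + N(0, sigma**2). The difference between the two
    responses is N(delta, 2 * sigma**2), so the brighter patch produces the
    larger response with probability Phi(delta / (sigma * sqrt(2))).
    """
    z = delta / (sigma * math.sqrt(2.0))
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF at z


def simulate_single_sample(delta: float, sigma: float, n_trials: int,
                           seed: int = 0) -> float:
    """Monte Carlo estimate of the same accuracy, one trial at a time."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(n_trials):
        # Patch A is brighter than patch B by `delta` (arbitrary units).
        response_a = delta + rng.gauss(0.0, sigma)
        response_b = rng.gauss(0.0, sigma)
        # The observer sees only the responses, so it picks the larger one.
        if response_a > response_b:
            correct += 1
    return correct / n_trials


if __name__ == "__main__":
    for delta in (0.25, 0.5, 1.0, 2.0):
        analytic = prob_correct_single_sample(delta, 1.0)
        simulated = simulate_single_sample(delta, 1.0, 20_000)
        print(f"delta={delta:4.2f}  analytic={analytic:.3f}  simulated={simulated:.3f}")
```

With a brightness difference equal to one noise standard deviation, the analytic accuracy is about 76%: roughly one trial in four goes the wrong way, purely because of noise.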

But it turns out the brain has a trick up its sleeve when dealing with noisy samples of information, a trick foreshadowed by the British mathematician Alan Turing during his wartime code-breaking work at the secret Bletchley Park complex. Each morning, the code-breakers would try new settings of the German Enigma machine in attempts to decode intercepted messages. The problem was how long to keep trying a particular pair of ciphers before discarding it and trying another. Turing showed that by accumulating multiple samples of information over time, the code-breakers could increase their confidence in a particular setting being correct.
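Turing's idea can be sketched as a sequential test: keep drawing noisy samples, add up the log-likelihood ratio that each one contributes, and stop once the running total crosses a threshold in favour of one hypothesis. The Python below is an illustrative sketch, not a reconstruction of the Bletchley procedure; it assumes two Gaussian hypotheses with means +mu and -mu, and all names and numbers are hypothetical. (Turing's own bookkeeping used base-10 logarithms, in units he called bans; natural logarithms are used here.)

```python
import math
import random


def sequential_decision(mu: float, sigma: float, threshold: float,
                        rng: random.Random, max_samples: int = 10_000):
    """Accumulate log-likelihood ratios until one hypothesis is favoured.

    Hypothesis "A": samples ~ N(+mu, sigma**2); hypothesis "B": N(-mu, sigma**2).
    In this simulation the true state is always "A". Each sample x adds
    log[N(x; mu, sigma) / N(x; -mu, sigma)] = 2 * mu * x / sigma**2 to the
    running total, and sampling stops once |total| >= threshold.
    Returns (choice, number_of_samples_used).
    """
    total = 0.0
    for n in range(1, max_samples + 1):
        x = rng.gauss(mu, sigma)               # one noisy sample of the world
        total += 2.0 * mu * x / sigma ** 2     # its weight of evidence
        if total >= threshold:
            return "A", n
        if total <= -threshold:
            return "B", n
    # Still undecided: fall back on whichever way the evidence leans.
    return ("A" if total >= 0 else "B"), max_samples


def accuracy_and_mean_samples(mu: float, sigma: float, threshold: float,
                              n_trials: int = 2000, seed: int = 1):
    """Run many decisions; return (proportion correct, mean samples used)."""
    rng = random.Random(seed)
    results = [sequential_decision(mu, sigma, threshold, rng) for _ in range(n_trials)]
    accuracy = sum(choice == "A" for choice, _ in results) / n_trials
    mean_samples = sum(n for _, n in results) / n_trials
    return accuracy, mean_samples


if __name__ == "__main__":
    # With equal prior odds, a threshold of log(k) means "stop once one
    # hypothesis is k times more likely than the other".
    for odds in (3, 9, 99):
        acc, n = accuracy_and_mean_samples(0.25, 1.0, math.log(odds))
        print(f"odds {odds:>2}:1  accuracy={acc:.3f}  mean samples={n:.1f}")
```

Raising the threshold from odds of 3:1 to 99:1 buys higher accuracy at the cost of more samples per decision.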

Remarkably, the brain appears to use a similar scheme of evidence accumulation to deal with difficult decisions. We now know that, instead of relying on a one-off signal from the visual cortex, other areas of the brain, such as the parietal cortex, integrate several samples of information over hundreds of milliseconds before reaching a decision. Furthermore, this family of evidence accumulation models does a very good job of predicting the complex relationships between people’s response times, error rates and confidence in simple tasks such as the A/B decision above.

Putting these findings together, we learn that there is a benefit from being slow. When faced with a novel scenario, one that hasn’t been encountered before, Turing’s equations tell us that slower decisions are more accurate and less susceptible to noise. In psychology, this is known as the ‘speed-accuracy trade-off’ and is one of the most robust findings in the past 100 years or so of decision research. Recent research has begun to uncover a specific neural basis for setting this trade-off. Connections between the cortex and a region of the brain known as the subthalamic nucleus control the extent to which an individual will slow down his or her decisions when faced with a difficult choice. The implication is that this circuit acts like a temporary brake, extending decision time to allow more evidence to accumulate, and better decisions to be made.
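The 'brake' idea can be made concrete with the drift-diffusion model, a continuous-time version of evidence accumulation. For symmetric bounds at ±a, drift v and noise s, standard closed-form results give an error rate of 1/(1 + e^(2va/s²)) and a mean decision time of (a/v)·tanh(va/s²). The sketch below simply evaluates those two expressions; treating the subthalamic 'brake' as a higher threshold a is an interpretive assumption, and the parameter values are arbitrary.

```python
import math


def ddm_error_rate(drift: float, threshold: float, noise: float = 1.0) -> float:
    """Probability of reaching the wrong bound in a symmetric drift-diffusion model.

    Evidence starts at 0, drifts towards the correct bound (+threshold) at
    rate `drift`, and diffuses with standard deviation `noise` per unit time;
    hitting -threshold is an error.
    """
    return 1.0 / (1.0 + math.exp(2.0 * drift * threshold / noise ** 2))


def ddm_mean_decision_time(drift: float, threshold: float, noise: float = 1.0) -> float:
    """Expected time to reach either bound (decision time only, no motor delay)."""
    if drift == 0:
        # Limit of the formula below as drift -> 0.
        return threshold ** 2 / noise ** 2
    return (threshold / drift) * math.tanh(drift * threshold / noise ** 2)


if __name__ == "__main__":
    # Raising the threshold (pressing the hypothesised "brake") trades time for accuracy.
    for a in (0.25, 0.5, 1.0, 1.5, 2.0):
        print(f"threshold={a:4.2f}  error={ddm_error_rate(1.0, a):.3f}  "
              f"mean time={ddm_mean_decision_time(1.0, a):.3f}")
```

With drift and noise both set to 1, doubling the threshold from 1 to 2 cuts the error rate from about 12% to about 2%, while the mean decision time rises from about 0.76 to about 1.93 time units.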

We don’t yet know whether these insights apply to the weighing of more abstract information, such as decisions about restaurants or holiday destinations. But there are hints that a signal detection-like model might explain these choices too. Daniel McFadden, a Nobel Prize-winning economist at the University of California, Berkeley, proposed an influential theory of choice in the 1970s directly inspired by signal detection theory. The core idea is that the values we assign to choice options are themselves subject to random fluctuations. These fluctuations then lead to different choices from moment to moment.
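A minimal random-utility sketch of this idea: each option has a fixed underlying value, and on every occasion a fresh dose of noise is added before the largest noisy value is chosen. Assuming the noise is independent and follows a standard Gumbel (extreme-value) distribution, the choice probabilities take the logit form associated with McFadden's work; the sketch checks that formula against simulation. The snack values are made up for illustration.

```python
import math
import random


def logit_choice_probs(values):
    """Choice probabilities when each value carries iid standard Gumbel noise."""
    m = max(values)  # subtract the maximum for numerical stability
    weights = [math.exp(v - m) for v in values]
    total = sum(weights)
    return [w / total for w in weights]


def simulate_choices(values, n_trials=20_000, seed=2):
    """Repeat the same choice many times, drawing fresh noise each time."""
    rng = random.Random(seed)
    counts = [0] * len(values)
    for _ in range(n_trials):
        # If E ~ Exponential(1), then -log(E) is a standard Gumbel draw.
        noisy = [v - math.log(rng.expovariate(1.0)) for v in values]
        counts[noisy.index(max(noisy))] += 1
    return [c / n_trials for c in counts]


if __name__ == "__main__":
    snacks = {"chips": 1.0, "cookies": 0.8}  # hypothetical underlying values
    names, values = list(snacks), list(snacks.values())
    for name, p, s in zip(names, logit_choice_probs(values), simulate_choices(values)):
        print(f"{name:8s} logit={p:.3f}  simulated={s:.3f}")
```

With values of 1.0 and 0.8, the higher-valued option is chosen only about 55% of the time: near-equal options produce exactly the moment-to-moment inconsistency described above.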


In a recent study, researchers at the California Institute of Technology (Caltech) looked for signs of this accumulation of value while undergraduates chose between snack items, such as chips or cookies. During each decision, two items were presented on either side of a computer screen, and the students’ eye movements were recorded. Despite decisions being made within a couple of seconds, the pattern of eye movements betrayed subtle aspects of the decision process. When the decision was difficult (when the items were equally appealing), the eyes switched back and forth between the items more often than when the decision was easy. How much we flip between decision items, therefore, reveals the accumulation of evidence for decision-making.

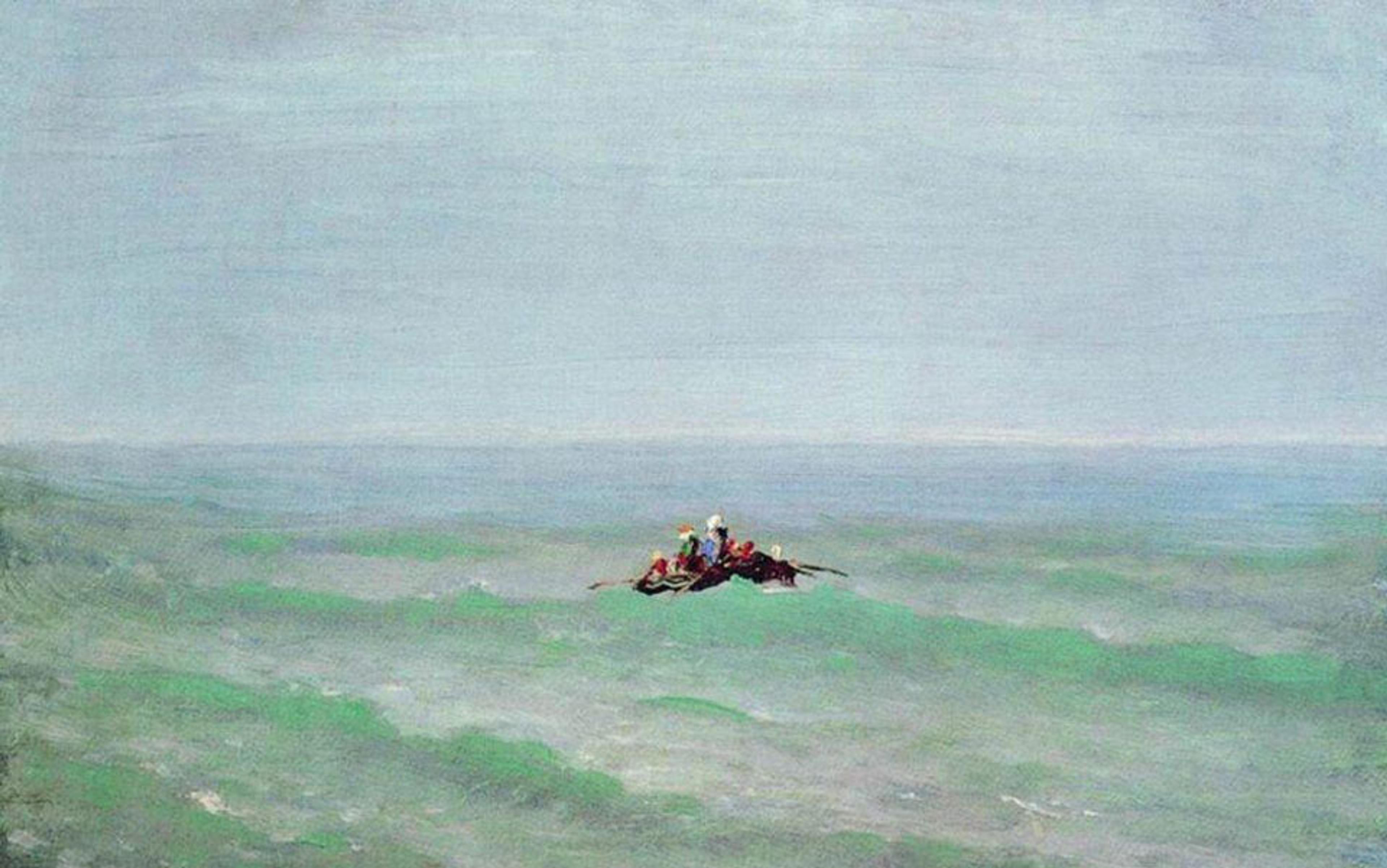

In the evidence accumulation framework, indecision has a surprisingly simple interpretation. ‘Activity’ vacillates between two options over a particular time period: milliseconds for sensory A/B decisions, but perhaps minutes or even days for important decisions such as buying a car. Crucially, however, this neural flip-flopping is not something to be avoided. Instead, flip-flopping is an overt behavioural sign of the brain’s weighing of evidence for and against a decision. Anecdotal evidence suggests we are not alone in showing overt signs of indecisiveness. In a pioneering experiment, J David Smith, professor of psychology at the University at Buffalo in New York, trained a dolphin named Natua to press one of two levers depending on whether a sound was low- or high-pitched. Once Natua could perform the task on cue, Smith introduced ambiguous tones. Natua vacillated between the two levers, swimming towards one and then the other, as if he were uncertain which one to press.
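The vacillation described above can be illustrated with a simple random-walk accumulator. In this hypothetical sketch, positive totals favour option A and negative totals favour option B, the drift stands for how different the options are, and a 'switch' is any step at which the favoured option changes. Treating these switches as a stand-in for eye movements or a dolphin changing course is an assumption made for illustration, not a model taken from the studies described.

```python
import random


def count_leader_switches(drift: float, threshold: float, rng: random.Random,
                          max_steps: int = 100_000):
    """Run one random-walk decision and count changes of the favoured option.

    Each step adds drift + N(0, 1) to the running total; positive totals
    favour option A, negative totals favour option B. The decision is made
    when |total| reaches `threshold`.
    Returns (number_of_switches, steps_taken, final_choice).
    """
    total = 0.0
    leader = None
    switches = 0
    for step in range(1, max_steps + 1):
        total += drift + rng.gauss(0.0, 1.0)
        current = "A" if total > 0 else "B"
        if leader is not None and current != leader:
            switches += 1   # the accumulator has "flip-flopped"
        leader = current
        if abs(total) >= threshold:
            return switches, step, current
    return switches, max_steps, leader


def mean_switches(drift: float, threshold: float = 5.0,
                  n_trials: int = 2000, seed: int = 3) -> float:
    """Average number of switches per decision over many simulated decisions."""
    rng = random.Random(seed)
    runs = [count_leader_switches(drift, threshold, rng) for _ in range(n_trials)]
    return sum(switches for switches, _, _ in runs) / n_trials


if __name__ == "__main__":
    # Small drift = near-equal options (hard); large drift = easy choice.
    for drift in (0.05, 0.2, 0.5, 1.0):
        print(f"drift={drift:4.2f}  mean switches={mean_switches(drift):.2f}")
```

In this sketch, a small drift (near-equal options) produces more switches per decision than a large drift, in line with the observation that hard choices provoke more back-and-forth.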

Some researchers have interpreted these signs of indecision as indicating that the dolphin has awareness of its own uncertainty during the decision. This could be the case, but it need not be: swimming from one option to the other could simply be the dolphin equivalent of humans moving their eyes between options in the Caltech study. Either way, evidence accumulation models tell us that indecision is not a bad thing: the brain is slowing things down for a reason.

The speed-accuracy trade-off indicates that there can be negative consequences from being too decisive. Quicker decisions are often associated with more errors and greater potential for regret further down the line. If we are forced to make a decision quickly, evidence accumulation might not have finished before the decision is executed. By the time our muscles are contracting and we are pressing the ‘send’ button on a rash email, we might have accumulated additional evidence to suggest that this is not a good idea. Such gradual realisation of regret is writ large in Ian McEwan’s novel Atonement (2001), in which one of the characters, Robbie, is ‘seized by horror and absolute certainty’ that the letter he has just sent to his sweetheart is not the one he intended, with consequences that ripple through the rest of his story.

The implication is that sometimes our actions decouple from our intentions, revealing an intimate connection between evidence accumulation, indecision and error correction. Experiments support our intuitions about this: in a simple A/B decision, within only tens of milliseconds after the wrong button is pressed, the muscles controlling the correct response begin to contract in order to rectify the error. For rash emailers, the problem even has a modern remedy: Gmail users are now able to set up a 30-second grace period in which to ‘undo’ a message they have just sent. In other words, a response can be made, or an email sent, before our decision circuitry has appropriately weighed up all the evidence. Yet only a short time later, when enough evidence has been accumulated, we might become aware of something we should have said or done: a neuroscientific version of l’esprit de l’escalier.

Consistent with this idea, a recent study by the neuroscientists Lucie Charles and Stanislas Dehaene at the NeuroSpin centre near Paris showed that when subjects make errors under time pressure, a neural signature of the response that was intended but not actually executed can be identified. Part of your brain seems to know what you should have done, even if the decision was executed too quickly, and too imperfectly, for the right action to have been made. In contrast, when given enough time to operate, intention and action work together in reasonable harmony, minimising the chances of a subsequent change of mind or regret.

Indecision is an inevitable feature of the neural mechanisms that underpin decision-making. With the right experimental set-up, we can observe the vacillations produced by this system in people’s behaviour. Cortico-basal ganglia circuits set the trade-off between speed and accuracy, and exquisitely sensitive mechanisms detect and correct errors even after decisions have been made. Yet decisiveness holds a subjective allure, perhaps because of the illusion of fluency, control and confidence it creates.

How should we reconcile the benefits of accumulating evidence with the costs of feeling disfluent? For simple decisions that take only a few seconds to make, such as whether to reply to an email now or later, or which salad to have for lunch, there are some clear guidelines. First, decision-making tends to become more accurate if given a little extra time to operate. We should allow some indecision into our lives. Second, the agonising feeling of conflict between two options is not necessarily a bad thing: it is the brain’s way of slowing things down to allow a good decision to be made. Third, we should not ignore or suppress a change of mind after the fact: it is our brain’s way of using all the available information to correct inevitable errors in a time-limited process.

For important real-world decisions, however, the picture is certainly more complicated. The neuroscience of decision-making is in its infancy. Humans are endowed with the ability to simulate the future, and to use language to weigh up pros and cons — we can argue with ourselves and bounce ideas off others — all of which make such decisions very complex. But consider that important decisions are often the most difficult because they induce a state of indecision. (Charles Darwin even made a list of the pros and cons when deciding whether to marry, eventually deciding the pros outweighed the cons.) In these cases, our current understanding of the delicate balance of decision circuitry suggests we shouldn’t just blink and go with our gut instinct. In Habit (1890), the American philosopher William James said: ‘There is no more miserable human being than one in whom nothing is habitual but indecision.’ Yet enduring a little bracing indecision might be just what we need to navigate a busy, confusing world of choices.