Creativity doesn’t have a deep history. The Oxford English Dictionary records just a single usage of the word in the 17th century, and it’s religious: ‘In Creation, we have God and his Creativity.’ Then, scarcely anything until the 1920s – quasi-religious invocations by the philosopher A N Whitehead. So creativity, considered as a power belonging to an individual – divine or mortal – doesn’t go back forever. Neither does the adjective ‘creative’ – being inventive, imaginative, having original ideas – though this word appears much more frequently than the noun in the early modern period. God is the Creator and, in the 17th and 18th centuries, the creative power, like the rarely used ‘creativity’, was understood as divine. The notion of a secular creative ability in the imaginative arts scarcely appears until the Romantic Era, as when the poet William Wordsworth addressed the painter and critic Benjamin Haydon: ‘Creative Art … Demands the service of a mind and heart.’

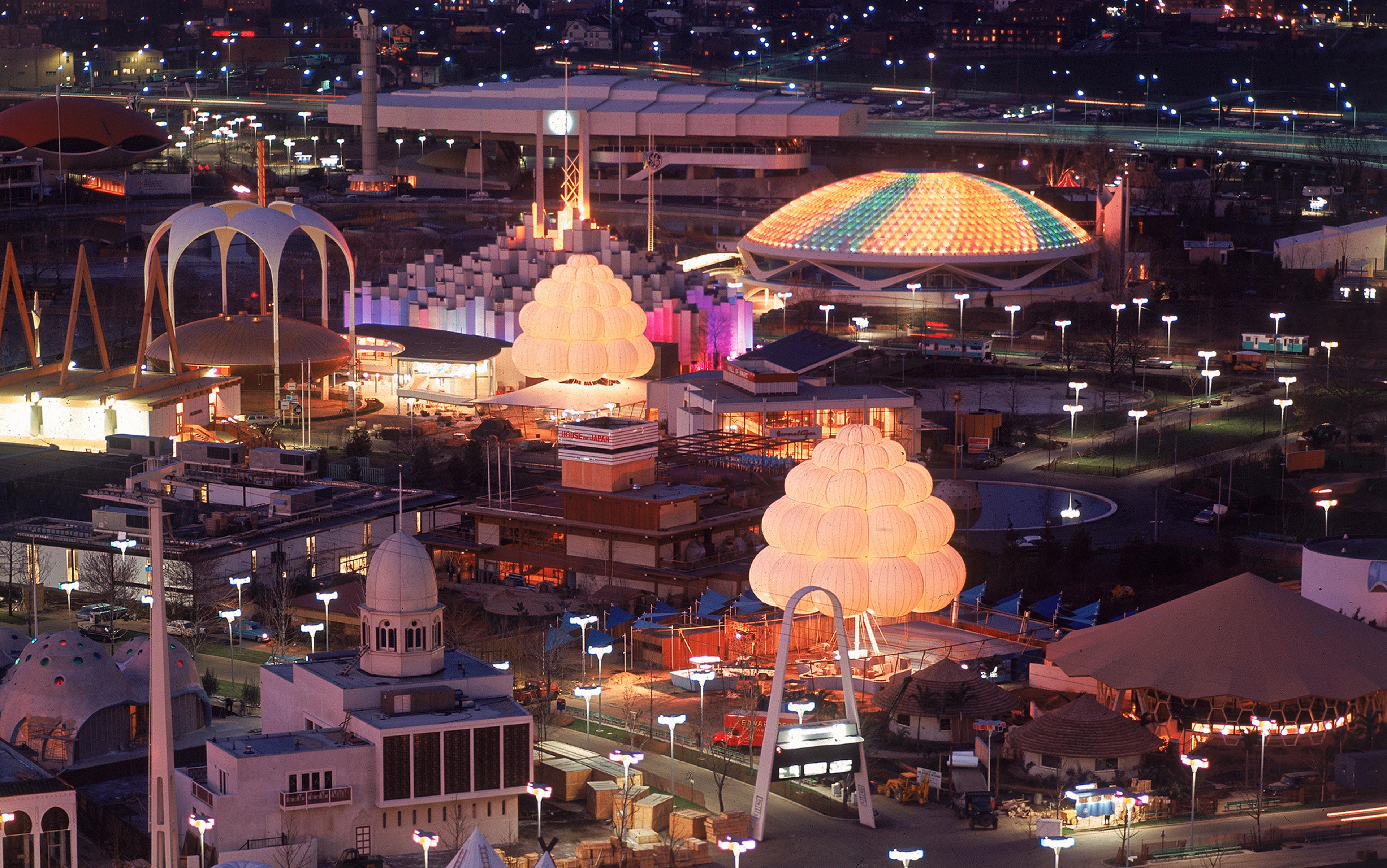

This all changes in the mid-20th century, and especially after the end of the Second World War, when a secularised notion of creativity explodes into prominence. The Google Ngram chart bends sharply upwards from the 1950s and continues its ascent to the present day. But as late as 1970, practically oriented writers, accepting that creativity was valuable and in need of encouragement, nevertheless reflected on the newness of the concept, noting its absence from some standard dictionaries even a few decades before.

Before the Second World War and its immediate aftermath, the history of creativity might seem to lack its object – the word was not much in circulation. The point needn’t be pedantic. You might say that what we came to mean by the capacity of creativity was then robustly picked out by other notions, say genius, or originality, or productivity, or even intelligence – or whatever capacity it was believed enabled people to think thoughts considered new and valuable. And in the postwar period, a number of commentators did wonder about the supposed difference between emergent creativity and such other long-recognised mental capacities. The creativity of the mid-20th century was entangled in these pre-existing notions, but the circumstances of its definition and application were new.

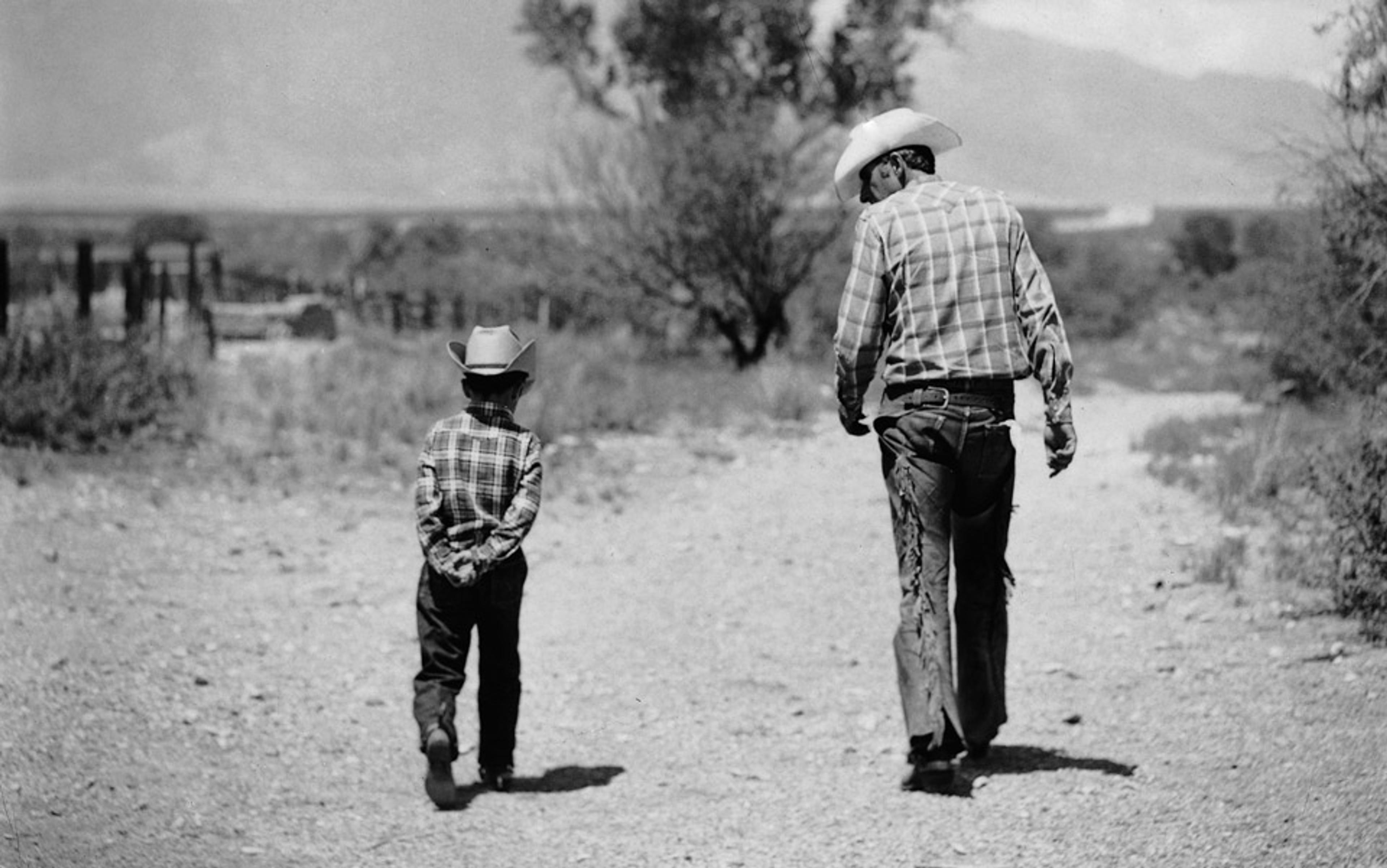

With those definitional considerations in mind, there were consequential changes during the 19th and early 20th centuries in views of the nature and circumstances of such diffuse categories as original and productive thought. Increasingly, these categories were being respecified as mundane, as inflections and manifestations of ordinary abilities, as competences that might even belong to an entity other than a specially and mysteriously endowed individual.

The idea of genius – of truly exceptional mental abilities – conceived in secular terms or as an inexplicable gift from above – remained alive and well, but it became both possible and, in some cases, prudent, for people who had done remarkably innovative things and who had thought remarkably new thoughts to disdain the designation of genius and to deny that they possessed unique intellectual endowments. You should understand that nothing special was going on.

In the same year that Charles Darwin’s On the Origin of Species (1859) was published, a book called Self-Help by the Scottish author Samuel Smiles sold far more copies. Self-Help was a kind of entrepreneur’s guide to success through hard work, and Smiles made clear what he thought about the relative significance of genius versus disciplined application in making new knowledge. There is such a thing as genius, Smiles wrote, but its role has been systematically exaggerated: ‘fortune is usually on the side of the industrious’; what’s needed is ‘common sense, attention, application, and perseverance’.

The Victorian novelist Anthony Trollope kept genius at arm’s length by telling us that fluent novel-writing was little more than a matter of 250 words per quarter-hour, adding up to 2,500 words before breakfast, every day. And when Darwin himself was asked whether he possessed any special talents, he replied that he had none, except that he was very methodical. ‘I have,’ he said, ‘a fair share of invention, and of common sense or judgment, such as every fairly successful lawyer or doctor must have, but not, I believe, in any higher degree.’

These sorts of democratic sentiment were fast moving towards the norm. In 1854, the French biologist Louis Pasteur deflated the idea of scientific originality as a special gift: ‘Fortune favours the prepared mind.’ Be in the right place, with the right training, and you too can have important new ideas. Around 1903, the American inventor Thomas Edison gave us the still-popular formula that genius was ‘1 per cent inspiration, 99 per cent perspiration’, and the most celebrated scientific thinker of the 20th century – Albert Einstein – thought it intellectually and morally wrong to attribute mysterious gifts to people like him: ‘It strikes me as unfair, and even in bad taste, to select a few [individual personalities] for boundless admiration, attributing superhuman powers of mind and character to them.’

By the early 20th century, original work in the sciences was, in any case, being moved out of its once-religious Ivory Tower cloisters and entering the world of commerce, and this too encouraged demotic sentiments about pertinent intellectual capacities. First, the great German – and then the British and American – chemical, pharmaceutical and electrical companies were investing in both applied and basic research, hiring large numbers of academically trained scientists, with the idea that innovation was key to commercial success and that science properly belonged in commercial organisations. The old way to profits was monopolistic control; the new way was said to be constant innovation.

The wider culture at the time accounted the combination of professors and profits an odd one – academic eccentricity and demands for autonomy might rub up against corporate norms – and much reflective and practical thought went into arranging the circumstances that would allow companies to secure innovative people, permit them to do innovative things, and enhance their innovative output while, at the same time, keeping their attention on the right sorts of innovations, those that might yield corporate profit.

In such settings, the relevant category was still not creativity but a loosely connected set of competences, sometimes designated as originality, sometimes as productivity, more often not specially dwelt on or defined. Whatever those capacities were, they lay behind the outcomes for which scientific workers were employed. And employers tended to infer the competences from the concrete outcomes. There was, however, one notion of workers’ capacities from which corporate managers wished to dissociate themselves – and that was genius. Different firms had different sensibilities about scientific workers’ competences, but one response emerging from the new research labs of companies such as General Electric and Eastman Kodak was that creative and productive work didn’t have to do with hiring awkward geniuses but with finding the organisational forms that allowed people of ordinary gifts to achieve extraordinary things.

In 1920, a reflective director of industrial research at Eastman Kodak acknowledged the reality and value of genius, though he doubted that any company could expect to secure an adequate supply of such exceptional people. No matter: scientific workers who were well-trained and well-motivated could make valuable contributions even though they were ‘entirely untouched by anything that might be considered as the fire of genius’. At mid-century, corporate and bureaucratic employers varied in their opinions about whether the organisational difficulties attending genius were worth putting up with; some insisted that they were; others thought that the disruption caused by genius was too big a price to pay; and still others reckoned that a properly organised team of people of average abilities might constitute ‘a very good substitute for genius’.

Organisations should be designed to induce scientists from different disciplines to focus on a common project; to keep them talking to each other while maintaining ties with their home academic disciplines; and to get them to concentrate on commercially relevant projects while permitting enough freedom to ‘stare out the window’ and to think ‘blue sky’ thoughts. If you want profits, then – it was widely conceded – one price you pay is a significant amount of intellectual freedom, allowing the scientific workers to do just what they wanted to do, at least some of the time. The one-day-a-week-for-free-thought notion is not the recent invention of Google; it goes back practically forever in industrial research labs, and its justification was always hard-headed.

After the war, the Manhattan Project was often celebrated as a shining example of what effective organisation could achieve. True, many of the scientists building the bomb were regarded as geniuses, but many were not, and huge amounts of design and testing work and isotope manufacture were done by legions of scientists and engineers with ordinary qualifications. J Robert Oppenheimer was acclaimed for his administrative genius as much as for his scientific brilliance, though much of the organisational structure at Los Alamos and at isotope-producing sites was adapted from such companies as Westinghouse Electric. The Manhattan Project was proof that you could, after all, ‘herd cats’, organise geniuses and achieve things that were new and consequential – things that no lone genius or random aggregate of geniuses could have done.

The atomic bomb marked the end of the Second World War and the beginning of the Cold War. The creativity of Manhattan Project scientists and engineers has been abundantly celebrated by later commentators, but not so much in the immediate aftermath: the word ‘creativity’ doesn’t appear in the official Smyth Report (1945) on the Project. And the only invocation of the notion of genius is deflationary and morally exculpatory: ‘This weapon has been created not by the devilish inspiration of some warped genius but by the arduous labour of thousands of normal men and women.’ But the new atomic world was just the institutional and cultural environment in which creativity emerged, flourished and was variously interpreted.

The military was a key actor in creativity’s Cold War history. That helps to make it a particularly American history, though there was uptake in other ‘free world’ settings. The American national requirement for ever-growing ‘stockpiles’ of scientific talent was clear in the earliest stages of superpower conflict, and it became urgent after Sputnik. ‘In the presence of the Russian threat,’ a psychologist wrote:

‘creativity’ could no longer be left to the chance occurrence of genius; neither could it be left in the realm of the wholly mysterious and the untouchable. Men had to be able to do something about it; creativity had to be a property in many men; it had to be something identifiable; it had to be subject to efforts to gain more of it.

There were proposals for scientific manpower boards to arrange continuing supplies of scientific personnel and to organise them in a way that enhanced ‘creative work’. Quantity was important, and the flow of scientific personnel depended in part on identifying populations of young people with the potential to become productive technical workers. Quality was very important too: among people with the right intellectual capacities, it would be useful to determine which of them might become ‘creative’. Could that psychological capacity be identified, broken out from other mental traits, measured?

It was this capacity that was increasingly called creativity, and there were many consumers for a stabilised and reliable characterisation of creativity and for means of assessing it. The bits of government – both military and civilian – concerned with the supply of technical personnel wanted less chancy, more dependable ways of identifying all sorts of talent; the military wanted techniques for spotting officers capable of initiative, improvisation and the ability to imagine new forms of warfare – in the American military strategist Herman Kahn’s Kennedy-era encouragement to contemplate fighting and surviving a thermonuclear war: to ‘think the unthinkable’. Businesses and governmental bureaucracies wanted the ability to spot adaptable managers; educational institutions wanted to select imaginative teachers; the new National Science Foundation (NSF) wanted better ways of deciding which grant applicants were most likely to produce significant breakthroughs. And, of course, institutions concerned with the arts and humanities wanted something similar, though these less-practically oriented concerns were not as significant in creativity’s Cold War history as were the making of war, profits and technical knowledge.

The expert human-science practices of defining and measuring mental abilities and supplying the results to institutional clients were, of course, well developed before the war. But, in the case of creativity, new military and corporate demand stimulated new academic supply, and this new self-fertilising academic supply encouraged yet more demand. In 1950, a leading psychologist lamented that only a tiny proportion of the professional literature was then concerned with creativity; within the decade, a self-consciously styled and elaborately supported ‘creativity movement’ developed. Bibliographies were produced giving the field a distinct identity; conferences were held; journals were founded; influential seminars on ‘creative engineering’ were held at MIT, Stanford and in commercial corporations, asking what creativity is, why it’s important, what factors influence it, and how it ought to be deployed; and the personnel divisions of all branches of the US military were intimately involved in Cold War creativity research.

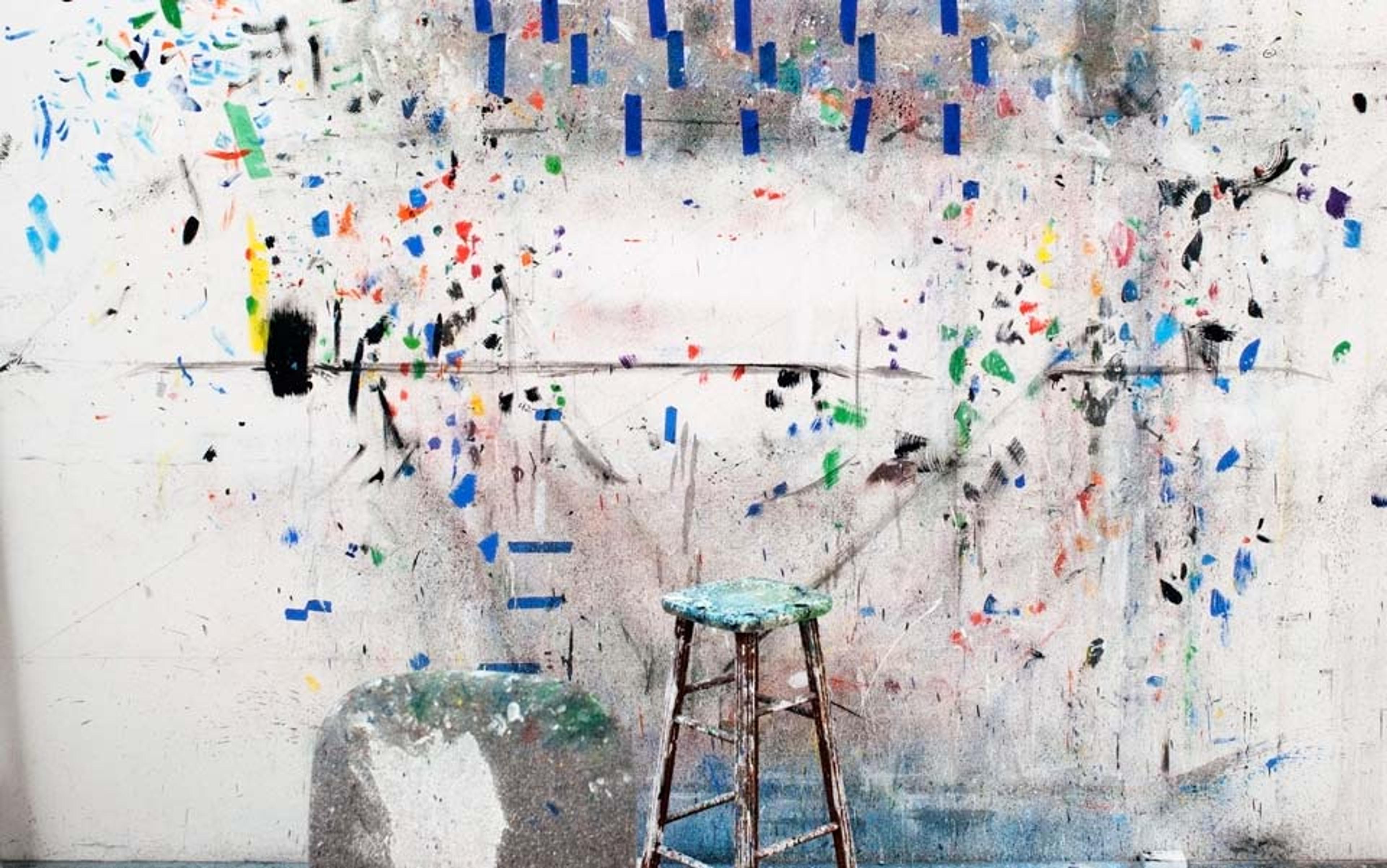

Definitions of creativity were offered; tests were devised; testing practices were institutionalised in the processes of educating, recruiting, selecting, promoting and rewarding. Creativity increasingly became the specific psychological capacity that creativity tests tested. There was never overwhelming consensus about whether particular definitions were the right ones or about whether particular tests reliably identified the desired capacity, but sentiment settled around a substantive link between creativity and the notion of divergent thinking. Individuals were said to have creativity if they could branch out, imagine a range of possible answers to a problem and diverge from stable and accepted wisdom, while convergent thinking moved fluently towards ‘the one right answer’. Asked, for example, how many uses there are for a chair, the divergent thinker could come up with very many; the convergent thinker said only that you sat on it. Convergent and divergent thinking stood in opposition, just as conformity was opposed to creativity.

There was an essential tension between the capacities that made for group solidarity and those that disrupted collective ways of knowing, judging and doing. And much of that tension proceeded not just from practical ways of identifying mental abilities but from the moral and ideological fields in which creativity and conformity were embedded. For human science experts in the creativity movement and for their clients, conformity was not only bad for science, it was also morally bad; creativity was good for science, and it was also morally good.

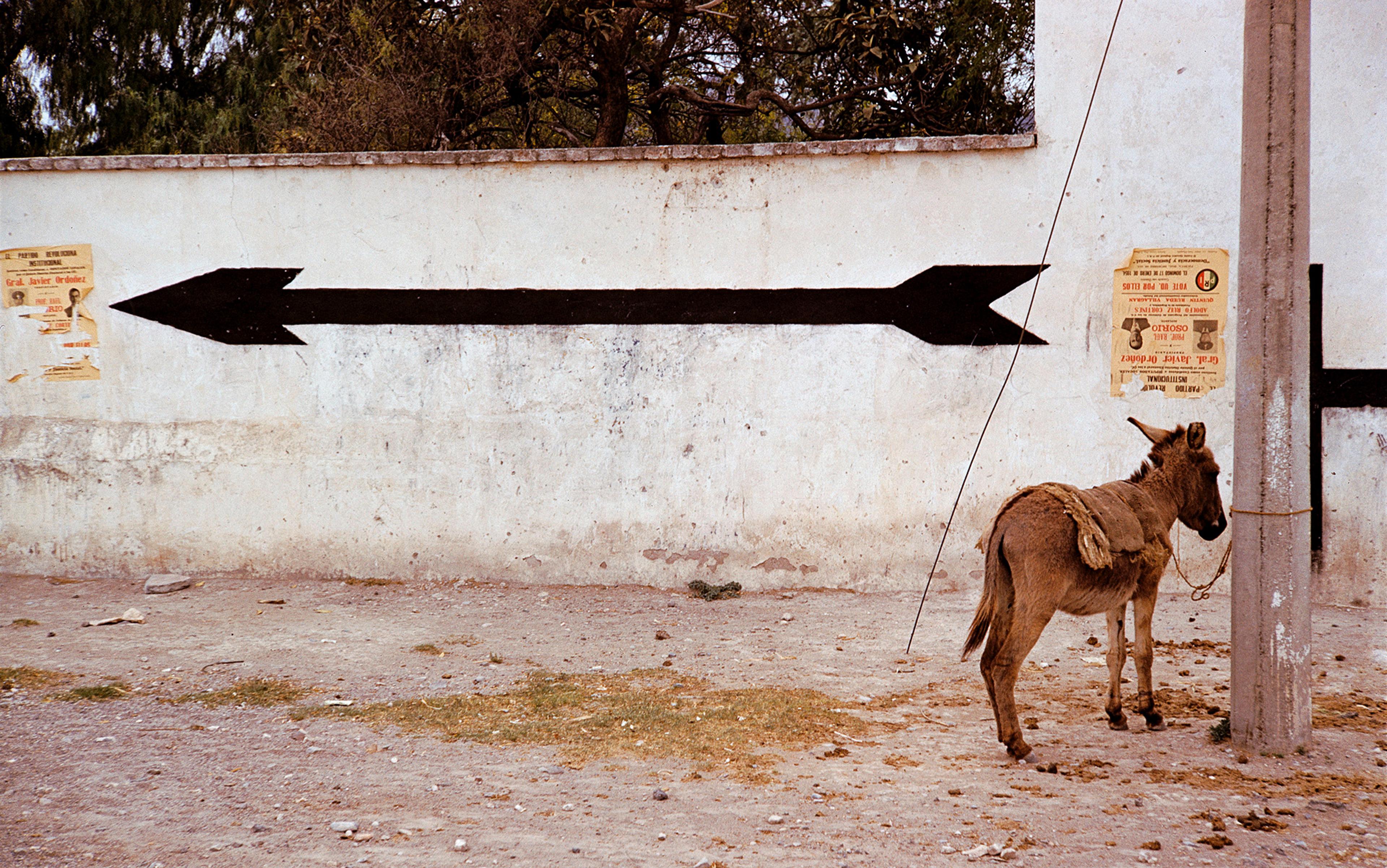

The historian Jamie Cohen-Cole has described the Cold War conjuncture of moral and instrumental sensibilities surrounding the supposed conflict between creativity and conformity. Creativity was valued for its practical outcomes – in science, technology, military and diplomatic strategy, advertising, business and much else – and it was integral to conceptions of what American citizens were, why they were free, why they were not the dupes of authoritarian dogma: why, in short, they were resistant to communism and able to maintain their authentic individuality. Creativity was a capacity that belonged, and should be encouraged to belong, he argued, to ‘a form of the exemplary self that would inoculate America against the dangers of mass society’. There were Cold War appreciations of creativity arising from specific forms of social interaction, but the opposition with conformity indexed creativity as a capacity belonging to the free-acting individual. The celebration of creativity was here an aspect of the celebration of individualism. The good society was a creative society, and open-mindedness had as much moral and political value as it promised cash-value. There was no choice but to embrace creativity and push back against conformity. To understand the stark realities of ‘the atomic age’ – wrote the psychologist Carl Rogers in 1954 – is to understand that ‘international annihilation will be the price we pay for a lack of creativity’.

At the height of the Cold War, the upward curve of US expert enthusiasm for creativity met an ascending curve of concern that creativity was being squashed by social forms increasingly entrenched in US society. The Organization Man (1956) by the Fortune journalist and urbanist William H Whyte was a bestseller, sparking anxiety that business intentions to manage creativity were self-defeating, even a thin hypocritical veneer laid over crushing corporate conformity. Creativity was, in its nature, individual, eccentric and antagonistic to attempts to plan or organise it. If you seriously attempted to manage creative people, you might get the appearance of creativity but not its reality. President Dwight Eisenhower’s Farewell Address of 1961 warned against the dangers of the ‘military-industrial complex’, but it also fretted about the loss of ‘intellectual curiosity’ attendant on the rise of collectivised science, done on government contract or sponsored by industry.

From 1955 to 1971, the NSF sponsored a series of conferences on scientific creativity at the University of Utah, also partly funded by the Air Force. They were presided over by leading psychologists in the creativity movement, and they were attended by representatives of US education, government, the military and business (including General Electric, Boeing, Dow Chemical Company, Aerojet-General and the Esso Research Center). Everyone who was anyone in creativity research and its applications was there. The third of these Utah conferences in June 1959 was attended by a young Harvard physicist-turned-historian, Thomas S Kuhn. His contribution to proceedings began with an expression of bewilderment: he supposed that he was also concerned with creativity but, listening to the psychologists over the course of the meeting, he wondered why he had been invited, unsure about ‘how much we do, or even should, have to say to each other’.

Kuhn expressed deep scepticism about the foundational identification between creativity and divergent thinking. He noted the psychologists’ repeated depictions of the scientist free of preconceptions, continually rejecting tradition and continually embracing novelty; he wondered whether ‘flexibility and open-mindedness’ had not been too much stressed as requisites for basic scientific research; and he suggested that ‘something like “convergent thinking”’ was better talked about as essential to scientific advance than as an obstacle. What Kuhn was offering, and what he delivered a few years later as The Structure of Scientific Revolutions (1962), was something that might be described as a theory of scientific creativity without the psychologists’ category of creativity.

‘Normal science’ – science under a paradigm – was a form of puzzle-solving, its practitioners embracing tradition, accepting communal ‘dogma’, trying to extend the reach of the paradigm and to refine the fit between paradigm and evidence, aiming to converge on the unique and stable correct answer. Revolutionary change might involve individual acts of imagination, but the condition for the crises that provoked revolution was a community of scientists doing whatever they could to reduce anomaly, to fit pegs into holes until it was realised that the pegs might be square and the holes round. That is to say, the psychological and sociological forces of conformity were the necessary conditions for passages of science that came to be seen as creative. Tellingly, the word creativity never appears in Structure. You might, following Kuhn, say that the institution of science was creative, but you couldn’t say that this flowed from scientists’ divergent-thinking creativity.

Is creativity’s history progressive? The word itself, and the expert practices for identifying it, became prominent in the Cold War but, if you’re not bothered about that, you might say that related categories – being creative, a creative person – transitioned over time from a sacred power to a secular capacity, from a sacred to a secular value, from categories belonging to the vernacular to controlled ownership by academic experts, from something no one pays to find out about to elaborately funded expert practices. From the 1950s to the present, creativity has been established as something that everybody wants: in 1959, the director of scientific research at General Electric began an address to government officials with the bland assertion: ‘I think we can agree at once that we are all in favour of creativity,’ and he was right. Creativity had become an institutional imperative, a value that was the source of many other values.

That linear story is almost, but not quite, right. Kuhn’s case for tradition and dogma was a reactionary move, and a condition for its being even thinkable was reaction to mid-century celebrations of individualism, free-thinking and market-place models of progressive enquiry. But Kuhn’s small-c conservatism was widely misunderstood and confused with iconoclasm, and some radical ‘Kuhnians’ oddly took his book as a licence for political revolution.

About the same time, there was a more consequential response to creativity-enthusiasm emerging from the heart of forward-facing capitalism. The Harvard Business Review published an essay by Theodore Levitt, a marketing expert at the Harvard Business School, called ‘Creativity Is Not Enough’ (1963). Levitt didn’t doubt that there was an individual capacity called creativity, or that this capacity might be a psychological source of new ideas. Creativity was not, however, the royal road to good business outcomes, and conformity was being seriously undervalued. New ideas were not in short supply; executives were drowning in them: ‘there is really very little shortage of creativity and of creative people in American business,’ he wrote.

Many businesspeople didn’t dwell on distinctions between creativity and innovation, but Levitt did: creativity was having a new idea; innovation was the realisation of an idea in a specific outcome that the organisation valued; and it was innovation that really mattered. Creative people tended to be irresponsible, detached from the concrete processes of achieving organisational ends: ‘what some people call conformity in business is less related to the lack of abstract creativity than to the lack of responsible action.’ Levitt’s views were widely noted, but in the 1960s he was swimming against the tide. Creativity had been incorporated.

From that time to the present, you can say that creativity rose and rose. Everyone still wants it, perhaps more than ever; politicians, executives, educators, urban theorists and economists see it as the engine of economic progress and look for ways to have more of it. The creativity tests are still around, most continuing to identify creativity with divergent thinking; they have gone through successive editions; and there is a greater variety of them than there used to be. There are academic and professional organisations devoted to the study and promotion of creativity; there are encyclopaedias and handbooks surveying creativity research; and there are endless paper and online guides to creativity – or how, at least, to convince others that you have it.

But this proliferating success has tended to erode creativity’s stable identity. On the one hand, creativity has become so invested with value that it has become impossible to police its meaning and the practices that supposedly identify and encourage it. Many people and organisations utterly committed to producing original thoughts and things nevertheless despair of creativity-talk and supposed creativity-expertise. They also say that undue obsession with the idea of creativity gets in the way of real creativity, and here the joke asks: ‘What’s the opposite of creativity?’ and the response is ‘Creativity consultants.’

Yet the expert practices of identifying people, social forms and techniques that produce the new and the useful are integral to the business world and its affiliates. There are formally programmed brainstorming sessions, brainwriting protocols to get participants on the same page, proprietary creative problem-solving routines, creative sessions, moodboards and storyboards, collages, group doodles, context- and empathy-mapping as visual representations of group thinking, lateral thinking exercises, and on and on. The production of the new and useful is here treated as something that can be elicited by expert-designed practices. Guides to these techniques now rarely treat creativity as a capacity belonging to an individual, and there are few if any mentions of creativity tests.

In the related worlds of high-tech and technoscience, Google (collaborating with Nature magazine and a media consultancy) sponsors an annual interdisciplinary conference called SciFoo Camp: it’s a freeform, no-agenda occasion for several hundred invited scientists, techies, artists, businesspeople and humanists – meant only to yield new, interesting and consequential ideas: Davos for geeks and fellow-travellers. There’s a YouTube video explaining SciFoo and very many online accounts of the thing.

So SciFoo is a star-dusted gathering of creative people, intended to elicit creative thoughts. I might well have missed something, but when I Googled ‘SciFoo’ and searched the first several pages of results, I didn’t find a single mention of creativity. When Google – and other high-tech and consulting businesses – hire people in nominally creative capacities, typical interview questions aim to assess personality – ‘What would you pick as your walk-up song?’ – or specific problem-solving abilities and dispositions – ‘How many golf balls can fit into a school bus?’ or ‘How would you solve the homelessness crisis in San Francisco?’ They want to find out something concrete about you and how you go about things, not to establish the degree to which you possess a measurable psychological quality.

In educational settings, creativity testing continues to flourish – where it is used to assess and select both students and teachers – but it is scarcely visible in some of late modernity’s most innovative settings. Producing new and useful things is not less important than it once was, but the identity of the capacity called creativity has been affected by its normalisation. A standard criticism of much creativity research, testing and theorising is that whatever should be meant by the notion is in fact task-specific, multiple not single. Just as critics say that there can be no general theory of intelligence, so you shouldn’t think of creativity apart from specific tasks, settings, motivations and individuals’ physiological states.

Creativity was a moment in the history of academic psychology. As an expert-defined category, creativity was also summoned into existence during the Cold War, together with theories of its identity, distinctions between creativity and seemingly related mental capacities, and tests for assessing it. But creativity also belongs to the history of institutions and organisations that were the clients for academic psychology – the military, business, the civil service and educational establishments. Creativity was mobilised too in the moral and political conflict between supposedly individualistic societies and their collectivist opponents, and it was enlisted to talk about, defend and pour value over US conceptions of the free-acting individual. This served to surround creativity with an aura, a luminous glow of ideology.

The Cold War ended, but the rise of creativity has continued. Many of its expert practices have been folded into the everyday life of organisations committed to producing useful novelty, most notably high-tech business, the management consultancies that seek to serve and advise innovatory business, and other institutions that admire high-tech business and aim to imitate its ways of working. That normalisation and integration have made for a loss of expert control. Many techniques other than defining and testing have been put in place that are intended to encourage making the new and useful, and the specific language of creativity has tended to subside into background buzz just as new-and-useful-making has become a secular religion. Should this continue, one can imagine a future of creativity without ‘creativity’.