A schism is emerging in the scientific enterprise. On the one side is the human mind, the source of every story, theory and explanation that our species holds dear. On the other stand the machines, whose algorithms possess astonishing predictive power but whose inner workings remain radically opaque to human observers. As we humans strive to understand the fundamental nature of the world, our machines churn out measurable, practical predictions that seem to extend beyond the limits of thought. While understanding might satisfy our curiosity, with its narratives about cause and effect, prediction satisfies our desires, mapping these mechanisms on to reality. We now face a choice about which kind of knowledge matters more – as well as the question of whether one stands in the way of scientific progress.

Until recently, understanding and prediction were allies against ignorance. Francis Bacon was among the first to bring them together in the early days of the scientific revolution, when he argued that scientists should be out and about in the world, tinkering with their instruments. This approach, he said, would avoid the painful stasis and circularity that characterised scholastic attempts to get to grips with reality. In his Novum Organum (1620), he wrote:

Our new method of discovering the sciences is such as to leave little to the acuteness and strength of wit, and indeed rather to level wit and intellect. For as in the drawing of a straight line, or accurate circle by the hand, much depends on its steadiness and practice, but if a ruler or compass be employed there is little occasion for either; so it is with our method.

Bacon proposed – perfectly reasonably – that human perception and reason should be augmented by tools, and that by these means they would escape the labyrinth of reflection.

Isaac Newton enthusiastically adopted Bacon’s empirical philosophy. He spent a career developing tools: physical lenses and telescopes, as well as mental aids and mathematical descriptions (known as formalisms), all of which accelerated the pace of scientific discovery. But hidden away in this growing dependence on instruments were the seeds of a disconcerting divergence: between what the human mind could discern about the world’s underlying mechanisms, and what our tools were capable of measuring and modelling.

Today, this gap threatens to blow the whole scientific project wide open. We appear to have reached a limit at which understanding and prediction – mechanisms and models – are falling out of alignment. In Bacon and Newton’s era, world accounts that were tractable to a human mind, and predictions that could be tested, were joined in a virtuous circle. Compelling theories, backed by real-world observations, have advanced humanity’s understanding of everything from celestial mechanics to electromagnetism and Mendelian genetics. Scientists have grown accustomed to intuitive understandings expressed in terms of dynamical rules and laws – such as Charles Darwin’s theory of natural selection, or Gregor Mendel’s principle of independent assortment, which describes how an organism’s genome is propagated via the separation and recombination of its parents’ chromosomes.

But in an age of ‘big data’, the link between understanding and prediction no longer holds true. Modern science has made startling progress in explaining the low-hanging fruit of atoms, light and forces. We are now trying to come to terms with the more complex world – from cells to tissues, brains to cognitive biases, markets to climates. Novel algorithms allow us to forecast some features of the behaviour of these adaptive systems that learn and evolve, while instruments gather unprecedented amounts of information about them. And while these statistical models and predictions often get things right, it’s nearly impossible for us to reconstruct how they did it. Instrumental intelligence, typically a machine intelligence, is not only resistant but sometimes actively hostile to reason. Studies of genomic data, for example, can capture hundreds of parameters – patient, cell-type, condition, gene, gene location and more – and link the origin of diseases to thousands of potentially important factors. But these ‘high-dimensional’ data-sets and the predictions they provide defy our best ability to interpret them.

If we could predict human behaviour with Newtonian and quantum models, we would. But we can’t. It’s this honest confrontation between science and complex reality that produces the schism. Some critics claim that it’s our own stubborn anthropocentrism – an insistence that our tools yield to our intelligence – that’s impeding the advancement of science. If only we’d quit worrying about placating human minds, they say, we could use machines to accelerate our mastery over matter. A computer simulation of intelligence need not reflect the structure of the nervous system, any more than a telescope reflects the anatomy of an eye. Indeed, the radio telescope provides a compelling example of how a radically novel and non-optical mechanism can exceed a purely optical function: because radio waves pass through the dust of the Milky Way, radio telescopes can detect galaxies that lie hidden from optical view behind our own.

The great divergence between understanding and prediction echoes Baruch Spinoza’s insight about history: ‘Schisms do not originate in a love of truth … but rather in an inordinate desire for supremacy.’ The battle ahead will decide whether brains or algorithms are sovereign in the kingdom of science.

Paradoxes and their perceptual cousins, illusions, offer two intriguing examples of the tangled relationship between prediction and understanding. Both describe situations where we thought we understood something, only to be confronted with anomalies. Understanding is less well-understood than it seems.

Some of the best-known visual illusions ‘flip’ between two different interpretations of the same object – such as the face-vase, the duck-rabbit and the Necker cube (a wireframe cube that’s perceived in one of two orientations, with either of two opposite faces nearest to the viewer). We know that objects in real life don’t really switch on a dime like this, and yet that’s what our senses are telling us. Ludwig Wittgenstein, who was obsessed with the duck-rabbit illusion, suggested that we see an object in the light of a prior interpretation, rather than coming to understand it only after it has been seen. What we see is what we expect to see.

The cognitive scientist Richard Gregory, in his wonderful book Seeing Through Illusions (2009), calls them ‘strange phenomena of perception that challenge our sense of reality’. He explains that they arise because our understanding is informed by the predictions of multiple different rule systems, applied out of context. In the Necker cube, each interpretation is consistent with the perceptual data in three-dimensional space. But the absence of depth cues means that we have no way of deciding which interpretation is correct. So we switch between the two predictions for lack of sufficient spatial understanding.

Paradoxes, like illusions, force intuition to collide with apparently basic facts about the world. Paradoxes are conclusions of valid arguments or observations that seem self-contradictory or logically untenable. They emerge frequently in the natural sciences – most notably in physics, in both its philosophical and scientific incarnations. The twin paradox, the Einstein-Podolsky-Rosen paradox and Schrödinger’s cat are all paradoxes that derive from the fundamental structure of relativity or quantum mechanics. And these are quite distinct from observational paradoxes such as the wave-particle duality observed in the double-slit experiment. Nevertheless, in both of these categories of paradox, human understanding based on everyday causal reasoning fails to align with the predicted or observed outcomes of experiments.


Even machines can suffer from paradoxes. ‘Simpson’s paradox’ describes the way that a trend appearing independently in several data-sets can disappear or even reverse when they’re combined – which means one data-set can be used to support competing conclusions. This occurs frequently in sport, where one player can outperform another in every individual season yet trail them when the seasons are combined, because of differences in the number of games played, times at bat and so on. There is also something known as an ‘accuracy paradox’, where a model seems to perform well for essentially circular reasons: when one class dominates the data, simply predicting that class yields high accuracy, so the apparent success is baked into the samples themselves. This lies behind numerous examples of algorithmic bias where people from under-represented groups, defined by race or gender, are frequently misclassified, because the training data used as a benchmark for accuracy comes from our own biased and imperfect world.
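Both effects are easy to reproduce with a few lines of arithmetic. The sketch below is illustrative only: the players ‘A’ and ‘B’, their hit and at-bat counts, and the 95-to-5 split of labels are invented numbers chosen to make each paradox visible, not data from any real season or study.

```python
from fractions import Fraction

# Simpson's paradox. Hypothetical (hits, at-bats) for two invented players.
seasons = {
    "season 1": {"A": (10, 50), "B": (45, 200)},
    "season 2": {"A": (150, 400), "B": (20, 50)},
}

def average(hits: int, at_bats: int) -> Fraction:
    """Batting average as an exact fraction, avoiding rounding surprises."""
    return Fraction(hits, at_bats)

def combined(player: str) -> Fraction:
    """Pool hits and at-bats across all seasons, then divide once."""
    hits = sum(season[player][0] for season in seasons.values())
    at_bats = sum(season[player][1] for season in seasons.values())
    return Fraction(hits, at_bats)

for name, season in seasons.items():
    a, b = average(*season["A"]), average(*season["B"])
    print(f"{name}: A={float(a):.3f} B={float(b):.3f}")  # B leads in each season
print(f"combined: A={float(combined('A')):.3f} B={float(combined('B')):.3f}")  # A leads overall

# Accuracy paradox. Hypothetical labels: 95 majority-class cases, 5 minority-class cases.
labels = [0] * 95 + [1] * 5
predictions = [0] * len(labels)  # a "model" that always predicts the majority class

accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)
minority_hits = [p == y for p, y in zip(predictions, labels) if y == 1]
minority_recall = sum(minority_hits) / len(minority_hits)
print(f"accuracy={accuracy:.2f} minority recall={minority_recall:.2f}")  # 0.95 vs 0.00
```

The reversal in the first half comes entirely from how each player’s at-bats are distributed: A does most of their batting in the second season, when both players hit better, so pooling pulls A’s overall average upwards. In the second half, a headline accuracy of 95 per cent hides the fact that every minority case is misclassified.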

Perhaps the most rigorous work on paradox was pursued by Kurt Gödel in his paper ‘On Formally Undecidable Propositions of Principia Mathematica and Related Systems’ (1931). Gödel showed that in any consistent formal system powerful enough to express arithmetic, there are statements that can be formulated in the system’s own terms yet can be neither proved nor refuted from its axioms. His proof turns on a self-referential sentence, closely related to the ancient liar paradox (‘this statement is false’), that in effect asserts its own unprovability, and it is this kind of self-reference that lies at the root of the experience of paradox. Gödel’s basic insight was that any system of rules has a natural domain of application – but when rules are applied to inputs that are not of the same structure which guided the rules’ development, then we can expect weirdness.

This is exactly what can happen in adversarial machine learning, where one algorithm is pitted against another. One network might be trained to recognise one set of objects, such as stop signs. Meanwhile, its opponent might make some malicious minor modifications to a fresh data-set – say, stop signs with a few pixels moved about – leading the first network to categorise these images as anything from right-turn signs to speed-limit signs. Such adversarial misclassifications look like extreme foolishness from a human point of view. But, as Gödel would appreciate, they might be perfectly natural errors from the perspective of the invisible rule-systems encoded in the neural network.
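The logic of such an attack can be sketched without a real neural network. The toy below swaps in a linear scorer with invented weights (a deliberate simplification, not a model of any actual sign classifier) and applies the fast gradient sign method, a standard technique for crafting adversarial inputs: nudge every feature by a small fixed amount in whichever direction lowers the score for the correct class. Because the gradient of a linear score with respect to its input is just the weight vector, that direction is simply the sign of each weight.

```python
# Toy adversarial example using the fast gradient sign method (FGSM).
# A linear scorer stands in for a neural network; the weights, bias and the
# eight-"pixel" image are hypothetical numbers chosen for illustration.
WEIGHTS = [0.5, -0.3, 0.8, -0.6, 0.4, 0.7, -0.2, 0.9]
BIAS = -0.7

def score(x: list[float]) -> float:
    """Linear score: positive means the model believes it sees a stop sign."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS

def classify(x: list[float]) -> str:
    return "stop sign" if score(x) > 0 else "something else"

def sign(v: float) -> int:
    return (v > 0) - (v < 0)

def fgsm(x: list[float], epsilon: float) -> list[float]:
    """Shift each feature by epsilon against the gradient of the score.
    For a linear model that gradient is WEIGHTS, so only its sign matters."""
    return [xi - epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]

image = [0.4, 0.3, 0.5, 0.4, 0.3, 0.4, 0.3, 0.4]
adversarial = fgsm(image, epsilon=0.1)

print(classify(image), round(score(image), 2))              # stop sign 0.27
print(classify(adversarial), round(score(adversarial), 2))  # something else -0.17
print(max(abs(a - b) for a, b in zip(adversarial, image)))  # no feature moved by more than about 0.1
```

Each feature moves by only 0.1, yet the label flips, because the small shifts all push the score in the same direction and their effects add up. In a real image with many thousands of pixels, the same accumulated push can be achieved with far smaller per-pixel changes, which is one reason adversarial images can look unchanged to a human eye.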

What paradox and illusion show us is that our abilities to predict and to understand depend on essential deficiencies of thought, and that the limits on understanding can be very different from the limits on prediction. In just the same way that prediction is fundamentally bounded by the sensitivity of measurement and the shortcomings of computation, understanding is both enhanced and diminished by the rules of inference.

The question of what we mean by ‘limits’ sheds light on why humans are drawn to all these machines and formalisms in the first place. The evolution of scientific culture, and of technology in its broadest sense, is a collection of means to break through the limits of cognition and language – limits that are Bacon’s bête noir in Novum Organum.

The relationship between understanding and prediction corresponds to a link between ontology (insights into the true nature of the world) and epistemology (the process of gaining knowledge about the world). Knowledge based on experiments can break through the barriers of our existing understanding and yield an appreciation for new and fundamental features of reality; in turn, those fundamental laws allow scientists to generate fresh predictions to test out in the world. When the branch of mathematics known as ‘set theory’ was shown to give rise to paradoxes, the subsequent development of something known as ‘category theory’ came to the rescue, partly overcoming these limitations. When the Ptolemaic model of the solar system generated incorrect astronomical expectations, it gave way to the heliocentric models of Copernicus and Kepler; when Newtonian mechanics in turn failed to account for anomalies such as the precession of Mercury’s orbit, relativity was introduced to capture the behaviour of bodies moving at great speeds or in strong gravitational fields. In this way, the ontological basis of a theory became the basis of new and better predictions; ontology begat epistemology.

But once scientific progress reaches a certain limit, ontology and epistemology appear to become enemies. In quantum mechanics, the uncertainty principle states that a particle’s momentum and position cannot both be known with arbitrary precision. It both describes a constraint on making perfectly accurate measurements (epistemology) and seems to point to a mechanism that makes position and momentum essentially inseparable at the quantum scale (ontology). In practice, quantum mechanics involves applying the theory effectively in order to predict an outcome – not intuiting the mechanism that produces the result. In other words, ontology is absorbed by epistemology.
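In its standard textbook form, the principle bounds the product of the statistical spreads (standard deviations) of a particle’s position $x$ and momentum $p$, where $\hbar$ is the reduced Planck constant:

$$
\sigma_x \, \sigma_p \;\ge\; \frac{\hbar}{2}
$$

Squeezing one spread forces the other to grow, which is why the two quantities cannot both be pinned down at once.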

By contrast, research into the foundations of quantum mechanics seeks to explode this limit and explain why quantum theory is so predictive. The ‘many worlds’ interpretation, for example, abolishes quantum spookiness in favour of the incredible proposition that every observation engenders a new universe. The excitement of working at this limit lies in the way intellectual enquiry scintillates between the appearance of prediction and of understanding. It’s not a trivial task to distinguish between the epistemological problem and the ontological one here; they are closely related, even ‘coupled’ or ‘entangled’.

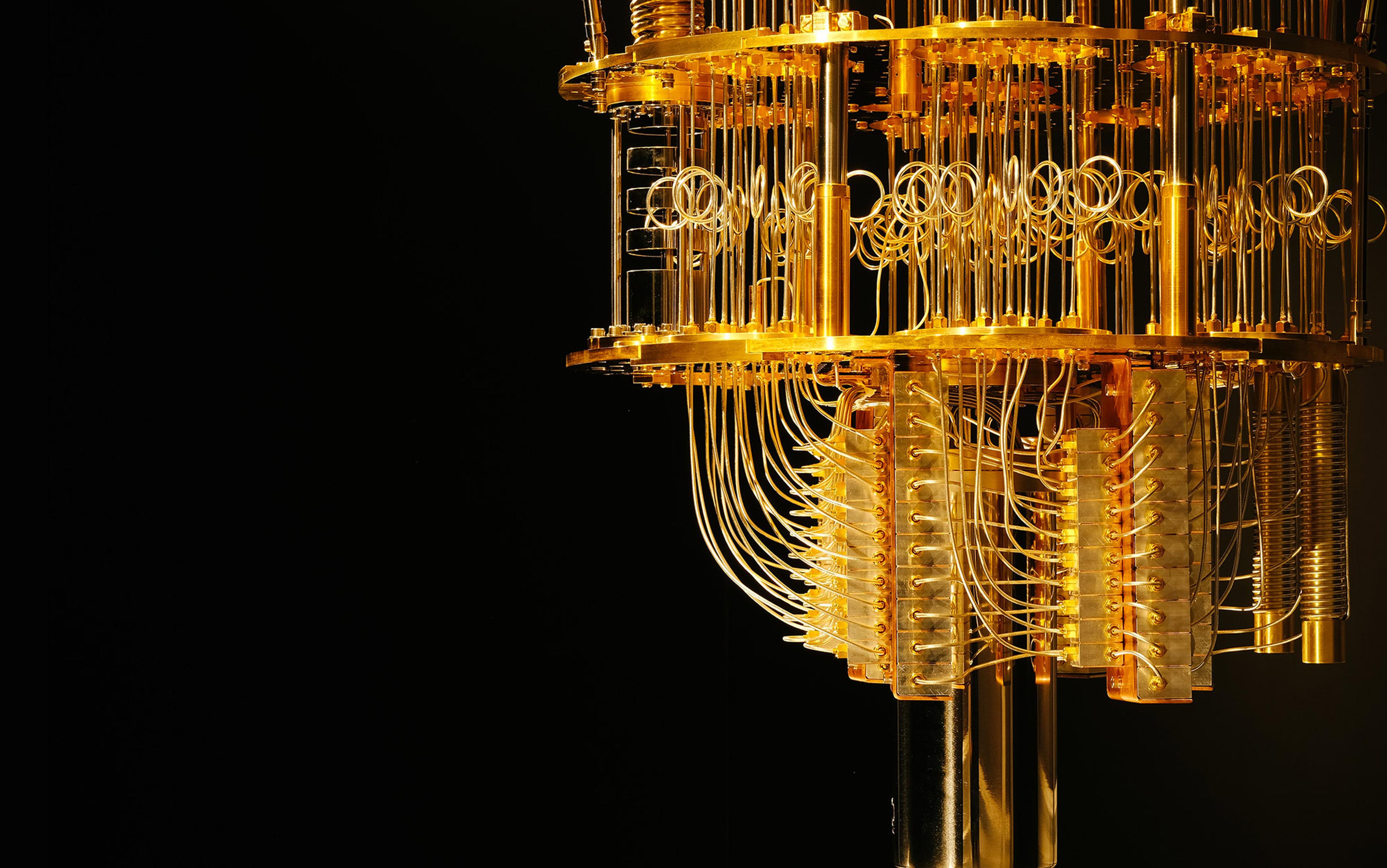

One ruthless way to make the problem go away is simply to declare that, at suitable limits, ontology vanishes – the precise sleight-of-hand performed by the Copenhagen school of quantum mechanics, whose passive-aggressive dictum was: ‘Shut up and calculate!’ (in David Mermin’s notorious coinage). Stop chattering about possible explanations for quantum spookiness, in other words; the search for fundamental mechanisms is a waste of time. Nowadays, though, it’s the modern computer, more than the quantum theorist, that’s devoid of any inclination to speak, and has no desire to do anything but quietly and inscrutably calculate.


Few scientists would settle for such a measly intellectual deal. In science, it’s close to a truism to say that a good theory is an elegant one – it encodes a simple (or ‘parsimonious’) explanation that can be intuitively grasped and communicated. A good theory, on this view of things, allows a person to hold a concept in totality in the mind’s eye, to improvise a miniature internal universe. In some domains, notably mathematical physics, the miniature universe of the human account and the large universe of reality converge. Both apples and planets follow trajectories described by the same equations of motion. This happy coincidence can be variously described as ‘consonance’, ‘conformability’ or the existence of ‘scale-invariant laws’.

The most striking of these conformable theories is the observation that the strength of certain forces is inversely proportional to the square of the distance from the source – which holds both for gravity at large scales and for the electrostatic force at small ones. As the late physicist Murray Gell-Mann put it:

As we peel the skins of the onion, penetrating to deeper and deeper levels of the structure of the elementary particle system, mathematics with which we become familiar because of its utility at one level suggests new mathematics, some of which may be applicable at the next level down – or to another phenomenon at the same level. Sometimes even the old mathematics is sufficient.
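The inverse-square parallel described just before Gell-Mann’s remark can be written out explicitly. Newton’s law of gravitation and Coulomb’s law of electrostatics share the same dependence on the separation $r$ between two bodies, with masses $m_1, m_2$ and the gravitational constant $G$ in one case, and charges $q_1, q_2$ and the Coulomb constant $k_e$ in the other:

$$
F_{\text{grav}} = G\,\frac{m_1 m_2}{r^2}, \qquad F_{\text{elec}} = k_e\,\frac{q_1 q_2}{r^2}
$$

In both cases, doubling the distance cuts the force to a quarter of its strength.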

But sometimes our own enigmatic intuition becomes a block to practical progress. Efforts to get computers to classify, translate and learn natural language illustrate the perils of seeking intuitive accounts of scientific phenomena. The allure of both HAL and Robby the Robot, from the films 2001: A Space Odyssey (1968) and Forbidden Planet (1956) respectively, was their ability to understand human language and respond with an appropriate level of ominous sarcasm, intelligible to their human interlocutors. But the evolution of machine translation and speech recognition ended up looking nothing like this. The most successful approaches to early speech recognition in the 1980s and ’90s employed mathematical models based on the structure of human speech, focusing on word categories and the higher-order syntactic and semantic relations of the sentence. Then in the late 2000s, deep neural networks appeared in earnest. These algorithms ignored much prior linguistic knowledge, instead allowing words to emerge spontaneously via training at a purely acoustic level. Understanding speech was not the objective; predicting the correct transcription or translation was. They went on to become devastatingly effective. Once the community of researchers had resigned itself to algorithmic opacity, the pragmatic solution became all too clear.

Neural networks capture the bind that contemporary science confronts. They show how complicated models that include little or no structured data about the systems they represent can still outperform theories based on decades of research and analysis. In this respect, the insights from speech recognition mirror those gleaned from training computers to beat humans at chess and Go: the representations and rules of thumb favoured by machines need not reflect the representations and rules of thumb favoured by the human brain. Solving chess solves chess, not thought.

But does the way we’ve surmounted the limits of human performance in chess and speech recognition reveal what it might mean to overcome limits in our prediction of physical reality – that is, to make progress in science? And can it tell us anything meaningful about whether the human need for understanding is hobbling the success of science?

The history of philosophy offers some routes out of the current scientific impasse. Plato was one of the first to address confusions of understanding, in the Theaetetus. The dialogue is devoted to the question of episteme, or knowledge, and weighs three candidate definitions: knowledge as perception, as true judgment, and as true judgment with an added explanation. Along the way, the ornery Socrates adduces geometry, arithmetic and astronomy as examples of the last category.

Theories of understanding were developed further by Immanuel Kant in his Critique of Pure Reason (1781). Kant makes a distinction between the worlds of matter and of mental representation – reality as ontology versus mental knowledge as epistemology. For Kant, there is only a representation of the world in the mind, and the material world can be known only through these representations. This means our so-called understanding is nothing more than an approximate and imperfect representation of empirical reality, whose Platonic existence (or perhaps non-existence) is the ultimate limit of knowledge. Kant’s argument doesn’t really help us distinguish understanding from knowledge; rather, it shifts understanding from a belief that can be defended to an internal representation that can’t be verified.

The philosopher John Searle explored the knowledge/understanding distinction in his influential book Minds, Brains and Science (1984), where he set out to challenge the Pollyannas of machine intelligence. Searle asks us to imagine someone locked in a room with no understanding of the Chinese language, but well-equipped with a rulebook, written in English, for manipulating Chinese characters. When questions in Chinese are passed into the room, the person follows the rules to assemble replies in Chinese that are indistinguishable from those of a native speaker. When one considers this thought experiment, it’s clear that one needn’t understand a language in order to process it convincingly – it’s only necessary that the output achieve fidelity.

The Chinese room is a metaphorical means of analysing the limitations of algorithms, such as those that can list elements in a digital scene or translate sentences on a webpage. In both cases, the correct solutions are produced without any ‘understanding’ of the content. So what is the nature of this missing understanding that Searle is searching for?


There are many Baconian implements that can stand in place of Searle’s room – such as slide rules to solve large multiplication problems, geometric constructions with compasses and protractors to prove theorems, or the integration rules of calculus to evaluate large or even infinite sums. These techniques are effective precisely because they obviate the need for understanding. It’s enough to move accurately through the prescribed steps to guarantee a desired outcome. To understand, in each of these cases, is to explain the logic and the appropriate use of logarithms, the kinematic-geometric properties of a protractor or compass, or the numerical basis for taking limits of sums of rectangles to approximate areas. Thus, even in the practice of everyday mathematics, we experience the schism between understanding and prediction.
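Two of these implements can be mimicked in a few lines, which makes the point concrete: the procedures give correct answers whether or not whoever runs them grasps why. The sketch below is illustrative, and its function names are invented for the purpose. A slide rule multiplies by adding lengths proportional to logarithms, since log(ab) = log a + log b; the area under a curve can be approximated by summing thin rectangles, and the approximation improves as the rectangles narrow.

```python
import math
from typing import Callable

def slide_rule_multiply(a: float, b: float) -> float:
    """Multiply two positive numbers as a slide rule does:
    add their logarithms (lengths on the scales), then read off the antilog."""
    return 10 ** (math.log10(a) + math.log10(b))

def riemann_area(f: Callable[[float], float], lo: float, hi: float, n: int) -> float:
    """Approximate the area under f on [lo, hi] with n left-endpoint rectangles."""
    width = (hi - lo) / n
    return sum(f(lo + i * width) * width for i in range(n))

print(slide_rule_multiply(37, 52))  # close to 1924, up to floating-point rounding
for n in (10, 100, 10_000):
    # The area under x*x on [0, 1] is exactly 1/3; the sums creep towards it.
    print(n, riemann_area(lambda x: x * x, 0.0, 1.0, n))
```

Following either procedure requires no understanding; explaining why the first works means explaining logarithms, and explaining why the second converges means explaining limits.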

Understanding is the means by which we overcome a world of paradox and illusion, opening up the black box of knowledge to modification. Understanding is the elucidation of justifiable mistakes. Once we appreciate that a wireframe cube is interpreted as a solid in three dimensions, it’s clear why we see only one of its faces at the front at a time.

Data can be acquired without explanation and without understanding. The very definition of a bad education is to be drilled in facts, as in learning history by memorising dates and events by rote. But true understanding is the expectation that other human beings, or agents more generally, can explain to us how and why their methods work. We require some means of replicating an idea and of verifying its accuracy. This requirement extends to nonhuman devices that purport to be able to solve problems intelligently. Machines need to be able to give an account of what they’ve done, and why.

The requirement to explain is what links understanding to teaching and learning. ‘Teaching’ is the name we give to the effective communication of mechanisms that are causal (‘if you follow these rules, you will achieve long division’), while ‘learning’ is the acquisition of an intuition for the relationships between causes and their effects (‘this is why long division rules work’). The nature of understanding is the very ground for the reliable transmission and cultural accumulation of knowledge. And, by extension, it is also the basis of all long-term prediction.
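The distinction can be made concrete with long division itself. In the sketch below, a hypothetical illustration rather than anything drawn from a curriculum, the loop is the rule a pupil is taught to follow, while the assertion states the invariant that explains why the rule works: after each digit is brought down, the part of the dividend processed so far always equals the quotient so far times the divisor, plus a remainder smaller than the divisor.

```python
def long_division(dividend: int, divisor: int) -> tuple[int, int]:
    """Schoolbook long division of a non-negative integer by a positive one."""
    if dividend < 0 or divisor <= 0:
        raise ValueError("expects dividend >= 0 and divisor > 0")
    digits = str(dividend)
    quotient, remainder = 0, 0
    for k, ch in enumerate(digits):
        # The rule: bring down the next digit...
        remainder = remainder * 10 + int(ch)
        # ...then subtract the divisor as many times as it fits (at most 9 times).
        q = 0
        while remainder >= divisor:
            remainder -= divisor
            q += 1
        quotient = quotient * 10 + q
        # The reason: this invariant holds after every step, so it holds at the end.
        prefix = int(digits[: k + 1])
        assert prefix == quotient * divisor + remainder and 0 <= remainder < divisor
    return quotient, remainder

print(long_division(1234, 7))  # (176, 2), since 7 * 176 + 2 == 1234
```

Running the loop corresponds to the first kind of knowledge; seeing why the assertion can never fail corresponds to the second.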

The prismatic writer Jorge Luis Borges could have been reflecting on all this when he wrote in the essay ‘History of the Echoes of a Name’ (1955):

Isolated in time and space, a god, a dream, and a man who is insane and aware of the fact repeat an obscure statement. Those words, and their two echoes, are the subject of these pages.

Let’s say that the god is the Universe, the dream our desire to understand, and the insane man the machines, all repeating their obscure statements. Taken together, their words and echoes are the system of our scientific enquiry. It is the challenge of the 21st century to integrate the sciences of complexity with machine learning and artificial intelligence. The most successful forms of future knowledge will be those that harmonise the human dream of understanding with the increasingly obscure echoes of the machines.